GeNN

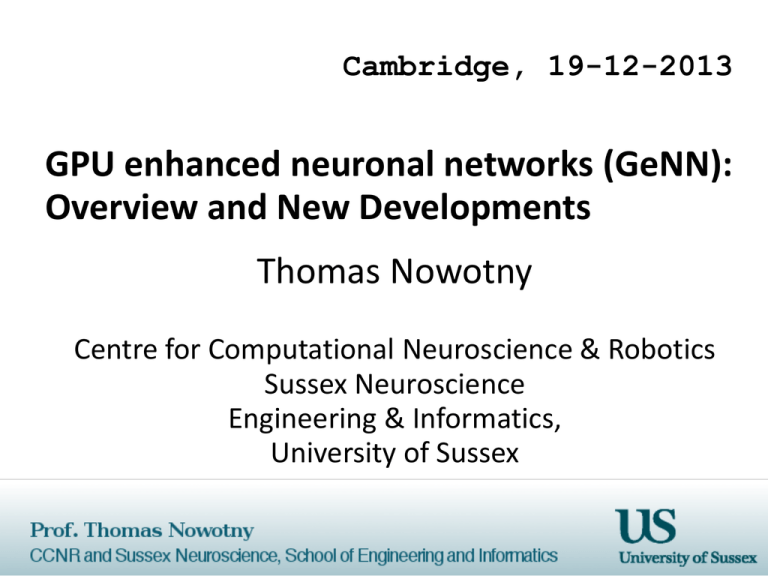

Cambridge, 19-12-2013

GPU enhanced neuronal networks (GeNN):

Overview and New Developments

Thomas Nowotny

Centre for Computational Neuroscience & Robotics

Sussex Neuroscience

Engineering & Informatics,

University of Sussex

Part I

GENN OVERVIEW

Introduction

Fuelled by the games industry, graphics adaptors have become very powerful and now use massively parallel graphics processing units (GPUs).

They offer a new platform for high-performance computing (HPC).

NVIDIA® Tesla C2050 (image from the NVIDIA product website)

From: Herb Sutter, “The Free Lunch Is Over: A Fundamental Turn Toward Concurrency in Software”

NVIDIA® CUDA™

CUDA™ = “Compute Unified Device Architecture”

It was introduced by NVIDIA® to allow mainstream developers to use massively parallel graphics chips for general-purpose GPU computing (GPGPU) without the restrictions of shaders.

The first CUDA SDK was released in February 2007 (according to Wikipedia).

CUDA™ is supported on all newer NVIDIA cards.

The CUDA™ API

Each thread executes what is called a “kernel”, defined by a C-like function.

CUDA is a Single-Instruction-Multiple-Data (SIMD) style environment (NVIDIA's own term is SIMT, Single-Instruction, Multiple-Thread).

Communication between threads is through shared memory spaces.
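The thread/kernel idea can be illustrated with a small serial emulation. This is only a sketch in Python, not CUDA code: the helper `launch`, the kernel `decay_kernel`, and the block size of 4 are hypothetical names and values chosen for illustration. It shows how each thread derives a global index from its block and thread indices, and why a bounds check is needed when the number of items is not a multiple of the block size.

```python
import math


def launch(kernel, n_items, block_size, *args):
    """Emulate a 1D kernel launch serially: one kernel call per (block, thread)."""
    n_blocks = math.ceil(n_items / block_size)  # enough blocks to cover all items
    for block_idx in range(n_blocks):
        for thread_idx in range(block_size):
            kernel(block_idx, block_size, thread_idx, n_items, *args)
    return n_blocks


def decay_kernel(block_idx, block_dim, thread_idx, n, v, dt):
    """Per-thread work: one forward-Euler step of dV/dt = -V for one neuron."""
    i = block_idx * block_dim + thread_idx  # global thread index
    if i < n:  # threads in the padded tail of the last block do nothing
        v[i] += dt * (-v[i])


v = [1.0] * 10
n_blocks = launch(decay_kernel, len(v), 4, v, 0.1)
```

On a real GPU the two loops in `launch` do not exist: all threads of a block run concurrently, and blocks are scheduled independently of each other.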

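[Figure: mapping of the model onto CUDA grids. Grid 1: neuron blocks (Block 1 to Block 4) covering the populations n1 and n2, each thread evaluating dV/dt = -V ...; Grid 2: synapse blocks covering nsyn, each thread evaluating Isyn = ...]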

Issues to consider

Correct parallelization

Code structure

Synchronization

Atomics (no synchronization between blocks!)

Block size, number & alignment

(unrolling) loops

Memory access patterns

Use of different memories

Memory bank access conflicts

Coalesced memory access

Host to/from GPU data transfers

CUDA memory architecture

[Figure: CUDA memory architecture. Graphics adaptor (kernel / GPU): GPU registers, shared memory, texture memory, constant memory, device memory, “local memory”. Host computer (program / CPU): cache/registers, random access memory (RAM).]

GPU Neural Network Simulators

Nageswaran et al. (UC Irvine, 2009): General simulator for Izhikevich neurons with delay, optimized for Izhikevich's thalamocortical model (C++ library)

Fidjeland et al. (Imperial, 2010), Nemo: General simulator for Izhikevich neurons with delay, optimized for Izhikevich's thalamocortical model (C++ library)

Goodman & Brette (Ecole Normale Sup., 2009): GPU extensions to the Brian simulator (one-timestep grids with partial CPU involvement)

Mutch et al. (MIT, 2010), CNS simulator: Simulator for layered “cortical networks”; models can be defined by the user (used exclusively through a MATLAB interface)

GeNN: Taking code generation seriously

The GPU enhanced Neuronal Network simulator

Provides a simple C++ API for specifying a neuronal network of interest

Generates optimised C++ and CUDA code for the model and for the detected hardware at compile time (e.g. grid/block organisation, HW capability, model parameters)

GeNN can offer a large variety of different models; only the ones actually used enter the generated code

Users can define their own model equations

The generated code is compiled with the native NVIDIA compiler nvcc (and all its optimisations).

GeNN flowchart

Example: Insect olfaction model

Performance

[Figure: GPU vs. CPU performance. AL-MB: 50 % all-to-all, individual conductances. Dotted lines: spikes communicated to host; solid lines: spike # communicated to host.]

Examples of code-gen benefits

Synaptic connectivities:

GeNN

model.addSynapsePopulation("PNKC", NSYNAPSE, DENSE, INDIVIDUALG, "PN", "KC", myPNKC_p);

model.addSynapsePopulation("PNLHI", NSYNAPSE, ALLTOALL, INDIVIDUALG, "PN", "LHI", myPNLHI_p);

model.addSynapsePopulation("LHIKC", NGRADSYNAPSE, SPARSE, INDIVIDUALG, "LHI", "KC", myLHIKC_p);

Each connectivity type can generate entirely different code:

[Figure: DENSE: connectivity matrix of size npre-neuron × npost-neuron. SPARSE: sparse matrix representation with arrays gsyn and Post ID of length nsynapse, indexed per pre-synaptic neuron (npre-neuron).]
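Why the two connectivity types lead to different code can be sketched as follows. This is a Python illustration under assumptions, not GeNN's generated CUDA: the function names and the compressed-row layout (`row_start`, `post_id`, `gsyn`) are hypothetical stand-ins for the arrays in the figure. The dense version visits every pre/post pair, while the sparse version visits only the stored synapses of each spiking pre-synaptic neuron.

```python
def dense_input(spikes, g, n_post):
    """Dense: g[pre][post] stores one conductance per pair, zeros included."""
    isyn = [0.0] * n_post
    for pre in spikes:
        for post in range(n_post):
            isyn[post] += g[pre][post]
    return isyn


def to_sparse(g):
    """Convert a dense matrix to compressed rows: (row_start, post_id, gsyn)."""
    row_start, post_id, gsyn = [0], [], []
    for row in g:
        for post, w in enumerate(row):
            if w != 0.0:
                post_id.append(post)
                gsyn.append(w)
        row_start.append(len(post_id))  # end of this pre-neuron's synapses
    return row_start, post_id, gsyn


def sparse_input(spikes, row_start, post_id, gsyn, n_post):
    """Sparse: loop only over the synapses that actually exist."""
    isyn = [0.0] * n_post
    for pre in spikes:
        for k in range(row_start[pre], row_start[pre + 1]):
            isyn[post_id[k]] += gsyn[k]
    return isyn


g = [[0.0, 0.5, 0.0],
     [0.25, 0.0, 0.0]]
spikes = [0, 1]  # indices of pre-synaptic neurons that spiked this step
dense = dense_input(spikes, g, 3)
row_start, post_id, gsyn = to_sparse(g)
sparse = sparse_input(spikes, row_start, post_id, gsyn, 3)
```

Both functions produce the same synaptic input; they differ in memory footprint and in the loop structure that a code generator would have to emit.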

Examples of code-gen benefits

New neuron models:

GeNN

int newModel= nModels.size();

n.varNames.push_back(tS("V"));

n.varTypes.push_back(tS("double"));

n.pNames.push_back(tS("tau"));

n.simCode= tS("$(V) = $(V) + DT/ $(tau)* (- 40.0*$(V))");

nModels.push_back(n);

GeNN

model.addNeuronPopulation("gp", 1000, newModel, p, ini);
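One way to picture what a code generator does with the `$(...)` placeholders in `simCode` is plain text substitution. The sketch below is an assumption for illustration only: the function `substitute`, the `V_gp[n]` naming scheme, and the choice to inline parameters as literals are hypothetical and are not taken from the GeNN sources.

```python
import re


def substitute(sim_code, var_names, param_values, pop_name, index="n"):
    """Replace $(name) placeholders with array accesses or literal values."""
    def repl(match):
        name = match.group(1)
        if name in var_names:  # state variable: one array element per neuron
            return f"{name}_{pop_name}[{index}]"
        if name in param_values:  # parameter: inlined as a constant
            return repr(float(param_values[name]))
        raise KeyError(f"unknown placeholder $({name})")
    return re.sub(r"\$\((\w+)\)", repl, sim_code)


sim_code = "$(V) = $(V) + DT / $(tau) * (-40.0 * $(V))"
generated = substitute(sim_code, {"V"}, {"tau": 10.0}, "gp")
print(generated)
```

Inlining parameters as constants is one reason generated code can be faster than a generic simulator loop: the compiler sees literal values instead of memory loads.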

Part II

NEW DEVELOPMENTS

SpineML

Courtesy of Dr. Alex Cope

SpineML is an XML-based description format for networks of point neurons.

It is a proposed extension of the NineML format, including the description of neural dynamics, network structure, and experimental procedures.

SpineML acts as an exchange format between creation tools and simulators, as well as between collaborators working on a shared model.

For more details see:

Richmond P, Cope A, Gurney K, Allerton DJ. "From Model Specification to Simulation of Biologically Constrained Networks of Spiking Neurons". Neuroinformatics. 2013

SpineCreator

SpineCreator is one tool that can be used to author SpineML models.

A graphical user interface allows models to be specified without any programming.

Some features:

Graphing

3D visualisation

Extensible simulator support

Version control support

Available at https://github.com/SpineML/SpineCreator

Courtesy of Dr. Alex Cope

SpineML and GeNN

Courtesy of Dr. Alex Cope

SpineML can use code generation for simulator support, so it is very easy to add support for GeNN.

XSLT translation scripts transform the model into GeNN user files, and the standard GeNN toolchain compiles and runs the simulation.

New neuron types (and soon synapses and weight update rules) are supported through XSLT translation to GeNN system files.

BRIAN2 interface

BRIAN is a popular simulator for neuronal networks (http://briansimulator.org/)

BRIAN2 is in active development and will be entirely code-generation based

Users will be able to choose target devices, including Python (as before), C/C++, Android and GPU/GeNN

Proof of concept

BRIAN2

from brian2 import *

from brian2.devices.genn import *

set_device('genn')

##### Define the model

tau = 10*msecond

eqs = '''dV/dt = (-40*mV-V)/tau : volt # (unless-refractory)'''

threshold = 'V>-50*mV'

reset = 'V=-60*mV'

refractory = 5*msecond

N = 1000

##### Generate genn code

G = NeuronGroup(N, eqs, reset=reset, threshold=threshold, name='gp')

M = SpikeMonitor(G)

G2 = NeuronGroup(1, eqs, reset=reset, threshold=threshold, name='gp2')

# Run the network for 0 seconds to generate the code

net = Network(G, M, G2)

net.run(0*second)

genn_device.build(net)

GeNN

// step 1: add variables
n.varNames.clear();
n.varTypes.clear();
n.varNames.push_back(tS("V"));
n.varTypes.push_back(tS("double"));
n.varNames.push_back(tS("lastspike"));
n.varTypes.push_back(tS("double"));
n.varNames.push_back(tS("not_refractory"));
n.varTypes.push_back(tS("bool"));

// step 2: add parameters
n.pNames.clear();
n.pNames.push_back(tS("tau"));
n.pNames.push_back(tS("mV"));

// step 3: add simcode (exact update of dV/dt = (-40*mV - V)/tau over one step DT)
n.simCode= tS(" $(not_refractory) = ($(not_refractory)) || (!(false));\n\
const double _V = $(V) * exp(-(DT) / $(tau)) - 40.0 * $(mV) + 40.0 * $(mV) * exp(-(DT) / $(tau));\n\
$(V) = _V;\n\
");

// step 4: add thresholder code
n.thresholdCode= tS(" const double _cond = $(V) > -50 * $(mV);\n\
");

// step 5: add resetter code
n.resetCode= tS(" \n\
$(V) = -60 * $(mV);\n\
");

nModels.push_back(n);
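The update written into `simCode` is the exact solution of dV/dt = (-40*mV - V)/tau over one time step, which needs a decaying exponential exp(-DT/tau) in both terms. The Python sketch below checks this numerically against a finely sub-stepped forward-Euler integration. The function names, the number of sub-steps, and the unit convention (mV = 1.0, times in ms) are assumptions made for illustration.

```python
import math


def exact_step(v, dt, tau, mV=1.0):
    """Closed-form update: V decays towards -40 mV with time constant tau."""
    decay = math.exp(-dt / tau)
    return v * decay - 40.0 * mV + 40.0 * mV * decay


def euler_reference(v, dt, tau, mV=1.0, substeps=10000):
    """Reference: many small forward-Euler steps of dV/dt = (-40*mV - V)/tau."""
    h = dt / substeps
    for _ in range(substeps):
        v += h * (-40.0 * mV - v) / tau
    return v


v0, dt, tau = -60.0, 0.1, 10.0  # mV, ms, ms (illustrative values)
stepped = exact_step(v0, dt, tau)
reference = euler_reference(v0, dt, tau)
print(stepped, reference)
```

A quick sanity check is the fixed point: starting at V = -40 mV, the update must return -40 mV, which only holds when both exponentials carry the same sign.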

Summary

GeNN is a code-generation based simulator targeting GPUs with NVIDIA CUDA.

The native C/C++ interface is flexible and convenient for expert users.

A SpineCreator – SpineML – GeNN pipeline has been prototyped and will be used in the Green Brain project.

A BRIAN2 – GeNN interface is in development and will hopefully be released with the first release of BRIAN2.

Acknowledgments

Ramon Huerta, Alex Cope, Esin Yavuz, James Turner

NVIDIA (professor partnership: 2x Quadro FX 5800 cards) & hardware donation for Green Brain

Funders:

http://www.sourceforge.net/projects/genn