Invoked Computing


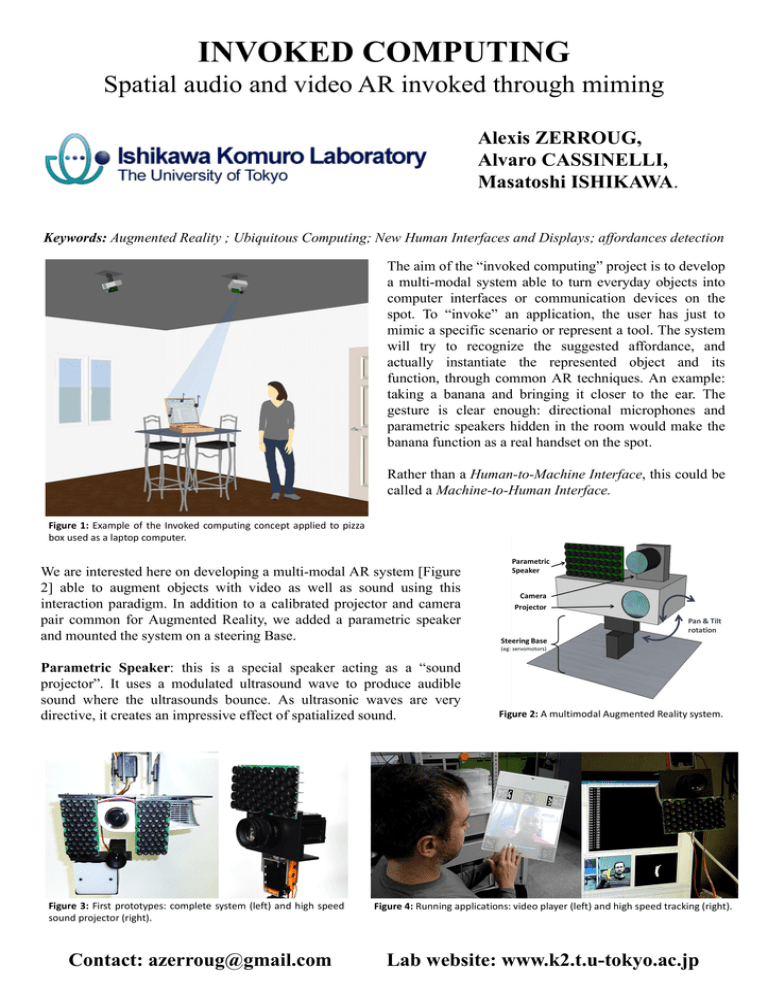

INVOKED COMPUTING: Spatial audio and video AR invoked through miming

Alexis ZERROUG, Alvaro CASSINELLI, Masatoshi ISHIKAWA

Keywords: Augmented Reality; Ubiquitous Computing; New Human Interfaces and Displays; Affordance Detection

The aim of the "invoked computing" project is to develop a multimodal system able to turn everyday objects into computer interfaces or communication devices on the spot. To "invoke" an application, the user only has to mime a specific scenario or represent a tool. The system then tries to recognize the suggested affordance and to instantiate the represented object and its function through common AR techniques. For example, a user picks up a banana and brings it close to the ear. The gesture is clear enough: directional microphones and parametric speakers hidden in the room would make the banana function as a real handset on the spot. Rather than a Human-to-Machine Interface, this could be called a Machine-to-Human Interface.

Figure 1: Example of the invoked computing concept applied to a pizza box used as a laptop computer.

Here we are interested in developing a multimodal AR system (Figure 2) able to augment objects with video as well as sound using this interaction paradigm. In addition to the calibrated projector-camera pair common in Augmented Reality, we added a parametric speaker and mounted the whole system on a steering base.

Parametric Speaker: this is a special speaker that acts as a "sound projector". It emits an ultrasound wave modulated by the audio signal; nonlinear propagation in air demodulates it into audible sound, which appears to come from the spot where the ultrasound beam bounces. Because ultrasonic waves are highly directional, the result is a striking spatialized sound effect.

Figure 2: A multimodal Augmented Reality system.

Figure 3: First prototypes: complete system (left) and high-speed sound projector (right).

Figure 4: Running applications: video player (left) and high-speed tracking (right).

Contact: azerroug@gmail.com

Lab website: www.k2.t.u-tokyo.ac.jp
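The poster does not say how its parametric speaker encodes the audio, so the following is only a minimal sketch of the general principle, not the authors' implementation. It assumes double-sideband amplitude modulation of a 40 kHz carrier (a common ultrasonic transducer frequency) and uses Berktay's far-field approximation, in which the audible pressure is proportional to the second time derivative of the squared envelope, p(t) ∝ d²/dt² E(t)². It also compares plain AM with square-root AM, a preprocessing step from the parametric-array literature that cancels the second-harmonic distortion introduced by squaring. The helper names (`envelope`, `modulate`, `berktay_audible`, `tone_amplitude`, `second_harmonic_ratio`), the 192 kHz sample rate and the modulation index m = 0.8 are hypothetical choices made for illustration.

```python
import math

FS = 192_000   # sample rate in Hz (assumed; comfortably above 2 x carrier)
FC = 40_000    # ultrasonic carrier in Hz (assumed; a common transducer frequency)


def tone(freq, n, fs=FS):
    """Test signal: n samples of a unit-amplitude sine at `freq` Hz."""
    return [math.sin(2 * math.pi * freq * i / fs) for i in range(n)]


def envelope(audio, m=0.8, sqrt_am=False):
    """Envelope E(t) for audio samples in [-1, 1], modulation index m <= 1.

    Plain AM uses E = 1 + m*a. Square-root AM uses E = sqrt(1 + m*a), so that
    E**2, the quantity Berktay's model demodulates, is linear in the audio.
    """
    if sqrt_am:
        return [math.sqrt(1 + m * a) for a in audio]
    return [1 + m * a for a in audio]


def modulate(env, fc=FC, fs=FS):
    """Ultrasonic drive signal: the envelope multiplied by the carrier."""
    return [e * math.sin(2 * math.pi * fc * i / fs) for i, e in enumerate(env)]


def berktay_audible(env, fs=FS):
    """Far-field audible pressure, up to a constant: d^2/dt^2 of E(t)^2.

    Uses a circular second difference, which assumes the buffer holds a
    whole number of periods of the audio signal.
    """
    sq = [e * e for e in env]
    n = len(sq)
    return [(sq[(i + 1) % n] - 2 * sq[i] + sq[i - 1]) * fs * fs for i in range(n)]


def tone_amplitude(x, freq, fs=FS):
    """Amplitude of the `freq` component of x (single-bin DFT)."""
    re = sum(v * math.cos(2 * math.pi * freq * i / fs) for i, v in enumerate(x))
    im = sum(v * math.sin(2 * math.pi * freq * i / fs) for i, v in enumerate(x))
    return 2 * math.hypot(re, im) / len(x)


def second_harmonic_ratio(audio, freq, sqrt_am):
    """Second-harmonic / fundamental ratio of the demodulated audible signal."""
    p = berktay_audible(envelope(audio, sqrt_am=sqrt_am))
    return tone_amplitude(p, 2 * freq) / tone_amplitude(p, freq)


if __name__ == "__main__":
    a = tone(1000, 1920)  # 10 ms at 192 kHz = exactly 10 periods of 1 kHz
    print("plain AM, 2nd/1st harmonic:", round(second_harmonic_ratio(a, 1000, False), 3))
    print("sqrt AM,  2nd/1st harmonic:", round(second_harmonic_ratio(a, 1000, True), 3))
```

For a 1 kHz test tone, plain AM should give a second-to-first harmonic ratio close to m (about 0.8 with these assumed values), because squaring 1 + m·a adds an m²·a² term; square-root AM should give a ratio near zero. A real sound projector must also deal with transducer bandwidth, air absorption and beam geometry, none of which this sketch models.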