slides - Yisong Yue

advertisement

Machine Learning & Data Mining

CS/CNS/EE 155

Lecture 14:

Embeddings

Lecture 14: Embeddings

1

Announcements

• Kaggle Miniproject is closed

– Report due Thursday

• Public Leaderboard

– How well you think you did

• Private Leaderboard now viewable

– How well you actually did

Lecture 14: Embeddings

2

Lecture 14: Embeddings

3

Last Week

• Dimensionality Reduction

• Clustering

• Latent Factor Models

– Learn low-dimensional representation of data

Lecture 14: Embeddings

4

This Lecture

• Embeddings

– Alternative form of dimensionality reduction

• Locally Linear Embeddings

• Markov Embeddings

Lecture 14: Embeddings

5

Embedding

• Learn a representation U

– Each column u corresponds to data point

• Semantics encoded via d(u,u’)

– Distance between points

http://www.sciencemag.org/content/290/5500/2323.full.pdf

Lecture 14: Embeddings

6

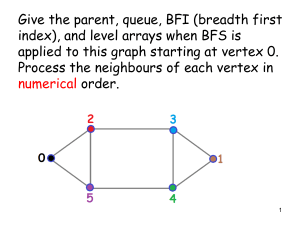

Locally Linear Embedding

• Given:

S = { xi }i=1

N

Unsupervised Learning

• Learn U such that local linearity is preserved

– Lower dimensional than x

– “Manifold Learning”

x’s

u’s

Any neighborhood

looks like a linear plane

https://www.cs.nyu.edu/~roweis/lle/

Lecture 14: Embeddings

7

Locally Linear Embedding

S = { xi }i=1

N

• Create B(i)

– B nearest neighbors of xi

– Assumption: B(i) is approximately linear

– xi can be written as a convex combination of xj in B(i)

xi »

åWx

ij

j

B(i)

jÎB(i)

åW

ij

=1

jÎB(i)

xi

https://www.cs.nyu.edu/~roweis/lle/

Lecture 14: Embeddings

8

Locally Linear Embedding

Given Neighbors B(i), solve local linear approximation W:

2

argmin å xi W

i

åWx

ij

j

jÎB(i)

W

2

xi -

åWx

ij

j

åW

= argmin åWi,*T C iWi,*

ij

=1

jÎB(i)

i

2

=

jÎB(i)

å W (x - x )

ij

i

j

jÎB(i)

T

æ

ö

= çç å Wij (xi - x j )÷÷

è jÎB(i)

ø

=

å å WW C

ij

ik

æ

ö

çç å Wij (xi - x j )÷÷

è jÎB(i)

ø

i

jk

jÎB(i) kÎB(i)

= Wi,*T C iWi,*

C ijk = (xi - x j )T (xi - xk )

https://www.cs.nyu.edu/~roweis/lle/

Lecture 14: Embeddings

9

Locally Linear Embedding

Given Neighbors B(i), solve local linear approximation W:

2

argmin å xi W

i

åWx

ij

j

jÎB(i)

= argmin åWi,*T C iWi,*

W

i

åW

ij

=1

jÎB(i)

C ijk = (xi - x j )T (xi - x j )

• Every xi is approximated as

a convex combination of

neighbors

– How to solve?

Lecture 14: Embeddings

10

Lagrange Multipliers

argmin L(w) º w Cw

T

w

s.t. w = 1

Ñwj

ì

-1

if

ïï

wí

+1

if

ï

ïî [ -1, +1] if

wj < 0

wj > 0

wj = 0

Solutions tend to

be at corners!

$l ³ 0 : (¶w L(y, w) Î lÑw w ) Ù ( w =1)

http://en.wikipedia.org/wiki/Lagrange_multiplier

11

Solving Locally Linear Approximation

Lagrangian:

å (C )

i -1

Wij µ

å

kÎB(i)

i

C

( )

-1

jk

Wij =

kÎB(i)

å å (C )

i -1

lÎB(i) mÎB(i)

Lecture 14: Embeddings

jk

lm

12

Locally Linear Approximation

• Invariant to:

– Rotation

xi »

Axi »

å

5xi »

ij

j

jÎB(i)

åW

AWij x j

ij

jÎB(i)

– Scaling

åWx

=1

jÎB(i)

å 5W x

ij

j

jÎB(i)

– Translation

xi + x ' »

å W (x

ij

jÎB(i)

Lecture 14: Embeddings

j

+ x ')

13

Story So Far: Locally Linear Embeddings

Given Neighbors B(i), solve local linear approximation W:

2

argmin å xi W

i

åWx

ij

jÎB(i)

j

= argmin åWi,*T C iWi,*

W

i

Solution via Lagrange Multipliers:

å (C )

åW

ij

=1

jÎB(i)

C ijk = (xi - x j )T (xi - xk )

i -1

Wij =

kÎB(i)

jk

å å (C )

i -1

lÎB(i) mÎB(i)

lm

• Locally Linear Approximation

https://www.cs.nyu.edu/~roweis/lle/

Lecture 14: Embeddings

14

Recall: Locally Linear Embedding

• Given:

S = { xi }i=1

N

• Learn U such that local linearity is preserved

– Lower dimensional than x

– “Manifold Learning”

x’s

u’s

https://www.cs.nyu.edu/~roweis/lle/

Lecture 14: Embeddings

15

Dimensionality Reduction

Given local approximation W, learn lower dimensional representation:

2

argmin å ui U

i

åWu

ij

j

jÎB(i)

• Find low dimensional U

– Preserves approximate local linearity

x’s

u’s

Neighborhood

represented by Wi,*

https://www.cs.nyu.edu/~roweis/lle/

Lecture 14: Embeddings

16

Given local approximation W, learn lower dimensional representation:

2

argmin å ui U

åWu

ij

i

UU = I K

T

j

jÎB(i)

• Rewrite as:

argmin å M ij ( uiT u j ) º trace (UMU T )

U

ij

M ij = 1[i= j ] - Wij - Wji + åWkiWkj

k

M = (I N - W )T (I N - W )

Symmetric positive semidefinite

https://www.cs.nyu.edu/~roweis/lle/

Lecture 14: Embeddings

17

Given local approximation W, learn lower dimensional representation:

argmin å M ij ( uiT u j ) º trace (UMU T )

U

ij

UU = I K

T

• Suppose K=1

argmin å M ij ( uiT u j ) º trace ( uMuT )

u

ij

= argmax trace ( uM u

+ T

u

)

uu =1

T

pseudoinverse

• By min-max theorem

– u = principal eigenvector of M+

http://en.wikipedia.org/wiki/Min-max_theorem

Lecture 14: Embeddings

18

Recap: Principal Component Analysis

M = VLV

T

• Each column of V is an Eigenvector

• Each λ is an Eigenvalue (λ1 ≥ λ2 ≥ …)

é l

ê 1

l2

L = êê

ê

êë

é 1/ l

1

ê

+

+ T

1 / l2

L + = êê

ê

êë

é 1

ù

ê

ú

1

+

+ T

T

ú

MM = VLL V = V1:2V1:2 = ê

ê

ú

0

ê

0 úû

ë

M = VL V

Lecture 14: Embeddings

ù

ú

ú

ú

0

ú

0 úû

ù

ú

ú

ú

0

ú

0 úû

19

Given local approximation W, learn lower dimensional representation:

argmin å M ij ( u u j ) º trace (UMU

U

T

i

ij

T

)

UU = I K

T

• K=1:

– u = principal eigenvector of M+

– u = smallest non-trivial eigenvector of M

• Corresponds to smallest non-zero eigenvalue

• General K

– U = top K principal eigenvectors of M+

– U = bottom K non-trivial eigenvectors of M

• Corresponds to bottom K non-zero eigenvalues

https://www.cs.nyu.edu/~roweis/lle/

http://en.wikipedia.org/wiki/Min-max_theorem

Lecture 14: Embeddings

20

Recap: Locally Linear Embedding

• Generate nearest neighbors of each xi, B(i)

• Compute Local Linear Approximation:

2

argmin å xi W

i

åWx

ij

åW

ij

j

=1

jÎB(i)

jÎB(i)

• Compute low dimensional embedding

2

argmin å ui U

Lecture 14: Embeddings

i

åWu

ij

UU T = I K

j

jÎB(i)

21

Results for Different Neighborhoods

True Distribution

B=6

2000 Samples

B=9

B=3

B=12

https://www.cs.nyu.edu/~roweis/lle/gallery.html

Lecture 14: Embeddings

22

Embeddings vs Latent Factor Models

• Both define low-dimensional representation

• Embeddings preserve distance:

ui - u j

2

» xi - x j

2

• Latent Factor preserve inner product:

uiT u j » xiT x j

• Relationship:

2

2

ui - u j = ui + u j - 2uiT u j

Lecture 14: Embeddings

2

23

Visualization Semantics

Latent Factor Model

Similarity measured via dot product

Rotational semantics

Can interpret axes

Can only visualize 2 axes at a time

Embedding

Similarity measured via distance

Clustering/locality semantics

Cannot interpret axes

Can visualize many clusters simultaneously

Lecture 14: Embeddings

24

Latent Markov Embeddings

Lecture 14: Embeddings

25

Latent Markov Embeddings

• Locally Linear Embedding is conventional

unsupervised learning

– Given raw features xi

– I.e., find low-dimensional U that preserves approximate

local linearity

• Latent Markov Embedding is a feature

learning problem

– E.g., learn low-dimensional U that captures user-generated

feedback

Lecture 14: Embeddings

26

Playlist Embedding

• Users generate song playlists

– Treat as training data

• Can we learn a probabilistic model of

playlists?

Lecture 14: Embeddings

27

Probabilistic Markov Modeling

• Training set:

S = {s1,...s|S| }

Songs

D = { pi }i=1

N

Playlists

pi = p ,..., p

1

i

Ni

i

Playlist Definition

• Goal: Learn a probabilistic Markov model of playlists:

j

i

j-1

i

P(p | p )

• What is the form of P?

http://www.cs.cornell.edu/People/tj/publications/chen_etal_12a.pdf

Lecture 14: Embeddings

28

First Try: Probability Tables

P(s|s’

)

s1

s2

s3

s4

s5

s6

s7

sstart

s1

0.01

0.03

0.01

0.11

0.04

0.04

0.01

0.05

s2

0.03

0.01

0.04

0.03

0.02

0.01

0.02

0.02

s3

0.01

0.01

0.01

0.07

0.02

0.02

0.05

0.09

s4

0.02

0.11

0.07

0.01

0.07

0.04

0.01

0.01

s5

0.04

0.01

0.02

0.17

0.01

0.01

0.10

0.02

s6

0.01

0.02

0.03

0.01

0.01

0.01

2

0.01

0.08

s7

0.07

0.02

#Parameters

=0.03

O(|S|

) !!!

0.01

0.01

0.09

0.03

…

0.01

…

Lecture 14: Embeddings

29

Second Try: Hidden Markov Models

Ni

Nj

j=1

j=1

P ( pi , z) = P(End | z Ni )Õ P(z j | z j-1 )Õ P(pij | z j )

P(z j | z j-1 )

• #Parameters = O(K2)

P(pij | z j )

• #Parameters = O(|S|K)

• Total = O(K2) + O(|S|K)

Lecture 14: Embeddings

30

Problem with Hidden Markov Models

Ni

Nj

j=1

j=1

P ( pi , z) = P(End | z Ni )Õ P(z j | z j-1 )Õ P(pij | z j )

• Need to reliably estimate P(s|z)

S = {s1,...s|S| }

D = { pi }i=1

N

pi = pi1,..., piNi

• Lots of “missing values” in this training set

Lecture 14: Embeddings

31

Latent Markov Embedding

us: entry point of song s

vs: exit point of song s

{

P(s | s')µ exp - us - vs'

{

P(s | s') =

åexp{- u

exp - us - vs'

s"

s"

2

- vs'

2

}

2

}

}

• “Log-Radial” function

– (my own terminology)

http://www.cs.cornell.edu/People/tj/publications/chen_etal_12a.pdf

Lecture 14: Embeddings

32

Log-Radial Functions

{

{

exp - us - vs'

P(s | s')

=

P(s" | s') exp - us" - vs'

2

}

}

2

us

us”

vs’

2K parameters per song

2|S|K parameters total

Each ring defines an equivalence class of transition probabilities

Lecture 14: Embeddings

33

Learning Problem

S = {s1,...s|S| }

D = { pi }i=1

N

Songs

pi = pi1,..., piNi

Playlists

Playlist Definition

• Learning Goal:

argmax Õ P(pi ) = Õ Õ P(pij | pij-1 )

U,V

i

i

{

P(s | s') =

åexp{- u

exp - us - vs'

s"

s"

2

- vs'

j

}

2

}

=

{

exp - us - vs'

2

}

Z(s')

http://www.cs.cornell.edu/People/tj/publications/chen_etal_12a.pdf

Lecture 14: Embeddings

34

Minimize Neg Log Likelihood

argmax Õ Õ P(pij | pij-1 ) = argmin åå-log P(pij | pij-1 )

U,V

i

j

U,V

i

j

• Solve using gradient descent

– Homework question: derive the gradient formula

– Random initialization

• Normalization constant hard to compute:

– Approximation heuristics

• See paper

P(s | s') =

{

exp - us - vs'

2

}

Z(s')

http://www.cs.cornell.edu/People/tj/publications/chen_etal_12a.pdf

Lecture 14: Embeddings

35

Simpler Version

• Dual point model:

• Single point model:

P(s | s') =

P(s | s') =

{

exp - us - vs'

2

}

Z(s')

{

exp - us - us'

– Transitions are symmetric

2

}

Z(s')

• (almost)

– Exact same form of training problem

Lecture 14: Embeddings

36

Visualization in 2D

Simpler version:

Single Point Model

P(s | s') =

{

exp - us - us'

2

}

Z(s')

Single point model is

easier to visualize

http://www.cs.cornell.edu/People/tj/publications/chen_etal_12a.pdf

Lecture 14: Embeddings

37

Sampling New Playlists

• Given partial playlist:

p = p ,...p

1

j

• Generate next song for playlist pj+1

– Sample according to:

P(s | p ) =

j

{

exp - us - v p j

Z(p j )

Dual Point Model

2

}

P(s | p ) =

j

{

exp - us - up j

2

}

Z(p j )

Single Point Model

http://www.cs.cornell.edu/People/tj/publications/chen_etal_12a.pdf

Lecture 14: Embeddings

38

Demo

http://jimi.ithaca.edu/~dturnbull/research/lme/lmeDemo.html

Lecture 14: Embeddings

39

What About New Songs?

• Suppose we’ve trained U:

P(s | s') =

{

exp - us - us'

2

}

Z(s')

• What if we add a new song s’?

– No playlists created by users yet…

– Only options: us’ = 0 or us’ = random

• Both are terrible!

Lecture 14: Embeddings

40

Song & Tag Embedding

• Songs are usually added with tags

– E.g., indie rock, country

– Treat as features or attributes of songs

• How to leverage tags to generate a reasonable

embedding of new songs?

– Learn an embedding of tags as well!

http://www.cs.cornell.edu/People/tj/publications/moore_etal_12a.pdf

Lecture 14: Embeddings

41

S = {s1,...s|S| }

Songs

T = {T1,...T|S| }

Tags for Each Song

D = { pi }i=1

N

pi = pi1,..., piNi

Playlists

Playlist Definition

Learning Objective:

argmax P(D |U)P(U | A,T )

U,A

Same term as before: P(D |U) = Õ P(pi |U) = Õ Õ P(pij | pij-1,U)

i

i

j

Song embedding ≈ average of tag embeddings:

ì

ï

1

P(U | A,T ) = Õ P(us | A,TS ) µ Õ exp í-l us Ts

ïî

s

s

Solve using gradient descent:

ü

ï

å At ý

ïþ

tÎTs

2

http://www.cs.cornell.edu/People/tj/publications/moore_etal_12a.pdf

Lecture 14: Embeddings

42

Visualization in 2D

http://www.cs.cornell.edu/People/tj/publications/moore_etal_12a.pdf

Lecture 14: Embeddings

43

Revisited: What About New Songs?

• No user has yet s’ added to playlist

– So no evidence from playlist training data:

s’ does not appear in

D = { pi }i=1

N

• Assume new song has been tagged Ts’

– The us’ = average of At for tags t in Ts’

– Implication from objective:

argmax P(D |U)P(U | A,T )

U,A

Lecture 14: Embeddings

44

Recap: Embeddings

• Learn a low-dimensional representation of

items U

• Capture semantics using distance between

items u, u’

• Can be easier to visualize than latent factor

models

Lecture 14: Embeddings

45

Next Lecture

• Recent Applications of Latent Factor Models

• Low-rank Spatial Model for Basketball Play

Prediction

• Low-rank Tensor Model for Collaborative

Clustering

• Miniproject 1 report due Thursday.

Lecture 14: Embeddings

46