Word representations - Boston Data Festival

advertisement

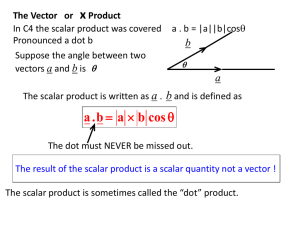

Vector space word representations Rani Nelken, PhD Director of Research, Outbrain @RaniNelken https://www.flickr.com/photos/hyku/295930906/in/photolist-EbXgJ-ajDBs8-9hevWb-s9HX1-5hZqnb-a1Jk8H-a1Mcx7-7QiUWL-6AFs53-9TRtkzbqt2GQ-F574u-F56EA-3imqK7/ Words = atoms? That would be crazy for numbers https://www.flickr.com/photos/proimos/4199675334/ The distributional hypothesis What is a word? Wittgenstein (1953): The meaning of a word is its use in the language Firth (1957): You shall know a word by the company it keeps From atomic symbols to vectors • Map words to dense numerical vectors “representing” their contexts • Map words with similar contexts to vectors with small angle History • Hard Clustering: Brown clustering • Soft clustering: LSA, Random projections, LDA • Neural nets Feedforward Neural Net Language Model Training • Input is one-hot vectors of context (0…0,1,0…0) • We’re trying to learn a vector for each word (“projection”) • Such that the output is close to the one-hot vector of w(t) Simpler model: Word2Vec What can we do with these representations? • Plug them into your existing classifier • Plug them into further neural nets – better! • Improves accuracy on many NLP tasks – – – – Named entity recognition POS tagging sentiment analysis semantic role labeling Back to cheese… • cos(crumbled, cheese) = 0.042 • cos(crumpled, cheese) = 0.203 And now for the magic http://en.wikipedia.org/wiki/Penn_%26_Teller#mediaviewer/File:Penn_and_Teller_(1988).jp “Magical” property • [Paris] - [France] + [Italy] ≈ [Rome] • [king] - [man] + [woman] ≈ [queen] • We can use it to solve word analogy problems Boston: Red_Sox= New_York: ? Demo Why does it work? [king] - [man] + [woman] ≈ [queen] cos (x, ([king] – [man] + [woman])) = cos (x, [king]) – cos(x, [man]) + cos(x, [woman]) [queen] is a good candidate It doesn’t always work • London : England = Baghdad : ? • We expect Iraq, but get Mosul • We’re looking for a word that is close to Baghdad, and to England, but not to London Why did it fail? • London : England = Baghdad : ? • cos(Mosul, Baghdad) >> cos(Iraq, London) • Instead of adding the cosines, multiply them • Improves accuracy Word2Vec • Open source C implementation from Google • Comes with pre-learned embeddings • Gensim: fast python implementation Active field of research • Bilingual embeddings • Joint word and image embeddings • Embeddings for sentiment • Phrase and document embeddings Bigger picture: how can we make NLP less fragile? • 90’s: Linguistic engineering • 00’s: Feature engineering • 10’s: Unsupervised preprocessing References • https://code.google.com/p/word2vec/ • http://www.cs.bgu.ac.il/~yoavg/publications/c onll2014analogies.pdf • http://radimrehurek.com/2014/02/word2vectutorial/ Thanks @RaniNelken We’re hiring for NLP positions