ppt - Kavraki Lab

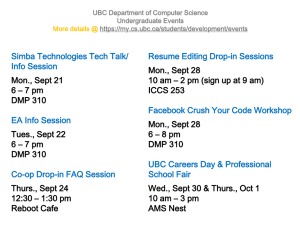

advertisement

SARSOP Successive Approximations of the Reachable Space under Optimal Policies Devin Grady 4 April 2013 Outline 1. Review – POMDP – Point-based Value Iteration – Heuristic Search [point-based] Value Iteration 2. SARSOP – Add a new heuristic and pruning method to HSVI – Results (Thanks Ryan) REVIEW POMDP • Solving a POMDP is very similar to an MDP • The similarities: – State transitions are still stochastic – Value function is a function of our current “state” – We still perform Bellman backups to compute V • The differences: – We have a probability distribution of where we are – We can make (stochastic) observations of our current belief Let’s solve a POMDP! measurements state x1 action u3 state x2 measurements 0 .2 0 .8 z1 0 .7 z2 0 .3 u3 x1 u3 0 .3 z1 0 .7 z2 x2 0 .8 0 .2 u1 u2 100 100 payoff u1 u2 100 50 payoff actions u1, u2 Make a decision… NOW 𝑉1 𝑏 = max 𝑟(𝑏, 𝑢) 𝑢 3 𝑢1 if 𝑝1 ≤ 7 𝜋1 𝑏 = 3 𝑢2 if 𝑝1 > 7 HEY! You said POMDP… • Geez. OK. We don’t know that we observed z1. We must compute expected value. 𝑉1 𝑏 = 𝐸𝑧 𝑉1 𝑏 𝑧 = 𝑝 𝑧𝑖 𝑉1 (𝑏|𝑧𝑖 𝑖 −70𝑝1 + 30 = max 70𝑝1 − 15 −30𝑝1 + 70 + max 30𝑝1 − 35 1 − 𝑝1 1 − 𝑝1 1 − 𝑝1 1 − 𝑝1 HEY! You said POMDP… • Geez. OK. We don’t know that we observed z1. We must compute expected value. Belief Update Lather, Rinse, Repeat • We just did a full backup for T=1! • Repeat for T=2. The POMDP Hex • POMDPs have both the curse of dimensionality and the curse of history. • Scholars maintain that history is the truly unmanageable part. O (|V| x |A| x |Ω| x |S|2 + |A| x |S| x |V||Ω| ) Belief Update (taking an action) Value Backups (sensor measurements) Point-based Value Iteration • Maintains a fixed set of belief points, B • The value function is computed only over B – B only contains reachable beliefs • The number of constraints (|V|) is fixed at |B| – Value updates are now PTIME • PBVI provides an anytime solution that converges to optimal value function • The error in the value function is bounded What does it Mean? • Start with a small number of reachable beliefs • Point-based backup instead of Bellman backup – Implicitly prunes value simplex • Increase # beliefs until timeout O (|V| x |A| x |Ω| x |S|2 + |B| x |A| x |S| x |Ω|) HSVI Value Function • When search ceases, perform full Bellman backups in reverse order • Just insert the constraint vector for the l.b. • Update u.b. based on expected value • Both bounds are uniformly improvable Properties of HSVI • Upper and lower bounds monotonically converge to optimal value function • Local updates preserve improvability • Maximum regret is ε • Finite search depth • Finite depth → finite Bellman updates (New stuff here!) SARSOP Like HSVI… • Except, only look at reachable OPTIMAL belief points instead of all reachable points Algorithm Outline 1. Sample 2. Backup 3. Prune Algorithm Outline 1. Sample 𝑏 ′ 𝑠 ′ = 𝜏 𝑏, 𝑎, 𝑜 = 𝜂Ω 𝑠 ′ , 𝑎, 𝑜 𝑇 𝑠, 𝑎, 𝑠 ′ 𝑏(𝑠) 𝑠 – Select a,o as in HSVI (upper bound, max gap) • Lower bound increases propagate quickly • Upper bound decreases do not – OR: Predict optimal value at b, if the lower bound improves at the root, then explore around b • Cluster beliefs by initial upper bound and entropy Algorithm Outline 1. Sample 𝑏 ′ 𝑠 ′ = 𝜏 𝑏, 𝑎, 𝑜 = 𝜂Ω 𝑠 ′ , 𝑎, 𝑜 𝑇 𝑠, 𝑎, 𝑠 ′ 𝑏(𝑠) 𝑠 – Select a,o as in HSVI (upper bound, max gap) • Lower bound increases propagate quickly • Upper bound decreases do not – OR: Predict optimal value at b, if the lower bound improves at the root, then explore around b • Cluster beliefs by initial upper bound and entropy “Selective Deep Sampling” Algorithm Outline 1. Sample 2. Backup – See HSVI, standard Bellman backup 3. Prune Algorithm Outline 1. Sample 2. Backup 3. Prune – Any b that will never be explored currently is pruned from the belief tree, not in 𝐵 ∗ • If it was incorrect to prune and was in 𝐵∗ it will be added again later – Smaller size of 𝐵 decreases computational cost of each iteration, this is speed-critical! Rock-Sample • Deterministic motions • Noisy sensor for Rock-goodness • +10 for sampling good • -10 for sampling bad • +10 for exiting • No other cost/reward Rock-Sample • Deterministic motions • Noisy sensor for Rock-goodness • +10 for sampling good • -10 for sampling bad • +10 for exiting • No other cost/reward New method… is worse? • The robot position in RockSample is fully observed • The rock goodness values are also completely independent • HSVI search for value function cuts that mask others is particularly suited to this HomeCare • Smaller 𝑆, larger 𝑂 • Follow target in case they need help • Reward if helped in time – Time is uncertain • Movement has small cost SARSOP Overall • It’s a heuristic method – There will always be bad corner cases – It works well overall most of the time • It has become very popular in the past year for POMDP literature – Because it performs well and is fairly straightforward if you understand HSVI? References Kurniawati, Hanna, David Hsu, and Wee Sun Lee. "SARSOP: Efficient pointbased POMDP planning by approximating optimally reachable belief spaces."Proc. Robotics: Science and Systems. Vol. 62. 2008. SARSOP available for download at http://bigbird.comp.nus.edu.sg/pmwiki/farm/appl/ G. Shani, J. Pineau and R. Kaplow. A Survey of Point-based POMDP Solvers. Autonomous Agents and Multi-agent Systems. 2012. T. Smith and R. Simmons. Heuristic Search Value Iteration for POMDPs. Uncertainty in Artificial Intelligence. 2004. J. Pineau, G. Gordon and S. Thrum. Point-based Value Iteration: An anytime algorithm for POMDPs. Int’l Joint Conferences in Artificial Intelligence. 2003. S. Thrun, W. Burgard. and D. Fox. Probabilistic Robotics. MIT Press. 2006.