Dialogue as a Decision Making Process

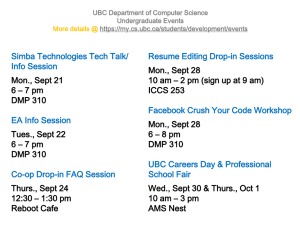


Challenges of Autonomy in the Real World

Wide range of sensors

Noisy sensors

World dynamics

Adaptability

Incomplete information

Nicholas Roy

Robustness under uncertainty

Minerva

Pearl

Predicted Health Care Needs

By 2008, need 450,000 additional nurses:

Monitoring and walking assistance

30% of adults 65 years and older have fallen this year

Cost of preventable falls: $32 Billion US/year (Alexander 2001)

Intelligent reminding

Cost of medication non-compliance: $1 Billion US/year (Dunbar-Jacobs 2000)

Spoken Dialogue Management

We want...

Natural dialogue...

With untrained (and untrainable) users...

In an uncontrolled environment...

Across many unrelated domains

Cost of errors...

Medication is not taken, or taken incorrectly

Robot behaves inappropriately

User becomes frustrated, robot is ignored, and becomes useless

How to generate such a policy?


Perception and Control

[Figure: block diagram with the labels Probabilistic Perception, Control, World state]

Probabilistic Methods for Dialogue Management

Markov Decision Processes model action uncertainty (Levin et al., 1998; Goddeau & Pineau, 2000)

Many techniques for learning optimal policies, especially reinforcement learning

Markov Decision Processes

A Markov Decision Process is given formally by the following:

a set of states $S = \{s_1, s_2, \ldots, s_n\}$

a set of actions $A = \{a_1, a_2, \ldots, a_m\}$

a set of transition probabilities $T(s_i, a, s_j) = p(s_j \mid a, s_i)$

a set of rewards $R : S \times A \to \mathbb{R}$

a discount factor $\gamma \in [0, 1]$

an initial state $s_0 \in S$

Bellman's equation (Bellman, 1957) computes the expected reward for each state recursively, and determines the policy that maximises the expected, discounted reward.

(Singh et al., 1999; Litman et al., 2000; Walker, 2000)
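The recursion that Bellman's equation describes can be written in the notation of the formal definition. What follows is a sketch assuming the standard textbook form; $V$ (the value of a state) and $\pi^*$ (the greedy policy) are symbols introduced here for illustration and do not appear on the slides.

```latex
V(s_i) = \max_{a \in A} \Big[ R(s_i, a) + \gamma \sum_{j=1}^{n} T(s_i, a, s_j)\, V(s_j) \Big]

\pi^*(s_i) = \arg\max_{a \in A} \Big[ R(s_i, a) + \gamma \sum_{j=1}^{n} T(s_i, a, s_j)\, V(s_j) \Big]
```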

The MDP in Dialogue Management

State: Represents desire of user

e.g. want_tv, want_meds

Assume utterances from speech recogniser give state

e.g. "I want to take my pills now."

Markov Decision Processes

Model the world as different states the system can be in

e.g. current state of completion of a form

Each action moves to some new state with probability p(i; j)

Observation from user determines posterior state

Actions are: robot motion, speech acts

Reward: maximised for satisfying user task


Markov Decision Processes


Optimal policy maximizes expected future (discounted) reward

Policy found using value iteration
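Value iteration can be sketched in a few lines of plain Python. This is a minimal illustration under assumed conventions, not the implementation used in the talk: the array layout `T[a][i][j]`, the reward layout `R[i][a]`, the stopping tolerance and the two-state toy problem are all hypothetical.

```python
def value_iteration(T, R, gamma=0.9, tol=1e-9, max_iter=10_000):
    """Return (V, policy) for an MDP.

    T[a][i][j] = p(s_j | a, s_i); R[i][a] = reward for action a in state s_i.
    Both layouts are assumptions made for this sketch.
    """
    n_states, n_actions = len(R), len(R[0])
    V = [0.0] * n_states

    def q_value(i, a, values):
        # One-step lookahead: immediate reward plus discounted expected value.
        return R[i][a] + gamma * sum(T[a][i][j] * values[j] for j in range(n_states))

    for _ in range(max_iter):
        V_new = [max(q_value(i, a, V) for a in range(n_actions))
                 for i in range(n_states)]
        delta = max(abs(x - y) for x, y in zip(V_new, V))
        V = V_new
        if delta < tol:  # values have stopped changing
            break

    # Greedy policy with respect to the converged values.
    policy = [max(range(n_actions), key=lambda a: q_value(i, a, V))
              for i in range(n_states)]
    return V, policy


# Hypothetical toy problem: state 1 pays reward 1, state 0 pays nothing.
T = [
    [[1.0, 0.0], [0.0, 1.0]],  # action 0: stay in the current state
    [[0.0, 1.0], [1.0, 0.0]],  # action 1: switch to the other state
]
R = [[0.0, 0.0], [1.0, 1.0]]
V, policy = value_iteration(T, R, gamma=0.9)
print(V, policy)
```

In the toy problem the greedy policy switches out of state 0 and stays in state 1, since only state 1 is rewarded.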

Since we can compute a policy that maximises the expected reward...

then if we have ...

a reasonable reward function

a reasonable transition model

Do we get behaviour that satisfies the user?

Fully Observable State Representation


Advantage: No state identification/tracking problems

Disadvantage: What if the observation is noisy or false?

Perception and Control

[Figure: block diagram with the labels Probabilistic Perception Model, P(x), argmax P(x), Robust Control, World state]

Talk Outline

Robots in the real world

Partially Observable Markov Decision Processes

Solving large POMDPs

Deployed POMDPs


Control Models

[Figure: two pipelines from the World state through a Probabilistic Perception Model to Control. Markov Decision Processes: control on argmax P(x), labelled "Brittle". Partially Observable Markov Decision Processes: control on P(x).]

POMDPs (Sondik, 1971)

[Figure: POMDP graphical model with the labels Observable, Hidden, Actions (a1), Beliefs (b1, b2), Observations (Z1, Z2), States (S1, S2), Rewards (R1), transition model T(sj | ai, si), observation model O(zj | si)]

Navigation as a POMDP

State is hidden from the controller

Controller chooses actions based on probability distributions

[Figure labels: State Space; Action, observation]

The POMDP in Dialogue Management

State: Represents desire of user

e.g. want_tv, want_meds

This state is unobservable to the dialogue system

Observations: Utterances from speech recogniser

e.g. "I want to take my pills now."

The system must infer the user's state from the possibly noisy or ambiguous observations

Where do the emission probabilities come from?

At planning time, from a prior model

At run time, from the speech recognition engine

Actions are still robot motion, speech acts

Reward: maximised for satisfying user task

The POMDP in Dialogue Management

[Figure sequence: the belief over the states want_ABC, want_NBC, want_CBS and request_done, redrawn as the dialogue proceeds; speech bubbles "He wants the schedule for NBC!" and "Dateline is on NBC right now."]

Probability still distributed among multiple states

Probability mass still distributed among multiple states, but mostly centered on the true state now

Probability mass shifts to a new state as a result of the action.
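The way probability mass moves between states after each action and utterance can be sketched as a Bayes filter over the hidden user state, using the transition model T(sj | ai, si) and the observation model O(zj | si). The code below is a minimal illustration only: the dictionary layout, the action name `ask_which_station`, the keyword observations and all probabilities are hypothetical, not values from the Nursebot system.

```python
def belief_update(belief, action, observation, T, O):
    """Return the posterior belief after taking `action` and hearing `observation`.

    belief[s]        = current probability of hidden state s
    T[action][s][s2] = p(s2 | action, s)   (assumed layout)
    O[s2][z]         = p(z | s2)           (assumed layout)
    """
    states = list(belief)
    # Prediction step: push the belief through the transition model.
    predicted = {s2: sum(T[action][s][s2] * belief[s] for s in states)
                 for s2 in states}
    # Correction step: weight each state by how well it explains the observation.
    unnormalised = {s2: O[s2].get(observation, 0.0) * predicted[s2]
                    for s2 in states}
    total = sum(unnormalised.values())
    if total == 0.0:
        raise ValueError("observation has zero probability under the model")
    return {s2: p / total for s2, p in unnormalised.items()}


states = ["want_ABC", "want_NBC", "want_CBS"]
# Hypothetical: asking a question does not change what the user wants.
T = {"ask_which_station": {s: {s2: 1.0 if s == s2 else 0.0 for s2 in states}
                           for s in states}}
# Hypothetical noisy recogniser: the right keyword is heard 80% of the time.
O = {
    "want_ABC": {"abc": 0.8, "nbc": 0.1, "cbs": 0.1},
    "want_NBC": {"abc": 0.1, "nbc": 0.8, "cbs": 0.1},
    "want_CBS": {"abc": 0.1, "nbc": 0.1, "cbs": 0.8},
}
prior = {s: 1.0 / 3.0 for s in states}
posterior = belief_update(prior, "ask_which_station", "nbc", T, O)
print(posterior)
```

After one "nbc" observation most of the mass sits on want_NBC, but the other states keep some probability, which matches the pictures on these slides.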

POMDP Advantages

Models information gathering

Computes trade-off between:

Getting reward

Being uncertain

MDP makes decisions based on uncertain foreknowledge

POMDP makes decisions based on uncertain knowledge

A Simple POMDP

State is hidden

"Am I here, or over there?"

[Figure: belief p(s) over the states s1, s2, s3]

POMDP Policies

[Figure: belief space, with the current belief p(s) mapped to the optimal action]

[Figure: value function over belief space, with the current belief and the optimal action marked]


Scaling POMDPs

This simple 20 state maze problem takes 24 hours for 7 steps of value iteration.

1 hour, Zhang & Zhang 2001

Littman et al. 1997

Hauskrecht 2000

[Figure: maze with the goal marked]

The Real World

Maps with 20,000 states

600 state dialogues

Structure in POMDPs

Factored models (Boutilier & Poole, 1996; Guestrin, Koller & Parr, 2001)

Information Bottleneck models (Poupart & Boutilier, 2002)

Hierarchical POMDPs (Pineau & Thrun, 2000; Mahadevan & Theocharous, 2002)

Many others

Belief Space Structure

The controller may be globally uncertain... but not usually.

Belief Compression

If uncertainty has few degrees of freedom, belief space should have few dimensions

Each mode has few degrees of freedom

[Figure: belief P(x)]

Control Models

Previous models

[Figure: Probabilistic Perception Model passing P(x) to Control, labelled "Intractable"; Probabilistic Perception Model passing argmax P(x) to Control, labelled "Brittle"; both act on the World state]

Compressed POMDPs

[Figure: block diagram with the labels Probabilistic Perception Model, P(x), Low-dimensional P(x), Control, World state]


The Augmented MDP

Represent beliefs using

$\tilde{b} = \langle \arg\max_{s} b(s);\; H(b) \rangle$

$H(b) = -\sum_{i=1}^{N} p(s_i) \log_2 p(s_i)$

Discretise into 2-dimensional belief space MDP

Model Parameters

Reward function

Back-project to high dimensional belief

Compute expected reward from belief:

$\tilde{R}(\tilde{b}) = E_b(R(s)) = \sum_{S} p(s) R(s)$

[Figure: belief p(s) over the states s1, s2, s3]

Model Parameters

Use forward model

[Figure: low-dimensional beliefs $\tilde{b}_i$, $\tilde{b}_j$, $\tilde{b}_k$ and full-dimensional beliefs $p_i(s)$, $p_j(s)$, linked by action a and observation z1; labels: Low dimension, Full dimension, Deterministic process, Stochastic process]

Augmented MDP

1. Discretize state-entropy space

2. Compute reward function and transition function

3. Solve belief state MDP
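The two-number belief summary of the Augmented MDP (most likely state plus entropy) and the expected reward of a belief can be computed as sketched below. This is an illustration under assumptions: the function names, the example belief and the reward values for a hypothetical say_nbc action are made up, and only the formulas come from the slides.

```python
import math


def entropy_bits(belief):
    """H(b) = -sum_i p(s_i) log2 p(s_i), skipping zero-probability states."""
    return -sum(p * math.log2(p) for p in belief.values() if p > 0.0)


def augmented_state(belief):
    """Compress a belief to the pair (most likely state, entropy)."""
    most_likely = max(belief, key=belief.get)
    return most_likely, entropy_bits(belief)


def expected_reward(belief, reward):
    """E_b[R(s)] = sum_s p(s) R(s) for one fixed action."""
    return sum(p * reward[s] for s, p in belief.items())


# Hypothetical belief over what the user wants.
belief = {"want_ABC": 0.25, "want_NBC": 0.5, "want_CBS": 0.25}
# Hypothetical reward for the action say_nbc in each true state.
reward_say_nbc = {"want_ABC": -100.0, "want_NBC": 100.0, "want_CBS": -100.0}

summary = augmented_state(belief)
print(summary)
print(expected_reward(belief, reward_say_nbc))
```

With this belief the expected reward of committing to NBC is zero, which illustrates why a planner that sees the entropy may prefer to ask a clarifying question first.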

Nursebot Domain

MDP Graph

Medication scheduling

Time and place tracking

Appointment scheduling

Simple outside knowledge

e.g. weather

Simple entertainment

e.g. TV schedules

Sphinx speech recognition, Festival speech synthesis


An Example Dialogue

Observation                     | True State         | Belief Entropy | Action              | Reward
hello                           | request begun      | 0.406          | say hello           | 100
what is like                    | start meds         | 2.735          | ask repeat          | -100
what time is it for will the    | want time          | 0.490          | say time            | 100
was on abc                      | want tv            | 1.176          | ask which station   | -1
was on abc                      | want abc           | 0.886          | say abc             | 100
what is on nbc                  | want nbc           | 1.375          | confirm channel nbc | -1
yes                             | want nbc           | 0.062          | say nbc             | 100
go to the that pretty good what | send robot         | 0.864          | ask robot where     | -1
that that hello be              | send robot bedroom | 1.839          | confirm robot place | -1
the bedroom any i               | send robot bedroom | 0.194          | go to bedroom       | 100
go it eight a hello             | send robot         | 1.110          | ask robot where     | -1
the kitchen hello               | send robot kitchen | 1.184          | go to kitchen       | 100

Accumulation of Reward – Simulated 7 State Domain

[Figure: accumulated reward over time. Larger Slope == Better Performance]
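The reward column of the example dialogue can be summed turn by turn to see how reward accumulates, which is the kind of quantity the accumulation plots track. A minimal sketch; the variable names are illustrative and the numbers are the twelve rewards listed for the example dialogue.

```python
from itertools import accumulate

# Rewards from the example dialogue, in turn order.
rewards = [100, -100, 100, -1, 100, -1, 100, -1, -1, 100, -1, 100]

# Running total after each turn.
cumulative = list(accumulate(rewards))
average_per_turn = cumulative[-1] / len(rewards)

print(cumulative)
print(average_per_turn)
```

The single large penalty comes from the second turn, and the small -1 costs are the clarification and confirmation questions.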

Accumulation of Reward – Simulated 17 State Domain

POMDP Dialogue Manager Performance


Finding Dimensionality

E-PCA will indicate appropriate number of bases, depending on beliefs encountered

Example Reduction

Planning

[Figure: Original POMDP (states S1, S2, S3), E-PCA, Low-dimensional belief space, Discretize, Discrete belief space MDP]

Model Parameters

Reward function

Back-project to high dimensional belief

Compute expected reward from belief:

$\tilde{R}(\tilde{b}) = E_b(R(s)) = \sum_{S} p(s) R(s)$

[Figure: belief p(s) over the states s1, s2, s3]

Model Parameters

Transition function

$T(\tilde{b}_i, a, \tilde{b}_j) = ?$


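Model Parameters

Use forward model

[Figure: transitions between the low-dimensional beliefs $\tilde{b}_i$, $\tilde{b}_j$, $\tilde{b}_k$, $\tilde{b}_q$ under action a and observations z1, z2, computed through the full-dimensional beliefs $p_i(s)$ and $p_j(s)$; labels: Low dimension, Full dimension, Deterministic process, Stochastic process]

$T(\tilde{b}_i, a, \tilde{b}_j)$ [relation symbol lost in extraction] $p(z \mid s)\, b_i(s \mid a)$ if $b_j(s) = b_i(s \mid a, z)$

$= 0$ otherwise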
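One way to read "use forward model" is: for each possible observation, run the belief forward through the action and observation models, find which discrete belief the result falls on, and credit that belief with the probability of the observation. The sketch below illustrates that reading under several assumptions: beliefs are stored directly in full dimension (the compression and back-projection steps are skipped), successor beliefs are matched to the grid by nearest neighbour, and the function names, array layouts and toy numbers are hypothetical.

```python
def predict(belief, a, T):
    """b(s' | a) = sum_s T[a][s][s'] * b(s); T layout is an assumption."""
    n = len(belief)
    return [sum(T[a][s][s2] * belief[s] for s in range(n)) for s2 in range(n)]


def belief_transitions(i, a, grid, T, O):
    """Return {j: probability} of moving from grid belief i to grid belief j.

    grid[k] is a full belief vector; O[s][z] = p(z | s) (assumed layout).
    """
    n_states = len(grid[i])
    n_obs = len(O[0])
    predicted = predict(grid[i], a, T)
    row = {}
    for z in range(n_obs):
        # Probability of hearing z after action a from belief i.
        p_z = sum(O[s][z] * predicted[s] for s in range(n_states))
        if p_z == 0.0:
            continue
        posterior = [O[s][z] * predicted[s] / p_z for s in range(n_states)]
        # Nearest grid belief by squared Euclidean distance (an assumption).
        j = min(range(len(grid)),
                key=lambda k: sum((x - y) ** 2 for x, y in zip(grid[k], posterior)))
        row[j] = row.get(j, 0.0) + p_z
    return row


# Hypothetical two-state problem with one action that leaves the state alone.
T = [[[1.0, 0.0], [0.0, 1.0]]]
O = [[0.8, 0.2], [0.2, 0.8]]
grid = [[0.5, 0.5], [0.8, 0.2], [0.2, 0.8]]
row = belief_transitions(0, 0, grid, T, O)
print(row)
```

From the uniform belief, each of the two observations is equally likely and leads to a different, more confident grid belief, so the transition row splits evenly between them.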

E-PCA POMDPs

1. Collect sample beliefs

2. Find low-dimensional belief representation

3. Discretize

4. Compute reward function and transition function

5. Solve belief state MDP

Robot Navigation Example

[Figure: map showing the initial distribution, the true robot position, and the goal position (goal state)]

People Finding as a POMDP

Factored state space

2 dimensions: fully-observable robot position

6 dimensions: distribution over person positions

Regular grid gives $\approx 10^{16}$ states
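A number of this size follows from simple counting. As an illustration only, assume a resolution of 100 cells per dimension (the slides do not state the grid resolution); the eight dimensions of the factored state space then give:

```latex
\underbrace{100^{2}}_{\text{robot position}} \times \underbrace{100^{6}}_{\text{person distribution}} = 100^{8} = 10^{16}
```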


Variable Resolution Discretization

Variable Resolution Dynamic Programming (1991)

Parti-game (Moore, 1993)

Variable Resolution Discretization (Munos & Moore, 2000)

POMDP Grid-based Approximations (Hauskrecht, 2001)

Improved POMDP Grid-based Approximations (Zhou & Hansen, 2001)

Variable Resolution

Parti-Game: Variable Resolution; Instance-based; Nearest-neighbour state representation; Deterministic

Utile Distinction Trees: Instance-based; Suffix tree representation; Stochastic; Reward statistics splitting criterion

Combine the two approaches: "Stochastic Parti-Game"

Refining the Grid

Non-regular grid using samples

Sample beliefs according to policy

Construct new model

Keep new belief if $V(\tilde{b}'_1) > V(\tilde{b}_1)$

Compute model parameters using nearest-neighbour

[Figure: sampled beliefs $\tilde{b}_1, \ldots, \tilde{b}_5$ with values $V(\tilde{b}_1)$ and $V(\tilde{b}'_1)$ and transitions $T(\tilde{b}_1, a_1, \tilde{b}_2)$ and $T(\tilde{b}_1, a_2, \tilde{b}_5)$]

The Optimal Policy

[Figure labels: Original distribution; Reconstruction using EPCA and 6 bases; Robot position; True person position]

Policy Comparison

[Figure: bar chart of average time to find person (axis: Time, 0 to 250) for the policies True MDP, Closest, Densest, Maximum Likelihood, E-PCA, Var. Res. E-PCA]

E-PCA: 72 states

Var. Res. E-PCA: 260 states


Summary

POMDPs for robotic control improve system performance

POMDPs can scale to real problems

Belief spaces are structured

Compress to low-dimensional statistics

Find controller for low-dimensional space

Open Problems

Better integration and modelling of people

Better spatial and temporal models

Integrating learning into control models

Integrating control into learning models
