Review of the literature on mapping techniques

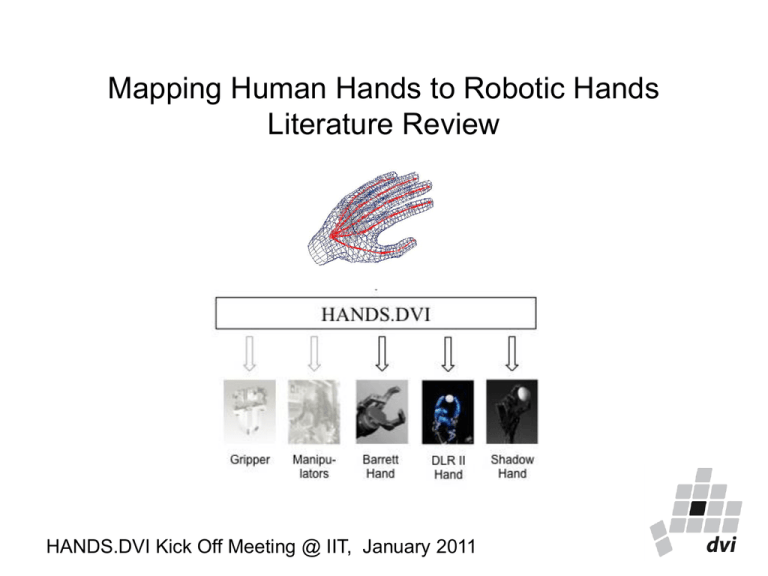

Mapping Human Hands to Robotic Hands: Literature Review

HANDS.DVI Kick Off Meeting @ IIT, January 2011

Outline

Different mapping approaches:
- Fingertip mapping
- Joint-to-joint mapping
- Object-based mapping

Fingertip Mapping

Liu, J., Zhang, Y. “Mapping human hand motion to dexterous robotic hand” (2008)

The motion of the human hand (the master) is detected by a CyberGlove in order to control a four-fingered robotic hand (the slave). A fingertip mapping method is developed based on Virtual Fingers in Cartesian space.

Fingertip Mapping – Virtual Finger approach (1/2)

Arbib, Iberall (1985): “A virtual finger is a group of real fingers acting as a single functional unit.”

This concept is used to formally characterize different fingers in an abstract way. The thumb is functionally different from the other four fingers, so the human thumb corresponds to its own robot finger. The human ring and little fingers, considered as a single virtual finger (VF), are mapped onto a single robot finger.

Fingertip Mapping – Virtual Finger approach (2/2)

The mapping is done as follows:

$p_{45} = k_4 p_4 + k_5 p_5$

where $p_4$ is the ring fingertip position, $p_5$ is the little fingertip position, $k_4$ and $k_5$ are the interconnection weights between the ring and little fingers, and $p_{45}$ is the virtual fingertip position.

As suggested in H.Y. Hu, X. H. Gao, J. W. Li, J. Wang, and H. Liu, “Calibrating Human Hand for Teleoperating the HIT/DLR Hand” (2004), if no inverse kinematic solution exists, an approximate pose for the robotic hand is computed.

Joint-to-Joint Mapping

Goldfeder C, Ciocarlie MT, Allen PK. “Dimensionality reduction for hand-independent dexterous robotic grasping” (2007)

To extend the synergistic framework to a number of different robotic hands, this work adopts a joint-to-joint mapping based directly on similarities between human and non-human hands.

Examples:
- MCP joint → proximal joint
- IP joint → distal joint
- Abduction → spread angle (Barrett hand)

Object-based Mapping

Griffin WB, Findley RP, Turner ML, Cutkosky MR.
“Calibration and Mapping of a Human Hand for Dexterous Telemanipulation” (2000)

This method assumes that a sphere (a virtual object) is held between the human thumb and index finger. The size, position, and orientation of the virtual object are scaled independently to create a transformed virtual object in the robotic hand workspace. This modified virtual object is then used to compute the robotic fingertip positions.

Workspace Matching (1/3)

The object size parameter is varied nonlinearly:
- Comfortable manipulation region: the gain on the object size is proportional to the size difference between the hands.
- Edges of the workspace: the gain increases.

The modified virtual object size is computed as:

Workspace Matching (2/3)

The virtual object midpoint is computed as the midpoint between the human thumb and index fingertips. The midpoint is then projected onto the x-y plane, and the orientation of the object is based on the angle of this projection. The calculated object midpoint in the human hand frame is transformed to the robotic hand frame using a standard transform with unity gain, in order to allow rolling motions of the robotic fingers.

Workspace Matching (3/3)

The midpoint parameter is also varied nonlinearly. Around the comfortable pinch position, we wish to map motions to the preferred manipulation region of the robotic hand. When the user extends the fingers away from or towards the palm, the robot should approach its own workspace limits. The vertical position of the modified midpoint will be:

Object-based Extended Mapping

The method presented above is useful for mapping the motion of the human thumb and index finger onto robotic hands (such as grippers). To extend it to movements that involve other fingers, such as synergistic motions, our idea is to use the Virtual Finger concept to bring us back to the case of two-finger motions.
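
The extension proposed above can be illustrated with a short Python sketch. It is only a sketch under stated assumptions, not the method of any of the cited papers: several real fingertips are merged into one virtual fingertip by a weighted average, a virtual sphere is placed between the thumb and that virtual fingertip, and the sphere is rescaled to give two robot fingertip positions. The function names, the normalization of the weights, the constant `size_gain` (standing in for the nonlinear gain, whose formula is not given here), and the identity transform between the human and robot hand frames are all assumptions made for illustration.

```python
import math


def virtual_fingertip(tips, weights):
    """Merge several real fingertip positions into one virtual fingertip.

    Assumption: the weights are normalized by their sum, so the result is
    a weighted average of the real fingertip positions.
    """
    total = sum(weights)
    return tuple(
        sum(w * tip[axis] for w, tip in zip(weights, tips)) / total
        for axis in range(3)
    )


def virtual_sphere(thumb_tip, finger_tip):
    """Return (midpoint, diameter) of the sphere held between two fingertips."""
    midpoint = tuple((a + b) / 2.0 for a, b in zip(thumb_tip, finger_tip))
    diameter = math.dist(thumb_tip, finger_tip)
    return midpoint, diameter


def map_to_robot(thumb_tip, finger_tips, weights, size_gain):
    """Map a multi-finger human pose to two robot fingertip positions.

    Assumptions: constant size gain, midpoint kept unchanged (unity gain),
    and human and robot hand frames taken to coincide.
    """
    vf_tip = virtual_fingertip(finger_tips, weights)
    midpoint, diameter = virtual_sphere(thumb_tip, vf_tip)
    if diameter == 0.0:
        # Degenerate case: both fingertips coincide with the midpoint.
        return midpoint, midpoint
    # Unit vector pointing from the thumb tip to the virtual fingertip.
    axis = tuple((f - t) / diameter for t, f in zip(thumb_tip, vf_tip))
    radius = size_gain * diameter / 2.0
    robot_thumb = tuple(m - radius * a for m, a in zip(midpoint, axis))
    robot_finger = tuple(m + radius * a for m, a in zip(midpoint, axis))
    return robot_thumb, robot_finger


# Hypothetical fingertip positions (arbitrary units) for a thumb and two fingers.
thumb = (-3.0, 0.0, 0.0)
fingers = [(2.0, 0.0, 0.0), (4.0, 0.0, 0.0)]
robot_thumb, robot_finger = map_to_robot(thumb, fingers, [0.5, 0.5], size_gain=0.5)
```

With equal weights the virtual fingertip lies halfway between the two real fingertips, and a gain below 1 shrinks the virtual sphere around its unchanged midpoint, as would be expected for a robot hand smaller than the human hand.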