Chapter 6.7


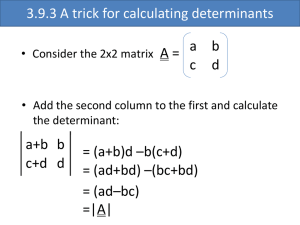

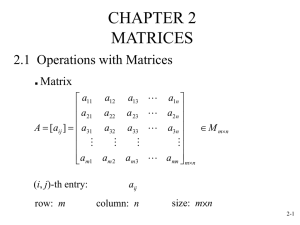

Determinants

• In this chapter all matrices are square, for example 1x1 (what is a 1x1 matrix, in fact?), 2x2, 3x3.
• Our goal is to introduce a new concept, the determinant, which is defined only for square matrices, but for square matrices of any size.
• The determinant is, first of all, just a number. Since we want a natural definition, we say that for a 1x1 matrix the determinant is EXACTLY its one entry:

$$\det(a_{11}) = |a_{11}| = a_{11}$$

• Examples: $\det(3) = |3| = 3$; $\det(-5) = |-5| = -5$.
• Notation: we either write the keyword “det” in front of the matrix OR replace the brackets (or parentheses, if applicable) with vertical bars.
• Careful: for 1x1 matrices DO NOT confuse the vertical-bar notation with absolute value. The context tells you whether the number is meant as a matrix (then the bars mean its determinant) or as a plain number (then they mean its absolute value).
• What about bigger matrices? The idea is to define the determinant of a bigger matrix in terms of the determinants of smaller (sub-)matrices.
• Take a 2x2 matrix. A smaller matrix is a 1x1 matrix, whose determinant we already know, so we can define the determinant of the 2x2 matrix:
  – Choose a row (usually the first one, as here):

$$\begin{pmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{pmatrix}$$

  – Take the first entry in that row and remove from the matrix its column and the chosen row (below, * marks the chosen entry and # the removed entries); what’s left is a matrix of order 2-1=1:

$$\begin{pmatrix} \ast & \# \\ \# & a_{22} \end{pmatrix} \;\longrightarrow\; (a_{22})$$

  – Take the determinant of this matrix (we know how to compute it!). We call this the minor; it is usually denoted by a capital letter carrying the indices of the chosen entry (in our case 11):

$$A_{11} = |a_{22}| = a_{22}$$

  – The last thing we do for index 11 is build the cofactor, which is the minor multiplied by (-1) to the power (sum of the indices), in our case 1+1=2. The usual notation for the cofactor is c with the original indices:

$$c_{11} = (-1)^{1+1} A_{11} = a_{22}$$

  – Now take the second entry and build its cofactor: remove the second column and the first row; what’s left is again a 1x1 matrix:

$$\begin{pmatrix} \# & \ast \\ a_{21} & \# \end{pmatrix} \;\longrightarrow\; (a_{21})$$

  – Compute the minor:

$$A_{12} = |a_{21}| = a_{21}$$

  – Get the cofactor:

$$c_{12} = (-1)^{1+2} A_{12} = -a_{21}$$

  – We have exhausted the row, so now we form the sum of entries times cofactors:

$$\begin{vmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{vmatrix} = a_{11} c_{11} + a_{12} c_{12} = a_{11} a_{22} - a_{12} a_{21}$$

• For the 2x2 matrix we get, in fact, an easy-to-remember formula: the product of the first diagonal (NW-SE) minus the product of the second diagonal (NE-SW).
• Example:

$$\begin{vmatrix} 1 & 5 \\ 3 & -2 \end{vmatrix} = 1\cdot(-2) - 5\cdot 3 = -2 - 15 = -17$$

• Things get a bit more complicated for 3x3 matrices, but we use the VERY SAME TECHNIQUE:
  – Take the matrix

$$\begin{pmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{pmatrix}$$

  – Choose a row. Again, we usually take the first row, so let’s go with that one.
  – Take the first entry and compute its cofactor: remove the first column and the first row, which leaves the following matrix:

$$\begin{pmatrix} \ast & \# & \# \\ \# & a_{22} & a_{23} \\ \# & a_{32} & a_{33} \end{pmatrix}$$

  – Compute the determinant of this smaller (2x2) matrix (we now know how!) to get the minor of index 11:

$$A_{11} = \begin{vmatrix} a_{22} & a_{23} \\ a_{32} & a_{33} \end{vmatrix} = a_{22} a_{33} - a_{23} a_{32}$$

  – Finally, the cofactor:

$$c_{11} = (-1)^{1+1} A_{11} = a_{22} a_{33} - a_{23} a_{32}$$

  – The next entry’s cofactor (less talk, just computation):

$$\begin{pmatrix} \# & \ast & \# \\ a_{21} & \# & a_{23} \\ a_{31} & \# & a_{33} \end{pmatrix}, \qquad A_{12} = \begin{vmatrix} a_{21} & a_{23} \\ a_{31} & a_{33} \end{vmatrix} = a_{21} a_{33} - a_{23} a_{31}$$

  – So the cofactor is:

$$c_{12} = (-1)^{1+2} A_{12} = -(a_{21} a_{33} - a_{23} a_{31})$$

  – And, for the last entry of this row, voila the cofactor:

$$c_{13} = (-1)^{1+3} A_{13} = (-1)^{4} \begin{vmatrix} a_{21} & a_{22} \\ a_{31} & a_{32} \end{vmatrix} = a_{21} a_{32} - a_{22} a_{31}$$

  – Hence the determinant of our 3x3 matrix is

$$\begin{vmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{vmatrix} = a_{11} c_{11} + a_{12} c_{12} + a_{13} c_{13} = a_{11}(a_{22} a_{33} - a_{23} a_{32}) - a_{12}(a_{21} a_{33} - a_{23} a_{31}) + a_{13}(a_{21} a_{32} - a_{22} a_{31})$$

• There actually is a way of remembering even this formula, reminiscent of the 2x2 determinant. Again we have a first diagonal (NW-SE), but also 2 “first half-diagonals”; the product of the entries on each of these gets added. We have a second diagonal (NE-SW) and 2 “second half-diagonals”; the product of the entries on each of these gets subtracted (for an alternate description, please read the bottom of page 280).
• Example:

$$\begin{vmatrix} 1 & 2 & 3 \\ 4 & 5 & 6 \\ 7 & 8 & 9 \end{vmatrix} = 1\cdot 5\cdot 9 + 2\cdot 6\cdot 7 + 4\cdot 8\cdot 3 - 3\cdot 5\cdot 7 - 2\cdot 4\cdot 9 - 6\cdot 8\cdot 1 = 45 + 84 + 96 - 105 - 72 - 48 = 0$$

• One interesting issue: as mentioned, you can choose any row you want (and, in fact, if you “look sideways”, you can do a very similar thing with columns!). But the process is pretty complex, right? So how can we be sure we get the same number every time? Well, be assured: it really works, regardless of the row (or column) chosen.
• Why would you choose a different row? For example, because that row has a lot of 0 (zero) entries. Since each entry gets multiplied by its cofactor, and 0 times anything is 0, we don’t need to compute the cofactors of the zero entries!
• Example:

$$\begin{vmatrix} 1 & 2 & 3 \\ 0 & 4 & 0 \\ 4 & 3 & 3 \end{vmatrix}$$

  – If you choose the first row, you need to compute all three cofactors; but if you choose the second row, you only need to compute the second entry’s cofactor!

$$\begin{pmatrix} 1 & \# & 3 \\ \# & \ast & \# \\ 4 & \# & 3 \end{pmatrix}, \qquad A_{22} = \begin{vmatrix} 1 & 3 \\ 4 & 3 \end{vmatrix} = 1\cdot 3 - 3\cdot 4 = 3 - 12 = -9$$

  – The determinant is hence (I’m writing out the other 2 cofactors, but, as you see, they get multiplied by 0, so we don’t care what values they have):

$$\begin{vmatrix} 1 & 2 & 3 \\ 0 & 4 & 0 \\ 4 & 3 & 3 \end{vmatrix} = 0\cdot c_{21} + 4\cdot c_{22} + 0\cdot c_{23} = 4\cdot(-1)^{2+2} A_{22} = 4\cdot(-9) = -36$$

• As an exercise, try to prove that choosing the second row in a 2x2 matrix gives the same number (you can work with an actual matrix, or try the general form, with a’s).
• Complicated as it was for 3x3 matrices, you can see how much more complicated it gets further on (4x4, 5x5 and so on). Still, the method works: the moment you know how to compute the determinant of a 3x3 matrix, you can compute that of a 4x4 matrix; the moment you know how to compute the determinant of a 4x4 matrix, you can compute that of a 5x5 matrix.
• But … computing a determinant is not always a hideously long and intricate task, because the way we compute this number leaves a few backdoors open.
• For instance:
  – 1. If every entry in a certain row (or column) of the matrix is 0, then the determinant is 0 (remember the example above? What if the 4 were also a 0?)
  – 2. If two rows (or columns) are identical, then the determinant is 0:

$$\begin{vmatrix} 1 & 2 & 3 \\ 1 & 2 & 3 \\ 2 & 1 & 5 \end{vmatrix} = 0$$

  – 3. If the matrix is upper or lower triangular (in particular, if it is diagonal), then the determinant equals the product of the entries on the main (first) diagonal:

$$\begin{vmatrix} 1 & 2 & 3 \\ 0 & 1 & 2 \\ 0 & 0 & 2 \end{vmatrix} = 1\cdot 1\cdot 2 = 2$$

  – 4. If one modifies the matrix by adding a multiple of one row to another row (same for columns), the determinant doesn’t change. Here you see the connection to elementary matrix operations! Below, row 1 is subtracted from row 2, and the result is expanded along the second row:

$$\begin{vmatrix} 1 & 3 & 5 \\ 4 & 3 & 5 \\ 3 & 2 & 1 \end{vmatrix} = \begin{vmatrix} 1 & 3 & 5 \\ 4-1 & 3-3 & 5-5 \\ 3 & 2 & 1 \end{vmatrix} = \begin{vmatrix} 1 & 3 & 5 \\ 3 & 0 & 0 \\ 3 & 2 & 1 \end{vmatrix} = 3\cdot(-1)^{2+1} \begin{vmatrix} 3 & 5 \\ 2 & 1 \end{vmatrix} = -3\,(3 - 10) = 21$$

  – 5. If one interchanges two rows (or columns), the determinant changes sign:

$$\begin{vmatrix} 1 & 2 \\ 3 & 4 \end{vmatrix} = -\begin{vmatrix} 3 & 4 \\ 1 & 2 \end{vmatrix}$$

  – 6. If one multiplies the entries of a row (or column) by a number, the determinant gets multiplied by that number:

$$\begin{vmatrix} 3\cdot 1 & 3\cdot 2 \\ 3 & 5 \end{vmatrix} = 3 \begin{vmatrix} 1 & 2 \\ 3 & 5 \end{vmatrix}$$

  – 7. If one multiplies the matrix by a scalar (which, if you remember, means multiplying all entries by that number, that is, every row; see 6.), then the determinant gets multiplied by that number once for each row (so for a 2x2 matrix, twice; for a 3x3 matrix, thrice; and so on):

$$\begin{vmatrix} 3\cdot 2 & 1\cdot 2 \\ 2\cdot 2 & 7\cdot 2 \end{vmatrix} = \begin{vmatrix} 6 & 2 \\ 4 & 14 \end{vmatrix} = 2^{2} \begin{vmatrix} 3 & 1 \\ 2 & 7 \end{vmatrix}$$

  – 8. If one multiplies two matrices, the determinant of the product is the product of the determinants:

$$|A \cdot B| = |A| \cdot |B|$$

  – 9. The determinant of the transpose of a matrix equals the determinant of the original matrix:

$$|A| = |A^{T}|, \qquad \begin{vmatrix} 1 & 3 & 5 \\ 0 & 1 & 2 \\ 4 & 3 & 2 \end{vmatrix} = \begin{vmatrix} 1 & 0 & 4 \\ 3 & 1 & 3 \\ 5 & 2 & 2 \end{vmatrix}$$

• One last thing: as you can see, it’s very convenient to have an upper triangular matrix (or lower triangular, or diagonal). On the other hand, that’s exactly what we get when we reduce a matrix (a square matrix, that is).
• So … why not use this? One elementary operation doesn’t change the determinant (adding a multiple of one row to another; probably the most important one; see 4.); a second one only changes its sign (interchanging two rows; see 5.); the last one multiplies the determinant by a controllable quantity (multiplying a row by that quantity; see 6.).
• Here’s an example:

$$\begin{vmatrix} 2 & -3 & 0 \\ 3 & 6 & 9 \\ 4 & 8 & 1 \end{vmatrix} = \begin{vmatrix} 2 & -3 & 0 \\ 3\cdot 1 & 3\cdot 2 & 3\cdot 3 \\ 4 & 8 & 1 \end{vmatrix} = 3 \begin{vmatrix} 2 & -3 & 0 \\ 1 & 2 & 3 \\ 4 & 8 & 1 \end{vmatrix}$$

  – Think of this as “factoring the 3 out of the second row”.
  – Next, interchange the first two rows (the sign changes; see 5.), then apply $R_2 - 2R_1$ and $R_3 - 4R_1$ (no change; see 4.):

$$3 \begin{vmatrix} 2 & -3 & 0 \\ 1 & 2 & 3 \\ 4 & 8 & 1 \end{vmatrix} = -3 \begin{vmatrix} 1 & 2 & 3 \\ 2 & -3 & 0 \\ 4 & 8 & 1 \end{vmatrix} = -3 \begin{vmatrix} 1 & 2 & 3 \\ 0 & -7 & -6 \\ 0 & 0 & -11 \end{vmatrix}$$

  – We now have an upper triangular matrix, so we can stop here (or, if you have time to waste, NOT DURING THE EXAM!, you can continue reducing the matrix by “factoring out” the -7 from the second row, etc.):

$$-3\cdot\bigl(1\cdot(-7)\cdot(-11)\bigr) = -231$$

• This method is called triangulation (for obvious reasons!).
• It’s especially useful for higher-order matrices (4x4, 5x5, etc.), since we don’t have to compute many complex cofactors but use simple elementary operations instead. Both methods take time, though: in general, expect to spend a lot of time on the determinant of a large matrix with few zeros.
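
The two methods of this chapter, cofactor expansion along a row and triangulation, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names `det_cofactor` and `det_triangulation` are made up here, matrices are plain lists of rows, the expansion always uses the first row, and exact fractions are used so that the row operations introduce no rounding.

```python
from fractions import Fraction


def det_cofactor(m):
    """Determinant by cofactor expansion along the first row."""
    n = len(m)
    if n == 1:
        return m[0][0]  # 1x1 matrix: the determinant is the entry itself
    total = 0
    for j in range(n):
        if m[0][j] == 0:
            continue  # zero entry: its cofactor never needs to be computed
        # Minor: remove the first row and column j, take the determinant.
        sub = [row[:j] + row[j + 1:] for row in m[1:]]
        # Cofactor sign (-1)^(1 + (j+1)) equals (-1)^j for 0-based j.
        total += (-1) ** j * m[0][j] * det_cofactor(sub)
    return total


def det_triangulation(m):
    """Determinant by reduction to upper triangular form."""
    a = [[Fraction(x) for x in row] for row in m]
    n = len(a)
    sign = 1
    for k in range(n):
        # Find a row at or below k with a nonzero entry in column k.
        pivot = next((r for r in range(k, n) if a[r][k] != 0), None)
        if pivot is None:
            return Fraction(0)  # no pivot available: determinant is 0
        if pivot != k:
            a[k], a[pivot] = a[pivot], a[k]
            sign = -sign  # property 5: a row interchange flips the sign
        for r in range(k + 1, n):
            factor = a[r][k] / a[k][k]
            # Property 4: adding a multiple of a row changes nothing.
            a[r] = [x - factor * y for x, y in zip(a[r], a[k])]
    result = Fraction(sign)
    for k in range(n):
        result *= a[k][k]  # property 3: product of the diagonal entries
    return result


if __name__ == "__main__":
    print(det_cofactor([[1, 5], [3, -2]]))                         # -17
    print(det_cofactor([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))         # 0
    print(det_cofactor([[1, 2, 3], [0, 4, 0], [4, 3, 3]]))         # -36
    print(det_triangulation([[2, -3, 0], [3, 6, 9], [4, 8, 1]]))   # -231
```

The sketch picks its own pivots, so its intermediate matrices differ from the hand computation above (which first factors out a 3 and interchanges two rows), but both routes must arrive at the same number.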