Week 3 Power Point Slides

advertisement

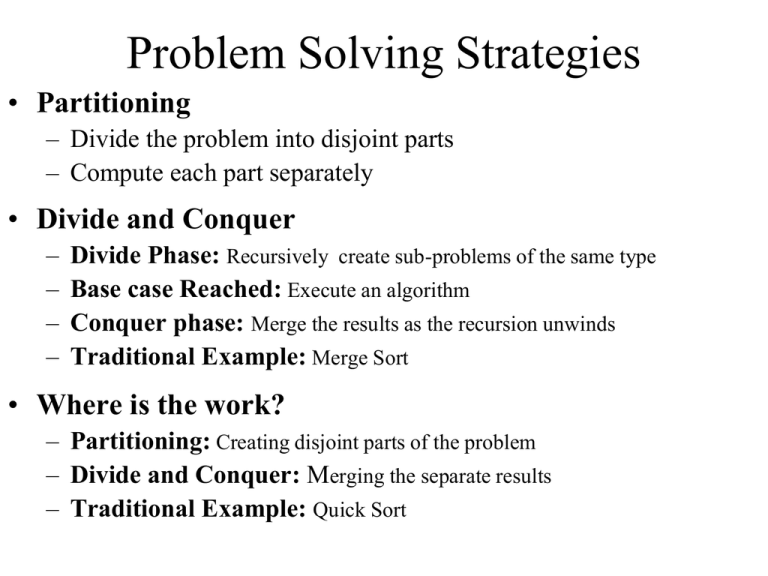

Problem Solving Strategies

• Partitioning

– Divide the problem into disjoint parts

– Compute each part separately

• Divide and Conquer

–

–

–

–

Divide Phase: Recursively create sub-problems of the same type

Base case Reached: Execute an algorithm

Conquer phase: Merge the results as the recursion unwinds

Traditional Example: Merge Sort

• Where is the work?

– Partitioning: Creating disjoint parts of the problem

– Divide and Conquer: Merging the separate results

– Traditional Example: Quick Sort

Parallel Sorting Considerations

• Distributed memory

–

–

–

–

Distributed system precision differences can cause unpredictable results

Traditional algorithms can require excessive communication

Modified algorithms minimize communication requirements

Typically, data is scattered to the P processors

• Shared Memory

– Critical sections and Mutual Exclusion locks can inhibit performance

– Modified algorithms eliminate the need for locks

– Each processor can sort N/P data points or they can work in parallel in a

more fine grain manner (no need for processor communication).

Two Related Sorts

Bubble Sort

void bubble(char[] *x, int N)

{ int sorted=0, i, size=N-1;

char* temp;

while (!sorted)

{ sorted=1;

for (i=0;i<size;i++)

{ if (strcmp(x[i],x[i+1]>0)

{ strcpy(temp,x[i]);

strcpy(x[i],x[i+1]);

strcpy(x[i+1],temp);

sorted = 0;

}

}

size--;

} }

1.

2.

Odd-Even Sort

void oddEven(char[] *x, int N)

{ int even=0,sorted=0,i,size=N-1;

char *temp;

while(!sorted)

{ sorted=1;

for(i=even; i<size; i+=2)

{ if(strcmp(x[i],x[i+1]>0)

{ strcpy(temp,x[i]);

strcpy(x[i],x[i+1]);

strcpy(x[i+1],temp);

sorted = 0;

} }

even = 1 – even;

} }

Sequential version: Odd-Even has no advantages

Parallel version: Processors can work independently without data conflicts

Bubble, Odd Even Example

Bubble Pass

Odd Even Pass

Bubble: Smaller values move left one spot per pass. Largest value

move immediately to the end. The loop size can shrink by one each pass.

Odd Even: Large values move only one position per pass. The loop

size cannot shrink. However, all interchanges can occur in parallel.

One Parallel Iteration

Distributed Memory

• Odd Processors:

sendRecv(pr data, pr-1 data);

mergeHigh(pr data, pr-1 data)

if(r<=P-2)

{ sendRecv(pr data, pr+1 data);

mergeLow(pr data, pr+1 data)

}

• Even Processors:

sendRecv(pr data, pr+1 data);

mergeLow(pr data, pr+1 data)

if(r>=1)

{ sendrecv(pr data, Pr-1 data);

mergeHigh(pr data, pr-1 data)

}

Shared Memory

• Odd Processors:

mergeLow(pr data, pr-1 data) ; Barrier

if (r<=P-2) mergeHigh(pr data,pr+1 data)

Barrier

• Even Processors:

mergeHigh(pr data, pr+1 data) ; Barrier

if (r>=1) mergeLow(pr data, pr-1 data)

Barrier

Notation: r = Processor rank, P =

number of processors, pr data is the block

of data belonging to processor, r

Note: P/2 Iterations are necessary

to complete the sort

A Distributed Memory

Implementation

• Scatter the data among available processors

• Locally sort N/P items on each processor

• Even Passes

– Even processors, p<N-1, exchange data with processor, p+1.

– Processors, p and p+1 perform a partial merge where p extracts the

lower half and p+1 extracts the upper half.

• Odd Passes

– Even processors, p>=2, exchange data with processor, p-1.

– Processors, p, and p-1 perform a partial merge where p extracts the

upper half and p-1 extracts the lower half.

• Exchanging Data: MPI_Sendrecv

Partial Merge – Lower keys

Store the lower n keys from arrays a and b into array c

mergeLow(char[] *a, char[] *b, char *c, int n)

{ int countA=0, countB=0, countC=0;

while (countC < n)

{ if (strcmp(a[countA],b[countB])

{

strcpy(c[countC++], a[countA++]); }

else

{

strcpy(c[countC++], a[countB++); }

}

}

To merge upper keys:

1. Initialize the counts to n-1

2. Decrement the counts instead of increment

3. Change the countC < n to countC >= 0

Bitonic Sequence

[3,5,8,9,10,12,14,20]

[95,90,60,40,35,23,18,0]

10,12,14,20]

[95,90,60,40,35,23,18,0]

[3,5,8,9

Increasing and then decreasing where the end can wrap around

Unsorted: 10,20,5,9.3,8,12,14,90,0,60,40,23,35,95,18

Step 1: 10,20 9,5 3,8 14,12 0,90 60,40 23,35 95,18

Step 2: [9,5][10,20][14,12][3,8][0,40][60,90][95,35][23,18]

5,9 10,20 14,12 8,3 0,40 60,90 95,35 23,18

Step 3: [5,9,8,3][14,12,10,20] [95,40,60,90][0,35,23,18]

[5,3][8,9][10,12][14,20] [95,90][60,40][23,35][0,18]

3,5, 8,9, 10,12, 14,20 95,90, 60,40, 35,23, 18,0

Step 4: [3,5,8,9,10,12,14,0] [95,90,60,40,35,23,18,20]

[3,5,8,0] [10,12,14,9] [35,23,18,20][95,90,60,40]

[3,0][8,5] [10,9][14,12] [18,20][35,23] [60,40][95,90[

Sorted: 0,3,5,8,9,10,12,14,18,20,23,35,40,60,90,95

Bitonic

Sort

Bitonic Sorting Functions

void bitonicSort(int lo, int n, int dir) void bitonicMerge(int lo, int n, int dir)

{

{

if (n>1)

if (n>1)

{

{

int m=n/2;

int m=n/2;

for (int i=lo; i<lo+m; i++)

bitonicSort(lo, m, UP);

compareExchange(i, i+m, dir);

bitonicSort(lo+m, m, DOWN;

bitonicMerge(lo, m, dir);

bitonicMerge(lo, n, dir);

bitonicMerge(lo+m, m, dir);

} }

} }

Notes:

1.

2.

dir = 0 for DOWN, and 1 for UP

compareExchange moves

a. low value left if dir = UP

b. high value left if dir = DOWN

Bitonic Sort Partners/Direction

level

j

rank

rank

rank

rank

rank

rank

rank

rank

rank

rank

rank

rank

rank

rank

rank

rank

=

=

=

=

=

=

=

=

=

=

=

=

=

=

=

=

0

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

partners

partners

partners

partners

partners

partners

partners

partners

partners

partners

partners

partners

partners

partners

partners

partners

1

0

=

=

=

=

=

=

=

=

=

=

=

=

=

=

=

=

1/L,

0/H,

3/H,

2/L,

5/L,

4/H,

7/H,

6/L,

9/L,

8/H,

11/H,

10/L,

13/L,

12/H,

15/H,

14/L,

2

0

2/L

3/L

0/H

1/H

6/H

7/H

4/L

5/L

10/L

11/L

8/H

9/H

14/H

15/H

12/L

13/L

Algorithm Steps

2

3

3

3

1

0

1

2

1/L,

0/H,

3/L,

2/H,

5/H,

4/L,

7/H,

6/L,

9/L,

8/H,

11/L,

10/H,

13/H,

12/L,

15/H,

14/L,

4/L

5/L

6/L

7/L

0/H

1/H

2/H

3/H

12/H

13/H

14/H

15/H

8/L

9/L

10/L

11/L

2/L

3/L

0/H

1/H

6/L

7/L

4/H

5/H

10/H

11/H

8/L

9/L

14/H

15/H

12/L

13/L

1/L,

0/H,

3/L,

2/H,

5/L,

4/H,

7/L,

6/H,

9/H,

8/L,

11/H,

10/L,

13/H,

12/L,

15/H,

14/L,

4

0

4

1

4

2

4

3

8/L

9/L

10/L

11/L

12/L

13/L

14/L

15/L

0/H

1/H

2/H

3/H

4/H

5/H

6/H

7/H

4/L

5/L

6/L

7/L

0/H

1/H

2/H

3/H

12/L

13/L

14/L

15/L

8/H

9/H

10/H

11/H

2/L

3/L

0/H

1/H

6/L

7/L

4/H

5/H

10/L

11/L

8/H

9/H

14/L

15/L

12/H

13/H

1/L

0/H

3/L

2/H

5/L

4/H

7/L

6/H

9/L

8/H

11/L

10/H

13/L

12/H

15/L

14/H

partner = rank ^ (1<<(level-j-1));

direction = ((rank<partner) == ((rank & (1<<level)) ==0))

Java Partner/Direction Code

public static void main(String[] args)

{ int nproc = 16, partner, levels = (int)(Math.log(nproc)/Math.log(2));

for (int rank = 0; rank<nproc; rank++)

{

System.out.printf("rank = %2d partners = ", rank);

for (int level = 1; level <= levels; level++ )

{ for (int j = 0; j < level; j++)

{ partner = rank ^ (1<<(level-j-1));

String dir = ((rank<partner)==((rank&(1<<level))==0))?"L":"H";

System.out.printf("%3d/%s", partner, dir);

}

if (level<levels) System.out.print(", ");

}

System.out.println();

} }

Parallel Bitonic Pseudo code

IF master processor

Create or retrieve data to sort

Scatter it among all processors (including the master)

ELSE

Receive portion to sort

Sort local data using an algorithm of preference

FOR( level = 1; level <= lg(P) ; level++ )

FOR ( j = 0; j<level; j++ )

partner = rank ^ (1<<(level-j-1));

Exchange data with partner

IF ((rank<partner) == ((rank & (1<<level)) ==0))

extract low values from local and received data (mergeLow)

ELSE extract high values from local and received data (mergeHigh)

Gather sorted data at the master

Bucket Sort Partitioning

• Algorithm:

– Assign a range of values to each processor

– Each processor sorts the values assigned

– The resulting values are forwarded to the master

• Steps

1. Scatter N/P numbers to each processor

2. Each Processor

a.

b.

c.

d.

Creates smaller buckets of numbers for designated for each

processor

Sends the designated buckets to the various processors and

receives the designated buckets it expects to receive

Sorts its section

Sends its data back to the processor with rank 0

Bucket Sort Partitioning

Unsorted Numbers

Unsorted Numbers

P1

P2

P3

Pp

Sorted

Sequential Bucket Sort

1.

2.

3.

4.

Drop sections of data to sort

into buckets

Sort each bucket

Copy sorted bucket data back

into the primary array

Complexity O(b * (n/b lg(n/b))

Sorted

Parallel Bucket Sort

Notes: a. Bucket Sort works well for uniformly distributed data

b. Recursively finding mediums from a data sample

(Sample Sort) attempts to equalize bucket sizes

Rank (Enumeration) Sort

1. Count the numbers smaller to each number, src[i] or

duplicates with a smaller index

2. The count is the final array position for x

for (i=0; i<N; i++)

{ count = 0;

for (j=0; j<N; j++)

if (src[i] > src[j] || src[i]=src[j] && j<i) x++;

dest[x] = src[i];

}

3. Shared Memory parallel implementation

a.

b.

Assign groups of numbers to each processor

Find positions of N/P numbers in parallel

Counting Sort

Works on primitive fixed point types: int, char, long, etc.

1. Master scatters the data among the processors

2. In parallel, each processor counts the total

occurrences for each of the N/P data points

3. Processors perform a collective sum operation

4. Processors performs an all-to-all collective prefix sum

operation

5. In parallel, each processor stores the N/P data items

appropriately in the output array

6. Sorted data gathered at the master processor

Note: This logic can be repeated to implement a radix sort

P0

Merge Sort

P0

P4

P0

P0

P2

P1

P2

P4

P3

P4

P6

P5

P6

• Scatter N/P items to each processor

• Sort Phase: Processors sort its data with a method of choice

• Merge Phase: Data routed and a merge is performed at each level

for (gap=1; gap<P; gap*=2)

{ if ((p/gap)%2 != 0) { Send data to p–gap; break; }

else

{

Receive data from p+gap

Merge with local data

} }

P7

Quick Sort

• Slave computers

– Perform the quick sort algorithm

• Base Case: if data length < threshold, send to master (rank = 0)

• Recursive Step: quick sort partition the data

– Request work from the master processor

• If none terminate

• Receive data, sort and send back to master

• Master computer

–

–

–

–

Scatter N/P items to each processor

When receive work request: Send data to slave or termination message

When receive sorted data: Place data correctly in final data list

When data sorted: save data and terminate

Note: Distributed work pools requires load balancing. Processors

maintain local work pools. When the local load queue falls below a

threshold, processors request work from their neighbors