Simultaneous Inferences and Other Regression Topics

KNNL – Chapter 4

Bonferroni Inequality

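Suppose we have 2 events, $A_1$ and $A_2$, with $P(A_1) = P(A_2) = \alpha$.

Probability that $A_1$ and/or $A_2$ occur:

$$P(A_1 \cup A_2) = P(A_1) + P(A_2) - P(A_1 \cap A_2)$$

Probability that neither $A_1$ nor $A_2$ occurs (complementary event of $A_1 \cup A_2$):

$$P(\bar{A}_1 \cap \bar{A}_2) = 1 - P(A_1 \cup A_2) = 1 - P(A_1) - P(A_2) + P(A_1 \cap A_2) \ge 1 - P(A_1) - P(A_2)$$

For these events: $P(\bar{A}_1 \cap \bar{A}_2) \ge 1 - \alpha - \alpha = 1 - 2\alpha$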

Application: We want simultaneous confidence intervals for $\beta_0$ and $\beta_1$ such that we can be $(1-\alpha)100\%$ confident that both intervals contain the true parameter:

$A_1$ ≡ Event that the CI for $\beta_0$ does not cover $\beta_0$

$A_2$ ≡ Event that the CI for $\beta_1$ does not cover $\beta_1$

Then, if each interval is a $(1-\alpha)100\%$ CI, the probability that both intervals are correct is $\ge 1 - 2\alpha$.

Thus, if we construct $(1-\alpha/2)100\%$ CIs individually, $\Pr\{\text{Both Correct}\} \ge 1 - 2(\alpha/2) = 1 - \alpha$.

Joint Confidence Intervals for β0 and β1

Goal: Want confidence intervals for $\beta_0$, $\beta_1$ so that we can be $(1-\alpha)100\%$ confident that BOTH intervals contain the true parameter.

"Trick": Make each confidence interval at $(1-\alpha/2)100\%$ confidence, using the multiplier

$$B = t(1 - \alpha/4;\; n-2)$$

$(1-\alpha/2)100\%$ CI for $\beta_0$: $b_0 \pm B\, s\{b_0\}$

$(1-\alpha/2)100\%$ CI for $\beta_1$: $b_1 \pm B\, s\{b_1\}$

Note: If we want to be 95% confident that both intervals are correct, we set up 97.5% confidence intervals for each parameter.

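| df | t(.975, df) | t(.9875, df) |
|---|---|---|
| 1 | 12.706 | 25.452 |
| 2 | 4.303 | 6.205 |
| 3 | 3.182 | 4.177 |
| 4 | 2.776 | 3.495 |
| 5 | 2.571 | 3.163 |
| 6 | 2.447 | 2.969 |
| 7 | 2.365 | 2.841 |
| 8 | 2.306 | 2.752 |
| 9 | 2.262 | 2.685 |
| 10 | 2.228 | 2.634 |
| 11 | 2.201 | 2.593 |
| 12 | 2.179 | 2.560 |
| 13 | 2.160 | 2.533 |
| 14 | 2.145 | 2.510 |
| 15 | 2.131 | 2.490 |
| 16 | 2.120 | 2.473 |
| 17 | 2.110 | 2.458 |
| 18 | 2.101 | 2.445 |
| 19 | 2.093 | 2.433 |
| 20 | 2.086 | 2.423 |
| 21 | 2.080 | 2.414 |
| 22 | 2.074 | 2.405 |
| 23 | 2.069 | 2.398 |
| 24 | 2.064 | 2.391 |
| 25 | 2.060 | 2.385 |
| 26 | 2.056 | 2.379 |
| 27 | 2.052 | 2.373 |
| 28 | 2.048 | 2.368 |
| 29 | 2.045 | 2.364 |
| 30 | 2.042 | 2.360 |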
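The Bonferroni joint intervals can be sketched in a few lines of Python. This is a minimal illustration, not part of the original slides: the data are made up, the helper names (`fit_simple_linear`, `interval`) are hypothetical, and the critical values are copied from the df = 8 row of the t table rather than computed.

```python
import math

def fit_simple_linear(x, y):
    """Least-squares fit of Y = b0 + b1*X.

    Returns (b0, b1, s_b0, s_b1, df), where df = n - 2.
    """
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar
    sse = sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))
    mse = sse / (n - 2)
    s_b1 = math.sqrt(mse / sxx)
    s_b0 = math.sqrt(mse * (1.0 / n + x_bar ** 2 / sxx))
    return b0, b1, s_b0, s_b1, n - 2

def interval(estimate, multiplier, std_err):
    """Return (lower, upper) for estimate +/- multiplier * std_err."""
    return (estimate - multiplier * std_err, estimate + multiplier * std_err)

# Made-up data, for illustration only (n = 10, so df = 8).
x = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
y = [3.1, 4.9, 7.2, 8.8, 11.1, 13.0, 14.8, 17.1, 19.0, 20.9]

b0, b1, s_b0, s_b1, df = fit_simple_linear(x, y)

# Critical values copied from the df = 8 row of the t table.
T_975_DF8 = 2.306    # individual 95% interval
T_9875_DF8 = 2.752   # Bonferroni multiplier B = t(1 - .05/4; 8)

joint_b0 = interval(b0, T_9875_DF8, s_b0)
joint_b1 = interval(b1, T_9875_DF8, s_b1)
single_b1 = interval(b1, T_975_DF8, s_b1)

print("Joint 95% intervals (each at 97.5%):")
print("  beta0:", joint_b0)
print("  beta1:", joint_b1)
print("Individual 95% interval for beta1:", single_b1)
```

The joint interval for the slope is wider than the individual 95% interval, which is the price paid for the simultaneous coverage guarantee.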

Simultaneous Estimation of Mean Responses

• Working-Hotelling Method: Confidence band for the entire regression line. Can be used for any number of confidence intervals for means, simultaneously.

• Bonferroni Method: Can be used for any g confidence intervals for means by creating $(1-\alpha/g)100\%$ CIs at each of g specified X levels.

Working-Hotelling: $\hat{Y}_h \pm W\, s\{\hat{Y}_h\}$, where $W^2 = 2F(1-\alpha;\; 2,\; n-2)$

Bonferroni: $\hat{Y}_h \pm B\, s\{\hat{Y}_h\}$, where $B = t(1 - \alpha/(2g);\; n-2)$

Bonferroni t-table (α = 0.05, 2-sided)

Each entry is t(1-.05/(2g), df); the first row gives the cumulative probability 1-.05/(2g) for each g.

| df | g = 1 | g = 2 | g = 3 | g = 4 | g = 5 | g = 6 | g = 7 | g = 8 | g = 9 | g = 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| 1-.05/(2g) | 0.9750 | 0.9875 | 0.9917 | 0.9938 | 0.9950 | 0.9958 | 0.9964 | 0.9969 | 0.9972 | 0.9975 |
| 1 | 12.706 | 25.452 | 38.188 | 50.923 | 63.657 | 76.390 | 89.123 | 101.856 | 114.589 | 127.321 |
| 2 | 4.303 | 6.205 | 7.649 | 8.860 | 9.925 | 10.886 | 11.769 | 12.590 | 13.360 | 14.089 |
| 3 | 3.182 | 4.177 | 4.857 | 5.392 | 5.841 | 6.232 | 6.580 | 6.895 | 7.185 | 7.453 |
| 4 | 2.776 | 3.495 | 3.961 | 4.315 | 4.604 | 4.851 | 5.068 | 5.261 | 5.437 | 5.598 |
| 5 | 2.571 | 3.163 | 3.534 | 3.810 | 4.032 | 4.219 | 4.382 | 4.526 | 4.655 | 4.773 |
| 6 | 2.447 | 2.969 | 3.287 | 3.521 | 3.707 | 3.863 | 3.997 | 4.115 | 4.221 | 4.317 |
| 7 | 2.365 | 2.841 | 3.128 | 3.335 | 3.499 | 3.636 | 3.753 | 3.855 | 3.947 | 4.029 |
| 8 | 2.306 | 2.752 | 3.016 | 3.206 | 3.355 | 3.479 | 3.584 | 3.677 | 3.759 | 3.833 |
| 9 | 2.262 | 2.685 | 2.933 | 3.111 | 3.250 | 3.364 | 3.462 | 3.547 | 3.622 | 3.690 |
| 10 | 2.228 | 2.634 | 2.870 | 3.038 | 3.169 | 3.277 | 3.368 | 3.448 | 3.518 | 3.581 |
| 11 | 2.201 | 2.593 | 2.820 | 2.981 | 3.106 | 3.208 | 3.295 | 3.370 | 3.437 | 3.497 |
| 12 | 2.179 | 2.560 | 2.779 | 2.934 | 3.055 | 3.153 | 3.236 | 3.308 | 3.371 | 3.428 |
| 13 | 2.160 | 2.533 | 2.746 | 2.896 | 3.012 | 3.107 | 3.187 | 3.256 | 3.318 | 3.372 |
| 14 | 2.145 | 2.510 | 2.718 | 2.864 | 2.977 | 3.069 | 3.146 | 3.214 | 3.273 | 3.326 |
| 15 | 2.131 | 2.490 | 2.694 | 2.837 | 2.947 | 3.036 | 3.112 | 3.177 | 3.235 | 3.286 |
| 16 | 2.120 | 2.473 | 2.673 | 2.813 | 2.921 | 3.008 | 3.082 | 3.146 | 3.202 | 3.252 |
| 17 | 2.110 | 2.458 | 2.655 | 2.793 | 2.898 | 2.984 | 3.056 | 3.119 | 3.173 | 3.222 |
| 18 | 2.101 | 2.445 | 2.639 | 2.775 | 2.878 | 2.963 | 3.034 | 3.095 | 3.149 | 3.197 |
| 19 | 2.093 | 2.433 | 2.625 | 2.759 | 2.861 | 2.944 | 3.014 | 3.074 | 3.127 | 3.174 |
| 20 | 2.086 | 2.423 | 2.613 | 2.744 | 2.845 | 2.927 | 2.996 | 3.055 | 3.107 | 3.153 |
| 21 | 2.080 | 2.414 | 2.601 | 2.732 | 2.831 | 2.912 | 2.980 | 3.038 | 3.090 | 3.135 |
| 22 | 2.074 | 2.405 | 2.591 | 2.720 | 2.819 | 2.899 | 2.965 | 3.023 | 3.074 | 3.119 |
| 23 | 2.069 | 2.398 | 2.582 | 2.710 | 2.807 | 2.886 | 2.952 | 3.009 | 3.059 | 3.104 |
| 24 | 2.064 | 2.391 | 2.574 | 2.700 | 2.797 | 2.875 | 2.941 | 2.997 | 3.046 | 3.091 |
| 25 | 2.060 | 2.385 | 2.566 | 2.692 | 2.787 | 2.865 | 2.930 | 2.986 | 3.035 | 3.078 |
| 26 | 2.056 | 2.379 | 2.559 | 2.684 | 2.779 | 2.856 | 2.920 | 2.975 | 3.024 | 3.067 |
| 27 | 2.052 | 2.373 | 2.552 | 2.676 | 2.771 | 2.847 | 2.911 | 2.966 | 3.014 | 3.057 |
| 28 | 2.048 | 2.368 | 2.546 | 2.669 | 2.763 | 2.839 | 2.902 | 2.957 | 3.004 | 3.047 |
| 29 | 2.045 | 2.364 | 2.541 | 2.663 | 2.756 | 2.832 | 2.894 | 2.949 | 2.996 | 3.038 |
| 30 | 2.042 | 2.360 | 2.536 | 2.657 | 2.750 | 2.825 | 2.887 | 2.941 | 2.988 | 3.030 |
| 40 | 2.021 | 2.329 | 2.499 | 2.616 | 2.704 | 2.776 | 2.836 | 2.887 | 2.931 | 2.971 |
| 50 | 2.009 | 2.311 | 2.477 | 2.591 | 2.678 | 2.747 | 2.805 | 2.855 | 2.898 | 2.937 |
| 60 | 2.000 | 2.299 | 2.463 | 2.575 | 2.660 | 2.729 | 2.785 | 2.834 | 2.877 | 2.915 |
| 70 | 1.994 | 2.291 | 2.453 | 2.564 | 2.648 | 2.715 | 2.771 | 2.820 | 2.862 | 2.899 |
| 80 | 1.990 | 2.284 | 2.445 | 2.555 | 2.639 | 2.705 | 2.761 | 2.809 | 2.850 | 2.887 |
| 90 | 1.987 | 2.280 | 2.440 | 2.549 | 2.632 | 2.698 | 2.753 | 2.800 | 2.841 | 2.878 |
| 100 | 1.984 | 2.276 | 2.435 | 2.544 | 2.626 | 2.692 | 2.747 | 2.793 | 2.834 | 2.871 |
| ∞ | 1.960 | 2.241 | 2.394 | 2.498 | 2.576 | 2.638 | 2.690 | 2.734 | 2.773 | 2.807 |
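To see when each method for mean responses gives the tighter intervals, the two multipliers can be compared directly. The sketch below is an illustration rather than part of the slides. It assumes df = n - 2 = 20 and uses the fact that an F distribution with 2 numerator degrees of freedom has a closed-form quantile; the function name `f_quantile_df1_2` is hypothetical, and the Bonferroni values are copied from the df = 20 row of the table.

```python
import math

def f_quantile_df1_2(p, df2):
    """p-th quantile of F(2, df2).

    Closed form that holds only when the numerator df equals 2:
    the CDF is 1 - (1 + 2x/df2) ** (-df2/2), inverted here for x.
    """
    return (df2 / 2.0) * ((1.0 - p) ** (-2.0 / df2) - 1.0)

alpha = 0.05
df = 20  # error degrees of freedom n - 2 (assumed for illustration)

# Working-Hotelling multiplier: W^2 = 2 F(1 - alpha; 2, n - 2)
W = math.sqrt(2.0 * f_quantile_df1_2(1.0 - alpha, df))

# Bonferroni multipliers B = t(1 - alpha/(2g); 20), copied from the table.
B_TABLE_DF20 = {1: 2.086, 2: 2.423, 3: 2.613, 4: 2.744, 5: 2.845}

print(f"Working-Hotelling W = {W:.3f} (same for every g)")
for g, B in B_TABLE_DF20.items():
    tighter = "Bonferroni" if B < W else "Working-Hotelling"
    print(f"g = {g}: B = {B:.3f} -> tighter method: {tighter}")
```

With these values Bonferroni gives narrower intervals for a small number of X levels, while Working-Hotelling takes over once g is large enough, because W does not grow with g.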

Simultaneous Predictions of New Responses

• Scheffé's Method: Widely used method for making simultaneous tests and confidence intervals. Like Working-Hotelling, the multiplier is based on the F-distribution, but here it increases with g, the number of simultaneous predictions.

• Bonferroni Method: Can be used for any g prediction intervals for new responses by creating $(1-\alpha/g)100\%$ prediction intervals at each of g specified X levels.

Scheffé: $\hat{Y}_h \pm S\, s\{pred\}$, where $S^2 = gF(1-\alpha;\; g,\; n-2)$

Bonferroni: $\hat{Y}_h \pm B\, s\{pred\}$, where $B = t(1 - \alpha/(2g);\; n-2)$

Regression Through the Origin

• In some applications, it is believed that the regression line goes through the origin.

• This implies that $E\{Y|X\} = \beta_1 X$ (proportional relation).

• Note that if we require Y = 0 whenever X = 0, then the variance of Y is 0 when X = 0 (not consistent with the regression models we have fit so far).

• Should only be used if there is a strong theoretical reason.

• The Analysis of Variance and the interpretation of $R^2$ change. Only the t-test for the slope should be used.

Regression Through the Origin

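Model: $Y_i = \beta_1 X_i + \varepsilon_i$, with $\varepsilon_i \sim N(0, \sigma^2)$ independent

Least Squares Estimation:

$$Q = \sum_{i=1}^{n} (Y_i - \beta_1 X_i)^2$$

$$\frac{\partial Q}{\partial \beta_1} = -2\sum_{i=1}^{n} (Y_i - \beta_1 X_i) X_i$$

Setting the derivative to 0 and solving for $b_1$:

$$\sum_{i=1}^{n} X_i Y_i = b_1 \sum_{i=1}^{n} X_i^2 \quad\Rightarrow\quad b_1 = \frac{\sum_{i=1}^{n} X_i Y_i}{\sum_{i=1}^{n} X_i^2} = \sum_{i=1}^{n} \left[\frac{X_i}{\sum_{i=1}^{n} X_i^2}\right] Y_i$$

Fitted values, residuals, and error variance estimate:

$$\hat{Y}_i = b_1 X_i \qquad e_i = Y_i - \hat{Y}_i \qquad s^2 = MSE = \frac{SSE}{n-1} = \frac{\sum_{i=1}^{n} e_i^2}{n-1}$$

Estimated variances:

$$s^2\{b_1\} = \frac{MSE}{\sum_{i=1}^{n} X_i^2} \qquad s^2\{\hat{Y}_h\} = \frac{MSE\, X_h^2}{\sum_{i=1}^{n} X_i^2} \qquad s^2\{pred\} = MSE\left[1 + \frac{X_h^2}{\sum_{i=1}^{n} X_i^2}\right]$$

$(1-\alpha)100\%$ CI for $\beta_1$: $b_1 \pm t(1-\alpha/2;\; n-1)\, s\{b_1\}$

$(1-\alpha)100\%$ CI for $E\{Y_h\} = \beta_1 X_h$: $\hat{Y}_h \pm t(1-\alpha/2;\; n-1)\, s\{\hat{Y}_h\}$

$(1-\alpha)100\%$ PI for $Y_{h(new)}$: $\hat{Y}_h \pm t(1-\alpha/2;\; n-1)\, s\{pred\}$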
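The regression-through-the-origin formulas translate directly into code. The following is a minimal sketch with made-up data (n = 5, so the error degrees of freedom are n - 1 = 4); the function name `fit_through_origin` is hypothetical, and the critical value t(.975, 4) = 2.776 is copied from the t table earlier in these slides.

```python
import math

def fit_through_origin(x, y):
    """Least-squares fit of Y = b1*X (no intercept).

    Returns (b1, mse, s_b1, sum_x2). Note the n - 1 divisor in MSE:
    only one parameter is estimated.
    """
    n = len(x)
    sum_x2 = sum(xi ** 2 for xi in x)
    b1 = sum(xi * yi for xi, yi in zip(x, y)) / sum_x2
    sse = sum((yi - b1 * xi) ** 2 for xi, yi in zip(x, y))
    mse = sse / (n - 1)
    s_b1 = math.sqrt(mse / sum_x2)
    return b1, mse, s_b1, sum_x2

# Made-up data, for illustration only.
x = [1, 2, 3, 4, 5]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

b1, mse, s_b1, sum_x2 = fit_through_origin(x, y)

T_975_DF4 = 2.776  # t(.975, n - 1 = 4), copied from the t table

ci_b1 = (b1 - T_975_DF4 * s_b1, b1 + T_975_DF4 * s_b1)

# Mean response and new-observation standard errors at X_h = 3.5.
x_h = 3.5
y_hat_h = b1 * x_h
s_mean = math.sqrt(mse * x_h ** 2 / sum_x2)
s_pred = math.sqrt(mse * (1.0 + x_h ** 2 / sum_x2))

print(f"b1 = {b1:.4f}, MSE = {mse:.5f}")
print(f"95% CI for beta1: ({ci_b1[0]:.4f}, {ci_b1[1]:.4f})")
print(f"At X_h = {x_h}: Y_hat = {y_hat_h:.3f}, "
      f"s(mean) = {s_mean:.4f}, s(pred) = {s_pred:.4f}")
```

Because $s^2\{\hat{Y}_h\}$ is proportional to $X_h^2$, the interval for the mean response shrinks to zero width at X = 0, which reflects the model's assumption that the line passes exactly through the origin.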

Measurement Errors

• Measurement Error in the Dependent Variable (Y): As long as there is no bias (consistently recording too high or too low), this causes no problem (the measurement error is absorbed into $\varepsilon$).

• Measurement Error in the Independent Variable (X): Causes problems in estimating $\beta_1$ (the estimate is biased downward) when the observed (recorded) value is random. See the next slide for a description.

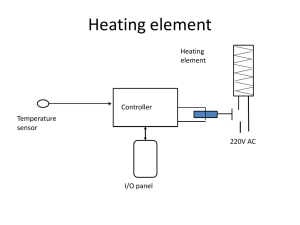

• Measurement Error in the Independent Variable (X): Not a problem when the observed (recorded) value is fixed and the actual value is random (e.g. the temperature on an oven is set at 400° but the actual temperature is not).

Measurement Error in X (Random)

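Observed (recorded) Value: $X_i^*$

True (unobserved) Value: $X_i$

$$\delta_i = X_i^* - X_i$$

True Model:

$$Y_i = \beta_0 + \beta_1 X_i + \varepsilon_i = \beta_0 + \beta_1 (X_i^* - \delta_i) + \varepsilon_i = \beta_0 + \beta_1 X_i^* + (\varepsilon_i - \beta_1 \delta_i)$$

Assumptions: No bias in the measurement error, and measurement error uncorrelated with the random error:

$$E\{\delta_i\} = E\{\varepsilon_i\} = E\{\delta_i \varepsilon_i\} = 0$$

$$\sigma\{X_i^*,\; \varepsilon_i - \beta_1 \delta_i\} = E\left\{\left[X_i^* - E\{X_i^*\}\right]\left[(\varepsilon_i - \beta_1 \delta_i) - E\{\varepsilon_i - \beta_1 \delta_i\}\right]\right\}$$

$$= E\left\{(X_i^* - X_i)(\varepsilon_i - \beta_1 \delta_i)\right\}$$

$$= E\left\{\delta_i (\varepsilon_i - \beta_1 \delta_i)\right\} = E\{\delta_i \varepsilon_i\} - \beta_1 E\{\delta_i^2\} = -\beta_1 \sigma^2\{\delta_i\}$$

The recorded value $X_i^*$ is not independent of the "error" term $\varepsilon_i - \beta_1 \delta_i$.

$$E\{Y_i \mid X_i^*\} = \beta_0^* + \beta_1^* X_i^* \quad\text{where}\quad \beta_1^* = \beta_1 \left[\frac{\sigma_X^2}{\sigma_X^2 + \sigma_Y^2}\right] < \beta_1$$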
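A small simulation can make the downward bias visible. This sketch is an illustration, not part of the slides, and rests on stated assumptions: the true X, the measurement error, and the random error are all simulated as independent normal variables with made-up standard deviations, and the second variance in the denominator of the attenuation factor is read as the measurement-error variance (the usual errors-in-variables form, written `sd_delta ** 2` below). The helper `ols_slope` is a hypothetical name.

```python
import random

def ols_slope(x, y):
    """Least-squares slope from regressing y on x (with intercept)."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    return sxy / sxx

random.seed(0)  # fixed seed so the run is repeatable

n = 20000
beta0, beta1 = 1.0, 2.0                   # assumed true parameters
sd_x, sd_delta, sd_eps = 2.0, 1.0, 1.0    # assumed standard deviations

x_true = [random.gauss(0.0, sd_x) for _ in range(n)]
# Recorded value = true value + random measurement error (delta).
x_obs = [xi + random.gauss(0.0, sd_delta) for xi in x_true]
y = [beta0 + beta1 * xi + random.gauss(0.0, sd_eps) for xi in x_true]

slope_true_x = ols_slope(x_true, y)   # regression on the true X
slope_obs_x = ols_slope(x_obs, y)     # regression on the recorded X*

# Attenuation factor under the assumptions stated above.
attenuation = sd_x ** 2 / (sd_x ** 2 + sd_delta ** 2)

print(f"slope using true X:      {slope_true_x:.3f} (beta1 = {beta1})")
print(f"slope using recorded X*: {slope_obs_x:.3f}")
print(f"beta1 * attenuation:     {beta1 * attenuation:.3f}")
```

Under these assumptions the slope fitted to the recorded values settles near $\beta_1$ times the attenuation factor rather than near $\beta_1$ itself, and collecting more data does not remove the bias.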

Inverse Prediction/Calibration

Goal: Predict a new X value from an observed new Y value, using an existing regression:

Model: $Y_i = \beta_0 + \beta_1 X_i + \varepsilon_i$

Fitted equation: $\hat{Y} = b_0 + b_1 X$

Observe a new (typically easy to measure) $Y_{h(new)}$ and want to predict the $X_{h(new)}$ corresponding to it (difficult to measure).

Point Estimate:

$$\hat{X}_{h(new)} = \frac{Y_{h(new)} - b_0}{b_1}$$

Approximate $(1-\alpha)100\%$ Prediction Interval: $\hat{X}_{h(new)} \pm t(1-\alpha/2;\; n-2)\, s\{predX\}$

$$\text{where: } s^2\{predX\} = \frac{MSE}{b_1^2}\left[1 + \frac{1}{n} + \frac{\left(\hat{X}_{h(new)} - \bar{X}\right)^2}{\sum_{i=1}^{n} (X_i - \bar{X})^2}\right]$$

The approximate interval is appropriate if the following quantity is small (say < 0.1):

$$\frac{\left[t(1-\alpha/2;\; n-2)\right]^2 MSE}{b_1^2 \sum_{i=1}^{n} (X_i - \bar{X})^2}$$

Bonferroni or Scheffé adjustments should be made for multiple simultaneous predictions.
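The calibration steps can be sketched as follows. This is an illustration with made-up data (n = 5, so df = 3) and a made-up new response of 15.0; variable names are hypothetical, and the critical value t(.975, 3) = 3.182 is copied from the t table earlier in these slides.

```python
import math

# Made-up calibration data, for illustration only.
x = [2, 4, 6, 8, 10]
y = [5.1, 8.9, 13.2, 16.8, 21.0]

n = len(x)
x_bar = sum(x) / n
y_bar = sum(y) / n
sxx = sum((xi - x_bar) ** 2 for xi in x)
sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))

# Ordinary least-squares fit of Y on X.
b1 = sxy / sxx
b0 = y_bar - b1 * x_bar
sse = sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))
mse = sse / (n - 2)

T_975_DF3 = 3.182  # t(.975, n - 2 = 3), copied from the t table

# Point estimate of the X that produced the new response.
y_new = 15.0
x_hat = (y_new - b0) / b1

# Approximate prediction standard error for X.
s_pred_x = math.sqrt(
    (mse / b1 ** 2) * (1.0 + 1.0 / n + (x_hat - x_bar) ** 2 / sxx)
)
pi_x = (x_hat - T_975_DF3 * s_pred_x, x_hat + T_975_DF3 * s_pred_x)

# Check that the approximation is reasonable (should be well below 0.1).
criterion = T_975_DF3 ** 2 * mse / (b1 ** 2 * sxx)

print(f"b0 = {b0:.3f}, b1 = {b1:.3f}, MSE = {mse:.5f}")
print(f"X_hat = {x_hat:.3f}, 95% PI: ({pi_x[0]:.3f}, {pi_x[1]:.3f})")
print(f"approximation criterion = {criterion:.5f}")
```

For several simultaneous inverse predictions, the same code applies with `T_975_DF3` replaced by the appropriate Bonferroni or Scheffé multiplier.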

Choice of X Levels

• Note that all variances and standard errors depend on $SS_{XX} = \sum (X_i - \bar{X})^2$, which depends on the spacing of the X levels and on the sample size.

• Depending on the goal of the research, when planning a controlled experiment and selecting X levels, choose:

  - 2 levels if only interested in whether there is an effect and its direction
  - 3 levels if the goal is describing the relation and any possible curvature
  - 4 or more levels for further description of the response curve and any potential non-linearity, such as an asymptote