

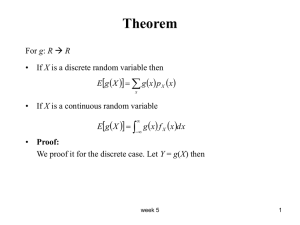

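Handout Ch 4 Practice Session

Calculus Review, Second Wave (1)

If $f(x) = h(x)\,g(x)$, then $f'(x) = h'(x)\,g(x) + g'(x)\,h(x)$.

If $f(x) = \dfrac{h(x)}{g(x)}$ and $g(x) \neq 0$, then $f'(x) = \dfrac{h'(x)\,g(x) - h(x)\,g'(x)}{g(x)^2}$.

Chain rule: if $y = f(g(x))$, then $\dfrac{dy}{dx} = f'(g(x))\,g'(x)$.

Example

1. $f(x) = (2x+3)(3x^2)$, so $f'(x) = 2(3x^2) + 6x(2x+3) = 18x^2 + 18x$.
2. $f(x) = \dfrac{2x+3}{x-1}$, so $f'(x) = \dfrac{2(x-1) - 1\cdot(2x+3)}{(x-1)^2} = \dfrac{-5}{(x-1)^2}$.
3. $f(x) = \ln(x^2+1)$, so $f'(x) = \dfrac{2x}{x^2+1}$.
4. $f(x) = (x^2+3x+2)^{17}$, so $f'(x) = 17(x^2+3x+2)^{16}(2x+3)$.

Calculus Review, Second Wave (2)

Change of variables: let $u = f(x)$, so that $f'(x)\,dx = du$ and

$$\int f(x)\,f'(x)\,dx = \int u\,du.$$

Example: $\displaystyle\int_0^{\infty} \frac{x}{1+x^2}\,dx = \;?$

Let $u = 1 + x^2$, so that $du = 2x\,dx$. The integral becomes

$$\int_1^{\infty} \frac{1}{2u}\,du = \frac{1}{2}\ln(u)\,\Big|_1^{\infty} = \infty.$$

What on earth is this?

Calculus Review, Second Wave (3)

Ch 4 integration supplement:

$$\frac{d}{dx}\tan^{-1}(x) = \frac{1}{1+x^2}$$

$$\int_{-\infty}^{\infty} \frac{1}{1+x^2}\,dx = \tan^{-1}(x)\,\Big|_{-\infty}^{\infty} = \frac{\pi}{2} - \left(-\frac{\pi}{2}\right) = \pi$$

歸去來析 (Taiwanese, roughly "might as well just die")

Expectation of a Random Variable

Discrete distribution: $E(X) = \displaystyle\sum_{\text{all } x} x\,f(x)$

Continuous distribution: $E(X) = \displaystyle\int_{-\infty}^{\infty} x\,f(x)\,dx$

$E(X)$ is called the expected value, mean, or expectation of $X$. It can be regarded as the center of gravity of the distribution.

In the discrete case, $E(X)$ exists if and only if $\displaystyle\sum_{\text{all } x} |x|\,f(x) < \infty$.

In the continuous case, $E(X)$ exists if and only if $\displaystyle\int_{-\infty}^{\infty} |x|\,f(x)\,dx < \infty$.

Whenever $X$ is a bounded random variable, $E(X)$ must exist.

The Expectation of a Function

Discrete case: let $Y = r(X)$; then

$$E[r(X)] = E(Y) = \sum_{y} y\,g(y) = \sum_{x} r(x)\,f(x).$$

Continuous case: let $Y = r(X)$; then

$$E[r(X)] = E(Y) = \int_{-\infty}^{\infty} y\,g(y)\,dy = \int_{-\infty}^{\infty} r(x)\,f(x)\,dx.$$

Suppose $X$ has the p.d.f.

$$f(x) = \begin{cases} 2x & \text{for } 0 < x < 1, \\ 0 & \text{otherwise.} \end{cases}$$

Let $Y = X^{1/2}$; then

$$E(Y) = \int_0^1 x^{1/2}(2x)\,dx = 2\int_0^1 x^{3/2}\,dx = \frac{4}{5}.$$

Let $Y = r(X_1, X_2, \ldots, X_n)$; it can be shown that

$$E(Y) = \int \cdots \int_{R^n} r(x_1, \ldots, x_n)\,f(x_1, \ldots, x_n)\,dx_1 \cdots dx_n.$$

Example 1 (4.1.3)

In a class of 50 students, the number of students $n_i$ of each age $i$ is shown in the following table:

| Age $i$ | 18 | 19 | 20 | 21 | 25 |
|---|---|---|---|---|---|
| $n_i$ | 20 | 22 | 4 | 3 | 1 |

If a student is to be selected at random from the class, what is the expected value of his age?

Solution

| Age $i$ | 18 | 19 | 20 | 21 | 25 |
|---|---|---|---|---|---|
| $n_i$ | 20 | 22 | 4 | 3 | 1 |
| $P_i$ | 0.4 | 0.44 | 0.08 | 0.06 | 0.02 |

$$E[X] = 18(0.4) + 19(0.44) + 20(0.08) + 21(0.06) + 25(0.02) = 18.92$$

Properties of Expectations

If there exists a constant $a$ such that $\Pr(X \ge a) = 1$, then $E(X) \ge a$.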
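If there exists a constant $b$ such that $\Pr(X \le b) = 1$, then $E(X) \le b$.

If $X_1, \ldots, X_n$ are $n$ random variables such that each $E(X_i)$ exists, then

$$E(X_1 + \cdots + X_n) = E(X_1) + \cdots + E(X_n).$$

For all constants $a_1, \ldots, a_n$ and $b$,

$$E(a_1 X_1 + \cdots + a_n X_n + b) = a_1 E(X_1) + \cdots + a_n E(X_n) + b.$$

Usually $E(g(X)) \neq g(E(X))$. Only linear functions $g$ satisfy $E(g(X)) = g(E(X))$.

If $X_1, \ldots, X_n$ are $n$ independent random variables such that each $E(X_i)$ exists, then

$$E\left(\prod_{i=1}^{n} X_i\right) = \prod_{i=1}^{n} E(X_i).$$

Example 2 (4.2.7)

Suppose that on each play of a certain game a gambler is equally likely to win or to lose. Suppose that when he wins, his fortune is doubled, and when he loses, his fortune is cut in half. If he begins playing with a given fortune $c$, what is the expected value of his fortune after $n$ independent plays of the game?

Solution

Let $X_i$ be the factor by which the fortune is multiplied on play $i$, so that $X_i = 2$ or $X_i = 1/2$, each with probability $1/2$, and $E(X_i) = 2 \cdot \frac{1}{2} + \frac{1}{2} \cdot \frac{1}{2} = \frac{5}{4}$. The fortune after $n$ plays is $c\,X_1 \cdots X_n$. Since the plays are independent,

$$E(c\,X_1 \cdots X_n) = c \prod_{i=1}^{n} E(X_i) = c\left(\frac{5}{4}\right)^{n}.$$

Properties of the Variance

$\operatorname{Var}(X) = 0$ if and only if there exists a constant $c$ such that $\Pr(X = c) = 1$.

For constants $a$ and $b$, $\operatorname{Var}(aX + b) = a^2 \operatorname{Var}(X)$.

Proof: Let $E(X) = \mu$; then $E(aX + b) = a\mu + b$, and

$$\operatorname{Var}(aX + b) = E[(aX + b - a\mu - b)^2] = E[(aX - a\mu)^2] = a^2 E[(X - \mu)^2] = a^2 \operatorname{Var}(X).$$

$\operatorname{Var}(X) = E(X^2) - [E(X)]^2$.

Proof:

$$\operatorname{Var}(X) = E[(X - \mu)^2] = E(X^2 - 2\mu X + \mu^2) = E(X^2) - 2\mu E(X) + \mu^2 = E(X^2) - \mu^2.$$

Properties of the Variance (continued)

If $X_1, \ldots, X_n$ are independent random variables, then

$$\operatorname{Var}(X_1 + \cdots + X_n) = \operatorname{Var}(X_1) + \cdots + \operatorname{Var}(X_n).$$

Proof: Suppose $n = 2$ first. Then $E(X_1 + X_2) = \mu_1 + \mu_2$ and

$$\operatorname{Var}(X_1 + X_2) = E[(X_1 + X_2 - \mu_1 - \mu_2)^2] = E[(X_1 - \mu_1)^2 + (X_2 - \mu_2)^2 + 2(X_1 - \mu_1)(X_2 - \mu_2)]$$

$$= \operatorname{Var}(X_1) + \operatorname{Var}(X_2) + 2E[(X_1 - \mu_1)(X_2 - \mu_2)].$$

Since $X_1$ and $X_2$ are independent,

$$E[(X_1 - \mu_1)(X_2 - \mu_2)] = E(X_1 - \mu_1)\,E(X_2 - \mu_2) = (\mu_1 - \mu_1)(\mu_2 - \mu_2) = 0,$$

so $\operatorname{Var}(X_1 + X_2) = \operatorname{Var}(X_1) + \operatorname{Var}(X_2)$.

If $X_1, \ldots, X_n$ are independent random variables, then

$$\operatorname{Var}(a_1 X_1 + \cdots + a_n X_n + b) = a_1^2 \operatorname{Var}(X_1) + \cdots + a_n^2 \operatorname{Var}(X_n).$$

Example 3 (4.3.4)

Suppose that $X$ is a random variable for which $E(X) = \mu$ and $\operatorname{Var}(X) = \sigma^2$.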
Show that $E[X(X-1)] = \mu(\mu - 1) + \sigma^2$.

Solution

$$E[X(X-1)] = E[X^2 - X] = E(X^2) - \mu = \operatorname{Var}(X) + [E(X)]^2 - \mu = \sigma^2 + \mu^2 - \mu = \mu(\mu - 1) + \sigma^2.$$

Moment Generating Functions

Consider a given random variable $X$, and for each real number $t$ let

$$\psi(t) = E(e^{tX}).$$

The function $\psi$ is called the moment generating function (m.g.f.) of $X$.

Suppose that the m.g.f. of $X$ exists for all values of $t$ in some open interval around $t = 0$. Then

$$\psi'(0) = \left[\frac{d}{dt} E(e^{tX})\right]_{t=0} = E\left[\left(\frac{d}{dt} e^{tX}\right)_{t=0}\right] = E(X).$$

More generally,

$$\psi^{(n)}(0) = \left[\frac{d^n}{dt^n} E(e^{tX})\right]_{t=0} = E\left[\left(\frac{d^n}{dt^n} e^{tX}\right)_{t=0}\right] = E\left[(X^n e^{tX})_{t=0}\right] = E(X^n).$$

Thus $\psi'(0) = E(X)$, $\psi''(0) = E(X^2)$, $\psi'''(0) = E(X^3)$, and so on.

Properties of Moment Generating Functions

Let $X$ have m.g.f. $\psi_1$, and let $Y = aX + b$ have m.g.f. $\psi_2$. Then for every value of $t$ such that $\psi_1(at)$ exists,

$$\psi_2(t) = e^{bt}\,\psi_1(at).$$

Proof: $\psi_2(t) = E(e^{tY}) = E[e^{t(aX+b)}] = e^{bt} E(e^{atX}) = e^{bt}\,\psi_1(at)$.

Suppose that $X_1, \ldots, X_n$ are $n$ independent random variables, and for $i = 1, \ldots, n$ let $\psi_i$ denote the m.g.f. of $X_i$. Let $Y = X_1 + \cdots + X_n$, and let the m.g.f. of $Y$ be denoted by $\psi$. Then for every value of $t$ such that $\psi_i(t)$ exists for each $i = 1, \ldots, n$,

$$\psi(t) = \prod_{i=1}^{n} \psi_i(t).$$

Proof:

$$\psi(t) = E(e^{tY}) = E[e^{t(X_1 + \cdots + X_n)}] = E\left(\prod_{i=1}^{n} e^{tX_i}\right) = \prod_{i=1}^{n} E(e^{tX_i}) = \prod_{i=1}^{n} \psi_i(t).$$

The m.g.f. for the Binomial Distribution

Suppose that a random variable $X$ has a binomial distribution with parameters $n$ and $p$. We can represent $X$ as the sum of $n$ independent random variables $X_1, \ldots, X_n$ with

$$\Pr(X_i = 1) = p \quad \text{and} \quad \Pr(X_i = 0) = q = 1 - p.$$

To determine the m.g.f. of $X = X_1 + \cdots + X_n$:

$$\psi_i(t) = E(e^{tX_i}) = e^{t}\Pr(X_i = 1) + e^{t(0)}\Pr(X_i = 0) = pe^{t} + q,$$

$$\psi(t) = (pe^{t} + q)^{n}.$$

Uniqueness of Moment Generating Functions

If the m.g.f.s of two random variables $X_1$ and $X_2$ are identical for all values of $t$ in an open interval around $t = 0$, then the probability distributions of $X_1$ and $X_2$ must be identical.

The additive property of the binomial distribution

Suppose $X_1$ and $X_2$ are independent random variables with binomial distributions, $X_1$ with parameters $n_1$ and $p$ and $X_2$ with parameters $n_2$ and $p$. Let the m.g.f. of $X_1 + X_2$ be denoted by $\psi$.
Then

$$\psi(t) = (pe^{t} + q)^{n_1}(pe^{t} + q)^{n_2} = (pe^{t} + q)^{n_1 + n_2}.$$

The distribution of $X_1 + X_2$ must therefore be binomial with parameters $n_1 + n_2$ and $p$.

Example 4 (4.4.8)

Suppose that $X$ is a random variable for which the m.g.f. is as follows:

$$\psi(t) = e^{t^2 + 3t} \quad \text{for } -\infty < t < \infty.$$

Find the mean and the variance of $X$.

Solution

$$\psi'(t) = (2t + 3)\,e^{t^2 + 3t} \quad \text{and} \quad \psi''(t) = (2t + 3)^2 e^{t^2 + 3t} + 2e^{t^2 + 3t}.$$

Therefore $\mu = \psi'(0) = 3$ and $\sigma^2 = \psi''(0) - \mu^2 = 11 - 9 = 2$.

Properties of Variance and Covariance

If $X$ and $Y$ are random variables such that $\operatorname{Var}(X) < \infty$ and $\operatorname{Var}(Y) < \infty$, then

$$\operatorname{Var}(X + Y) = \operatorname{Var}(X) + \operatorname{Var}(Y) + 2\operatorname{Cov}(X, Y),$$

$$\operatorname{Var}(aX + bY + c) = a^2 \operatorname{Var}(X) + b^2 \operatorname{Var}(Y) + 2ab\operatorname{Cov}(X, Y),$$

$$\operatorname{Var}\left(\sum_{i=1}^{n} X_i\right) = \sum_{i=1}^{n} \operatorname{Var}(X_i) + 2\mathop{\sum\sum}_{i<j} \operatorname{Cov}(X_i, X_j).$$

Correlation measures only linear relationships. Two random variables can be dependent but uncorrelated.

Example: Suppose that $X$ can take only the three values $-1$, $0$, and $1$, each with the same probability. Let $Y = X^2$, so $X$ and $Y$ are dependent. Then $E(XY) = E(X^3) = E(X) = 0$, so $\operatorname{Cov}(X, Y) = E(XY) - E(X)E(Y) = 0$ (uncorrelated).

Example 5 (4.6.11)

Show that two random variables $X$ and $Y$ cannot possibly have the following properties: $E(X) = 3$, $E(Y) = 2$, $E(X^2) = 10$, $E(Y^2) = 29$, and $E(XY) = 0$.

Solution
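
One way to see why the moments in Example 5 are incompatible is to compute the variances and covariance they would imply, then compare them against the Cauchy-Schwarz bound Cov(X, Y)^2 <= Var(X) Var(Y), which is equivalent to requiring the correlation to lie in [-1, 1]. The Python sketch below does only that arithmetic. The helper name `implied_moments` and its argument names are illustrative assumptions, not part of the handout.

```python
from fractions import Fraction
from math import sqrt


def implied_moments(e_x, e_y, e_x2, e_y2, e_xy):
    """Return (Var(X), Var(Y), Cov(X, Y), correlation) implied by the given moments.

    Hypothetical helper for illustration; assumes both variances come out positive.
    """
    var_x = e_x2 - e_x ** 2        # Var(X) = E(X^2) - [E(X)]^2
    var_y = e_y2 - e_y ** 2        # Var(Y) = E(Y^2) - [E(Y)]^2
    cov_xy = e_xy - e_x * e_y      # Cov(X, Y) = E(XY) - E(X)E(Y)
    rho = float(cov_xy) / sqrt(var_x * var_y)
    return var_x, var_y, cov_xy, rho


# Moments stated in Example 5 (4.6.11), kept exact with Fraction.
var_x, var_y, cov_xy, rho = implied_moments(
    Fraction(3), Fraction(2), Fraction(10), Fraction(29), Fraction(0)
)
print(var_x, var_y, cov_xy, rho)
print("Cauchy-Schwarz satisfied:", cov_xy ** 2 <= var_x * var_y)
```

With these inputs the implied variances are 1 and 25 and the implied covariance is -6, so the correlation would be -1.2. That lies outside [-1, 1], so no pair of random variables can have these moments.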