

ETL with Hadoop and MapReduce

Jan Pieter Posthuma, SQL Zaterdag (SQL Saturday), 17 November 2012

Agenda

Big Data

Why do we need it?

Hadoop

MapReduce

Pig and Hive

Demos

Expectations

What to cover

Simple ideas on how to use MapReduce for ETL

Different ways to achieve this

What not to cover

Best Practice

Internals of Hadoop

Deep analysis of Big Data

Big Data

Too much data to turn into insight the traditional BI way

Why do we need it?

Classical BI solution

Source (±10 GB) → Stage (±10 GB) → DWH (±10 GB) → filter → Data mart (±100 MB) → Report (±10 KB); total storage Σ ±30 GB

Big Data is about reducing time to insight:

- No ETL

- No Cleansing

- No Load

‘Analyze data when it arrives’

Hadoop

Replaces the need for additional staging, DWH and ETL

- Additional storage needed for highly unstructured data

Easy retrieval of (structured) data

- Pig

- Hive

- Sqoop

- ODBC for Hive

- PolyBase (HDFS)

Big Data ecosystem

Figure layers: BI tools (reports, Excel, dashboards, (virtual) data marts); Hive & Pig; Sqoop; MapReduce; HDFS; Hadoop; relational databases

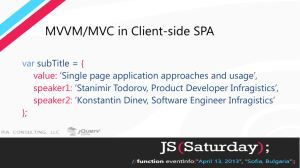

MapReduce

Map function:

var map = function (key, value, context) {}

Reduce function:

var reduce = function (key, values, context) {}

Figure: MapReduce.js is distributed to all nodes and scheduled multiple times. Each instance of var map = function (key, value, context) processes a data segment and emits (key, value) pairs through context; these pairs are passed on to var reduce = function (key, values, context).
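To make the contract between the two functions concrete without a cluster, the flow can be simulated in a single process. This is a minimal sketch under stated assumptions: runLocal and the mock context and values objects are hypothetical helpers, not the Hadoop on Azure JavaScript runtime, and the map key is assumed to be the line index (Hadoop's text input passes a byte offset instead). The driver calls map once per line, groups the emitted pairs by key (the shuffle), and calls reduce once per key with an iterator exposing hasNext() and next(), the same two methods the KNMI.js reducer uses.

```javascript
// Hypothetical single-process simulation of a MapReduce job.
// Assumption: input is an array of text lines; the line index is the map key.
function runLocal(map, reduce, lines) {
  // Map phase: collect emitted (key, value) pairs, grouped by key.
  const groups = new Map();
  const mapContext = {
    write: function (key, value) {
      if (!groups.has(key)) groups.set(key, []);
      groups.get(key).push(value);
    }
  };
  lines.forEach(function (line, index) {
    map(index, line, mapContext);
  });

  // Reduce phase: one call per distinct key, keys in sorted order.
  const output = [];
  const reduceContext = {
    write: function (key, value) { output.push([key, value]); }
  };
  Array.from(groups.keys()).sort().forEach(function (key) {
    const vals = groups.get(key);
    let i = 0;
    // Iterator with the hasNext()/next() interface used by the reducers.
    const values = {
      hasNext: function () { return i < vals.length; },
      next: function () { return vals[i++]; }
    };
    reduce(key, values, reduceContext);
  });
  return output;
}

// Example job: word count.
const map = function (key, value, context) {
  value.split(/\s+/).forEach(function (word) {
    if (word !== '') context.write(word.toLowerCase(), 1);
  });
};

const reduce = function (key, values, context) {
  let count = 0;
  while (values.hasNext()) count += values.next();
  context.write(key, count);
};

const result = runLocal(map, reduce, ['Big Data', 'big data with Hadoop']);
// result: [['big', 2], ['data', 2], ['hadoop', 1], ['with', 1]]
```

The sorted key order mimics the sort Hadoop performs between the map and reduce phases; on a real cluster the groups would additionally be partitioned across reducer nodes.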

Hive and Pig

The principle is the same: easy data retrieval

Both use MapReduce

Different origins: Facebook (Hive) and Yahoo (Pig)

Different languages: SQL-like (Hive) and more procedural (Pig)

‘Of the 150k jobs Facebook runs daily, only 500 are MapReduce jobs. The rest is HiveQL.’
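Because both languages end up as MapReduce jobs, a simple aggregate query maps naturally onto the two functions. As a rough illustration only (the table readings, its columns station and temp, and the sample rows are hypothetical, and this is not the plan Hive actually generates), a query such as SELECT station, MAX(temp) FROM readings GROUP BY station could be split into a map that emits the GROUP BY column as key and a reduce that applies the aggregate to each group:

```javascript
// Hypothetical rows standing in for a Hive table readings(station, temp).
const rows = [
  { station: '260', temp: 12 },
  { station: '344', temp: 15 },
  { station: '260', temp: 18 }
];

// Map: emit the GROUP BY column as key and the aggregated column as value.
function map(row, emit) {
  emit(row.station, row.temp);
}

// Reduce: apply the aggregate (MAX) to all values that share one key.
function reduce(key, values) {
  return [key, Math.max(...values)];
}

// Minimal shuffle: group emitted values by key, then reduce each group.
function groupByMax(input) {
  const groups = {};
  input.forEach(function (row) {
    map(row, function (k, v) {
      (groups[k] = groups[k] || []).push(v);
    });
  });
  return Object.keys(groups).sort().map(function (k) {
    return reduce(k, groups[k]);
  });
}

const result = groupByMax(rows);
// result: [['260', 18], ['344', 15]]
```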

Demo

Query RDW (Netherlands Vehicle Authority) data

RDW data with a Hive table

Pig and MapReduce to get data from KNMI (Royal Netherlands Meteorological Institute)

KNMI.js 1/2

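```javascript
// Map: called once per input line of the KNMI data file.
var map = function (key, value, context)
{
    // Skip empty lines and comment/header lines starting with '#'.
    if (value.length > 0 && value[0] != '#')
    {
        var allValues = value.split(',');
        // Only emit rows that have a non-empty value in column 7.
        if (allValues.length > 7 && allValues[7].trim() != '')
        {
            // Key: fields 0 and 1 (assumed: station and date) joined with '-';
            // value: fields 0, 1 and 7 as a comma-separated string.
            context.write(allValues[0] + '-' + allValues[1], allValues[0] + ',' + allValues[1] + ',' + allValues[7]);
        }
    }
};
```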

KNMI.js 2/2

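```javascript
// Reduce: called once per key with an iterator over all mapped values.
var reduce = function (key, values, context)
{
    var mMax = -9999;
    var mMin = 9999;
    var mKey = key.trim().split('-');
    while (values.hasNext())
    {
        // Values arrive as text; convert before comparing, otherwise
        // '100' > '25' is false (string comparison).
        var mValue = Number(values.next().split(',')[2]);
        if (!isNaN(mValue))
        {
            mMax = Math.max(mMax, mValue);
            mMin = Math.min(mMin, mValue);
        }
    }
    // Output: both key parts, max and min, tab-separated.
    context.write(key.trim(), mKey[0] + '\t' + mKey[1] + '\t' + mMax + '\t' + mMin);
};
```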
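A reducer receives every value as text, and JavaScript's < and > compare two strings character by character rather than numerically. This minimal sketch shows the pitfall and a numeric max/min fold over comma-separated records; maxMin and the sample strings are hypothetical, and empty third fields are skipped because Number('') evaluates to 0 rather than NaN.

```javascript
// Two strings are compared lexicographically, not numerically.
console.log('100' > '25'); // false: '1' sorts before '2'
console.log(100 > 25);     // true

// Fold comma-separated records into [max, min] of the third field,
// converting each field to a number before comparing.
function maxMin(records) {
  let max = -Infinity;
  let min = Infinity;
  records.forEach(function (record) {
    const field = record.split(',')[2];
    // Skip missing or blank fields (Number('') would silently give 0).
    if (field === undefined || field.trim() === '') return;
    const v = Number(field);
    if (!Number.isNaN(v)) {
      max = Math.max(max, v);
      min = Math.min(min, v);
    }
  });
  return [max, min];
}

const result = maxMin(['260,20121117,25', '260,20121117,100', '260,20121117,-3']);
// result: [100, -3]
```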