Testing Techniques

Practical Testing Techniques

Verification and Validation

• Validation

– does the software do what was wanted?

• “Are we building the right system?”

– This is difficult to determine and involves subjective

judgments (reviews, etc.)

• Verification

– does the software meet its specification?

• “Are we building the system right?”

– This can only be objective if the specifications are sufficiently

precise

– Implications?

• Everything must be verified

– …including the verification process itself

Testing

• Testing is a verification technique

– critically important for quality software

• Industry averages:

– 30-85 errors per 1000 lines of code;

– 0.5-3 errors per 1000 lines of code not detected before

delivery.

• The ability to test a system depends on a

thorough, competent requirements document.

Goals of Testing

• Goal: show a program meets its

specification

– But: testing can never be complete for nontrivial programs

• What is a successful test?

– One in which no errors were found?

– One in which one or more errors were

found?

Goals of Testing - 2

• Testing should be:

– repeatable

• if you find an error, you want to repeat the test to show

others

• if you correct an error, you want to repeat the test to check

that you fixed it

– systematic

• random testing is not enough

• select test sets that are representative of real uses

• select test sets that cover the range of behaviors of the

program

– documented

• keep track of what tests were performed, and what the

results were

Goals of Testing - 3

• Therefore you need a way to document

test cases showing:

– the input

– the expected output

– the actual result

• These test plans/scripts are critical to

project success!

Errors/Bugs

• Errors of all kinds are known as “bugs”.

• Bugs come in two main types:

– compile-time (e.g., syntax errors)

which are cheap to fix

– run-time (usually logical errors)

which are expensive to fix.

Testing Strategies

• It is never possible for a designer to anticipate

every possible use of a system. Systematic

testing is therefore essential.

• Offline strategies:

1. syntax checking & “lint” testers;

2. walkthroughs (“dry runs”);

3. inspections

• Online strategies:

1. black box testing;

2. white box testing.

Syntax Checking

• Detecting errors at compile time is preferable to

having them occur at run time!

• Syntax checking will simply determine whether a

program “looks” acceptable

• “lint” programs try to do deeper tests on code:

– will detect “this line will never be executed”

– “this variable may not be initialized”

• Compilers do a lot of this in the form of “warnings”.

Inspections

• Formal procedure, where a team of

programmers read through code, explaining

what it does.

• Inspectors play “devil’s advocate”, trying to

find bugs.

• Time consuming process!

• Can be divisive/lead to interpersonal

problems.

• Often used only for critical code.

Walkthroughs

• Similar to inspections, except that inspectors

“mentally execute” the code using simple test

data.

• Expensive in terms of human resources.

• Impossible for many systems.

• Usually used as discussion aid.

• Inspections/walkthroughs typically take 90-120 minutes.

• Can find 30%-70% of errors.

Black Box Testing

• Generate test cases from the

specification

– i.e. don’t look at the code

• Advantages:

– avoids making the same assumptions as

the programmer

– test data is independent of the

implementation

– results can be interpreted without knowing

implementation details

Consider this Function

function max_element ($in_array)
{
    /* Returns the largest element in $in_array */
    …
    return $max_elem;
}

A Test Set

input                       output  OK?

3 16 4 32 9                 32      yes
9 32 4 16 3                 32      yes
22 32 59 17 88 1            88      yes
1 88 17 59 32 22            88      yes
1 3 5 7 9 1 3 5 7           9       yes
7 5 3 1 9 7 5 3 1           9       yes
9 6 7 11 5                  11      yes
5 11 7 6 9                  11      yes
561 13 1024 79 86 222 97    1024    yes
97 222 86 79 1024 13 561    1024    yes

• Is this enough testing?
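The test set above only uses lists of distinct positive integers, each in forward and reverse order. A minimal sketch of further black-box cases follows. The function body here is a hypothetical stand-in (the original is elided), and returning null for an empty array is an assumption, since the specification does not say what should happen.

```php
<?php
// Hypothetical stand-in for max_element(); the original body is elided,
// so this version exists only to make the sketch runnable.
function max_element(array $in_array)
{
    if (count($in_array) === 0) {
        return null; // Assumption: the specification is silent on empty input.
    }
    $max_elem = $in_array[0];
    foreach ($in_array as $value) {
        if ($value > $max_elem) {
            $max_elem = $value;
        }
    }
    return $max_elem;
}

// Each case: [description, input, expected output].
$extra_cases = [
    ['single element',        [7],          7],
    ['all elements equal',    [4, 4, 4],    4],
    ['maximum comes first',   [99, 1, 2],   99],
    ['maximum comes last',    [1, 2, 99],   99],
    ['all negative',          [-5, -2, -9], -2],
    ['mixed signs with zero', [-1, 0, -3],  0],
    ['empty array',           [],           null],
];

$failures = 0;
foreach ($extra_cases as [$description, $input, $expected]) {
    $actual = max_element($input);
    $ok = ($actual === $expected);
    if (!$ok) {
        $failures++;
    }
    // Same three columns as the table: input description, result, OK?
    echo str_pad($description, 24), $ok ? 'yes' : 'NO', "\n";
}
```

The all-negative case is worth keeping: an implementation that starts its running maximum at 0 passes every row of the original table and fails here.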

“Black Box” Testing

• In black box testing, we ignore the internals of

the system, and focus on the relationship

between inputs and outputs.

• Exhaustive testing would mean examining

system output for every conceivable input.

– Clearly not practical for any real system!

• Instead, we use equivalence partitioning and

boundary analysis to identify characteristic

inputs.

Black Box Testing

• Three ways of selecting test cases:

– Paths through the specification

• e.g. choose test cases that cover each part of the

preconditions and postconditions

– Boundary conditions

• choose test cases that are at or close to boundaries for

ranges of inputs

– Off-nominal cases

• choose test cases that try out every type of invalid input

(the program should degrade gracefully, without loss of

data)

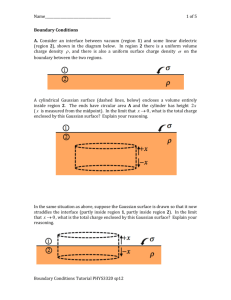

Equivalence Partitioning

• Suppose system asks for “a number between

100 and 999 inclusive”.

• This gives three equivalence classes of input:

– less than 100

– 100 to 999

– greater than 999

• We thus test the system against characteristic

values from each equivalence class.

• Example: 50 (invalid), 500 (valid), 1500

(invalid).
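A minimal sketch of the same idea in code, testing one characteristic value per class. The names is_valid_number and equivalence_class are hypothetical, introduced only for illustration.

```php
<?php
// Hypothetical validator for "a number between 100 and 999 inclusive".
function is_valid_number(int $n): bool
{
    return $n >= 100 && $n <= 999;
}

// Hypothetical helper: names the equivalence class an input falls into.
function equivalence_class(int $n): string
{
    if ($n < 100) {
        return 'less than 100';
    }
    if ($n > 999) {
        return 'greater than 999';
    }
    return '100 to 999';
}

// One characteristic value per class, mapped to whether it should be accepted.
$representatives = [50 => false, 500 => true, 1500 => false];

foreach ($representatives as $value => $expected_valid) {
    $actual_valid = is_valid_number($value);
    printf(
        "%5d  %-17s  expected %-7s  %s\n",
        $value,
        equivalence_class($value),
        $expected_valid ? 'valid' : 'invalid',
        $actual_valid === $expected_valid ? 'pass' : 'FAIL'
    );
}
```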

Boundary Analysis

• Arises from the observation that most

programs fail at input boundaries.

• Suppose system asks for “a number between

100 and 999 inclusive”.

• The boundaries are 100 and 999.

• We therefore test for values:

lower boundary: 99 100 101

upper boundary: 998 999 1000
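The six boundary values can be generated rather than typed by hand. This is a sketch only; boundary_values and accepts are hypothetical names, and accepts stands in for whatever code implements the 100 to 999 rule.

```php
<?php
// Hypothetical helper: the values just below, on, and just above each
// boundary of an inclusive integer range.
function boundary_values(int $lower, int $upper): array
{
    return [
        $lower - 1, $lower, $lower + 1,
        $upper - 1, $upper, $upper + 1,
    ];
}

// Hypothetical function under test: accepts 100 to 999 inclusive.
function accepts(int $n): bool
{
    return $n >= 100 && $n <= 999;
}

foreach (boundary_values(100, 999) as $value) {
    // The expected result is taken from the specification: only 99 and 1000
    // lie outside the range.
    $expected = !in_array($value, [99, 1000], true);
    echo $value, ' ', accepts($value) === $expected ? 'pass' : 'FAIL', "\n";
}
```

A typical mistake these values catch is writing `>` where `>=` was intended, which rejects 100 but still accepts 101.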

White (Clear) Box Testing

• In white box testing, we use knowledge of the

internal structure to guide development of

tests.

• The ideal: examine every possible run of a

system.

– Not possible in practice!

• Instead: aim to test every statement at least

once

White Box Testing

• Examine the code and test all paths

…because black box testing can never

guarantee we exercised all the code

• Path completeness:

– A test set is path complete if each path

through the code is exercised by at least

one case in the test set

White Box Testing - Example

if ($signal > 5) {
    echo "hello";
} else {
    echo "goodbye";
}

• There are two possible paths through this

code, corresponding to $signal > 5 and

$signal <= 5.

• Aim to execute each one.
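One possible way to exercise both paths is sketched below. It assumes the branch is wrapped in a hypothetical function, greeting, that returns the text instead of echoing it, so that a test can compare the result.

```php
<?php
// Hypothetical wrapper around the branch; returns the text so it can be checked.
function greeting(int $signal): string
{
    if ($signal > 5) {
        return 'hello';
    } else {
        return 'goodbye';
    }
}

// One input per path, chosen on either side of the condition.
$path_cases = [
    6 => 'hello',    // path taken when $signal > 5
    5 => 'goodbye',  // path taken when $signal <= 5
];

foreach ($path_cases as $signal => $expected) {
    echo $signal, ': ', greeting($signal) === $expected ? 'pass' : 'FAIL', "\n";
}
```

Picking 6 and 5 rather than, say, 100 and 0 covers both paths and the boundary of the condition with the same two cases.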

White Box Testing - Example 2

Consider an extremely simplified version of the code to authorize

withdrawals from an ATM:

$balance = get_account_balance($user);

if ($amount_requested <= 300) {
    if ($amount_requested < $balance) {
        dispense_cash($amount_requested);
    } else {
        echo "You don't have enough to withdraw $amount_requested";
    }
} else {
    echo "You cannot withdraw more than \$300";
}

Mini-exercise: Document the white-box tests you need to create to

test this code snippet.

Weaknesses of Path

Completeness

• Path completeness is usually infeasible

– e.g. for ($j=0, $i=0; $i<100; $i++)

if ($a[$i]==True) $j=$j+1;

– there are 2^100 paths through this program

segment (!)

… and even if you test every path, it

doesn’t mean your program is correct
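The path count comes from each pass through the loop either taking the if branch or skipping it, so every extra pass doubles the total. A small sketch, using a hypothetical count_paths helper and deliberately small loop sizes:

```php
<?php
// Counts the paths through a loop of $iterations passes in which each pass
// either takes the "if" branch or skips it.
function count_paths(int $iterations): int
{
    if ($iterations === 0) {
        return 1; // exactly one way to run zero passes
    }
    // Each extra pass doubles the count: branch taken or branch skipped.
    return 2 * count_paths($iterations - 1);
}

foreach ([1, 2, 3, 10, 20] as $n) {
    echo $n, ' iterations: ', count_paths($n), " paths\n";
}
```

Twenty passes already give over a million paths. For 100 passes the count is about 1.27 × 10^30, which does not fit in a 64-bit integer, so the sketch stops well short of it.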

Loop Testing

Another kind of boundary analysis:

1. skip the loop entirely

2. only one iteration through the loop

3. two iterations through the loop

4. m iterations through the loop (m < n)

5. (n-1), n, and (n+1) iterations

where n is the maximum number of

iterations through the loop
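The list above can be turned into concrete test inputs. The sketch below assumes a hypothetical function under test, count_true, whose loop runs at most $n times, and a hypothetical helper, loop_test_counts, that produces the iteration counts to try.

```php
<?php
// Hypothetical helper: iteration counts to try for a loop whose maximum is $n.
// $m is any typical count with $m < $n.
function loop_test_counts(int $n, int $m): array
{
    return [0, 1, 2, $m, $n - 1, $n, $n + 1];
}

// Hypothetical function under test: counts true entries, examining at most
// the first $n elements.
function count_true(array $flags, int $n): int
{
    $j = 0;
    for ($i = 0; $i < count($flags) && $i < $n; $i++) {
        if ($flags[$i] === true) {
            $j = $j + 1;
        }
    }
    return $j;
}

$n = 100;
$failures = 0;
foreach (loop_test_counts($n, 50) as $length) {
    // An input of $length true values asks the loop for $length iterations.
    $flags = array_fill(0, $length, true);
    $expected = min($length, $n); // the loop must stop at $n
    $ok = count_true($flags, $n) === $expected;
    if (!$ok) {
        $failures++;
    }
    echo $length, ' elements: ', $ok ? 'pass' : 'FAIL', "\n";
}
```

The (n+1) case checks that the loop refuses to run past its maximum; here the input has 101 elements and the expected count is still 100.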

Test Planning

• Testing must be taken seriously, and rigorous

test plans and test scripts developed.

• These are generated from the requirements

analysis document (for black box) and

program code (for white box).

• Distinguish between:

1. unit tests;

2. integration tests;

3. system tests.

Alpha and Beta Testing

• In-house testing is usually called alpha

testing.

• For software products, there is usually an

additional stage of testing, called beta testing.

• Involves distributing tested code to “beta test

sites” (usually prospective/friendly customers)

for evaluation and use.

• Typically involves a formal procedure for

reporting bugs.

Review: Triangle Classification

Question: How many test cases are enough?

Answer: A lot!

• expected cases (one for each type of triangle): (3,4,5), (4,4,5),

(5,5,5)

• boundary cases (only just not a triangle): (1,2,3)

• off-nominal cases (not valid triangle): (4,5,100)

• vary the order of inputs for expected cases: (4,5,4), (5,4,4), …

• vary the order of inputs for the boundary case: (1,3,2), (2,1,3),

(2,3,1), (3,2,1), (3,1,2)

• vary the order of inputs for the off-nominal case: (100,4,5),

(4,100,5), …

• choose two equal parameters for the off-nominal case: (100,4,4), …

• bad input: (2, 3, q)

• vary the order of bad inputs: …
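A sketch of how the cases above might be run against every ordering of the inputs. The classifier is hypothetical (no implementation is given here), and treating (1,2,3) as "not a triangle" is an assumption that follows the boundary-case bullet.

```php
<?php
// Hypothetical classifier, written only so the test cases can be run.
function classify_triangle($a, $b, $c): string
{
    foreach ([$a, $b, $c] as $side) {
        if (!is_int($side) && !is_float($side)) {
            return 'bad input';
        }
    }
    $sides = [$a, $b, $c];
    sort($sides);
    // Sides must be positive, and the two shorter sides together must be
    // strictly longer than the longest one.
    if ($sides[0] <= 0 || $sides[0] + $sides[1] <= $sides[2]) {
        return 'not a triangle';
    }
    if ($a == $b && $b == $c) {
        return 'equilateral';
    }
    if ($a == $b || $b == $c || $a == $c) {
        return 'isosceles';
    }
    return 'scalene';
}

// Hypothetical helper: all six orderings of three inputs.
function orderings(array $t): array
{
    [$x, $y, $z] = $t;
    return [
        [$x, $y, $z], [$x, $z, $y], [$y, $x, $z],
        [$y, $z, $x], [$z, $x, $y], [$z, $y, $x],
    ];
}

// Each case: [inputs, expected classification].
$cases = [
    [[3, 4, 5],   'scalene'],
    [[4, 4, 5],   'isosceles'],
    [[5, 5, 5],   'equilateral'],
    [[1, 2, 3],   'not a triangle'],  // boundary case
    [[4, 5, 100], 'not a triangle'],  // off-nominal case
    [[100, 4, 4], 'not a triangle'],  // off-nominal, two equal parameters
    [[2, 3, 'q'], 'bad input'],
];

$failures = 0;
foreach ($cases as [$triple, $expected]) {
    foreach (orderings($triple) as [$a, $b, $c]) {
        if (classify_triangle($a, $b, $c) !== $expected) {
            $failures++;
        }
    }
}
echo $failures === 0 ? "all orderings pass\n" : "$failures failures\n";
```

Seven documented cases become 42 executed checks, which is one reason to generate the orderings instead of listing them by hand.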

Documenting Test Cases

• Describe how to test a

system/module/function

• Description must identify

– short description (optional)

– system state before executing the test

– function to be tested

– input (parameter) values for the test

– expected outcome of the test

Test Automation

• Testing is time consuming and repetitive

• Software testing has to be repeated

after every change (regression testing)

• Write test drivers that can run

automatically and produce a test report
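A minimal sketch of such a driver, assuming each test case is stored as an array with a description, the function to call, the input values, and the expected outcome. The run_tests name and the record layout are hypothetical; the functions being tested (max, strlen, abs) are PHP built-ins chosen only to keep the example self-contained.

```php
<?php
// Hypothetical driver: runs each documented test case and returns a report
// recording the expected outcome, the actual result, and pass/fail.
function run_tests(array $test_cases): array
{
    $report = [];
    foreach ($test_cases as $case) {
        $actual = call_user_func_array($case['function'], $case['input']);
        $report[] = [
            'description' => $case['description'],
            'expected'    => $case['expected'],
            'actual'      => $actual,
            'passed'      => $actual === $case['expected'],
        ];
    }
    return $report;
}

$test_cases = [
    ['description' => 'largest of two values',
     'function' => 'max', 'input' => [3, 16], 'expected' => 16],
    ['description' => 'length of a string',
     'function' => 'strlen', 'input' => ['hello'], 'expected' => 5],
    ['description' => 'absolute value of a negative',
     'function' => 'abs', 'input' => [-4], 'expected' => 4],
];

$report = run_tests($test_cases);
foreach ($report as $row) {
    printf(
        "%-30s expected %s, actual %s: %s\n",
        $row['description'],
        var_export($row['expected'], true),
        var_export($row['actual'], true),
        $row['passed'] ? 'pass' : 'FAIL'
    );
}
```

Because the cases are plain data, the same file can be rerun unchanged after every code change, which is what regression testing needs.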

Exercise: Create a Test Plan

• What are you going to test?

– functions, features, subsystems, the entire

system

• What approach are you going to use?

– white box, black box, in-house, outsourced,

inspections, walkthroughs, etc.

– what variant of each will you employ?

• When will you test?

– after a new module is added? after a change

is made? nightly? …

– what is your testing schedule?

Exercise: Create a Test Plan - 2

• What pass/fail criteria will you use?

– Provide some sample test cases.

• How will you report bugs?

– what form/system will you use?

– how will you track them and resolve them?

• What are the team

roles/responsibilities?

– who will do what?