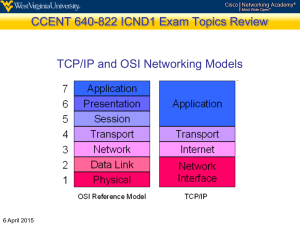

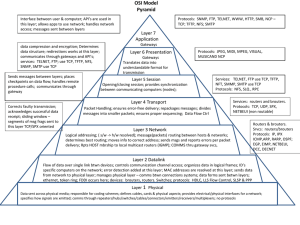

Optimizing Network Performance
Alan Whinery, U. Hawaii ITS
April 7, 2010

IP, TCP, ICMP
When you transfer a file with HTTP or FTP:
- A TCP connection is set up between sender and receiver.
- The sending computer hands the file to TCP, which slices the file into pieces, called segments, and assigns them numbers, called Sequence Numbers.
- TCP hands each piece to IP, which makes datagrams.
- IP hands each piece to the Ethernet driver, which transmits frames.

IP, TCP, ICMP (continued)
Ethernet carries the frame (through switches) to a router, which:
- takes the IP datagram out of the Ethernet frame
- decides where it should go next
- checks the cache OR queues it for the CPU
- if it is not forwarded*, may send an ICMP message back to the sender to tell it why
- hands it to a different Ethernet driver
- etc. (...)

\* Reasons routers neglect to forward: no route, expired TTL, failed IP checksum, access-list drop, input-queue flushes, selective discard.

IP, TCP, ICMP (continued)
- The last router delivers the datagrams to the receiving computer by sending them in frames across the final link.
- The receiving computer extracts the datagrams from the frames, extracts the segments from the datagrams, and sends a TCP acknowledgement for each segment's Sequence Number back to the sender.
- Good segments are handed to the application (e.g. a web browser), which will write them to a file on disk.

Elements on each end computer
- Disk – data rate, errors
- DMA – data rate, errors
- Ethernet (link) driver – link neg., speed, duplex, errors
  - Features: (Int. Coa., Chk. Off., Seg. Off.)
  - buffer sizes, frame size
  - FCS check
- TCP (OS) – transport, error/congestion recovery
  - Features: (Con. Av., buffer sizes, SACK, ECN, TS)
  - parameters – MSS, buffer/window sizes
- IP4 (OS) – MTU, TTL, Checksum
- IP6 (OS) – MTU, Hop Limit
- Cable or transmission space

Brain teaser
A packet capture near a major UHNet ingress/egress point will observe IP datagrams with good checksums carrying TCP segments with bad checksums, on the order of a dozen or so per hour.
How can this be?
- It's either an unimaginable coincidence, OR
- the source host has bit errors between the calculation of the TCP checksum and that of the IP checksum.

Elements on each switch (L2/bridge)
- link negotiation/physical
- input queue
- output queue
- VLAN tagging/processing
- FCS check
- Spanning Tree (changes/port-change-blocking)

Elements on each router
- Everything the switch has, plus:
- route table/route cache: changing, possibly temporarily invalid
- When the cache changes, “process routing” adds latency
- ARP

TCP
- Like pouring water from a bucket into a two-liter soda bottle (important to take the cap off first) :^)
- If you pour too fast, some water gets lost.
- When loss occurs, you pour more slowly.
- TCP continues re-trying until all of the water is in the bottle.

Round Trip Time
RTT, similar to the round trip time reported by “ping”, is how long it takes a packet to traverse the network from the sender to the receiver and then back to the sender.

Bandwidth * Delay Product
- BDP is one-half the RTT times the useful “bottleneck” transmission rate (BW) of the network path.
- It's actually BW * the one-way delay; 0.5 * RTT is an estimate of the one-way delay.
- Equal to the amount of data that will be “in flight” in a “full pipe” from the sender to the receiver when the earliest possible ACK is received.

How TCP works
S = sender, R = receiver
- S & R set up a “connection”
- S starts sending segments not larger than MSS
- R starts acknowledging segments as they are received in good condition.
- S & R negotiate RWIN, MSS, etc.
- Acknowledgments refer to the last segment received, not every single segment
- S limits unacknowledged “data in flight” to R's advertised RWIN

How TCP works
TCP performance on a connection is limited by the following three numbers:
- Sender's socket buffer (you can set this)
  - Must hold 2 * BDP of data to “fill pipe”
- Congestion Window (calculated during transfer)
  - Sender's estimate of the available bandwidth
  - Scratchpad number kept by sender based on ACK/loss history
- Receiver's Receive Window (you can set this)
  - Must equal ~ BDP to “fill pipe”

These can be specified with nuttcp and iperf. OS defaults can be specified in each OS.

How TCP works
- Original TCP
  - was unable to deal with out-of-order segments
  - was forced to throw away received segments that occurred after a lost segment
- Modern TCP has
  - SACK (selective acknowledgements)
  - Timestamps
  - Explicit Congestion Notification

TCP Congestion Avoidance
- Early TCP performed poorly in the face of lost packets, a problem which became more serious as transfer rates increased.
- Although bit-rates went up, RTT remained the same.
- Many TCP variants have been customized for large bandwidth-delay products: HSTCP, FAST TCP, BIC TCP, CUBIC TCP, H-TCP, Compound TCP

Modern Ethernet drivers
Current Ethernet devices offer several optimizations:
- TCP/IP checksum offloading
  - NIC chipset does checksumming for TCP and IPv4
- TCP segmentation offloading
  - OS sends large blocks of data to NIC, NIC chops it up
  - Implies TCP checksum offloading
- Interrupt coalescing
  - After receiving an Ethernet frame, NIC waits for more before raising interrupt to ICU

Modern Ethernet drivers
Optimizing the NIC's switch connection(s)
- Teaming
  - Combining more than one NIC into one “link”
- Flow-control (PAUSE frames)
  - Allowing the switch to pause the NIC's sending
  - I have not found an example of negative effects
  - Can band-aid problem NICs by smoothing rate and preventing queue drops (and therefore keeping TCP from seeing congestion)
- VLANs
  - Very useful on some servers, as you can set up several interfaces on one NIC
  - Although it is offered in some Windows drivers, I have only made it work in Linux

Modern Ethernet drivers
Optimizing the driver's use of the bus/DMA/etc.
Or Ethernet switch
- Scatter-gather
  - Multipart DMA transfers
- Write-combining
  - Data transfer “coalescing”
- Message Signaled Interrupts
  - PCI 2.2 and PCI-E messages that expand available interrupts and relieve the need for interrupt connector pins
- Multiple receive queues (hardware steering)

Modern Ethernet drivers
- Although there are gains to be had from tweaking offloading and other opts:
  - Always baseline a system with defaults before changing things
  - Sometimes, disabling all offloading and coalescing can stabilize performance (perhaps exposing a bug)
- Segmentation offloading affects a machine's perspective when packet capturing its own frames on its own interface

ethtool
Linux utility for interacting with Ethernet drivers
- Support and output format varies between drivers
- Shows useful statistics
- View or set features (offloading, coalescing, etc.)
- Set Ethernet driver ring buffer sizes
- Blink LEDs for NIC identification
- Show link condition, speed, duplex, etc.

ethtool

    root@bongo:~# ethtool eth0
    Settings for eth0:
        Supported ports: [ MII ]
        Supported link modes:   10baseT/Half 10baseT/Full
                                100baseT/Half 100baseT/Full
                                1000baseT/Full
        Supports auto-negotiation: Yes
        Advertised link modes:  10baseT/Half 10baseT/Full
                                100baseT/Half 100baseT/Full
                                1000baseT/Full
        Advertised auto-negotiation: Yes
        Speed: 1000Mb/s
        Duplex: Full
        Port: MII
        PHYAD: 1
        Transceiver: external
        Auto-negotiation: on
        Supports Wake-on: g
        Wake-on: d
        Link detected: yes

ethtool

    root@bongo:~# ethtool -i eth0
    driver: forcedeth
    version: 0.61
    firmware-version:
    Bus-info: 0000:00:14.0

    root@uhmanoa:/home/whinery# ethtool eth2
    Settings for eth2:
        Supported ports: [ ]
        Supported link modes:
        Supports auto-negotiation: No
        Advertised link modes: Not reported
        Advertised auto-negotiation: No
        Speed: Unknown! (10000)
        Duplex: Full
        Port: Twisted Pair
        PHYAD: 0
        Transceiver: internal
        Auto-negotiation: off
        Current message level: 0x00000004 (4)
        Link detected: yes

modinfo
Extract status and documentation from Linux modules (like Ethernet drivers)

    root@bongo:~# modinfo forcedeth
    filename: /lib/modules/2.6.24-26-rt/kernel/drivers/net/forcedeth.ko
    license: GPL
    description: Reverse Engineered nForce ethernet driver
    author: Manfred Spraul <manfred@colorfullife.com>
    srcversion: 9A02DCF1CF871DD11BB129E
    alias: pci:v000010DEd00000AB3sv*sd*bc*sc*i*
    (...)
    depends:
    vermagic: 2.6.24-26-rt SMP preempt mod_unload
    parm: max_interrupt_work:forcedeth maximum events handled per interrupt (int)
    parm: optimization_mode:In throughput mode (0), every tx & rx packet will generate an interrupt. In CPU mode (1), interrupts are controlled by a timer. (int)
    parm: poll_interval:Interval determines how frequent timer interrupt is generated by [(time_in_micro_secs * 100) / (2^10)]. Min is 0 and Max is 65535. (int)
    parm: msi:MSI interrupts are enabled by setting to 1 and disabled by setting to 0. (int)
    parm: msix:MSIX interrupts are enabled by setting to 1 and disabled by setting to 0. (int)
    parm: dma_64bit:High DMA is enabled by setting to 1 and disabled by setting to 0. (int)

NDT
- Network Diagnostic Tool
- Written by Rich Carlson of US Dept. of Energy Argonne Lab/Internet2
- Server written in C, primary client is a Java applet

NPAD (Network Path and Application Diagnosis)
- By Matt Mathis and John Heffner, Pittsburgh Supercomputing Center
- Allows for analysis of network loss, throughput not for a target rate and RTT
- Attempts to guide user to solution of network problems

Iperf
- Command-line throughput test server/client
- Works on Linux/Windows/Mac OS X/etc.
- Originally developed by NLANR/DAST
- Performs unicast TCP and UDP tests
- Performs multicast UDP tests
- Allows setting TCP parameters
- Original development ended in 2002
- Sourceforge fork project has produced mixed results

Nuttcp
- Command-line throughput test server/client
- Runs on Linux, Windows, Mac OS X, etc.
- By Bill Fink, Rob Scott
- Does everything iperf does
- Also third party testing
- Bidirectional traceroutes
- More extensive output

Nuttcp

    nuttcp -T30 -i1 -vv 192.168.222.5

30 second TCP send from this host to target

    nuttcp -T30 -i1 -vv 192.168.2.1 192.168.2.2

30 second TCP send from 2.1 to 2.2
- This host is neither 2.1 nor 2.2
- Each of the slaves must be running “nuttcp -S”

Nuttcp (or iperf) and periodic reports

    C:\bin\nuttcp>nuttcp.exe -i1 -T10 128.171.6.156
       22.1875 MB /   1.00 sec =  186.0967 Mbps
        7.3125 MB /   1.00 sec =   61.3394 Mbps
       14.0000 MB /   1.00 sec =  117.4402 Mbps
       12.8125 MB /   1.00 sec =  107.4796 Mbps
        7.1250 MB /   1.00 sec =   59.7715 Mbps
        6.4375 MB /   1.00 sec =   53.9991 Mbps
       10.7500 MB /   1.00 sec =   90.1771 Mbps
        4.8750 MB /   1.00 sec =   40.8945 Mbps
        9.5625 MB /   1.00 sec =   80.2164 Mbps
        1.9375 MB /   1.00 sec =   16.2529 Mbps
       97.0625 MB /  10.11 sec =   80.5500 Mbps 3 %TX 6 %RX

Seeing 10 1-second samples tells you more about a test than one 10-second average.

Testing notes
- Neither iperf nor nuttcp uses TCP auto-tuning
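
Since neither tool auto-tunes, the buffer and window sizes have to be worked out by hand before a test. The following is a minimal sketch of that arithmetic, using the definitions from the BDP and “How TCP works” slides (BDP = BW * 0.5 * RTT, sender socket buffer = 2 * BDP, receive window ~ BDP). The function names and the 1 Gbps / 70 ms example path are assumptions chosen for illustration, not values from the talk.

```python
def bdp_bytes(bottleneck_bps: float, rtt_seconds: float) -> float:
    """BDP as defined in these slides: bottleneck rate times one-way delay,
    with the one-way delay estimated as half the round trip time."""
    one_way_delay = 0.5 * rtt_seconds
    return bottleneck_bps * one_way_delay / 8.0  # bits -> bytes


def suggested_sizes(bottleneck_bps: float, rtt_seconds: float) -> dict:
    """Sizes following the slides' rules of thumb (hypothetical helper)."""
    bdp = bdp_bytes(bottleneck_bps, rtt_seconds)
    return {
        "bdp_bytes": bdp,
        "sender_socket_buffer_bytes": 2 * bdp,  # "must hold 2 * BDP"
        "receiver_window_bytes": bdp,           # "must equal ~ BDP"
    }


if __name__ == "__main__":
    # Assumed example path: 1 Gbps bottleneck, 70 ms RTT as reported by ping.
    for name, value in suggested_sizes(1e9, 0.070).items():
        print(f"{name}: {value / 2**20:.2f} MiB")
```

The resulting byte counts are what would be handed to the window/buffer settings of nuttcp or iperf, or used as OS defaults, when trying to fill the pipe on a long path.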