Multiple Regression II

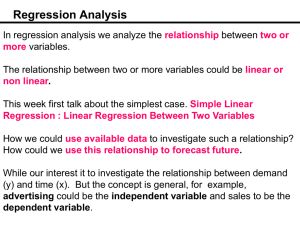

advertisement

Multiple Regression II KNNL – Chapter 7 Extra Sums of Squares • For a given dataset, the total sum of squares remains the same, no matter what predictors are included (when no missing values exist among variables) • As we include more predictors, the regression sum of squares (SSR) increases (technically does not decrease), and the error sum of squares (SSE) decreases • SSR + SSE = SSTO, regardless of predictors in model • When a model contains just X1, denote: SSR(X1), SSE(X1) • Model Containing X1, X2: SSR(X1,X2), SSE(X1,X2) • Predictive contribution of X2 above that of X1: SSR(X2|X1) = SSE(X1) – SSE(X1,X2) = SSR(X1,X2) – SSR(X1) • Extends to any number of Predictors Definitions and Decomposition of SSR SSTO SSR X 1 SSE X 1 SSR X 1 , X 2 SSE X 1 , X 2 SSR X 1 , X 2 , X 3 SSE X 1 , X 2 , X 3 SSR X 1 | X 2 SSR X 1 , X 2 SSR X 2 SSE X 2 SSE X 1 , X 2 SSR X 2 | X 1 SSR X 1 , X 2 SSR X 1 SSE X 1 SSE X 1 , X 2 SSR X 3 | X 1 , X 2 SSR X 1 , X 2 , X 3 SSR X 1 , X 2 SSE X 1 , X 2 SSE X 1 , X 2 , X 3 SSR X 2 , X 3 | X 1 SSR X 1 , X 2 , X 3 SSR X 1 SSE X 1 SSE X 1 , X 2 , X 3 SSR X 1 , X 2 SSR X 1 SSR X 2 | X 1 SSR X 2 SSR X 1 | X 2 SSR X 1 , X 2 , X 3 SSR X 1 SSR X 2 | X 1 SSR X 3 | X 1 , X 2 SSR X 1 , X 2 , X 3 SSR X 2 SSR X 1 | X 2 SSR X 3 | X 1 , X 2 SSR X 1 , X 2 , X 3 SSR X 1 SSR X 2 , X 3 | X 1 Note that as the # of predictors increases, so does the ways of decomposing SSR ANOVA – Sequential Sum of Squares Source of Variation Regression X1 X2|X1 X3|X1,X2 Error Total SS SSR(X1,X2,X3) SSR(X1) SSR(X2|X1) SSR(X3|X1,X2) SSE(X1,X2,X3) SSTO df 3 1 1 1 n-4 n-1 MS MSR(X1,X2,X3) MSR(X1) MSR(X2|X1) MSR(X3|X1,X2) MSE(X1,X2,X3) SSR X 1 SSR X 2 | X 1 MSR X 2 | X 1 1 1 SSR X 3 | X 1 , X 2 MSR X 3 | X 1 , X 2 1 SSR X 1 , X 2 , X 3 MSR X 1 , X 2 , X 3 3 SSR X 2 , X 3 | X 1 MSR X 2 , X 3 | X 1 2 MSR X 1 Extra Sums of Squares & Tests of Regression Coefficients (Single bk) Full Model: Y b b X b X b X ~ NID 0, 2 i 0 1 i1 2 i2 3 i3 i i H 0 : b3 0 H A : b3 0 Reduced Model: Yi b 0 b1 X i1 b 2 X i 2 i SSE ( R ) SSE ( F ) df R df F General Linear Test: F * SSE ( F ) df F Full Model: SSE ( F ) SSE X 1 , X 2 , X 3 Reduced Model: SSE ( R) SSE X 1 , X 2 df F n 4 df R n 3 SSE ( R ) SSE ( F ) SSE X 1 , X 2 SSE X 1 , X 2 , X 3 SSR X 3 | X 1 , X 2 df R df F n 3 n 4 1 SSR X 3 | X 1 , X 2 1 MSR X 3 | X 1 , X 2 F* SSE X 1 , X 2 , X 3 MSE X 1 , X 2 , X 3 n4 Rejection Region: F * F 1 ;1, n 4 H0 ~ F 1, n 4 P-value P F 1; n 4 F * Extra Sums of Squares & Tests of Regression Coefficients (Multiple bk) Full Model: Yi b 0 b1 X i1 b 2 X i 2 b3 X i 3 i i ~ NID 0, 2 H 0 : b 2 b3 0 H A : b 2 and/or b3 0 Reduced Model: Yi b 0 b1 X i1 i SSE ( R) SSE ( F ) df R df F General Linear Test: F * SSE ( F ) df F Full Model: SSE ( F ) SSE X 1 , X 2 , X 3 Reduced Model: SSE ( R) SSE X 1 df F n 4 df R n 2 SSE ( R ) SSE ( F ) SSE X 1 SSE X 1 , X 2 , X 3 SSR X 2 , X 3 | X 1 df R df F n 2 n 4 2 SSR X 2 , X 3 | X 1 2 MSR X 2 , X 3 | X 1 F* SSE X 1 , X 2 , X 3 MSE X 1 , X 2 , X 3 n4 Rejection Region: F * F 1 ; 2, n 4 H0 ~ F 2, n 4 P-value P F 2; n 4 F * Extra Sums of Squares & Tests of Regression Coefficients (General Case) Full Model: Yi b 0 b1 X i1 b 2 X i 2 ... b p 1 X i , p 1 i i ~ NID 0, 2 H 0 : b q ... b p 1 0 H A : At least one of b q ... b p 1 0 Reduced Model: Yi b 0 b1 X i1... b q 1 X i ,q 1 i q p SSE ( R ) SSE ( F ) df R df F General Linear Test: F * SSE ( F ) df F Full Model: SSE ( F ) SSE X 1 , X 2 ..., X p 1 df F n p Reduced Model: SSE ( R) SSE X 1 , X 2 ..., X q 1 df R n q SSE ( R ) SSE ( F ) SSE X 1 , X 2 ..., X q 1 SSE X 1 , X 2 ..., X p 1 SSR X q ,..., X p 1 | X 1 , X 2 ..., X q 1 df R df F n q n p p q SSR X q ,..., X p 1 | X 1 , X 2 ..., X q 1 p q MSR X q ,..., X p 1 | X 1 , X 2 ..., X q 1 H 0 F* ~ SSE X 1 , X 2 ..., X p 1 MSE X 1 , X 2 ..., X p 1 n p Rejection Region: F * F 1 ; p q, n p P-value P F p q; n p F * F p q, n p Other Linear Tests Suppose firm has two types of advertising: print X 1 , in $1000s and internet X 2 , in $1000s as well as promotional expenditures X 3 , in $1000s : Let Sales = Y , they vary their expenditures on n periods and observe sales in each (Price is constant) Yi b 0 b1 X i1 b 2 X i 2 b3 X i 3 i i ~ NID 0, 2 Test of equal effects of increasing each input by 1 unit (say $1000s): H 0 : b1 b 2 b3 H A : H 0 is False Full Model: Yi b 0 b1 X i1 b 2 X i 2 b3 X i 3 i Reduced Model: Yi b 0 b1 X i1 b1 X i 2 b1 X i 3 i Yi b 0 b1Wi i Wi X i1 X i 2 X i 3 df F n 4 Yi b 0 b1 X i1 X i 2 X i 3 i df R n 2 Test that Mean sales when all inputs=0 is $10,000 and effect of increasing X 3 by 1 unit is 1: H 0 : b 0 10, b3 1 H A : H 0 is False (all units are $1000s) Full Model: Yi b 0 b1 X i1 b 2 X i 2 b3 X i 3 i df F n 4 Reduced Model: Yi 10 b1 X i1 b 2 X i 2 1X i 3 i Yi 10 1X i 3 b1 X i1 b 2 X i 2 i U i b1 X i1 b 2 X i 2 i U i Yi 10 1X i 3 df R n 2 (no intercept) Coefficients of Partial Determination-I Proportion of Variation Explained by 1 or more variables, not explained by others Regression of Y on X 1 : Yi b 0 b1 X i1 i Variation Explained: SSR X 1 Unexplained: SSE X 1 SSTO SSR X 1 Regression of Y on X 2 : Yi b 0 b 2 X i 2 i Variation Explained: SSR X 2 Unexplained: SSE X 2 SSTO SSR X 2 Regression of Y on X 1 , X 2 : Yi b 0 b1 X i1 b 2 X i 2 i Variation Explained: SSR X 1 , X 2 Unexplained: SSE X 1 , X 2 SSTO SSR X 1 , X 2 Proportion of Variation in Y , Not Explained by X 1 , that is Explained by X 2 : RY22|1 SSE X 1 SSE X 1 , X 2 SSR X 1 , X 2 SSR X 1 SSR X 1 , X 2 SSR X 1 SSR X 2 | X 1 SSE X 1 SSE X 1 SSTO SSR X 1 SSE X 1 Proportion of Variation in Y , Not Explained by X 2 , that is Explained by X 1 : RY21|2 SSE X 2 SSE X 1 , X 2 SSR X 1 , X 2 SSR X 2 SSR X 1 , X 2 SSR X 2 SSR X 1 | X 2 SSE X 2 SSE X 2 SSTO SSR X 2 SSE X 2 Coefficients of Partial Determination-II RY21|23 SSE X 2 , X 3 SSR X 1 , X 2 , X 3 SSR X 2 , X 3 SSTO SSR X 2 , X 3 RY2 2|13 RY23|12 RY2 23|1 SSE X 2 , X 3 SSE X 1 , X 2 , X 3 SSR X 1 , X 2 , X 3 SSR X 1 , X 2 SSTO SSR X 1 , X 2 SSR X 1 , X 2 , X 3 SSR X 1 SSTO SSR X 1 SSE X 2 , X 3 SSE X 2 , X 3 SSTO SSR X 1 , X 3 SSE X 1 SSR X 1 , X 2 , X 3 SSR X 2 , X 3 SSR X 1 | X 2 , X 3 SSR X 1 , X 2 , X 3 SSR X 1 , X 3 SSE X 1 SSE X 1 , X 2 , X 3 SSR X 2 | X 1 , X 3 SSE X 1 , X 3 SSR X 3 | X 1 , X 2 SSE X 1 , X 2 SSR X 1 , X 2 , X 3 SSR X 1 SSE X 1 SSR X 2 , X 3 | X 1 SSE X 1 Coefficient of Partial Correlation: RY 2|1 sgn b 2 RY2 2|1 ^ ^ ^ ^ ^ if b 2 0 in Y b 0 b 1 X 1 b 2 X 2 sgn b 2 ^ ^ ^ ^ ^ if b 0 in Y b b X b 2 0 1 2 X2 1 Standardized Regression Model - I • Useful in removing round-off errors in computing (X’X)-1 • Makes easier comparison of magnitude of effects of predictors measured on different measurement scales • Coefficients represent changes in Y (in standard deviation units) as each predictor increases 1 SD (holding all others constant) • Since all variables are centered, no intercept term Standardized Regression Model - II Standardized Random Variables: Scaled to have mean = 0, SD = 1: Yi Y sY sY Yi Y 2 X ik X k sk i n 1 sk X ik X k i n 1 Correlation Transformation: Yi * 1 Yi Y n 1 sY X ik* 1 X ik X k sk n 1 Standardized Regression Model: Yi * b1* X i*1 ... b p* 1 X i*, p 1 i* s Note: b k Y sk * bk k 1,..., p 1 b 0 Y b1 X 1 ... b p 1 X p 1 k 1,..., p 1 2 k 1,..., p 1 Standardized Regression Model - III Standardized Regression Model: Yi * b1* X i*1 ... b p* 1 X i*, p 1 i* X 11* X* n( p 1) X n*1 X 1,* p 1 X n*, p 1 Y1* * Y * Y 1 n1 * Y1 1 r 21 *' * X X ( p 1)( p 1) rp 1,1 r1, p 1 r1, p 1 rXX 1 r12 1 rp 1,2 rY 1 r Y2 *' * X Y r ( p 1)1 YX rY , p 1 This results from: X ik* i 2 1 X ik X k n 1 sk i X X * ik * ik ' i Y X * i * ik i X*' X* s bk Y sk 1 X ik X k n 1 sk i 1 Yi Y n 1 sY i b* X*' Y* ( p 1)( p 1) ( p 1)1 * bk 1 i 2 sk 2 ( p 1)1 n 1 2 1 2 s X 1 s X k k 1 X ik X k sk n 1 2 X ik X k X ik ' X k ' 1 X ik ' X k ' 1 s X k , X k ' i rkk ' s s s n 1 s X s X n 1 k' k k' k k' b* X*' X* k 1,..., p 1 X ik X k 1 X*'Y* 1 i s s Y k 1 b* rXX rYX b0 Y b1 X 1 ... b p 1 X p 1 Y Y X i n 1 ik Xk s Y , X k s Y s X k rYk Multicollinearity • Consider model with 2 Predictors (this generalizes to any number of predictors) Yi = b0+b1Xi1+b2Xi2+i • When X1 and X2 are uncorrelated, the regression coefficients b1 and b2 are the same whether we fit simple regressions or a multiple regression, and: SSR(X1) = SSR(X1|X2) SSR(X2) = SSR(X2|X1) • When X1 and X2 are highly correlated, their regression coefficients become unstable, and their standard errors become larger (smaller t-statistics, wider CIs), leading to strange inferences when comparing simple and partial effects of each predictor • Estimated means and Predicted values are not affected