ch4RBF - Temple University

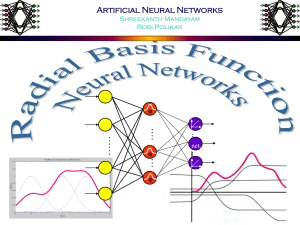

Ch. 4: Radial Basis Functions
Stephen Marsland, Machine Learning: An Algorithmic Perspective. CRC 2009
based on slides from many Internet sources
Longin Jan Latecki
Temple University
latecki@temple.edu

Perceptron

In RBFN

architecture
[Figure: RBFN architecture. Inputs x1, x2, x3, ..., xn (input layer) feed hidden units h1, h2, h3, ..., hm (hidden layer), whose outputs are weighted by W1, W2, W3, ..., Wm and summed to give f(x) (output layer).]

architecture
Three layers
• Input layer
– Source nodes that connect the network to its environment
• Hidden layer
– Hidden units provide a set of basis functions
– High dimensionality
• Output layer
– Linear combination of hidden functions

architecture
Radial basis function
$$f(x) = \sum_{j=1}^{m} w_j h_j(x)$$
$$h_j(x) = \exp\left(-\frac{(x - c_j)^2}{r_j^2}\right)$$
where $c_j$ is the center of a region and $r_j$ is the width of the receptive field.

Function Approximation with Radial Basis Functions
RBF networks approximate functions using (radial) basis functions as the building blocks.

Exact Interpolation
• RBFs have their origins in techniques for performing exact function interpolation [Bishop, 1995]:
– Goal: find a function $h(x)$ such that $h(x_n) = t_n$, $n = 1, \dots, N$
• Radial Basis Function approach (Powell 1987):
– Use a set of $N$ basis functions of the form $\phi(\|x - x_n\|)$, one for each point, where $\phi(\cdot)$ is some non-linear function.
– Output: $h(x) = \sum_n w_n \phi(\|x - x_n\|)$
• Writing the condition $h(x_n) = t_n$ for every data point gives $N$ linear equations in the $N$ weights:
$$\begin{aligned}
w_1 \phi(\|x_1 - x_1\|) + w_2 \phi(\|x_1 - x_2\|) + \dots + w_N \phi(\|x_1 - x_N\|) &= t_1 \\
w_1 \phi(\|x_2 - x_1\|) + w_2 \phi(\|x_2 - x_2\|) + \dots + w_N \phi(\|x_2 - x_N\|) &= t_2 \\
&\;\;\vdots \\
w_1 \phi(\|x_N - x_1\|) + w_2 \phi(\|x_N - x_2\|) + \dots + w_N \phi(\|x_N - x_N\|) &= t_N
\end{aligned}$$
or, in matrix form, $\Phi W = T$.

Exact Interpolation
[Figures: exact interpolation (two slides)]

• Due to noise that may be present in the data, exact interpolation is rarely useful.
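The exact interpolation system $\Phi W = T$ can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the 1-D sample points, the Gaussian choice for $\phi$, the width of 1.0, and all function names are hypothetical and do not come from the slides.

```python
import math

def gaussian(r, width=1.0):
    """Gaussian basis function of a distance r (width is an assumed value)."""
    return math.exp(-(r * r) / (width * width))

def solve_linear_system(A, b):
    """Solve A w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Work on an augmented copy so the inputs are not modified.
    M = [list(A[i]) + [b[i]] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("interpolation matrix is singular")
        M[col], M[pivot] = M[pivot], M[col]
        for row in range(col + 1, n):
            factor = M[row][col] / M[col][col]
            for k in range(col, n + 1):
                M[row][k] -= factor * M[col][k]
    # Back substitution.
    w = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * w[j] for j in range(i + 1, n))
        w[i] = (M[i][n] - s) / M[i][i]
    return w

def fit_exact_rbf(xs, ts, width=1.0):
    """Build Phi with one basis function per data point and solve Phi W = T."""
    Phi = [[gaussian(abs(xi - xj), width) for xj in xs] for xi in xs]
    return solve_linear_system(Phi, ts)

def predict(x, xs, weights, width=1.0):
    """h(x) = sum_n w_n * phi(|x - x_n|)."""
    return sum(w * gaussian(abs(x - xn), width) for w, xn in zip(weights, xs))

# Hypothetical 1-D training data (not from the slides).
xs = [0.0, 1.0, 2.0, 3.0]
ts = [0.0, 0.8, 0.9, 0.1]
weights = fit_exact_rbf(xs, ts)
fitted = [predict(x, xs, weights) for x in xs]
```

By construction, `fitted` reproduces `ts` at the training points up to rounding error. That is the defining property of exact interpolation, and it is also why noisy targets are reproduced along with the signal.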
• By introducing a number of modifications, we arrive at RBF networks:
• Complexity, rather than the size of the data, is what is important
– The number of basis functions need not be equal to $N$
• Centers need not be constrained by the input
• Each basis function can have its own adjustable width parameter $\sigma$
• A bias parameter may be included in the linear sum.

Illustrative Example - XOR Problem
[Figure: XOR problem; axes $\phi_1$ and $\phi_2$]

Function Approximation via Basis Functions and RBF Networks
• Using nonlinear functions, we can convert a nonlinearly separable problem into a linearly separable one.
• From a function approximation perspective, this is equivalent to implementing a complex function (corresponding to the nonlinearly separable decision boundary) using simple functions (corresponding to the linearly separable decision boundary).
• Implementing this procedure using a network architecture yields RBF networks, if the nonlinear mapping functions are radial basis functions.
• Radial Basis Functions:
– Radial: Symmetric around its center
– Basis Functions: Also called kernels, a set of functions whose linear combination can generate an arbitrary function in a given function space.

RBF Networks
[Figures: RBF networks (two slides)]

Network Parameters
• What do these parameters represent?
• $\phi$: The radial basis function for the hidden layer. This is a simple nonlinear mapping function (typically Gaussian) that transforms the d-dimensional input patterns to a (typically higher) H-dimensional space. The complex decision boundary will be constructed from linear combinations (weighted sums) of these simple building blocks.
• $u_{Ji}$: The weights joining the input layer to the hidden layer. These weights constitute the center points of the radial basis functions. Also called prototypes of the data.
• $\sigma$: The spread constant(s). These values determine the spread (extent) of each radial basis function.
• $W_{jk}$: The weights joining the hidden and output layers.
These are the weights used to obtain the linear combination of the radial basis functions. They determine the relative amplitudes of the RBFs when they are combined to form the complex function.
• $\|x - u_J\|$: The Euclidean distance between the input $x$ and the prototype vector $u_J$. The activation of the hidden unit is determined by this distance through $\phi$.

Training RBF Networks
Approach 1: Exact RBF
Approach 2: Fixed centers selected at random
Approach 3: Centers are obtained from clustering
Approach 4: Fully supervised training

Training RBF Networks
• Approach 1: Exact RBF
• Guarantees correct classification of all training data instances.
• Requires $N$ hidden layer nodes, one for each training instance.
• No iterative training is involved: the weights $w$ are obtained by solving a set of linear equations.
• Non-smooth, bad generalization

Exact Interpolation
[Figures: exact interpolation (two slides)]

Too Many Receptive Fields?
• In order to reduce the artificial complexity of the RBF, we need to use fewer receptive fields.
• Approach 2: Fixed centers selected at random.
• Use $M < N$ data points as the receptive field centers.
• Fast, but may require an excessive number of centers
• Approach 3: Centers are obtained from unsupervised learning (clustering).
• Centers no longer have to coincide with data points
• This is the most commonly used procedure, providing good results.

[Figure panels: Approach 2, Approach 3, Approach 3.b]

Determining the Output Weights through learning (LMS)

RBFs for Classification

Homework
• Problem 4.1, p. 117
• Problem 4.2, p. 117
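As a small illustration of determining the output weights by LMS with fixed centers, the sketch below trains an RBF network on the XOR problem. Everything specific here is an assumption made for the example and is not taken from the slides: the two centers at (0, 0) and (1, 1), the width of 1.0, the learning rate, the number of epochs, and the function names.

```python
import math

def rbf(x, center, width=1.0):
    """Gaussian basis function exp(-||x - c||^2 / r^2)."""
    dist_sq = sum((xi - ci) ** 2 for xi, ci in zip(x, center))
    return math.exp(-dist_sq / width ** 2)

def hidden_layer(x, centers):
    """Hidden-unit activations, plus a constant 1.0 for the bias term."""
    return [rbf(x, c) for c in centers] + [1.0]

def train_lms(inputs, targets, centers, rate=0.2, epochs=10000):
    """Per-sample LMS (delta rule) on the output weights; centers stay fixed."""
    weights = [0.0] * (len(centers) + 1)
    for _ in range(epochs):
        for x, t in zip(inputs, targets):
            h = hidden_layer(x, centers)
            error = t - sum(w * hj for w, hj in zip(weights, h))
            weights = [w + rate * error * hj for w, hj in zip(weights, h)]
    return weights

def predict(x, centers, weights):
    """Linear combination of the hidden-unit activations."""
    return sum(w * hj for w, hj in zip(weights, hidden_layer(x, centers)))

# XOR data; the centers are an assumed choice (two of the four data points).
inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
targets = [0, 1, 1, 0]
centers = [(0, 0), (1, 1)]
weights = train_lms(inputs, targets, centers)
outputs = [predict(x, centers, weights) for x in inputs]
```

With these assumed centers, the two hidden units map (0, 1) and (1, 0) to the same point in the hidden space, so a single linear output unit can separate the XOR classes even though they are not linearly separable in the input space.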