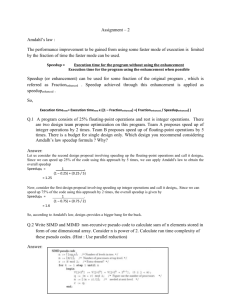

note4

advertisement

Lecture 4 Analytical Modeling of Parallel Programs Parallel Computing Fall 2008 1 Performance Metrics for Parallel Systems Number of processing elements p Execution Time Parallel runtime: the time that elapses from the moment a parallel computation starts to the moment the last processing element finishes execution. Ts: serial runtime Tp: parallel runtime Total Parallel Overhead T0 Total time collectively spent by all the processing elements – running time required by the fastest known sequential algorithm for solving the same problem on a single processing element. T0=pTp-Ts 2 Performance Metrics for Parallel Systems Speedup S: The ratio of the serial runtime of the best sequential algorithm for solving a problem to the time taken by the parallel algorithm to solve the same problem on p processing elements. S=Ts(best)/Tp Example: adding n numbers: Tp=Θ(logn), Ts= Θ(n), S= Θ(n/logn) Theoretically, speedup can never exceed the number of processing elements p(S<=p). Proof: Assume a speedup is greater than p, then each processing element can spend less than time Ts/p solving the problem. In this case, a single processing element could emulate the p processing elements and solve the problem in fewer than Ts units of time. This is a contradiction because speedup, by definition, is computed with respect to the best sequential algorithm. Superlinear speedup: In practice, a speedup greater than p is sometimes observed, this usually happens when the work performed by a serial algorithm is greater than its parallel formulation or due to hardware features that put the serial implementation at a disadvantage. 3 Example for Superlinear speedup Superlinear speedup: Example1: Superlinear effects from caches: With the problem instance size of A and 64KB cache, the cache hit rate is 80%. Assume latency to cache of 2ns and latency of DRAM of 100ns, then memory access time is 2*0.8+100*0.2=21.6ns. If the computation is memory bound and performs one FLOP/memory access, this corresponds to a processing rate of 46.3 MFLOPS. With the problem instance size of A/2 and 64KB cache, the cache hit rate is higher, i.e., 90%, 8% the remaining data comes from local DRAM and the other 2% comes from the remote DRAM with latency of 400ns, then memory access time is 2*0.9+100*0.08+400*0.02=17.8. The corresponding execution rate at each processor is 56.18MFLOPS, and for two processors the total processing rate is 112.36MFLOPS. Then the speedup will be 112.36/46.3=2.43! 4 Example for Superlinear speedup Superlinear speedup: Example2: Superlinear effects due to exploratory decomposition: explore leaf nodes of an unstructured tree. Each leaf has a label associated with it and the objective is to find a node with a specified label, say ‘S’. The solution node is the rightmost leaf in the tree. A serial formulation of this problem based on depth-first tree traversal explores the entire tree, i.e. all 14 nodes, time is 14 units time. Now a parallel formulation in which the left subtree is explored by processing element 0 and the right subtree is explored by processing element 1. The total work done by the parallel algorithm is only 9 nodes and corresponding parallel time is 5 units time. Then the speedup is 14/5=2.8. 5 Performance Metrics for Parallel Systems(cont.) Efficiency E Cost(also called Work or processor-time product) W Ratio of speedup to the number of processing element. E=S/p A measure of the fraction of time for which a processing element is usefully employed. Examples: adding n numbers on n processing elements: Tp=Θ(logn), Ts= Θ(n), S= Θ(n/logn), E= Θ(1/logn) Product of parallel runtime and the number of processing elements used. W=Tp*p Examples: adding n numbers on n processing elements: W= Θ(nlogn). Cost-optimal: if the cost of solving a problem on a parallel computer has the same asymptotic growth(in Θ terms) as a function of the input size as the fastest-known sequential algorithm on a single processing element. Problem Size W2 The number of basic computation steps in the best sequential algorithm to solve the problem on a single processing element. W2=Ts of the fastest known algorithm to solve the problem on a sequential computer. 6 Parallel vs Sequential Computing: Amdahl’s Theorem 0.1 (Amdahl’s Law) Let f, 0 ≤ f ≤ 1, be the fraction of a computation that is inherently sequential. Then the maximum obtainable speedup S on p processors is S ≤1/(f + (1 − f)/p) Proof. Let T be the sequential running time for the named computation. fT is the time spent on the inherently sequential part of the program. On p processors the remaining computation, if fully parallelizable, would achieve a running time of at most (1−f)T/p. This way the running time of the parallel program on p processors is the sum of the execution time of the sequential and parallel components that is, fT + (1 − f)T/p. The maximum allowable speedup is therefore S ≤ T/(fT + (1 − f)T/p) and the result is proven. 7 Amdahl’s Law Amdahl used this observation to advocate the building of even more powerful sequential machines as one cannot gain much by using parallel machines. For example if f = 10%, then S ≤ 10 as p → ∞. The underlying assumption in Amdahl’s Law is that the sequential component of a program is a constant fraction of the whole program. In many instances as problem size increases the fraction of computation that is inherently sequential decreases with time. In many cases even a speedup of 10 is quite significant by itself. In addition Amdahl’s law is based on the concept that parallel computing always tries to minimize parallel time. In some cases a parallel computer is used to increase the problem size that can be solved in a fixed amount of time. For example in weather prediction this would increase the accuracy of say a three-day forecast or would allow a more accurate five-day forecast. 8 Parallel vs Sequential Computing: Gustaffson’s Law Theorem 0.2 (Gustafson’s Law) Let the execution time of a parallel algorithm consist of a sequential segment fT and a parallel segment (1 − f)T and the sequential segment is constant. The scaled speedup of the algorithm is then. S =(fT + (1 − f)Tp)/(fT + (1 − f)T) = f + (1 − f)p For f = 0.05, we get S = 19.05, whereas Amdahl’s law gives an S ≤ 10.26. 1 proc p proc fT fT (1-f)Tp (1-f)T T(f+(1-f)p) T Amdahl’s Law assumes that problem size is fixed when it deals with scalability. Gustafson’s Law assumes that running time is fixed. 9 Brent’s Scheduling Principle (Emulations) Suppose we have an unlimited parallelism efficient parallel algorithm, i.e. an algorithm that runs on zillions of processors. In practice zillions of processors may not available. Suppose we have only p processors. A question that arises is what can we do to “run” the efficient zillion processor algorithm on our limited machine. One answer is emulation: simulate the zillion processor algorithm on the p processor machine. Theorem 0.3 (Brent’s Principle) Let the execution time of a parallel algorithm requires m operations and runs in parallel time t. Then running this algorithm on a limited processor machine with only p processors would require time m/p + t. Proof: Let mi be the number of computational operations at the i-th step, i.e. mi m .If we assign the p processors on the i-th step to work on these mi operations they can conclude in time mi / p mi / p 1 . Thus the total running time on p processors would be t m / p m / p 1 t m / p t m / p i i i 1 i i i 10 End Thank you! 11