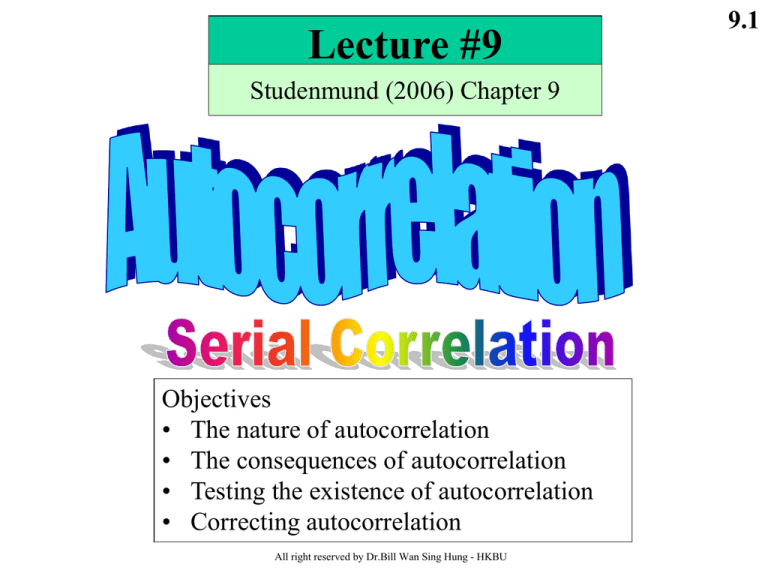

Lecture #9

Studenmund (2006) Chapter 9

Objectives

• The nature of autocorrelation

• The consequences of autocorrelation

• Testing for the existence of autocorrelation

• Correcting for autocorrelation

All right reserved by Dr.Bill Wan Sing Hung - HKBU

Time Series Data

Time series processes of economic variables, e.g., GDP, M1, interest rate, exchange rate, imports, exports, inflation rate, etc.

Realization: an observed time series data set generated from a time series process.

Remark:

Age is not a realization of a time series process.

A time trend is not a time series process either.

Decomposition of time series

$X_t = \text{Trend} + \text{Seasonal} + \text{Random}$

[Figure: $X_t$ plotted against time, decomposed into a trend, a cyclical or seasonal component, and a random component]

Static Models

$C_t = \beta_0 + \beta_1 Yd_t + \varepsilon_t$

The subscript "t" indicates time. The regression is a contemporaneous relationship, i.e., how is current consumption (C) affected by current disposable income (Yd)?

Example: Static Phillips curve model

$inflat_t = \beta_0 + \beta_1 unemploy_t + \varepsilon_t$

inflat: inflation rate

unemploy: unemployment rate

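Finite Distributed Lag Models

[Figure: an economic action at time t has effects at time t, t+1, t+2, …, t+q (forward distributed lag effect of order q)]

$C_t = \alpha_0 + \delta_0 Yd_t + \varepsilon_t$

$C_{t+1} = \alpha_0 + \delta_0 Yd_{t+1} + \delta_1 Yd_t + \varepsilon_{t+1}$, i.e., $C_t = \alpha_0 + \delta_0 Yd_t + \delta_1 Yd_{t-1} + \varepsilon_t$

….

$C_{t+q} = \alpha_0 + \delta_0 Yd_{t+q} + \dots + \delta_q Yd_t + \varepsilon_{t+q}$, i.e., $C_t = \alpha_0 + \delta_0 Yd_t + \dots + \delta_q Yd_{t-q} + \varepsilon_t$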

Backward Distributed Lag Effect

[Figure: backward distributed lag effect, linking time t with periods t-1, t-2, t-3, …, t-q]

$Y_t = \alpha_0 + \delta_0 Z_t + \delta_1 Z_{t-1} + \delta_2 Z_{t-2} + \dots + \delta_q Z_{t-q} + \varepsilon_t$

Initial state: $Z_t = Z_{t-1} = Z_{t-2} = c$

$C_t = \alpha_0 + \delta_0 Yd_t + \delta_1 Yd_{t-1} + \delta_2 Yd_{t-2} + \varepsilon_t$

Long-run propensity (LRP) $= \delta_0 + \delta_1 + \delta_2$

The LRP is the long-run change in C for a permanent 1-unit increase in Yd.

Distributed lag model in general:

$C_t = \alpha_0 + \delta_0 Yd_t + \delta_1 Yd_{t-1} + \dots + \delta_q Yd_{t-q} + \text{other factors} + \varepsilon_t$

LRP (or long-run multiplier) $= \delta_0 + \delta_1 + \dots + \delta_q$
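The LRP is simply the sum of the lag coefficients. The Python sketch below uses hypothetical coefficient values, not estimates from the lecture. It traces the cumulative change in C after Yd rises permanently by one unit at time t. Once all q lags have absorbed the change, the response equals the LRP.

```python
# Minimal sketch: long-run propensity (LRP) of a finite distributed lag model
# C_t = alpha_0 + delta_0*Yd_t + delta_1*Yd_{t-1} + ... + delta_q*Yd_{t-q} + eps_t.
# The delta values below are hypothetical, chosen only for illustration.


def long_run_propensity(deltas):
    """LRP = delta_0 + delta_1 + ... + delta_q."""
    return sum(deltas)


def response_to_permanent_change(deltas, horizon):
    """Change in C at t, t+1, ..., t+horizon-1 when Yd rises permanently by 1 unit at time t.

    At period t+j the values Yd_{t+j}, ..., Yd_{t+j-min(j, q)} have all risen,
    so the change in C is delta_0 + ... + delta_min(j, q).
    """
    q = len(deltas) - 1
    return [sum(deltas[: min(j, q) + 1]) for j in range(horizon)]


deltas = [0.5, 0.3, 0.1]  # hypothetical delta_0, delta_1, delta_2
print("LRP:", long_run_propensity(deltas))
print("cumulative response:", response_to_permanent_change(deltas, 5))
```

After q = 2 periods the cumulative response stops changing. From that point on it equals the LRP.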

Time Trends

Linear time trend: $Y_t = \beta_0 + \beta_1 t + \varepsilon_t$ (constant absolute change)

Exponential time trend: $\ln(Y_t) = \beta_0 + \beta_1 t + \varepsilon_t$ (constant growth rate)

Quadratic time trend: $Y_t = \beta_0 + \beta_1 t + \beta_2 t^2 + \varepsilon_t$ (accelerating change)

For more advanced time series analysis and modeling, you are welcome to take ECON 3670.
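The linear and exponential trends differ only in whether Y or ln(Y) is regressed on t. The sketch below assumes a hypothetical, noise-free series that grows 5% per period. It shows that the slope of the linear trend is an absolute change per period. The slope of the log-linear trend recovers the constant growth rate.

```python
import math

# Minimal sketch: linear and exponential time trends fitted by OLS.
# The series is hypothetical (noise-free, growing 5% per period).


def ols_simple(x, y):
    """Return (intercept, slope) of the OLS fit y = b0 + b1*x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    b1 = sxy / sxx
    return my - b1 * mx, b1


t = list(range(1, 21))
y = [100 * 1.05 ** k for k in t]

# Linear trend Y_t = b0 + b1*t: b1 is a constant absolute change per period.
b0_lin, b1_lin = ols_simple(t, y)

# Exponential trend ln(Y_t) = b0 + b1*t: b1 is a constant (log) growth rate.
b0_exp, b1_exp = ols_simple(t, [math.log(v) for v in y])
growth_rate = math.exp(b1_exp) - 1  # per-period growth implied by b1

print(f"linear slope: {b1_lin:.3f} per period")
print(f"log slope: {b1_exp:.5f}, implied growth rate: {growth_rate:.4f}")
```

For small growth rates, $\beta_1$ itself is close to the growth rate: $\ln(1.05) \approx 0.0488$.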

Definition: First-order autocorrelation, AR(1)

$Y_t = \beta_0 + \beta_1 X_{1t} + \varepsilon_t, \quad t = 1, \dots, T$

If $\text{Cov}(\varepsilon_t, \varepsilon_s) = E(\varepsilon_t \varepsilon_s) \neq 0$ where $t \neq s$,

and if $\varepsilon_t = \rho \varepsilon_{t-1} + u_t$

where $-1 < \rho < 1$ ($\rho$: RHO) and $u_t \sim iid(0, \sigma_u^2)$ (white noise),

this scheme is called first-order autocorrelation and is denoted AR(1).

Autoregressive: $\varepsilon_t$ is explained by its own value lagged one period.

$\rho$ (RHO): the first-order autocorrelation coefficient, or "coefficient of autocovariance"
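To see the AR(1) scheme at work, the sketch below simulates $\varepsilon_t = \rho \varepsilon_{t-1} + u_t$. It assumes normally distributed $u_t$, which the definition does not require. It then recovers $\rho$ by regressing $\varepsilon_t$ on $\varepsilon_{t-1}$ without an intercept. The function names are illustrative.

```python
import random

# Minimal sketch: simulate AR(1) errors eps_t = rho*eps_{t-1} + u_t with
# u_t ~ iid N(0, sigma_u^2) (normality is an assumption of the simulation),
# then estimate rho by OLS of eps_t on eps_{t-1} with no intercept.


def simulate_ar1(rho, n, sigma_u=1.0, seed=0):
    if not -1 < rho < 1:
        raise ValueError("a stationary AR(1) requires -1 < rho < 1")
    rng = random.Random(seed)
    # Start from the stationary distribution: Var(eps) = sigma_u^2 / (1 - rho^2).
    eps = [rng.gauss(0.0, sigma_u / (1 - rho ** 2) ** 0.5)]
    for _ in range(n - 1):
        eps.append(rho * eps[-1] + rng.gauss(0.0, sigma_u))
    return eps


def estimate_rho(eps):
    """OLS slope of eps_t on eps_{t-1} (no intercept)."""
    num = sum(eps[t] * eps[t - 1] for t in range(1, len(eps)))
    den = sum(e * e for e in eps[:-1])
    return num / den


eps = simulate_ar1(rho=0.7, n=5000)
rho_hat = estimate_rho(eps)
print(f"estimated rho: {rho_hat:.3f}")
```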

Example of serial correlation:

$Consumption_t = \beta_0 + \beta_1 Income_t + error_t$

Year | Consumption | Income | Error term
1990 | 230 | 320 | u1990
… | … | … | …
2002 | 558 | 714 | u2002
2003 | 699 | 822 | u2003
2004 | 881 | 907 | u2004
2005 | 925 | 1003 | u2005
2006 | 984 | 1174 | u2006
2007 | 1072 | 1246 | u2007

The error term represents other factors that affect consumption, e.g., tax payments (TaxPay2006, TaxPay2007).

The current year's tax payment may be determined by the previous year's rate:

$TaxPay_{2007} = \rho \, TaxPay_{2006} + u_{2007}$

$\varepsilon_t = \rho \varepsilon_{t-1} + u_t, \quad u_t \sim iid(0, \sigma_u^2)$

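If $\varepsilon_t = \rho_1 \varepsilon_{t-1} + u_t$, it is AR(1), first-order autoregressive.

If $\varepsilon_t = \rho_1 \varepsilon_{t-1} + \rho_2 \varepsilon_{t-2} + u_t$, it is AR(2), second-order autoregressive.

If $\varepsilon_t = \rho_1 \varepsilon_{t-1} + \rho_2 \varepsilon_{t-2} + \rho_3 \varepsilon_{t-3} + u_t$, it is AR(3), third-order autoregressive.

High-order autocorrelation:

If $\varepsilon_t = \rho_1 \varepsilon_{t-1} + \rho_2 \varepsilon_{t-2} + \dots + \rho_n \varepsilon_{t-n} + u_t$, it is AR(n), nth-order autoregressive.

Autocorrelation AR(1): $-1 < \rho < 1$

$\text{Cov}(\varepsilon_t, \varepsilon_{t-1}) > 0 \Rightarrow 0 < \rho < 1$: positive AR(1)

$\text{Cov}(\varepsilon_t, \varepsilon_{t-1}) < 0 \Rightarrow -1 < \rho < 0$: negative AR(1)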

[Figure: residuals $\hat{\varepsilon}_i$ plotted against time under positive autocorrelation, including a cyclical pattern]

Positive autocorrelation: the current error term tends to have the same sign as the previous one.

[Figure: residuals $\hat{\varepsilon}_i$ plotted against time under negative autocorrelation]

Negative autocorrelation: the current error term tends to have the opposite sign from the previous one.

[Figure: residuals $\hat{\varepsilon}_i$ plotted against time with no autocorrelation]

No autocorrelation: the current error term appears random relative to the previous one.
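The sign patterns in these plots can be checked by counting how often simulated errors switch sign. The sketch assumes normally distributed disturbances. For such Gaussian AR(1) errors, the long-run share of sign changes is $\arccos(\rho)/\pi$. That is roughly 20%, 50% and 80% for $\rho = 0.8, 0, -0.8$.

```python
import random

# Minimal sketch: share of periods in which the error changes sign, for simulated
# AR(1) errors with positive, zero and negative rho (u_t ~ N(0, 1) assumed).


def simulate_ar1(rho, n, seed):
    rng = random.Random(seed)
    eps = [rng.gauss(0.0, 1.0)]
    for _ in range(n - 1):
        eps.append(rho * eps[-1] + rng.gauss(0.0, 1.0))
    return eps


def share_of_sign_changes(eps):
    changes = sum(1 for a, b in zip(eps, eps[1:]) if (a > 0) != (b > 0))
    return changes / (len(eps) - 1)


shares = {rho: share_of_sign_changes(simulate_ar1(rho, 2000, seed=i))
          for i, rho in enumerate((0.8, 0.0, -0.8))}
for rho, share in shares.items():
    print(f"rho = {rho:+.1f}: sign changes in {share:.0%} of periods")
```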

The meaning of $\rho$: the error term $\varepsilon_t$ at time t is a linear combination of the current and past disturbances.

$0 < \rho < 1$ or $-1 < \rho < 0$: the further a period lies in the past, the smaller (in absolute value) is the weight of that error term (e.g., $\varepsilon_{t-1}$) in determining $\varepsilon_t$.

$\rho = 1$: the past is of equal importance to the current.

$\rho > 1$: the past is more important than the current.
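Repeatedly substituting the AR(1) scheme gives $\varepsilon_t = u_t + \rho u_{t-1} + \rho^2 u_{t-2} + \dots$. The weight on $u_{t-k}$ is therefore $\rho^k$. The sketch below lists these weights for the cases described above. The helper name is illustrative.

```python
# Minimal sketch: weights on u_t, u_{t-1}, ..., u_{t-k} in the expansion
# eps_t = u_t + rho*u_{t-1} + rho^2*u_{t-2} + ...
# For |rho| < 1 the weights shrink in absolute value (alternating in sign if rho < 0).
# For rho = 1 or rho > 1 they do not decay, and the process is nonstationary.


def weights_on_past_disturbances(rho, k_max):
    """Weights rho^0, rho^1, ..., rho^k_max."""
    return [rho ** k for k in range(k_max + 1)]


for rho in (0.5, -0.5, 1.0, 1.2):
    print(rho, [round(w, 3) for w in weights_on_past_disturbances(rho, 4)])
```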

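The consequences of serial correlation:

1. The estimated coefficients are still unbiased: $E(\hat{\beta}_k) = \beta_k$.

2. The variance of $\hat{\beta}_k$ is no longer the smallest among linear unbiased estimators.

3. The standard error of the estimated coefficient, $Se(\hat{\beta}_k)$, becomes large. In addition, the usual OLS formula for $Se(\hat{\beta}_k)$ is biased (under positive autocorrelation it typically understates the true value), so t- and F-tests become unreliable.

Therefore, when AR(1) exists in the regression, the OLS estimator is no longer "BLUE".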

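Example: Two-variable regression model: $Y_t = \beta_0 + \beta_1 X_{1t} + \varepsilon_t$

The OLS estimator of $\beta_1$: $\hat{\beta}_1 = \dfrac{\sum x_t y_t}{\sum x_t^2}$ (lowercase letters denote deviations from sample means)

If $E(\varepsilon_t \varepsilon_{t-1}) = 0$, then $\text{Var}(\hat{\beta}_1) = \dfrac{\sigma^2}{\sum x_t^2}$, where $\sigma^2 = \text{Var}(\varepsilon_t)$.

If $E(\varepsilon_t \varepsilon_{t-1}) \neq 0$ and $\varepsilon_t = \rho \varepsilon_{t-1} + u_t$, then

$\text{Var}(\hat{\beta}_1)_{AR1} = \dfrac{\sigma^2}{\sum x_t^2} + \dfrac{2\sigma^2 \rho \sum x_t x_{t+1}}{(\sum x_t^2)^2} + \dfrac{2\sigma^2 \rho^2 \sum x_t x_{t+2}}{(\sum x_t^2)^2} + \dots, \quad -1 < \rho < 1$

If $\rho = 0$ (zero autocorrelation), then $\text{Var}(\hat{\beta}_1)_{AR1} = \text{Var}(\hat{\beta}_1)$.

If $\rho \neq 0$ (autocorrelation), then $\text{Var}(\hat{\beta}_1)_{AR1} \neq \text{Var}(\hat{\beta}_1)$. In the usual case of $\rho > 0$ with a positively autocorrelated X, $\text{Var}(\hat{\beta}_1)_{AR1} > \text{Var}(\hat{\beta}_1)$.

Under AR(1), the OLS variance is not the smallest attainable.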
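The series in this variance formula can be summed to the end, $\text{Var}(\hat\beta_1)_{AR1} = \frac{\sigma^2}{\sum x_t^2}\left[1 + 2\sum_{k \ge 1} \rho^k \frac{\sum_t x_t x_{t+k}}{\sum x_t^2}\right]$. The sketch below evaluates this full sum. It cross-checks the result against the equivalent quadratic form $w'\Omega w$, with $w_t = x_t/\sum x_t^2$ and $\Omega_{ts} = \sigma^2 \rho^{|t-s|}$. The upward-trending regressor is a hypothetical choice.

```python
# Minimal sketch: exact variance of the OLS slope when eps_t follows AR(1),
# compared with the conventional formula sigma^2 / sum(x_t^2).
# sigma2 denotes Var(eps_t); x is a hypothetical, upward-trending regressor.


def deviations(x):
    m = sum(x) / len(x)
    return [v - m for v in x]


def var_slope_conventional(x, sigma2):
    d = deviations(x)
    return sigma2 / sum(v * v for v in d)


def var_slope_ar1(x, rho, sigma2):
    """sigma2/Sxx * [1 + 2 * sum_{k>=1} rho^k * sum_t x_t x_{t+k} / Sxx], x in deviations."""
    d = deviations(x)
    n, sxx = len(d), sum(v * v for v in d)
    extra = sum(rho ** k * sum(d[t] * d[t + k] for t in range(n - k)) for k in range(1, n))
    return sigma2 / sxx * (1 + 2 * extra / sxx)


def var_slope_quadratic_form(x, rho, sigma2):
    """Cross-check: sum_t sum_s w_t w_s sigma2 rho^|t-s| with w_t = x_t / Sxx."""
    d = deviations(x)
    sxx = sum(v * v for v in d)
    w = [v / sxx for v in d]
    n = len(w)
    return sum(w[t] * w[s] * sigma2 * rho ** abs(t - s) for t in range(n) for s in range(n))


x = list(range(1, 21))
for rho in (0.0, 0.6, -0.6):
    print(rho, var_slope_conventional(x, 1.0), var_slope_ar1(x, rho, 1.0))
```

With this trending regressor, $\rho = 0.6$ makes the true variance larger than the conventional formula, and $\rho = -0.6$ makes it smaller. The direction of the difference therefore depends on the sign of $\rho$ and on how X itself is autocorrelated.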

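Autoregressive scheme:

$\varepsilon_t = \rho \varepsilon_{t-1} + u_t$

$\Rightarrow \varepsilon_{t-1} = \rho \varepsilon_{t-2} + u_{t-1}$

$\Rightarrow \varepsilon_{t-2} = \rho \varepsilon_{t-3} + u_{t-2}$

$\Rightarrow \varepsilon_t = \rho[\rho \varepsilon_{t-2} + u_{t-1}] + u_t = \rho^2 \varepsilon_{t-2} + \rho u_{t-1} + u_t$

$\Rightarrow \varepsilon_t = \rho^2[\rho \varepsilon_{t-3} + u_{t-2}] + \rho u_{t-1} + u_t = \rho^3 \varepsilon_{t-3} + \rho^2 u_{t-2} + \rho u_{t-1} + u_t$

With $\sigma^2 = \text{Var}(\varepsilon_t) = \dfrac{\sigma_u^2}{1 - \rho^2}$:

$E(\varepsilon_t \varepsilon_{t-1}) = \dfrac{\rho \sigma_u^2}{1 - \rho^2} = \rho \sigma^2$

$E(\varepsilon_t \varepsilon_{t-2}) = \rho^2 \sigma^2$

$E(\varepsilon_t \varepsilon_{t-3}) = \rho^3 \sigma^2$

…

$E(\varepsilon_t \varepsilon_{t-k}) = \rho^k \sigma^2$

This means that the further a period lies in the past, the smaller its effect on the current period. Since $|\rho| < 1$, $\rho^k$ becomes smaller and smaller (in absolute value) as k grows.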
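The result $E(\varepsilon_t \varepsilon_{t-k}) = \rho^k \sigma^2$ can be checked numerically. The sketch below simulates a long AR(1) series, assuming normal disturbances. It compares sample autocovariances with the theoretical values.

```python
import random

# Minimal sketch: check E(eps_t * eps_{t-k}) = rho^k * sigma^2, with
# sigma^2 = sigma_u^2 / (1 - rho^2), on a long simulated AR(1) series.
# Normal disturbances u_t are an assumption of the simulation.

rho, sigma_u, n = 0.6, 1.0, 100_000
sigma2 = sigma_u ** 2 / (1 - rho ** 2)  # Var(eps_t) of the stationary AR(1)

rng = random.Random(42)
eps = [rng.gauss(0.0, sigma2 ** 0.5)]
for _ in range(n - 1):
    eps.append(rho * eps[-1] + rng.gauss(0.0, sigma_u))


def sample_autocov(e, k):
    """Sample covariance between e_t and e_{t-k} (divisor len(e))."""
    m = sum(e) / len(e)
    return sum((e[t] - m) * (e[t - k] - m) for t in range(k, len(e))) / len(e)


for k in range(1, 5):
    print(k, round(sample_autocov(eps, k), 3), round(rho ** k * sigma2, 3))
```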

How to detect autocorrelation?

Use the Durbin-Watson statistic, DW* (or d*).

Example: at the 5% level of significance, with k = 1 and n = 24, the DW table gives dL = 1.27 and du = 1.45. The regression gives DW* = 0.9107, so DW* < dL.

(k is the number of independent variables, excluding the intercept.)

Durbin-Watson autocorrelation test

From the OLS regression result: d (or DW*) = 0.9107

Check the DW statistic table (at the 5% level of significance, k' = 1, n = 24): dL = 1.27, du = 1.45

H0: $\rho = 0$ (no autocorrelation)

H1: $\rho > 0$ (positive autocorrelation exists)

[Figure: DW number line from 0 to 2 marking dL = 1.27 and du = 1.45. The reject-H0 region lies below dL, and DW* = 0.9107 falls inside it.]

Durbin-Watson test

OLS: $Y_t = \beta_0 + \beta_1 X_{1t} + \dots + \beta_k X_{kt} + \varepsilon_t$. Obtain $\hat{\varepsilon}_t$ and the DW statistic (d).

Assume an AR(1) process: $\varepsilon_t = \rho \varepsilon_{t-1} + u_t$, $-1 < \rho < 1$

I. H0: $\rho \le 0$ (no positive autocorrelation)

H1: $\rho > 0$ (positive autocorrelation)

Compare d* with the critical values dL and du:

if d* < dL ==> reject H0

if d* > du ==> do not reject H0

if dL ≤ d* ≤ du ==> the test is inconclusive

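Durbin-Watson test (Cont.)

$DW\ (d) = \dfrac{\sum_{t=2}^{T} (\hat{\varepsilon}_t - \hat{\varepsilon}_{t-1})^2}{\sum_{t=1}^{T} \hat{\varepsilon}_t^2} \approx 2(1 - \hat{\rho})$

$d \approx 2(1 - \hat{\rho}) \Rightarrow \dfrac{d}{2} \approx 1 - \hat{\rho} \Rightarrow \hat{\rho} \approx 1 - \dfrac{d}{2}$

Since $-1 \le \hat{\rho} \le 1$, this implies $0 \le d \le 4$.

[Figure: DW number line marking 0, dL = 1.27, du = 1.45, 2, 4 - du = 2.55, 4 - dL = 2.73, and 4]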
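The sketch below computes d directly from its definition and compares it with $2(1 - \hat\rho)$. A simulated AR(1) series stands in for OLS residuals here. The series assumes $\rho = 0.5$ and N(0, 1) disturbances; it is not the lecture's data.

```python
import random

# Minimal sketch: Durbin-Watson statistic from a residual series, compared with
# the approximation d ≈ 2(1 - rho_hat). A simulated AR(1) series (rho = 0.5,
# N(0, 1) disturbances) stands in for OLS residuals.


def durbin_watson(resid):
    num = sum((resid[t] - resid[t - 1]) ** 2 for t in range(1, len(resid)))
    den = sum(e * e for e in resid)
    return num / den


def rho_hat(resid):
    """OLS slope of e_t on e_{t-1} (no intercept)."""
    num = sum(resid[t] * resid[t - 1] for t in range(1, len(resid)))
    den = sum(e * e for e in resid[:-1])
    return num / den


rng = random.Random(7)
resid = [rng.gauss(0.0, 1.0)]
for _ in range(999):
    resid.append(0.5 * resid[-1] + rng.gauss(0.0, 1.0))

d = durbin_watson(resid)
r = rho_hat(resid)
print(f"d = {d:.3f}, 2(1 - rho_hat) = {2 * (1 - r):.3f}, rho_hat from d = {1 - d / 2:.3f}")
```

The two quantities differ only through the end terms $\hat\varepsilon_1^2$ and $\hat\varepsilon_T^2$, so the approximation tightens as T grows.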

Durbin-Watson test (Cont.)

II. H0: $\rho \ge 0$ (no negative autocorrelation)

H1: $\rho < 0$ (negative autocorrelation)

Use (4 - d) (when d is greater than 2):

if (4 - d) < dL, i.e., 4 - dL < d < 4 ==> reject H0

if dL ≤ (4 - d) ≤ du, i.e., 4 - du ≤ d ≤ 4 - dL ==> inconclusive

if (4 - d) > du, i.e., 2 < d < 4 - du ==> do not reject H0

[Figure: DW number line marking 0, dL = 1.27, du = 1.45, 2, 4 - du = 2.55, 4 - dL = 2.73, and 4]

Durbin-Watson test (Cont.)

III. H0: $\rho = 0$ (no autocorrelation)

H1: $\rho \neq 0$ (two-tailed test for autocorrelation, either positive or negative AR(1))

If d < dL or d > 4 - dL ==> reject H0

If du < d < 4 - du ==> do not reject H0

If dL ≤ d ≤ du or 4 - du ≤ d ≤ 4 - dL ==> inconclusive
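The three decision rules (I, II and III) can be collected into one function. In this sketch, dL and dU are passed in by hand. In practice they come from a DW table for the chosen significance level, n and k. The function name and the `alternative` labels are illustrative choices.

```python
# Minimal sketch of the Durbin-Watson decision rules:
# I: H1 rho > 0, II: H1 rho < 0 (uses 4 - d), III: H1 rho != 0.


def dw_decision(d, d_l, d_u, alternative="positive"):
    if not 0 <= d <= 4:
        raise ValueError("the DW statistic lies between 0 and 4")
    if alternative == "positive":      # I.   H0: rho <= 0  vs  H1: rho > 0
        if d < d_l:
            return "reject H0"
        if d > d_u:
            return "do not reject H0"
        return "inconclusive"
    if alternative == "negative":      # II.  H0: rho >= 0  vs  H1: rho < 0
        return dw_decision(4 - d, d_l, d_u, "positive")
    if alternative == "two-sided":     # III. H0: rho = 0   vs  H1: rho != 0
        if d < d_l or d > 4 - d_l:
            return "reject H0"
        if d_u < d < 4 - d_u:
            return "do not reject H0"
        return "inconclusive"
    raise ValueError("alternative must be 'positive', 'negative' or 'two-sided'")


# The earlier example: DW* = 0.9107 with dL = 1.27, dU = 1.45 (5%, k = 1, n = 24).
print(dw_decision(0.9107, 1.27, 1.45))  # -> reject H0
```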

For example:

$\widehat{UM}_t = 23.1 - 0.078\,CAP_t - 0.146\,CAP_{t-1} + 0.043\,T_t$

(15.6) (2.0) (3.7) (10.3) (t-statistics in parentheses)

$\hat{\sigma} = 0.677$, SSR = 29.3, DW = 0.23, n = 68, $\bar{R}^2 = 0.78$, F = 78.9

(i) Observed DW = 0.23; K = 3 (number of independent variables, excluding the intercept)

(ii) n = 68

(iii) Critical values: at the 0.05 significance level, dL = 1.525 and du = 1.703. At the 0.01 level, dL = 1.372 and du = 1.546.

Since DW = 0.23 < dL at both levels, reject H0: positive autocorrelation exists.

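[Figure: DW number line from 0 to 4 for this example. H0: ρ = 0 is tested against H1: ρ > 0 (positive autocorrelation) and against H1: ρ < 0 (negative autocorrelation) at the 1% and 5% levels. Critical values: dL = 1.372 (1%), 1.525 (5%); du = 1.546 (1%), 1.703 (5%); 4 - du = 2.45 (1%), 2.297 (5%); 4 - dL = 2.63 (1%), 2.475 (5%). The reject-H0, inconclusive and do-not-reject regions are marked. The observed DW = 0.23 lies in the reject-H0 region for positive autocorrelation.]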
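The reported 0.677 appears to be the standard error of the regression. The sketch below checks this by recomputing $\sqrt{SSR/(n-K-1)}$ from the example's SSR, n and K. It also backs out $\hat\rho \approx 1 - d/2$ and applies the positive-autocorrelation rule at both significance levels. All inputs are the example's own numbers.

```python
import math

# Worked arithmetic for the UM example; all inputs are the values reported above.
ssr, n, k, dw = 29.3, 68, 3, 0.23

sigma_hat = math.sqrt(ssr / (n - k - 1))  # standard error of the regression
rho_hat = 1 - dw / 2                      # from d ≈ 2(1 - rho_hat)

critical = {0.05: (1.525, 1.703), 0.01: (1.372, 1.546)}  # (dL, dU) at each level
for level, (d_l, d_u) in critical.items():
    verdict = "reject H0" if dw < d_l else ("inconclusive" if dw <= d_u else "do not reject H0")
    print(f"alpha = {level}: {verdict}")
print(f"sigma_hat = {sigma_hat:.3f}, rho_hat = {rho_hat:.3f}")
```

An implied $\hat\rho$ of about 0.885 indicates very strong positive serial correlation. This is consistent with rejecting H0 at both levels.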

The assumptions underlying the d (DW) statistic:

1. An intercept term must be included.

2. The X's are nonstochastic.

3. It tests only AR(1): $\varepsilon_t = \rho \varepsilon_{t-1} + u_t$, where $u_t \sim iid(0, \sigma_u^2)$.

4. The model must not include the lagged dependent variable (an autoregressive model), e.g.,

$Y_t = \beta_0 + \beta_1 X_{t1} + \beta_2 X_{t2} + \dots + \beta_k X_{tk} + \gamma Y_{t-1} + \varepsilon_t$

5. There are no missing observations.

[Table: example annual data on Y and X beginning in 1970, with some observations missing (N.A.)]

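Lagrange Multiplier (LM) test, also called Durbin's m test, or the Breusch-Godfrey (BG) test of higher-order autocorrelation

H0: $\rho_1 = \rho_2 = \dots = \rho_p = 0$ (no autocorrelation up to order p)

Test procedure:

(1) Run OLS and obtain the residuals $\hat{\varepsilon}_t$.

(2) Regress $\hat{\varepsilon}_t$ on all the regressors in the model plus the additional regressors $\hat{\varepsilon}_{t-1}, \hat{\varepsilon}_{t-2}, \hat{\varepsilon}_{t-3}, \dots, \hat{\varepsilon}_{t-p}$:

$\hat{\varepsilon}_t = \alpha_0 + \alpha_1 X_t + \rho_1 \hat{\varepsilon}_{t-1} + \rho_2 \hat{\varepsilon}_{t-2} + \rho_3 \hat{\varepsilon}_{t-3} + \dots + \rho_p \hat{\varepsilon}_{t-p} + u_t$

Obtain the $R^2$ value from this regression.

(3) Compute the BG statistic: $(n - p)R^2$.

(4) Compare the BG statistic with the critical value of $\chi^2_p$ (p is the order of autocorrelation tested, which is also the degrees of freedom).

(5) If BG > $\chi^2_p$, reject H0: there is higher-order autocorrelation.

If BG < $\chi^2_p$, do not reject H0: there is no evidence of higher-order autocorrelation.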
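Steps (1) to (5) translate directly into code. In the sketch below, the data are simulated with AR(1) errors, $\rho = 0.8$. The first p observations are dropped in the auxiliary regression, matching the $(n-p)R^2$ form; some implementations instead set the missing lagged residuals to zero. The value 5.991 is the 5% critical value of $\chi^2_2$. Function names are illustrative.

```python
import random

# Minimal sketch of the Breusch-Godfrey (LM) test in Y_t = b0 + b1*X_t + eps_t.
# Data are simulated (AR(1) errors, rho = 0.8); 5.991 = 5% critical value of chi-square(2).


def ols(X, y):
    """OLS coefficients from the normal equations (X'X)b = X'y (Gauss-Jordan, partial pivoting)."""
    p = len(X[0])
    A = [[sum(row[i] * row[j] for row in X) for j in range(p)]
         + [sum(row[i] * v for row, v in zip(X, y))] for i in range(p)]
    for c in range(p):
        piv = max(range(c, p), key=lambda r: abs(A[r][c]))
        A[c], A[piv] = A[piv], A[c]
        for r in range(p):
            if r != c:
                f = A[r][c] / A[c][c]
                A[r] = [a - f * b for a, b in zip(A[r], A[c])]
    return [A[i][p] / A[i][i] for i in range(p)]


def r_squared(X, y, b):
    fitted = [sum(bi * xi for bi, xi in zip(b, row)) for row in X]
    m = sum(y) / len(y)
    ssr = sum((v - f) ** 2 for v, f in zip(y, fitted))
    sst = sum((v - m) ** 2 for v in y)
    return 1 - ssr / sst


def breusch_godfrey(x, y, p):
    X = [[1.0, xi] for xi in x]                              # (1) OLS residuals
    b = ols(X, y)
    e = [yi - b[0] - b[1] * xi for xi, yi in zip(x, y)]
    # (2) regress e_t on 1, X_t, e_{t-1}, ..., e_{t-p}; first p observations dropped
    Xa = [[1.0, x[t]] + [e[t - j] for j in range(1, p + 1)] for t in range(p, len(e))]
    ea = e[p:]
    return (len(e) - p) * r_squared(Xa, ea, ols(Xa, ea))    # (3) (n - p) R^2


rng = random.Random(3)
x = [rng.gauss(0.0, 1.0) for _ in range(200)]
eps = [rng.gauss(0.0, 1.0)]
for _ in range(199):
    eps.append(0.8 * eps[-1] + rng.gauss(0.0, 1.0))
y = [1.0 + 2.0 * xi + ei for xi, ei in zip(x, eps)]

bg = breusch_godfrey(x, y, p=2)
print(f"BG = {bg:.2f}:", "reject H0" if bg > 5.991 else "do not reject H0")  # (4)-(5)
```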

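Remedy: 1. First-difference transformation

$Y_t = \beta_0 + \beta_1 X_t + \varepsilon_t$

$Y_{t-1} = \beta_0 + \beta_1 X_{t-1} + \varepsilon_{t-1}$

Assume $\rho = 1$:

$\Rightarrow Y_t - Y_{t-1} = (\beta_0 - \beta_0) + \beta_1 (X_t - X_{t-1}) + (\varepsilon_t - \varepsilon_{t-1})$

$\Rightarrow \Delta Y_t = \beta_1 \Delta X_t + u_t$ (no intercept), since $\varepsilon_t - \varepsilon_{t-1} = u_t$ when $\rho = 1$

2. Add a trend (T)

$Y_t = \beta_0 + \beta_1 X_t + \beta_2 T + \varepsilon_t$

$Y_{t-1} = \beta_0 + \beta_1 X_{t-1} + \beta_2 (T - 1) + \varepsilon_{t-1}$

$\Rightarrow (Y_t - Y_{t-1}) = (\beta_0 - \beta_0) + \beta_1 (X_t - X_{t-1}) + \beta_2 [T - (T - 1)] + (\varepsilon_t - \varepsilon_{t-1})$

$\Rightarrow \Delta Y_t = \beta_1 \Delta X_t + \beta_2 \cdot 1 + \varepsilon'_t$

$\Rightarrow \Delta Y_t = \beta_2^* + \beta_1 \Delta X_t + \varepsilon'_t$

If $\hat{\beta}_2^* > 0$ ==> an upward trend in Y ($\hat{\beta}_2 > 0$).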
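Both remedies can be checked on hypothetical, noise-free data, so that the algebra holds exactly. The data follow $Y_t = 5 + 2X_t$, plus $0.3T$ in the trend case. Remedy 1 regresses $\Delta Y$ on $\Delta X$ through the origin. In remedy 2, the intercept of the differenced regression recovers the trend coefficient.

```python
# Minimal sketch of remedies 1 and 2 on hypothetical, noise-free data:
# Y_t = 5 + 2*X_t (remedy 1) and Y_t = 5 + 2*X_t + 0.3*T (remedy 2).


def first_difference(series):
    return [series[t] - series[t - 1] for t in range(1, len(series))]


def slope_through_origin(x, y):
    """Remedy 1: OLS of DY_t = b1*DX_t with no intercept."""
    return sum(a * b for a, b in zip(x, y)) / sum(a * a for a in x)


def ols_simple(x, y):
    """Remedy 2: OLS of DY_t = b2* + b1*DX_t; the intercept estimates the trend coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    b1 = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return my - b1 * mx, b1


T = list(range(1, 11))
X = [t ** 2 for t in T]                              # hypothetical regressor
Y1 = [5 + 2 * xt for xt in X]                        # no trend
Y2 = [5 + 2 * xt + 0.3 * t for xt, t in zip(X, T)]   # with trend

b1_remedy1 = slope_through_origin(first_difference(X), first_difference(Y1))
trend_hat, b1_remedy2 = ols_simple(first_difference(X), first_difference(Y2))
print(b1_remedy1, trend_hat, b1_remedy2)
```

The intercept 5 disappears in both differenced regressions, exactly as $(\beta_0 - \beta_0)$ does in the derivation.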

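3. Cochrane-Orcutt two-step procedure (CORC), a generalized least squares (GLS) method

(1) Run OLS on $Y_t = \beta_0 + \beta_1 X_t + \varepsilon_t$ and obtain $\hat{\varepsilon}_t$.

(2) Run OLS on $\hat{\varepsilon}_t = \rho \hat{\varepsilon}_{t-1} + u_t$, where $u_t \sim (0, \sigma_u^2)$, and obtain $\hat{\rho}$.

(3) Use $\hat{\rho}$ to transform the variables:

$Y_t^* = Y_t - \hat{\rho} Y_{t-1}$, $X_t^* = X_t - \hat{\rho} X_{t-1}$

(4) Run OLS on $Y_t^* = \beta_0^* + \beta_1^* X_t^* + u_t$, which follows from

$Y_t = \beta_0 + \beta_1 X_t + \varepsilon_t$

$-)\ \hat{\rho} Y_{t-1} = \hat{\rho} \beta_0 + \hat{\rho} \beta_1 X_{t-1} + \hat{\rho} \varepsilon_{t-1}$

$(Y_t - \hat{\rho} Y_{t-1}) = \beta_0 (1 - \hat{\rho}) + \beta_1 (X_t - \hat{\rho} X_{t-1}) + (\varepsilon_t - \hat{\rho} \varepsilon_{t-1})$

so that $\beta_0^* = \beta_0 (1 - \hat{\rho})$ and $\beta_1^* = \beta_1$.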
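Steps (1) to (4) can be sketched in a few lines. The data are simulated: $Y_t = 1 + 2X_t + \varepsilon_t$ with AR(1) errors, $\rho = 0.7$, and normal disturbances. These are all assumptions made for illustration. The intercept is recovered as $\hat\beta_0 = \hat\beta_0^*/(1-\hat\rho)$.

```python
import random

# Minimal sketch of the Cochrane-Orcutt two-step procedure, steps (1)-(4),
# on simulated data (Y_t = 1 + 2*X_t + eps_t, eps_t AR(1) with rho = 0.7).


def ols_simple(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    b1 = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return my - b1 * mx, b1


def cochrane_orcutt_two_step(x, y):
    b0, b1 = ols_simple(x, y)                                    # (1) OLS and residuals
    e = [yi - b0 - b1 * xi for xi, yi in zip(x, y)]
    rho = (sum(e[t] * e[t - 1] for t in range(1, len(e)))
           / sum(v * v for v in e[:-1]))                         # (2) e_t on e_{t-1}
    y_star = [y[t] - rho * y[t - 1] for t in range(1, len(y))]   # (3) first obs is lost
    x_star = [x[t] - rho * x[t - 1] for t in range(1, len(x))]
    b0_star, b1_star = ols_simple(x_star, y_star)                # (4) transformed OLS
    return rho, b0_star / (1 - rho), b1_star                     # beta_0 = beta_0*/(1 - rho)


rng = random.Random(11)
n = 500
x = [0.01 * t + rng.gauss(0.0, 1.0) for t in range(n)]
eps = [rng.gauss(0.0, 1.0)]
for _ in range(n - 1):
    eps.append(0.7 * eps[-1] + rng.gauss(0.0, 1.0))
y = [1.0 + 2.0 * xi + ei for xi, ei in zip(x, eps)]

rho_hat, b0_hat, b1_hat = cochrane_orcutt_two_step(x, y)
print(f"rho = {rho_hat:.3f}, beta_0 = {b0_hat:.3f}, beta_1 = {b1_hat:.3f}")
```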

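4. Cochrane-Orcutt iterative procedure

(5) If the DW test shows that autocorrelation still exists, iterate the procedure from step (4): obtain the residuals $\hat{\varepsilon}_t^*$.

(6) Run OLS on $\hat{\varepsilon}_t^* = \hat{\hat{\rho}} \hat{\varepsilon}_{t-1}^* + u_t'$ and obtain $\hat{\hat{\rho}}$, the second-round estimate of $\rho$ (with $DW_2 \approx 2(1 - \hat{\hat{\rho}})$).

(7) Use $\hat{\hat{\rho}}$ to transform the variables:

$Y_t^{**} = Y_t - \hat{\hat{\rho}} Y_{t-1}$, $X_t^{**} = X_t - \hat{\hat{\rho}} X_{t-1}$

using

$Y_t = \beta_0 + \beta_1 X_t + \varepsilon_t$

$\hat{\hat{\rho}} Y_{t-1} = \hat{\hat{\rho}} \beta_0 + \hat{\hat{\rho}} \beta_1 X_{t-1} + \hat{\hat{\rho}} \varepsilon_{t-1}$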

Cochrane-Orcutt iterative procedure (Cont.)

(8) Run OLS on $Y_t^{**} = \beta_0^{**} + \beta_1^{**} X_t^{**} + \varepsilon_t^{**}$

where $(Y_t - \hat{\hat{\rho}} Y_{t-1}) = \beta_0 (1 - \hat{\hat{\rho}}) + \beta_1 (X_t - \hat{\hat{\rho}} X_{t-1}) + (\varepsilon_t - \hat{\hat{\rho}} \varepsilon_{t-1})$

(9) Check the $DW_3$ statistic. If autocorrelation still exists, go on to the third-round procedure, and so on,

until the estimated $\rho$'s differ only a little ($|\hat{\hat{\rho}} - \hat{\rho}| < 0.01$).
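The iteration in steps (5) to (9) can be sketched as a loop that stops when successive estimates of $\rho$ differ by less than 0.01. This sketch makes an assumption about step (5). In each round it recomputes the residuals from the original equation using the latest $\hat\beta_0$ and $\hat\beta_1$, which is a common way to implement the procedure. The data are simulated as in the two-step case.

```python
import random

# Minimal sketch of the iterative Cochrane-Orcutt procedure. Assumption: each round
# recomputes residuals from the original equation with the latest beta_0, beta_1.


def ols_simple(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    b1 = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return my - b1 * mx, b1


def cochrane_orcutt_iterative(x, y, tol=0.01, max_rounds=50):
    b0, b1 = ols_simple(x, y)
    rho_prev = None
    for rounds in range(1, max_rounds + 1):
        e = [yi - b0 - b1 * xi for xi, yi in zip(x, y)]
        rho = sum(e[t] * e[t - 1] for t in range(1, len(e))) / sum(v * v for v in e[:-1])
        y_s = [y[t] - rho * y[t - 1] for t in range(1, len(y))]
        x_s = [x[t] - rho * x[t - 1] for t in range(1, len(x))]
        b0_s, b1_s = ols_simple(x_s, y_s)
        b0, b1 = b0_s / (1 - rho), b1_s        # undo beta_0* = beta_0 (1 - rho)
        if rho_prev is not None and abs(rho - rho_prev) < tol:
            break                              # successive rho's differ by < tol
        rho_prev = rho
    return rho, b0, b1, rounds


rng = random.Random(13)
n = 500
x = [0.01 * t + rng.gauss(0.0, 1.0) for t in range(n)]
eps = [rng.gauss(0.0, 1.0)]
for _ in range(n - 1):
    eps.append(0.7 * eps[-1] + rng.gauss(0.0, 1.0))
y = [1.0 + 2.0 * xi + ei for xi, ei in zip(x, eps)]

rho_hat, b0_hat, b1_hat, rounds = cochrane_orcutt_iterative(x, y)
print(f"rounds = {rounds}, rho = {rho_hat:.3f}, beta_1 = {b1_hat:.3f}")
```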

Example: Studenmund (2006) Exercise 14 and Table 9.1, pp. 342-344

(1) Low DW statistic: obtain the residuals. (Usually, after you run a regression, the residuals are immediately stored in this icon.)

(2) Give the residual series a new name. Run a regression of the current residual on the lagged residual, $\hat{\varepsilon}_t = \rho \hat{\varepsilon}_{t-1} + u_t$, and obtain the estimated $\hat{\rho}$ ("rho").

(3) Transform Y* and X*: new series are created, but each loses its first observation.

(4) Run the transformed regression and obtain the estimated result, which is improved.

(5)~(9)

The Cochrane-Orcutt iterative procedure in EViews

The command shown in the screenshot is EViews' command to run the iterative procedure.

The result of the iterative procedure: the output reports the estimated $\rho$, each variable is transformed, and the DW statistic is improved.

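Generalized least squares (GLS)

5. Prais-Winsten transformation

$Y_t = \beta_0 + \beta_1 X_t + \varepsilon_t, \quad t = 1, \dots, T$ (1)

Assume AR(1): $\varepsilon_t = \rho \varepsilon_{t-1} + u_t$, $-1 < \rho < 1$

$Y_{t-1} = \beta_0 + \beta_1 X_{t-1} + \varepsilon_{t-1}$ (2)

(1) - $\rho$ × (2) ==> $(Y_t - \rho Y_{t-1}) = \beta_0 (1 - \rho) + \beta_1 (X_t - \rho X_{t-1}) + (\varepsilon_t - \rho \varepsilon_{t-1})$

GLS ==> $Y_t^* = \beta_0^* + \beta_1^* X_t^* + u_t$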

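To avoid losing the first observation, the first observations $Y_1^*$ and $X_1^*$ should be transformed as:

$Y_1^* = \sqrt{1 - \hat{\rho}^2}\, Y_1$, $X_1^* = \sqrt{1 - \hat{\rho}^2}\, X_1$

but $Y_2^* = Y_2 - \hat{\rho} Y_1$; $X_2^* = X_2 - \hat{\rho} X_1$

$Y_3^* = Y_3 - \hat{\rho} Y_2$; $X_3^* = X_3 - \hat{\rho} X_2$

…

$Y_t^* = Y_t - \hat{\rho} Y_{t-1}$; $X_t^* = X_t - \hat{\rho} X_{t-1}$

(The intercept's regressor is transformed the same way: it equals $\sqrt{1 - \hat{\rho}^2}$ for t = 1 and $(1 - \hat{\rho})$ for t ≥ 2.)

[Screenshot: editing the first observation of the transformed series in EViews to restore it]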
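Here is a sketch of the Prais-Winsten transformation for a given $\hat\rho$. Observation 1 is kept by scaling it with $\sqrt{1-\hat\rho^2}$. The constant column is transformed the same way, so its coefficient is $\beta_0$ itself. The data are hypothetical and noise-free, following $Y_t = 3 + 1.5X_t$, so the regression should recover 3 and 1.5 exactly.

```python
import math

# Minimal sketch of the Prais-Winsten transformation for a given rho_hat.
# The constant is transformed like X and Y, so its coefficient is beta_0 itself.


def prais_winsten_transform(x, y, rho):
    s = math.sqrt(1 - rho ** 2)
    const = [s] + [1 - rho] * (len(y) - 1)
    x_star = [s * x[0]] + [x[t] - rho * x[t - 1] for t in range(1, len(x))]
    y_star = [s * y[0]] + [y[t] - rho * y[t - 1] for t in range(1, len(y))]
    return const, x_star, y_star


def ols_two_regressors(z1, z2, y):
    """OLS of y = a*z1 + b*z2 (no separate intercept) via the 2x2 normal equations."""
    s11 = sum(a * a for a in z1)
    s22 = sum(b * b for b in z2)
    s12 = sum(a * b for a, b in zip(z1, z2))
    s1y = sum(a * v for a, v in zip(z1, y))
    s2y = sum(b * v for b, v in zip(z2, y))
    det = s11 * s22 - s12 ** 2
    return (s22 * s1y - s12 * s2y) / det, (s11 * s2y - s12 * s1y) / det


x = [1.0, 4.0, 2.0, 8.0, 5.0, 7.0]
y = [3.0 + 1.5 * v for v in x]          # hypothetical: Y_t = 3 + 1.5 X_t exactly
const, x_star, y_star = prais_winsten_transform(x, y, rho=0.6)
b0_hat, b1_hat = ols_two_regressors(const, x_star, y_star)
print(len(y_star), b0_hat, b1_hat)      # all 6 observations are kept
```

Unlike the Cochrane-Orcutt transformation, no observation is lost. With only a handful of observations, that can matter.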

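$Y_t = \beta_0 + \beta_1 X_t + \varepsilon_t$

6. Durbin's two-step method:

Since $(Y_t - \rho Y_{t-1}) = \beta_0 (1 - \rho) + \beta_1 (X_t - \rho X_{t-1}) + u_t$

$\Rightarrow Y_t = \beta_0^* + \beta_1 X_t - \rho \beta_1 X_{t-1} + \rho Y_{t-1} + u_t$

I. Run OLS on this specification: $Y_t = \beta_0^* + \beta_1^* X_t - \beta_2^* X_{t-1} + \beta_3^* Y_{t-1} + u_t$

Obtain $\hat{\beta}_3^*$ as an estimate of $\rho$ (RHO).

II. Transform the variables:

$Y_t^* = Y_t - \hat{\beta}_3^* Y_{t-1}$, i.e., $Y_t^* = Y_t - \hat{\rho} Y_{t-1}$

$X_t^* = X_t - \hat{\beta}_3^* X_{t-1}$, i.e., $X_t^* = X_t - \hat{\rho} X_{t-1}$

III. Run OLS on the model $Y_t^* = \beta_0^* + \beta_1^* X_t^* + \varepsilon'_t$,

where $\hat{\beta}_0^* = \hat{\beta}_0 (1 - \hat{\rho})$ and $\hat{\beta}_1^* = \hat{\beta}_1$.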
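Durbin's method differs from Cochrane-Orcutt only in how $\rho$ is estimated. Here it is taken as the coefficient on $Y_{t-1}$ in the step I regression. The sketch below uses simulated data: $Y_t = 1 + 2X_t + \varepsilon_t$ with AR(1) errors, $\rho = 0.6$, and normal disturbances. These are assumptions made for illustration. A small Gauss-Jordan OLS solver keeps the block self-contained.

```python
import random

# Minimal sketch of Durbin's two-step method, steps I-III, on simulated data.


def ols(X, y):
    """OLS coefficients from the normal equations (X'X)b = X'y (Gauss-Jordan, partial pivoting)."""
    p = len(X[0])
    A = [[sum(row[i] * row[j] for row in X) for j in range(p)]
         + [sum(row[i] * v for row, v in zip(X, y))] for i in range(p)]
    for c in range(p):
        piv = max(range(c, p), key=lambda r: abs(A[r][c]))
        A[c], A[piv] = A[piv], A[c]
        for r in range(p):
            if r != c:
                f = A[r][c] / A[c][c]
                A[r] = [a - f * b for a, b in zip(A[r], A[c])]
    return [A[i][p] / A[i][i] for i in range(p)]


def durbin_two_step(x, y):
    # I. Y_t on 1, X_t, X_{t-1}, Y_{t-1}: the coefficient on Y_{t-1} estimates rho
    rows = [[1.0, x[t], x[t - 1], y[t - 1]] for t in range(1, len(y))]
    rho = ols(rows, y[1:])[3]
    # II. Transform the variables (the first observation is lost)
    y_s = [y[t] - rho * y[t - 1] for t in range(1, len(y))]
    x_s = [x[t] - rho * x[t - 1] for t in range(1, len(x))]
    # III. OLS on Y*_t = beta_0* + beta_1* X*_t, then beta_0 = beta_0* / (1 - rho)
    b0_s, b1_s = ols([[1.0, v] for v in x_s], y_s)
    return rho, b0_s / (1 - rho), b1_s


rng = random.Random(5)
n = 2000
x = [0.005 * t + rng.gauss(0.0, 1.0) for t in range(n)]
eps = [rng.gauss(0.0, 1.0)]
for _ in range(n - 1):
    eps.append(0.6 * eps[-1] + rng.gauss(0.0, 1.0))
y = [1.0 + 2.0 * xi + ei for xi, ei in zip(x, eps)]

rho_hat, b0_hat, b1_hat = durbin_two_step(x, y)
print(f"rho = {rho_hat:.3f}, beta_0 = {b0_hat:.3f}, beta_1 = {b1_hat:.3f}")
```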

[Screenshot: EViews regression including the lagged term of Y, from which the estimated $\hat{\rho}$ ("rho") is obtained]

Limitation of Durbin-Watson Test:

Lagged Dependent Variable and Autocorrelation

$Y_t = \beta_0 + \beta_1 X_{1t} + \beta_2 X_{2t} + \dots + \beta_k X_{kt} + \lambda_1 Y_{t-1} + \varepsilon_t$

The DW statistic will often be close to 2, so DW is not reliable: it does not converge to $2(1 - \hat{\rho})$.

Durbin h test:

Compute $h^* = \hat{\rho} \sqrt{\dfrac{n}{1 - n \cdot \text{Var}(\hat{\lambda}_1)}}$

Compare $h^*$ with $Z_c$, a critical value of the N(0,1) normal distribution.

If $|h^*| > Z_c$ ==> reject H0: $\rho = 0$ (no autocorrelation).

Durbin h test (example):

$h^* = \hat{\rho} \sqrt{\dfrac{n}{1 - n \cdot \text{Var}(\hat{\lambda}_1)}} = 0.7772 \sqrt{\dfrac{24}{1 - 24 \times (0.10617)^2}} = 4.458 > Z_c$

Therefore, reject H0: $\rho = 0$ (no autocorrelation).
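The h statistic is a one-line formula, but it is undefined when $n \cdot \text{Var}(\hat\lambda_1) \ge 1$. The sketch below guards against that case and reproduces the example's value. The critical value 1.96 (two-sided 5% level) is an assumption, since the example does not state which $Z_c$ it uses.

```python
import math

# Minimal sketch of the Durbin h statistic, using the example's numbers.


def durbin_h(rho_hat, n, se_lagged_y):
    """h = rho_hat * sqrt(n / (1 - n * Var(lambda_1_hat))), where Var(lambda_1_hat) is
    the squared standard error of the coefficient on Y_{t-1}."""
    denom = 1 - n * se_lagged_y ** 2
    if denom <= 0:
        raise ValueError("n * Var(lambda_1_hat) >= 1: the h statistic cannot be computed")
    return rho_hat * math.sqrt(n / denom)


h = durbin_h(0.7772, 24, 0.10617)
z_c = 1.96  # assumed: two-sided 5% critical value of N(0, 1)
print(f"h = {h:.3f}:", "reject H0" if abs(h) > z_c else "do not reject H0")
```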