18-k-nn - University of Iowa

K-means method for Signal Compression: Vector Quantization

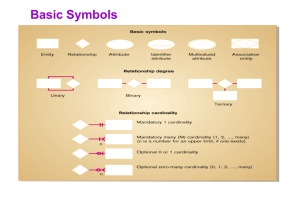

Voronoi Region

Blocks of signals:

A sequence of audio.

A block of image pixels.

Formally: vector example: (0.2, 0.3, 0.5, 0.1)

A vector quantizer maps k-dimensional vectors in the vector space $\mathbb{R}^k$ into a finite set of vectors $Y = \{y_i : i = 1, 2, \ldots, N\}$.

Each vector $y_i$ is called a code vector or a codeword, and the set of all the codewords is called a codebook. Associated with each codeword $y_i$ is a nearest-neighbor region called its Voronoi region, defined by:

$$V_i = \{x \in \mathbb{R}^k : \|x - y_i\| \le \|x - y_j\| \text{ for all } j \ne i\}$$

The set of Voronoi regions partitions the entire space $\mathbb{R}^k$.
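As a minimal sketch of what the mapping means in practice: quantizing a vector amounts to finding the codeword whose Voronoi region contains it, i.e. the nearest codeword. The function name `nearest_codeword` and the codebook values below are illustrative assumptions, not taken from the slides.

```python
import math

def nearest_codeword(x, codebook):
    """Return the index of the codeword closest to x (Euclidean distance)."""
    return min(range(len(codebook)), key=lambda i: math.dist(x, codebook[i]))

# Hypothetical 2-dimensional codebook with N = 3 codewords.
codebook = [(0.0, 0.0), (1.0, 1.0), (0.0, 1.0)]

index = nearest_codeword((0.9, 0.8), codebook)  # what the encoder would send
reconstruction = codebook[index]                # what the decoder would look up
```

Only the index needs to be transmitted; the decoder recovers the codeword from its own copy of the codebook.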

Two Dimensional Voronoi Diagram

Codewords in 2-dimensional space. Input vectors are

marked with an x, codewords are marked with red circles,

and the Voronoi regions are separated with boundary lines.

The Schematic of a Vector Quantizer (signal compression)

Compression Formula

Amount of compression:

Codebook size is K, input vector of dimension L.

In order to inform the decoder of which code vector is selected, we need to use $\log_2 K$ bits.

• E.g. need 8 bits to represent 256 code vectors.

Rate: each code vector contains the reconstruction values of L source output samples, so the number of bits per vector component is $\log_2 K / L$.

K is called the “level” of the vector quantizer.
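The two quantities above can be checked with a few lines of arithmetic. This is a small sketch; the function names are hypothetical, and rounding the index size up to a whole number of bits is an assumption for codebook sizes that are not powers of two.

```python
import math

def index_bits(K):
    """Bits needed to name one of K code vectors with a fixed-length index."""
    return math.ceil(math.log2(K))

def rate_bits_per_sample(K, L):
    """Bits per vector component: log2(K) / L."""
    return math.log2(K) / L

bits = index_bits(256)                 # the slide's example: 256 code vectors
rate = rate_bits_per_sample(256, 16)   # e.g. vectors of dimension L = 16
```

With K = 256 and L = 16 this gives 8 bits per vector and 0.5 bits per sample.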

Vector Quantizer Algorithm

1. Determine the number of codewords, N, or the size of the codebook.

2. Select N codewords at random, and let that be the initial codebook. The initial codewords can be randomly chosen from the set of input vectors.

3. Using the Euclidean distance measure, cluster the vectors around each codeword. This is done by taking each input vector and finding the Euclidean distance between it and each codeword. The input vector belongs to the cluster of the codeword that yields the minimum distance.

Vector Quantizer Algorithm (contd.)

4. Compute the new set of codewords. This is done by obtaining the average of each cluster: add the components of each vector and divide by the number of vectors in the cluster.

$$y_i = \frac{1}{m} \sum_{j=1}^{m} x_{ij}$$

where i is the component of each vector (x, y, z, ... directions), m is the number of vectors in the cluster, and $x_{ij}$ is component i of the j-th vector in the cluster.

5. Repeat steps 3 and 4 until either the codewords don't change or the change in the codewords is small.
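The loop of assigning vectors to their nearest codeword and then averaging each cluster can be sketched as follows. This is an illustrative version under stated assumptions: the function name, the `initial`, `tol`, `max_iter` and `seed` parameters, and the choice to leave the codeword of an empty cluster unchanged are all hypothetical details not given on the slides.

```python
import math
import random

def train_codebook(vectors, n_codewords, initial=None, tol=1e-9,
                   max_iter=100, seed=0):
    """Sketch of codebook training; returns a list of codeword tuples."""
    if initial is None:
        # Initial codebook: N input vectors picked at random.
        initial = random.Random(seed).sample(list(vectors), n_codewords)
    codebook = [tuple(map(float, c)) for c in initial]
    for _ in range(max_iter):
        # Assignment: each input vector joins the cluster of its nearest codeword.
        clusters = [[] for _ in codebook]
        for v in vectors:
            nearest = min(range(len(codebook)),
                          key=lambda i: math.dist(v, codebook[i]))
            clusters[nearest].append(v)
        # Update: each codeword becomes the component-wise mean of its cluster.
        new_codebook = []
        for old, cluster in zip(codebook, clusters):
            if cluster:
                m = len(cluster)
                new_codebook.append(tuple(sum(comp) / m for comp in zip(*cluster)))
            else:
                new_codebook.append(old)  # assumption: keep an unused codeword
        change = max(math.dist(a, b) for a, b in zip(codebook, new_codebook))
        codebook = new_codebook
        if change <= tol:  # stop when the codewords no longer move
            break
    return codebook

vectors = [(0, 0), (0, 1), (1, 0), (1, 1),
           (10, 10), (10, 11), (11, 10), (11, 11)]
codebook = train_codebook(vectors, 2, initial=[(0, 0), (11, 11)])
```

The initial codebook is passed explicitly here so the run is reproducible; with random initialization the result can depend on the starting codewords.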

Other Algorithms

Problem: k-means is a greedy algorithm and may fall into a local minimum.

Four methods for selecting initial vectors:

• Random

• Splitting (with perturbation vector)

• Train with different subsets

• PNN (pairwise nearest neighbor)

Empty cell problem:

No input vector is assigned to an output vector (codeword).

Solution: give the unused codeword to another cluster, e.g. the most populated cluster.
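One possible reading of this fix, sketched below, is to move each unused codeword into the most populated cluster by replacing it with a randomly chosen member of that cluster. The slides do not say how the new codeword is chosen, so the function name `fix_empty_cells` and the random choice are assumptions.

```python
import random

def fix_empty_cells(codebook, clusters, rng=random):
    """Replace the codeword of every empty cluster (hypothetical heuristic)."""
    codebook = list(codebook)
    for i, cluster in enumerate(clusters):
        if not cluster:
            biggest = max(clusters, key=len)     # most populated cluster
            codebook[i] = rng.choice(biggest)    # assumption: pick a member at random
    return codebook

clusters = [[(0, 0), (1, 0), (0, 1)], []]        # the second cell is empty
fixed = fix_empty_cells([(0, 0), (5, 5)], clusters)
```

After the replacement, the next assignment pass splits the large cluster between its old codeword and the relocated one.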

VQ for image compression

Taking blocks of images as vectors: an N × M block gives L = NM.

If there are K vectors in the codebook:

• need to use $\log_2 K$ bits per block.

• Rate: $\log_2 K / L$ bits per pixel.

The higher the value of K, the better the quality, but the lower the compression ratio.

Overhead to transmit the codebook:

| Codebook size K | Overhead (bits/pixel) |
|---|---|
| 16 | 0.03125 |
| 64 | 0.125 |
| 256 | 0.5 |
| 1024 | 2 |

Train with a set of images.
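The slides do not state the setup behind the overhead figures. As a hedged sketch, they are consistent with sending the whole codebook along with a single 256 × 256 image, using 4 × 4 blocks (L = 16) and 8 bits per pixel; all three values are assumptions chosen because they reproduce the numbers.

```python
def codebook_overhead(K, L=16, bits_per_sample=8, n_pixels=256 * 256):
    """Bits per pixel spent on transmitting a K-entry codebook with one image."""
    return K * L * bits_per_sample / n_pixels

overheads = {K: codebook_overhead(K) for K in (16, 64, 256, 1024)}
```

Under these assumptions a 1024-entry codebook costs 2 bits per pixel on its own, which is why a codebook trained once on a set of images and shared in advance is attractive.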

K-Nearest Neighbor

Learning

22c:145

University of Iowa

Different Learning Methods

Parametric Learning

The target function is described by a set of

parameters (examples are forgotten)

E.g., structure and weights of a neural network

Instance-based Learning

Learning=storing all training instances

Classification = assigning a target function value to a new instance

Referred to as “Lazy” learning

Instance-based Learning

It's very similar to a

Desktop!!

General Idea of Instance-based Learning

Learning: store all the data instances

Performance:

when a new query instance is

encountered

• retrieve a similar set of related instances

from memory

• use to classify the new query

Pros and Cons of Instance-Based Learning

Pros

Can construct a different approximation to the

target function for each distinct query

instance to be classified

Can use more complex, symbolic

representations

Cons

Cost of classification can be high

Uses all attributes (does not learn which are most important)

Instance-based Learning

K-Nearest Neighbor Algorithm

Weighted Regression

Case-based reasoning

k-nearest neighbor (knn)

learning

Most basic type of instance learning

Assumes all instances are points in

n-dimensional space

A distance measure is needed to

determine the “closeness” of

instances

Classify an instance by finding its

nearest neighbors and picking the

most popular class among the

neighbors

1-Nearest Neighbor

3-Nearest Neighbor

Important Decisions

Distance measure

Value of k (usually odd)

Voting mechanism

Memory indexing

Euclidean Distance

Typically used for real valued attributes

An instance x (often called a feature vector) is described by

$$\langle a_1(x), a_2(x), \ldots, a_n(x) \rangle$$

Distance between two instances $x_i$ and $x_j$:

$$d(x_i, x_j) = \sqrt{\sum_{r=1}^{n} \left(a_r(x_i) - a_r(x_j)\right)^2}$$

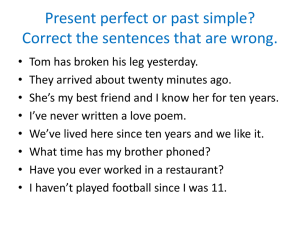

Discrete Valued Target Function

Training algorithm:

For each training example <x, f(x)>, add the

example to the list training_examples

Classification algorithm:

Given a query instance xq to be classified.

Let x1…xk be the k training examples nearest to xq

Return

$$\hat{f}(x_q) \leftarrow \arg\max_{v \in V} \sum_{i=1}^{k} \delta(v, f(x_i))$$

where V is the set of possible target values, $\delta(a, b) = 1$ if $a = b$, and $\delta(a, b) = 0$ otherwise.
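The training and classification steps can be sketched in a few lines. This is a minimal illustration assuming numeric feature vectors and Euclidean distance; the function name `knn_classify` and the sample points are made up for the example.

```python
import math
from collections import Counter

def knn_classify(training_examples, xq, k):
    """training_examples is a list of (x, f(x)) pairs; x is a tuple of numbers."""
    # The k stored examples nearest to the query.
    nearest = sorted(training_examples, key=lambda ex: math.dist(ex[0], xq))[:k]
    # Most common target value among them (the arg max over vote counts).
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# "Training" is just storing the examples.
training_examples = [((1.0, 1.0), "+"), ((1.2, 0.8), "+"),
                     ((3.0, 3.2), "-"), ((2.9, 3.1), "-"), ((1.6, 1.6), "-")]

label_1nn = knn_classify(training_examples, (1.5, 1.5), k=1)
label_3nn = knn_classify(training_examples, (1.5, 1.5), k=3)
```

For this query the single nearest neighbor is a "-" point, while two of the three nearest are "+", so 1-NN and 3-NN disagree.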

Continuous valued target function

Algorithm computes the mean value

of the k nearest training examples

rather than the most common value

Replace the final line in the previous algorithm with

$$\hat{f}(x_q) \leftarrow \frac{\sum_{i=1}^{k} f(x_i)}{k}$$
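For a continuous target the only change is the last step: average the neighbors' values instead of voting. A small sketch, with a hypothetical function name and made-up data:

```python
import math

def knn_regress(training_examples, xq, k):
    """Mean target value of the k training examples nearest to xq."""
    nearest = sorted(training_examples, key=lambda ex: math.dist(ex[0], xq))[:k]
    return sum(fx for _, fx in nearest) / k

examples = [((0.0,), 1.0), ((1.0,), 3.0), ((2.0,), 5.0), ((10.0,), 50.0)]
estimate = knn_regress(examples, (0.9,), k=3)
```

Here the three nearest points have values 3.0, 1.0 and 5.0, so the estimate is 3.0; the distant point at 10.0 has no influence.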

Training dataset

| Customer ID | Debt | Income | Marital Status | Risk |
|---|---|---|---|---|
| Abel | High | High | Married | Good |
| Ben | Low | High | Married | Doubtful |
| Candy | Medium | Very low | Unmarried | Poor |
| Dale | Very high | Low | Married | Poor |
| Ellen | High | Low | Married | Poor |
| Fred | High | Very low | Married | Poor |
| George | Low | High | Unmarried | Doubtful |
| Harry | Low | Medium | Married | Doubtful |
| Igor | Very low | Very high | Married | Good |
| Jack | Very high | Medium | Married | Poor |

k-nn

K=3

Distance

Score for an attribute is 1 for a match

and 0 otherwise

The total is the sum of the scores over all attributes; since a match scores 1, a higher total means a closer neighbor

Voting scheme: proportionate

voting in case of ties

Query:

| Customer ID | Debt | Income | Marital Status | Risk |
|---|---|---|---|---|
| Zeb | High | Medium | Married | ? |

Query:

| Customer ID | Debt | Income | Marital Status | Risk |
|---|---|---|---|---|
| Yong | Low | High | Married | ? |

Query:

| Customer ID | Debt | Income | Marital Status | Risk |
|---|---|---|---|---|
| Vasco | High | Low | Married | ? |
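The three queries can be worked through with a short script. This is a sketch under one possible reading of "proportionate voting in case of ties": neighbors that score strictly above the k-th best each get one vote, and those tied with the k-th best share the remaining votes equally. That tie rule, the function names, and the lowercase normalization of "Very high"/"Very low" are assumptions; the slides do not show the expected answers.

```python
from collections import defaultdict

# (debt, income, marital status) -> risk, copied from the training dataset.
TRAINING = [
    (("High", "High", "Married"), "Good"),            # Abel
    (("Low", "High", "Married"), "Doubtful"),         # Ben
    (("Medium", "Very low", "Unmarried"), "Poor"),    # Candy
    (("Very high", "Low", "Married"), "Poor"),        # Dale
    (("High", "Low", "Married"), "Poor"),             # Ellen
    (("High", "Very low", "Married"), "Poor"),        # Fred
    (("Low", "High", "Unmarried"), "Doubtful"),       # George
    (("Low", "Medium", "Married"), "Doubtful"),       # Harry
    (("Very low", "Very high", "Married"), "Good"),   # Igor
    (("Very high", "Medium", "Married"), "Poor"),     # Jack
]

def match_score(a, b):
    """Number of attributes on which two customers agree (higher = closer)."""
    return sum(1 for u, v in zip(a, b) if u == v)

def classify(query, k=3):
    scored = sorted(((match_score(x, query), risk) for x, risk in TRAINING),
                    key=lambda t: t[0], reverse=True)
    cutoff = scored[k - 1][0]                  # score of the k-th best neighbor
    sure = [r for s, r in scored if s > cutoff]
    tied = [r for s, r in scored if s == cutoff]
    votes = defaultdict(float)
    for r in sure:
        votes[r] += 1.0
    share = (k - len(sure)) / len(tied)        # tied neighbors split what is left
    for r in tied:
        votes[r] += share
    return max(votes, key=votes.get)

QUERIES = {
    "Zeb": ("High", "Medium", "Married"),
    "Yong": ("Low", "High", "Married"),
    "Vasco": ("High", "Low", "Married"),
}
results = {name: classify(q) for name, q in QUERIES.items()}
```

Under this tie rule the script classifies Zeb as Poor, Yong as Doubtful and Vasco as Poor. For Zeb, five customers tie at two matching attributes, so the three votes are shared among them and Poor collects the largest share.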

Voronoi Diagram

Decision surface formed by the training

examples of two attributes

Examples of one attribute

Distance-Weighted Nearest Neighbor Algorithm

Assign weights to the neighbors

based on their ‘distance’ from the

query point

The weight may be the inverse square of the distance, e.g. $w_i = 1 / d(x_q, x_i)^2$

All training points may influence a particular instance (Shepard’s method)
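A weighted vote can be sketched as below, assuming inverse-square weights and Euclidean distance. The function name, the convention that `k=None` means "use every training point", and the handling of a query that coincides with a training point are illustrative assumptions.

```python
import math
from collections import defaultdict

def weighted_knn_classify(training_examples, xq, k=None):
    """Distance-weighted vote; k=None lets all training points contribute."""
    ranked = sorted(training_examples, key=lambda ex: math.dist(ex[0], xq))
    neighbors = ranked if k is None else ranked[:k]
    votes = defaultdict(float)
    for x, label in neighbors:
        d = math.dist(x, xq)
        if d == 0.0:
            return label             # exact match: avoid dividing by zero
        votes[label] += 1.0 / d ** 2  # inverse-square weight
    return max(votes, key=votes.get)

examples = [((0.0,), "a"), ((3.0,), "b"), ((4.0,), "b")]
label = weighted_knn_classify(examples, (1.0,))
```

An unweighted vote over these three points would return "b" (two votes to one), but the single "a" point is much closer to the query, so its weight of 1 outweighs 1/4 + 1/9 and the weighted result is "a".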

Kernel function for Distance-Weighted Nearest Neighbor

Examples of one attribute

Remarks

+ Highly effective inductive inference method for noisy training data and complex target functions

+ Target function for a whole space may be described as a combination of less complex local approximations

+ Learning is very simple

- Classification is time consuming

Curse of Dimensionality

When the dimensionality increases, the volume of the space increases so fast that the available data becomes sparse. This sparsity is problematic for any method that requires statistical significance.

Curse of Dimensionality

Suppose there are N data points of dimension n in the space $[-1/2, 1/2]^n$.

The k-neighborhood of a point is defined to be the smallest hypercube containing its k nearest neighbors.

Let d be the average side length of a k-neighborhood. Then the volume of an average hypercube is $d^n$.

The whole space has volume $1^n = 1$, and with the points spread uniformly an average k-neighborhood holds a fraction k/N of them, so $d^n / 1^n = k/N$, or $d = (k/N)^{1/n}$.

$d = (k/N)^{1/n}$

| N | k | n | d |
|---|---|---|---|
| 1,000,000 | 10 | 2 | 0.003 |
| 1,000,000 | 10 | 3 | 0.02 |
| 1,000,000 | 10 | 17 | 0.5 |
| 1,000,000 | 10 | 200 | 0.94 |

When n is big, all the points are outliers.
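The side lengths can be recomputed directly from the formula for the side length of an average k-neighborhood, (k/N) raised to the power 1/n. A short sketch, with a hypothetical function name:

```python
def neighborhood_side(N, k, n):
    """Average side length of a k-neighborhood in the unit hypercube."""
    return (k / N) ** (1.0 / n)

sides = {n: neighborhood_side(1_000_000, 10, n) for n in (2, 3, 17, 200)}
```

The computed values are roughly 0.0032, 0.022, 0.51 and 0.94, in line with the tabulated ones. At n = 200 the cube holding just 10 of a million points spans about 94% of each axis, so "nearest" neighbors are hardly local any more.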
