Chapter 8 PowerPoint

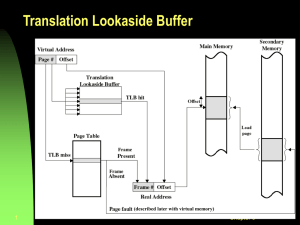

Operating System Concepts, Chapter 8
CS 355 Operating Systems
Dr. Matthew Wright

Background
• A process generates a stream of memory requests.
• Memory access takes many CPU clock cycles, during which the processor may have to stall as it waits for data.
• The operating system and hardware work together to:
  – Provide the data that the process requests
  – Protect data from improper access by another process
• We will ignore details about caches, because they are managed by the hardware.

Address Binding
Address binding involves converting a variable in the source program to a physical address in memory. This happens in multiple stages:
1. Compile time: If the memory location of the program is known when it is compiled, then absolute code can be generated. If the location changes, the code must be recompiled.
2. Load time: If the memory location is not known at compile time, the compiler must generate relocatable code. Binding may happen when the program is loaded into memory.
3. Execution time: If the process can be moved during its execution from one memory segment to another, then binding may be delayed until run time. This is the most common method, though it requires hardware support.

Base and Limit Registers
• Each process has a separate memory space.
• Protection can be achieved by using a base register and a limit register.
• Only the operating system (in kernel mode) can set the base and limit registers.
• If the CPU requests an absolute memory location M, the hardware verifies that base ≤ M < base + limit. If this is not the case, the hardware generates a trap to the operating system.

Logical and Physical Addresses
• Logical address: generated by a process running on the CPU, also called a virtual address
• Physical address: seen by the memory unit
• Memory-Management Unit (MMU): hardware device that maps logical addresses to physical addresses
This simple MMU adds the value stored in a relocation register to the logical addresses that arrive from the CPU.
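The base/limit check and the relocation-register MMU described above can be sketched in a few lines of Python. This is only an illustration, not how any particular hardware implements it: the names `AddressTrap`, `check_access`, and `relocate` are hypothetical, the register values are made-up examples, and the limit check inside `relocate` is an assumption borrowed from the protection rule rather than something this slide states.

```python
class AddressTrap(Exception):
    """Hypothetical stand-in for the hardware trap to the operating system."""


def check_access(address, base, limit):
    """Allow an absolute address only if base <= address < base + limit."""
    if not (base <= address < base + limit):
        raise AddressTrap(f"address {address} outside [{base}, {base + limit})")
    return address


def relocate(logical, relocation, limit):
    """Simple MMU sketch: bounds-check the logical address (assumed step),
    then add the relocation register to obtain the physical address."""
    if not (0 <= logical < limit):
        raise AddressTrap(f"logical address {logical} exceeds limit {limit}")
    return logical + relocation


if __name__ == "__main__":
    # Illustrative values only: relocation register 14000, limit 1000.
    print(relocate(346, 14000, 1000))  # 346 + 14000
    try:
        check_access(500000, base=300040, limit=120900)
    except AddressTrap as trap:
        print("trap:", trap)
```

With a relocation register of 14000, logical address 346 maps to physical address 14346, while any address at or beyond base + limit raises the trap instead of reaching memory.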
Fundamental Problem
Problems
• Main memory is not large enough to hold all the programs on a system.
• Main memory is volatile.
• Memory requirements of processes may change over time.
Solutions
• Swapping: moving processes between memory and disk
• Paging: allocating fixed-size blocks of memory to processes
• Segmentation: allocating blocks of memory to processes according to the needs of constructs within each process

Swapping
• A process can be swapped temporarily out of memory to a backing store, and then brought back into memory for continued execution.
• Backing store: fast disk large enough to accommodate copies of all memory images for all users
• Roll out, roll in: swapping variant used for priority-based scheduling algorithms; a lower-priority process is swapped out so that a higher-priority process can be loaded and executed
• The major part of swap time is transfer time. Total transfer time is directly proportional to the amount of memory swapped.
• Swapping is slow, but it can be necessary if the main memory cannot hold all running processes.
• Modified versions of swapping are found on many systems (e.g., UNIX, Linux, and Windows).

Memory Allocation
• How do we allocate memory to processes?
• Contiguous memory allocation: each process is contained in a single contiguous section of memory
• The OS reserves some memory for itself (often at low addresses).
• When the scheduler selects a process for execution, the dispatcher sets the relocation (base) and limit registers.
• For protection, every requested address is checked against these registers.

Memory Allocation
• We could divide memory into various partitions, each of which holds a process.
• Variable-partition scheme: the OS keeps a table indicating which parts of memory are occupied and which are available.
• A block of available memory is called a hole.
• If a new process arrives, the OS finds a hole for it.
• If a hole is too big for the process, the OS splits it into two parts: one for the process, and one that is returned to the set of holes.
• Two adjacent holes can be combined to make one larger hole.

Memory Allocation
Dynamic storage-allocation problem: How do we choose where to place a process from a list of holes?
1. First fit: allocate the first hole that is big enough
2. Best fit: allocate the smallest hole that is big enough (requires searching the entire list of holes, unless they are ordered by size)
3. Worst fit: allocate the largest hole (also requires searching the entire list; produces the largest leftover hole)
First fit and best fit are generally better than worst fit in terms of speed and storage utilization. First fit is generally faster.

Fragmentation
• Over time, memory becomes fragmented.
• External fragmentation: enough total memory space exists to satisfy a request, but it is not contiguous (many small holes)
  – 50-percent rule: Statistically, if N partitions are allocated via first fit, another 0.5N partitions are lost to fragmentation.
• Internal fragmentation: allocated memory may be slightly larger than requested memory; this size difference is memory internal to a partition, but not being used
  – Example: A process requests 8424 bytes, and we have a hole of 8428 bytes. It's not worth keeping track of a 4-byte hole, so we allocate the entire 8428 bytes, and we lose 4 bytes to internal fragmentation.
• We could reduce external fragmentation by compaction: shuffling memory contents to place all free memory together in one large block.
  – Compaction is slow, and it is possible only if address binding is done at execution time.

Paging
• Paging allows the physical address space of a process to be noncontiguous and avoids external fragmentation.
• Paging is handled by the hardware, together with the operating system.
• Idea:
  – Divide physical memory into fixed-size blocks called frames.
  – Divide logical memory into blocks called pages, of the same size as the frames.
  – To run a process, place each of its pages into an available frame in memory.
  – Set up a table to translate logical to physical addresses.

Paging Hardware
• Every address from the CPU is divided into a page number and a page offset.
• Page sizes and address-space sizes that are powers of 2 make this particularly easy: the high-order bits of the address give the page number, and the low-order bits give the offset.

Paging Example
In this example:
• Logical memory contains 16 bytes: 4 pages of 4 bytes each.
• Page table contains 4 entries.
• Physical memory consists of 32 bytes: 8 frames of 4 bytes each.
In reality, pages are often 4 KB or 8 KB, though they can be much larger.

Paging: User View
• User program views its memory as one contiguous space.
• All aspects of paging are hidden from the user program.
• A user program cannot access memory that it does not own, for it can only access the pages provided by its page table.
• The operating system uses the page table to translate addresses when it interacts with a process's memory (e.g., when a process makes a system call that requests I/O).
• The operating system also keeps track of which frames are available in a frame table.

Paging: Hardware Support
• Memory address translation through the page table must be fast.
• A small page table can be implemented as a set of registers.
• Modern systems allow large page tables (e.g., 1 million entries), which are kept in main memory. A page-table base register (PTBR) points to the page table.
• Problem: If the page table is stored in memory, then accessing a memory location requires two memory accesses (one for the page-table entry, another for the actual memory location), which would be intolerably slow.
• Solution: Use a small, fast hardware cache called a translation look-aside buffer (TLB) to store commonly used page-table entries.
  – When the CPU generates a logical address, the system checks to see if its page number is in the TLB. If so, the frame number is quickly available.
  – If the page number is not in the TLB (a TLB miss), then the system consults the page table in memory.
  – After a TLB miss, the system adds the page/frame combination to the TLB, replacing an existing entry if the TLB is full.

Paging: Hardware with TLB

Paging: Multiple Processes
• Address-space identifiers (ASIDs) are basically process identifiers that allow the TLB to contain entries for multiple processes simultaneously.
• In this case, the TLB stores an ASID with each entry.
  – The ASID of the currently running process is compared with that of the page to provide memory protection.
  – If the ASIDs don't match, the memory access attempt is treated as a TLB miss.
• If the TLB does not support ASIDs, it must be flushed (erased) with each context switch.

Paging: TLB Performance
• Hit ratio: the percentage of times that a requested page number is found in the TLB
• Effective memory-access time: average time required for a memory access
• Example:
  – Suppose it takes 15 ns to search the TLB and 100 ns to access memory.
  – Memory access takes 115 ns if a TLB hit occurs.
  – Memory access takes 215 ns if a TLB miss occurs.
  – If the hit ratio is 80%, then: effective access time = 0.80 × 115 + 0.20 × 215 = 135 ns
  – If the hit ratio is 95%, then: effective access time = 0.95 × 115 + 0.05 × 215 = 120 ns

Paging: Protection
• The page table stores various protection bits along with each entry.
• One special bit indicates whether a page is read-only.
• The valid-invalid bit indicates whether a page is in the logical address space of a given process.
• Since few processes use their entire available address space, most of the page table could be unused. The page-table length register (PTLR) indicates the size of the page table, and is checked against every logical address.

Paging: Shared Pages
• Paging makes it relatively easy for processes to share common code.
• For example, multiple copies of a text editor might be running at the same time, sharing the same program code.
• Shared code must be read-only.
• Only one copy of the shared code needs to be kept in memory, and each user's page table maps to the common code.
• See the example on the following slide…

Paging: Shared Pages Example

Paging: Hierarchical Page Tables
• With the large address space of modern computers, the page table itself can become too large to store in one contiguous block of memory.
• One solution is to use two-level paging: paging the page table.
• Example: 32-bit logical address space, 4 KB page size
  – Page number: p1 (10 bits) and p2 (10 bits)
  – Page offset: d (12 bits)
• This is called a forward-mapped page table.

Paging: Hierarchical Page Tables
• For a system with a 64-bit logical address space, two-level paging is not enough.
• We could divide the outer page table into smaller pages, resulting in three-level paging.
• Often, even three-level paging is not enough.
• To ensure that every piece of the page table stays small, a 64-bit address system could require seven levels of paging, which would be much too slow.

Paging: Hashed Page Tables
• For address spaces larger than 32 bits, a hashed page table may be used.
• Each entry in the hash table contains a linked list of elements (to handle collisions).
• Each element contains a virtual page number, the value of the mapped page frame, and a pointer to the next element in the list.

Paging: Inverted Page Tables
• Usual design:
  – Each process has its own page table.
  – Page table entries contain physical addresses, indexed by virtual addresses.
  – Drawback: a page table may contain many unused entries, and may consume lots of memory.
• Inverted page table:
  – A single, system-wide page table, with one entry for each physical frame of memory
  – Page table contains virtual addresses, indexed by physical addresses
  – Decreases the amount of memory required to store the page table
  – Increases the amount of time required to search the page table
  – Uses a hash function to make searching faster
  – Difficult to implement shared memory with this solution

Paging: Inverted Page Tables

Segmentation
• Users view memory as a collection of variable-sized segments, with no ordering among segments.
• Examples of segments: objects, methods, variables, etc.
• With segmentation, a logical address space is a collection of segments.
  – Each segment has a name and a length.
  – A user specifies each address by two quantities: a segment name and an offset.
• The compiler creates segments, and the loader assigns segment numbers to segment names.

Segmentation: Hardware
• A segment table maps segment numbers and offsets to physical addresses.
• Each entry contains a segment base (the address of the segment in memory) and a segment limit (the length of the segment).

Segmentation: Example

Paging vs. Segmentation
Paging
• Invisible to the user program
• Divides physical memory into blocks (frames, which hold pages)
• Pages are of uniform, predetermined size
• Logical address is a single non-negative integer
Segmentation
• Visible to the user program
• Divides logical memory into blocks (segments)
• Segments are of arbitrary sizes
• Logical address is an ordered pair: (segment, offset)
In both cases, the operating system works together with the hardware to translate logical addresses into physical addresses.

Example: Intel Pentium
• The Intel Pentium supports both pure segmentation and segmentation with paging.
• Overview:
  – CPU generates a logical address, which goes to the segmentation unit.
  – Segmentation unit produces a linear address, which goes to the paging unit.
  – Paging unit generates a physical address in main memory.

Example: Pentium Segmentation
• The logical address space of a process is divided into two partitions.
  – One partition holds segments that are private to that process, indexed by the local descriptor table (LDT).
  – The other partition holds segments that are shared, indexed by the global descriptor table (GDT).
• Logical addresses consist of a selector and an offset.
• The CPU has six segment registers to hold segment addresses.

Example: Pentium Paging
• Pentium allows page sizes of either 4 KB or 4 MB.
• Page directory includes a page size bit that indicates the size of a given page.
• Page tables can be swapped to disk, and another bit in the directory indicates whether a page table is on the disk.

Example: Linux on the Pentium
• Linux is designed to run on various processors, so it uses minimal segmentation.
• On the Pentium, Linux uses six segments:
  1. A segment for kernel code
  2. A segment for kernel data
  3. A segment for user code
  4. A segment for user data
  5. A task-state segment
  6. A default LDT segment
• Linux uses a three-level paging model that works well for 32-bit and 64-bit architectures, with linear addresses divided into four parts: global directory, middle directory, page table, and offset.
• On the Pentium, the size of the middle directory is zero bits, so the paging is effectively two-level.
• Each task in Linux has its own set of page tables.

Example: Three-level Paging in Linux
On the Pentium, the size of the middle directory is zero.
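To make the zero-width middle directory concrete, here is a minimal Python sketch that splits a 32-bit linear address into global directory, middle directory, page table, and offset fields. It assumes the Pentium's 4 KB page layout uses the same 10/10/12 bit split shown in the hierarchical page table example; the function name `split_linear_address` and the constant `PENTIUM_WIDTHS` are hypothetical, not taken from the Linux source.

```python
def split_linear_address(addr, widths):
    """Split addr into bit fields, highest-order field first.

    widths gives the bit count of each field; a width of 0 yields a
    field that is always 0, which is how a level "disappears".
    """
    fields = []
    shift = sum(widths)
    for width in widths:
        shift -= width
        mask = (1 << width) - 1
        fields.append((addr >> shift) & mask)
    return tuple(fields)


# Assumed layout: global directory, middle directory, page table, offset.
PENTIUM_WIDTHS = (10, 0, 10, 12)

if __name__ == "__main__":
    gd, md, pt, offset = split_linear_address(0x12345678, PENTIUM_WIDTHS)
    print(gd, md, pt, offset)
```

Because the middle-directory width is 0, that field is always 0 and contributes nothing to the lookup, so the walk reduces to the two-level scheme: a directory index, a page-table index, and an offset within the page.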