Linear and multiple regression

advertisement

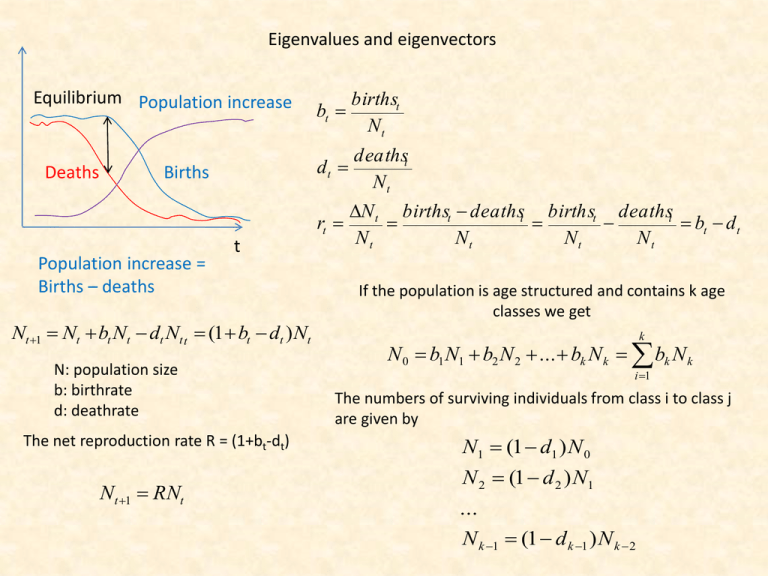

Eigenvalues and eigenvectors

Equilibrium Population increase

Deaths

Births

Population increase =

Births – deaths

t

Nt 1 Nt bt Nt dt Nt t (1 bt dt ) Nt

N: population size

b: birthrate

d: deathrate

The net reproduction rate R = (1+bt-dt)

Nt 1 RNt

bt

birthst

Nt

dt

deathst

Nt

rt

N t birthst deathst birthst deathst

bt d t

Nt

Nt

Nt

Nt

If the population is age structured and contains k age

classes we get

k

N 0 b1 N1 b2 N 2 ... bk N k bk N k

i 1

The numbers of surviving individuals from class i to class j

are given by

N1 (1 d1 ) N 0

N 2 (1 d 2 ) N1

...

N k 1 (1 d k 1 ) N k 2

Leslie matrix

Assume you have a population of organisms that is age structured.

Let fX denote the fecundity (rate of reproduction) at age class x.

Let sx denote the fraction of individuals that survives to the next age class x+1 (survival rates).

Let nx denote the number of individuals at age class x

We can denote this assumptions in a matrix model called the Leslie model. We have w-1 age classes,

w is the maximum age of an individual.

L is a square matrix.

k

N b1 N1 b2 N 2 ... bk N k bk N k

i 1

N1 (1 d1 ) N 0

N 2 (1 d 2 ) N1

...

N k 1 (1 d k 1 ) N k 2

n0

n1

N t n2

...

n

w 1

Nt 1 LNt

f 0 f1 f 2

s0 0 0

0 s

0

1

L

0 0 s2

... ... ...

0 0 0

f3

...

0

...

0

0

...

...

...

...

0

sw 2

fw 1

0

0

0

0

0

Nt 1 Lt N0

Numbers per age class at time t=1 are the dot product of the Leslie matrix with the abundance

vector N at time t

n0

f 0 f1 f 2 v

n1

s0 0 0 v

n

0 s

0

1

2

...

0 0 s2

... ... ...

...

n

w 1 t 1 0 0 0 v

f3

0

0

...

...

...

0

...

...

...

0

sw 2

fw 1 n0

0 n1

0 n2

0 ...

0 ...

0 nw 1 t

The sum of all fecundities gives

the number of newborns

n0s0 gives the number of

individuals in the first age class

Nw-1sw-2 gives the number of

individuals in the last class

The Leslie model is a linear approach.

It assumes stable fecundity and mortality rates

The effect pof the initial age composition disappears over time

Age composition approaches an equilibrium although the whole

population might go extinct.

Population growth or decline is often exponential

An example

Age class

1

2

3

4

5

6

7

Generation

0

1000

2000

2500

1000

500

100

10

N0

L

1000

2000

2500

1000

500

100

10

0

0.4

0

0

0

0

0

0.5

0

0.8

0

0

0

0

1.2

0

0

0.5

0

0

0

1.5

0

0

0

0.3

0

0

1.1

0

0

0

0

0.1

0

0.2

0

0

0

0

0

0.004

0.005

0

0

0

0

0

0

1

2

3

4

5

6

7

8

6070.05 4335.002 3216.511

3709.4 3822.356 3338.88 3195.559 3199.811

400 2428.02 1734.001 1286.604 1483.76 1528.942 1335.552 1278.224

1600

320 1942.416 1387.201 1029.284 1187.008 1223.154 1068.442

1250

800

160 971.208 693.6003 514.6418 593.504 611.5769

300

375

240

48 291.3624 208.0801 154.3925 178.0512

50

30

37.5

24

4.8 29.13624 20.80801 15.43925

0.4

0.2

0.12

0.15

0.096

0.0192 0.116545 0.083232

9

3037.552

1279.924

1022.579

534.2208

183.4731

17.80512

0.061757

10

2873.77

1215.021

1023.939

511.2894

160.2662

18.34731

0.07122

10000

100

10

6

1000

Abundance

At the long run the

population dies out.

Reproduction rates

are too low to

counterbalance the

high mortality rates

1

2

3

4

5

1

0.1

7

0.01

0

5

10

15

Time

20

11

2783.134

1149.508

972.0165

511.9697

153.3868

16.02662

0.073389

12

2681.059

1113.254

919.6063

486.0083

153.5909

15.33868

0.064106

Important properties:

1. Eventually all age classes

grow or shrink at the

same rate

2. Initial growth depends on

the age structure

3. Early reproduction

contributes more to

population growth than

25

late reproduction

Leslie matrix

fk

0

0

0

0

0

n1

n2

N t n3

...

nk

10000

100

1

2

3

4

5

10

6

1000

Nt 1 LNt

Nt 1 L N0

t

Abundance

b1 b2 b3 b4 ...

...

d1 0 0 0

0 d

0 0

...

2

L

...

0 0 d2 0

... ... ... ... ...

0 0 0 0 d

k 1

1

0.1

7

0.01

0

5

10

15

20

Time

Does the Leslie approach predict a stationary point where population abundances doesn’t

change any more?

dN

0

dt

Nt 1 LNt Nt

We’re looking for a vector that doesn’t change direction when multiplied with the Leslie matrix.

This vector is called the eigenvector U of the matrix.

Eigenvectors are only defined for square matrices.

LU U

LU U 0

[L I ]U 0

I: identity matrix

25

The insulin – glycogen system

At high blood glucose levels insulin stimulates glycogen synthesis and inhibits glycogen breakdown.

dN

fN g

dt

N

g

Ce ft

f

At equilibrium we have

fN g 0

N

f

1

g

0

The change in glycogen concentration can be

modelled by the sum of constant production

and concentration dependent breakdown

fN g 0

f

T

N 1T 0 0

1 N 1

g

1 N 2 f

2

N

g 0

1

N

0

2

N

N 2 1 0 f

1

0

0

1

g

0

The vector {-f,g} is the eigenvector of the dispersion matrix and gives the stationary point.

The value -1 is called the eigenvalue of this system.

How to transform vector A

into vector B?

Y

XA B

2 1 7

1

1

.

5

2

.

5

3 9

B

Multiplication of a vector with a

square matrix defines a new

vector that points to a different

direction.

The matrix defines a

transformation in space

A

The vectors that don’t change during

transformation are the eigenvectors.

X

XA A

Y

In general we define

XU U

B

A

XA B

X

Image transformation

X contains all the information necesssary

to transform the image

U is the eigenvector and the

eigenvalue of the square matrix X

XU U XU U 0

XU IU 0

[ X I]U 0

[ X Λ]U 0

The basic equation

XU U

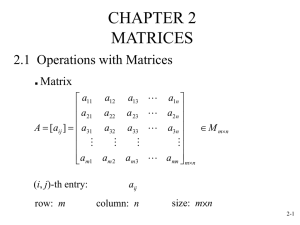

a11

a21

...

a

m1

a11

a21

...

a

m1

a12

a22

...

am 2

a12

a22

...

am 2

... a1n u1

u1

... a1n u1

u1

...

... ... ...

u

... amn un

n

... a1n u1 0

... a1n u1 0

... ... ... ... ...

... amn un 0 0

... 0 u1

... 0 u1

... ...

... un

The matrices A and L have the same properties. We

have diagonalized the matrix A.

We have reduced the information contained in A

into a characteristic value , the eigenvalue.

a11 a12 u1

a21 a22 u2

a11 a12 u1

u1

a21 a22 u2

u2

v1 1 0 u1

v2 0 2 u2

v1

u

L 1

v2

u2

v1

v2

A nxn matrix has n eigenvalues and n eigenvectors

Symmetric matrices and their transposes have identical eigenvectors and eigenvalues

Eigenvectors of

symmetric

matrices are

orthogonal.

a11

a21

v1

v2

a21 u1

u a

u 1 ; 11

a22 u 2

u 2 a21

T

a11

a21

a21 u1 v1 u1

v

u u 1

a22 u 2 v2 u 2

v2

T

a11

a21

a21 y1

u1

v

a22 y2

u2

T

a11

a21

a21 u1 u1

a22 u 2 u 2

u1

u2

v1

v2

a21 v1

v

v 1

a22 v2

v2

v

u 1

v2

T

T

T

T

T

T

u1

u2

v1

v2

a11

a21

a21 v1

a22 v2

u1

u v

v 1 1 u (v1u1 v2u 2 ) v (v1u1 v2u 2 )

u2

u 2 v2

u1 v1

(v1u1 v2u 2 ) 0 0

u 2 v2

uv

How to calculate eigenvectors and eigenvalues?

[A i I] ui 0

The equation is either zero for the trivial case u=0 or if [A-I]

=0

2

1

2 1

1 0

0

0

3

4

3 4

0 1

(2 )(4 ) 3 1 1; 1 5

(2 )u1 u 2 0

1 u1

2 1

1 0 u1

2

1 1

0

0

u

u

u

3

4

0

1

3

4

3u

(4

)u

0

2

2

1 3

1

2

A ui i ui

2

3

2

3

1 1 1

1

1

4 1 1

1

1 1 5

1

5

4 3 15

3

The general solutions of 2x2 matrices

a12

a

A 11

a21 a22

Au u [ A I ]u 0

a11

a21

a12 0

u 0

a22 0

a11

a21

a12

0

a22

a11

a21

a11a22 (a11 a22 ) 2 a21a12 0

2 (a11 a22 ) a21a12 a11a22

a a

a a

( 11 22 ) 2 a21a12 a11a22 11 22

2

4

2

1, 2

a a

a a

11 22 a21a12 a11a22 11 22

2

4

2

Dispersion matrix

Distance matrix

a11

A

a21

a21

a11

1, 2 a11 a21

a12

0

a22

a21

a

A 11

a21 a22

2

1, 2

a a

a a

2

11 22 a21 a11a22 11 22

2

4

1, 2

a11 a22 4a21 (a11 a22 ) 2

2

2

2

a12

a

A 11

a21 a22

Au u [ A I ]u 0

a11 i

a21

a12 ui1

0

a22 i ui 2

a12 u1

a11

0

a22 u 2

a21

(a11 )u1 a12u 2 0

a21u1 ( a22 )u 2 0

This system is indeterminate

a11u1 a12u2 ... a1mum 0

a11 a12 ... a1m u1

a22 ... ... u2

a21u1 a22u2 ... a2 mum 0

a

A 21

0

... ... ... ... ...

...

a

am1u1 am 2u2 ... amm um 0

m1 ... ... amm um

a12u2 ... a1nun a11u1

u2

a21

a22 ... a1m

a22u2 ... a2 nun a21u1

u

a

... ... ... 3 u1 31

...

...

a ... ... a ...

m2

mm

am 2u2 ... amm um am1u1

um

am1

Matrix reduction

u11 1

a12 1

a11 1

0 a11 1 a12u12 0

a

a

22

1 u12

21

a12

u12

1 a11

u21 1

a12

a

A 11

a21 a22

Au u [ A I ]u 0

a11 i

a21

a12 ui1

0

a22 i ui 2

a21 (a22 2 )u22 0

u22

a11 ... a1m

A ... ... ...

a

m1 ... amm

a12

2 a22

Higher order matrices

Au u [ A I ]u 0

a1m u1

a11 ...

...

...

...

... 0

a

... amm um

m1

a1m

a11 ...

det ...

...

... 0

a

... amm

m1

m b1m 1 b2 m 2 ... bm 0

Characteristic polynomial

Eigenvalues and eigenvectors can only be computed analytically

to the fourth power of m.

Higher order matrices need numerical solutions

The power method to find the largest eigenvalue.

The power method is an interative process that starts from an initial guess of the

eigenvector to approximate the eigenvalue

Au u

Au0 0u0

Let the first component u11 of u1 being 1.

Au 1u

Rescale u1 to become 1 for the first component. This gives a

second guess for .

Au2 2u2

Repeat this procedure until the difference n+1 – n is less than a

predefined number e.

1

1

A

4

-2

2

X0

X1

1

1

1

u0

X2

5

-1

3

u1

1

1

1

0

u2

1

1

1

X3

X4

X5

X6

X7

X8

4.6 4.565217 4.561905 4.561587 4.561556 4.561553 4.561553

-1.4 -1.43478

-1.4381 -1.43841 -1.43844 -1.43845 -1.43845

2.6 2.565217 2.561905 2.561587 2.561556 2.561553 2.561553

u3

u4

u5

u6

u7

u8

1

1

1

1

1

1

1

1

-0.2 -0.30435 -0.31429 -0.31524 -0.31533 -0.31534 -0.31534 -0.31534

0.6 0.565217 0.561905 0.561587 0.561556 0.561553 0.561553 0.561553

1

1

0

0

0

2

5

3

4

5

6

7

8

4.6 4.565217 4.561905 4.561587 4.561556 4.561553 4.561553

Having the eigenvalues

thew eigenvectors come

immediately from solving

the linear system

a11 1

a21

...

a

m1

a12

a22 2

...

...

u1

...

... u2

0

...

... ...

... amm m um

...

a1m

using matrix reduction

Some properties of eigenvectors

If L is the diagonal matrix of

eigenvalues:

The eigenvectors of symmetric

matrices are orthogonal

ΛU UΛ

A( sym m etric) :

AU UΛ AUU1 A ULU 1

U' U 0

Eigenvectors do not change after a

matrix is multiplied by a scalar k.

Eigenvalues are also multiplied by k.

The product of all

eigenvalues equals the

determinant of a matrix.

det A i 1 i

[ A I ]u [kA kI ]u 0

The determinant is zero if at

least one of the eigenvalues is

zero.

In this case the matrix is

singular.

If A is trianagular or diagonal the

eigenvalues of A are the diagonal

entries of A.

n

A

2

3

3

-1

2

4

3

-6

-5

5

Eigenvalues

2

3

4

5

Page Rank

p A dpB

k

k BA

k

1 d

dpC CA dpD DA

cB

cC

cD

N

pB dpA

k

k AB

k

1 d

dpC CB dpD DB

cA

cC

cD

N

pC dpA

k AC

k

k

1 d

dpB BC dpD DC

cA

cB

cD

N

pD dpA

k

k AD

k

1 d

dpB BD dpC CD

cA

cB

cC

N

In large webs (1-d)/N is very small

k

k

k

p A 0 p A dpB BA dpC CA dpD DA

cB

cC

cD

pB dpA

k

k AB

k

0 pB dpC CB dpD DB

cA

cC

cD

k

k

k

pC dpA AC dpB BC 0 pC dpD DC

cA

cB

cD

k

k

k

pD dpA AD dpB BD dpC CD 0 pD

cA

cB

cC

0

k AB

d c

A

k

d AC

cA

k

d AD

cA

d

k BA

cB

0

k BC

cB

k

d BD

cB

kCA

cC

k

d CB

cC

d

d

0

d

k DA

cD

k

d DB

cD

k

d DC

cD

d

kCD

cC

0

p A p A

pB pB

pC pC

p p

D D

A standard eigenvector problem

Pu u

The requested raking is simply contained in the

largest eigenvector of P.

A

B

C

D

0

kA

d c

A

d kA

cA

k

d A

cA

d

kB

cB

0

kB

cB

k

d B

cB

kC

cC

k

d C

cC

d

d

0

d

kC

cC

kD

cD

k D p A p A

d

cD pB pB

k D pC pC

d

cD p p

D D

0

d

0

0

0

1

p A

0

.

15

1

0

.

15

0

.

15

/

2

pB

0

0.15

1

0.15 / 2 pC

0

p

0

0

1

D

1 0.85

1

0

.

85

1

4 1 0.85

1 0.85

The data points of

the new system are

close to the new xaxis. The variance

within the new

system is much

smaller.

Principal axes

u2

15

10

u1

10

5

5

u2

-8

-6

-4

-2 -5 0

2

4

6

8

10

-10

-8

-6

-4

-2

0

2

4

6

8

10

-5

-10

-15

0

Y

Y

0

-10

u1

-10

X

X

15

Y

10

-1 0

-8

-6

Principal axes define the longer and shorter

radius of an oval around the scatter of data

u1

points.

The quotient of longer to short principal axes

measure how close the data points are associated

(similar to the coefficient of correlation).

u25

0

-4

-2

-5 0

-1 0

-1 5

2

4

6

8

X

V(u1;u 2) (X( X ;Y ) M( X ;Y ) )U

10

The principal axes span a new Cartesian system .

Principal axes are orthogonal.

Major axis regression

15

The largest major axis defines a regression

line through the data points {xi,yi}.

u1

10

The major axis is identical with the

largest eigenvector of the associated

covariance matrix.

The length of the axes are given by the

eigenvalues.

The eigenvalues measure therefore the

association between a and y.

5

u2

Y

0

-10

-8

-6

-4

-2 -5 0

-10

-15

X

2

4

6

8

10

The first principal axis is

given by the largest

eigenvalue

(D I)U 0

Major axis regression minimizes the Euclidean

distances of the data points to the regression line.

2

s X2 sY2 4s XY

(s X2 sY2 ) 2

1, 2

2

u 21 a1

u 2

12

u 22 2 a22

a12

u 22

2 a22

s X2

D Σ

s XY

u21 1

u22

mMAR

s xy

sy2

s XY

2

sY

s xy

u 22

u 21 s y 2

bMAR Y m X

The relationship between ordinary least square (OLS) and major axis (MAR) regression

s

r XY

s X sY

mOLS

mOLS r

s XY

2

sX

sY

sX

mMAR

mMAR r

2

mMAR mOLS

sX

sY 2

s XY

sY 2

s X sY

sY 2

Going Excel

X

Y

Covariance matrix

0.7198581

0.2683467

0.9514901

0.8280125

0.4483117

0.9386594

0.301201

0.4835976

0.7188817

0.7992589

0.3955747

0.802986

0.5685275

0.0697029

0.3099076

0.9044138

0.3853803

0.2352667

0.8249179

0.8661037

0.066128

2.486935

0.351561

2.705939

2.097096

0.87799

2.705279

0.960431

1.898561

1.956716

2.734179

0.958425

2.721408

1.25693

0.296154

0.688048

2.466684

0.981417

0.945944

2.378733

2.56935

0.603999

0.084878 0.23295

0.23295 0.791836

3

2.5

0

0

2

Y

Eigenvalues

0.015021

0.861692

y=3.3347-0.2379

1.5

y = 2.8818x + 0.0185

1

Eigenvectors

0.957858 0.287241

-0.28724 0.957858

0.5

0

0

Eigenvectors

0.979137

0.2032

-0.2032 0.979137

a

b

Parameters

3.334687

-0.23791

Values to draw the MAR regression

0.1 0.095561

0.9 2.76331

0.2

0.4

0.6

X

0.8

1

Errors in the x and y variables cause OLS regression to predict lower

slopes. Major axis regression is closer to the correxct slope.

Y

1

=3*A4+2

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

5

8

11

14

17

20

23

26

29

32

35

38

41

44

47

50

53

56

59

62

65

=A4+A4*(LOS =B4+B4*(LOS

()-0.5)

()-0.5)

0.620370962

1.405829923

3.932365359

3.369965031

6.754318881

3.140987171

4.848193641

11.07692858

10.48809173

5.174301246

5.632905042

13.32938498

16.94257054

15.78715334

21.75787273

12.77137686

13.25068353

22.70011271

25.34824478

26.61513272

24.21743343

Covariance matrix

4.053401137

71.08896 93.07421

5.955994942

93.07421 264.3759

14.33527103

10.78551619

Eigenvalues

16.24109695

33.55803

27.95607099

301.9068

25.99785179

36.23837193

30.37969645

Eigenvectors

46.52139803

0.927438 0.373977

34.70506056

-0.37398 0.927438

44.35231794

25.73278151

25.52100704

Parameters

52.94515263 a

2.479933

48.07135318 b

8.847974

38.67108652

28.7831935 Values to draw the MAR regression

40.06533423

0.5 10.08794

60.76764637

27 75.80618

57.47387651

Y

X

80

70

60

50

40

30

20

10

0

The MAR slope is always steeper than the OLS slope.

If both variables have error terms MAR should be

preferred.

y=2.48+8.85

y=3+2

y = 1.37x + 15.86

0

10

20

30

X

Major axis

regression

(MAR)

Ordinary

least squares

regression

(OLS)

MAR is not stable after rescaling of only one of the variables

34

60

92

1

18

144

50

114

64

102

1

12

11

1

114

1

2

119

85

2

143

35

169

50

97

0

2

Latitude

of capitals

(decimal

degrees)

41.33

42.5

48.12

37.73

39.55

53.87

50.9

43.82

51.15

42.65

27.93

49.22

41.92

35.33

45.82

37.1

35.15

50.1

55.63

36.4

59.35

62

60.32

48.73

52.38

36.1

37.9

Years

below

zero

0.094444

0.166667

0.255556

0.002778

0.05

0.4

0.138889

0.316667

0.177778

0.283333

0.002778

0.033333

0.030556

0.002778

0.316667

0.002778

0.005556

0.330556

0.236111

0.005556

0.397222

0.097222

0.469444

0.138889

0.269444

0

0.005556

400

Covariance matrix

80.38689 420.4235

420.4235 3803.503

Covariance matrix

80.38689 1.167843

1.167843 0.029348

y=8.97-350.6

300

D

41.33

42.5

48.12

37.73

39.55

53.87

50.9

43.82

51.15

42.65

27.93

49.22

41.92

35.33

45.82

37.1

35.15

50.1

55.63

36.4

59.35

62

60.32

48.73

52.38

36.1

37.9

Days

below

zero

200

100

Eigenvalues

33.50203

3850.388

y = 5.32x - 179.4

0.012379

80.40386

0

0

Eigenvectors

0.993839 0.110831

-0.11083 0.993839

a1

b1

Parameters

8.96715

-350.55

Values to draw the MAR regression

1 -341.583

80 366.8216

MAR

a1/a2

OLS

a1/a2

Days/360

OLS regression retains the

correct scaling factor, MAR

does not.

0.014528 0.999894

-0.99989 0.014528

a2

b2

Parameters

0.01453

-0.48722

0.8

40

L

60

80

y=0.0145-0.487

0.6

y = 0.0148x - 0.498

0.4

-0.47269

0.675179

20

1

D

Latitude

of capitals

(decimal

degrees)

0.2

0

617.1465

359.4595

0

20

40

L

60

80

MAR should not be used for comparing slopes if variables have

different dimensions and were measured in different units, because

the slope depends on the way of measurement.

If both variables are rescaled in the same manner (by the same

factor) this problem doesn’t appear.

Scaling a matrix

AU UL

A U U L A U L U 1

A n (U L U 1 )(U L U 1 )(U L U 1 )...

A n U (L U 1 U...) U 1 U L n U 1 U in I n U 1 U in U 1

A n U in I n U 1

A n U in U 1

A simple way to take the power of a square matrix

The variance and covariance of data according to the principal axis

Σ ( x; y )

11 ... 1m

1

... ... ...

( X M )T ( X M )

n 1

m1 ... mm

y

(x;y)

u1

u2

V(u1;u 2) (X( x; y ) M( x; y ) )U

The vector of data points in the

new system comes from the

transformation according to the

principal axes

x

Σ (u1;u 2)

1

1

1

V T (u1;u 2 ) V(u1;u 2)

U T ( X M )T ( X M ) U

UT Σ ( x; y ) U

n 1

n 1

n 1

Eigenvectors are normalized to the length of one

k2 uk T ( x; y )uk uk T k uk k uk T uk k

The variance of variable k in the new

system is equal to the eigenvalue of the kth

principal axis.

The eigenvectors are orthogonal

jk u j T ( x; y )uk u j T k uk k u j T uk 0

The covariance of variables j and k in the

new system is zero. The new variables are

independent.