Matrix Approach to Simple Linear Regression

advertisement

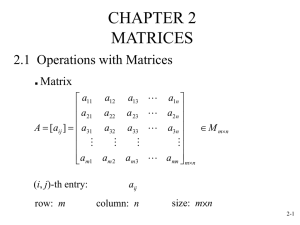

Matrix Approach to Simple Linear Regression KNNL – Chapter 5 Matrices • Definition: A matrix is a rectangular array of numbers or symbolic elements • In many applications, the rows of a matrix will represent individuals cases (people, items, plants, animals,...) and columns will represent attributes or characteristics • The dimension of a matrix is it number of rows and columns, often denoted as r x c (r rows by c columns) • Can be represented in full form or abbreviated form: a11 a 21 A ai1 ar1 a12 a22 a1 j a2 j ai 2 aij ar 2 arj a1c a2 c aij i 1,..., r ; j 1,..., c aic arc Special Types of Matrices Square Matrix: Number of rows = # of Columns r c 20 32 50 b b A 12 28 42 B 11 12 b21 b22 28 46 60 Vector: Matrix with one column (column vector) or one row (row vector) d1 d D 2 E ' 17 31 F ' f1 f 2 f 3 d3 d4 Transpose: Matrix formed by interchanging rows and columns of a matrix (use "prime" to denote transpose) 57 C 24 18 6 15 22 G 23 8 13 25 6 8 G ' 15 13 32 22 25 h1c hr1 h11 h11 h i 1,..., r ; j 1,..., c H ' h j 1,..., c; i 1,..., r H ij ji r c c r hr1 h1c hrc hrc Matrix Equality: Matrices of the same dimension, and corresponding elements in same cells are all equal: b11 b12 4 6 A = B b b11 4, b12 6, b21 12, b22 10 b 12 10 21 22 Regression Examples - Toluca Data X Y1 Y Response Vector: Y 2 n1 Yn Y' Y1 Y2 Yn 1 n 1 X 1 1 X 2 Design Matrix: X n 2 1 X n 1 1 1 X' 2 n X X X 2 n 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 80 30 50 90 70 60 120 80 100 50 40 70 90 20 110 100 30 50 90 110 30 90 40 80 70 Y 399 121 221 376 361 224 546 352 353 157 160 252 389 113 435 420 212 268 377 421 273 468 244 342 323 Matrix Addition and Subtraction Addition and Subtraction of 2 Matrices of Common Dimension: 70 6 7 7 0 2 7 4 7 2 0 42 42 C D C D C D 14 6 10 14 12 6 24 18 10 14 12 6 4 6 22 22 22 22 10 12 a1c b1c a11 b11 a i 1,..., r ; j 1,..., c B b i 1,..., r ; j 1,..., c A ij ij r c r c ar1 br1 arc brc a1c b1c a11 b11 a b i 1,..., r ; j 1,..., c AB ij ij r c ar1 br1 arc brc a1c b1c a11 b11 a b i 1,..., r ; j 1,..., c A B ij ij r c ar1 br1 arc brc Regression Example: Y1 Y Y 2 n1 Yn E Y1 E Y 2 E Y n1 E Yn 1 ε 2 n1 n Y E Y ε n1 n1 n1 Y1 E Y1 1 E Y1 1 Y E Y E Y 2 2 2 2 since 2 Yn E Yn n E Yn n Matrix Multiplication Multiplication of a Matrix by a Scalar (single number): 2 1 3(2) 3(1) 6 3 k 3 A k A 2 7 3(2) 3(7) 6 21 Multiplication of a Matrix by a Matrix (#cols(A) = #rows(B)): If c A rB : A B AB = abij i 1,..., rA ; j 1,..., cB rA c A rB cB rA cB abij sum of the products of the c A rB elements of i th row of A and jth column of B : 2 5 A 3 1 32 0 7 3 1 B 22 2 4 2(1) 5(4) 16 18 2(3) 5(2) A B AB 3(3) (1)(2) 3(1) (1)(4) 7 7 32 2 2 32 0(3) 7(2) 0(1) 7(4) 14 28 c If c A rB c : A B AB = abij = aik bkj i 1,..., rA ; j 1,..., cB rA c A rB cB rA cB k 1 Matrix Multiplication Examples - I Simultaneous Equations: a11 x1 a12 x2 y1 a21 x1 a22 x2 y2 a11 a12 x1 y1 (2 equations: x1 , x2 unknown): a21 a22 x2 y2 a11 x1 a12 x2 y1 AX = Y a21 x1 a22 x2 y2 4 2 Sum of Squares: 42 2 32 4 2 3 2 29 3 1 X 1 0 1 X 1 1 X X 2 1 2 0 0 Regression Equation (Expected Values): 1 1 X X n 1 n 0 Matrix Multiplication Examples - II Matrices used in simple linear regression (that generalize to multiple regression): Y ' Y Y1 Y2 1 X'X X1 1 X'Y X1 1 X2 1 X2 Y1 Y n Yn 2 Yi 2 i 1 Yn 1 X 1 n 1 1 X 2 n Xn X i 1 X n i 1 Y1 n Y i 1 Y2 i 1 n Xn X Y i i Yn i 1 Xi i 1 n 2 Xi i 1 n 1 X 1 0 1 X 1 1 X X 2 1 2 0 0 X 1 1 X n 0 1 X n Special Matrix Types Symmetric Matrix: Square matrix with a transpose equal to itself: A = A': 6 19 8 6 19 8 A 19 14 3 A' 19 14 3 A 8 3 1 8 3 1 Diagonal Matrix: Square matrix with all off-diagonal elements equal to 0: 4 0 0 b1 0 0 A 0 1 0 B 0 b2 0 Note:Diagonal matrices are symmetric (not vice versa) 0 0 2 0 0 b3 Identity Matrix: Diagonal matrix with all diagonal elements equal to 1 (acts like multiplying a scalar by 1): 1 0 0 a11 a12 a13 a11 a12 a13 I 0 1 0 A a21 a22 a23 IA AI A a21 a22 a23 33 33 0 0 1 a31 a32 a33 a31 a32 a33 Scalar Matrix: Diagonal matrix with all diagonal elements equal to a single number" k 0 0 0 0 0 0 1 0 0 0 0 1 0 0 k 0 0 k I k 44 0 0 1 0 0 k 0 0 0 k 0 0 0 1 1-Vector and matrix and zero-vector: 1 1 1 r 1 1 1 J r r 1 1 1 0 0 0 r 1 0 Note: 1 ' 1 1 1 1r r 1 1 1 1 r 1 1 1 1 1 ' 1 1 r 11r 1 1 1 1 1 J r r 1 Linear Dependence and Rank of a Matrix • Linear Dependence: When a linear function of the columns (rows) of a matrix produces a zero vector (one or more columns (rows) can be written as linear function of the other columns (rows)) • Rank of a matrix: Number of linearly independent columns (rows) of the matrix. Rank cannot exceed the minimum of the number of rows or columns of the matrix. rank(A) ≤ min(rA,ca) • A matrix if full rank if rank(A) = min(rA,ca) 1 A 22 4 4 B 22 4 3 A1 12 21 A 2 21 3 B1 B 2 12 21 21 3A1 A 2 0 0B1 0B 2 0 Columns of A are linearly dependent rank(A ) = 1 Columns of B are linearly independent rank(B) = 2 Matrix Inverse • Note: For scalars (except 0), when we multiply a number, by its reciprocal, we get 1: 2(1/2)=1 x(1/x)=x(x-1)=1 • In matrix form if A is a square matrix and full rank (all rows and columns are linearly independent), then A has an inverse: A-1 such that: A-1 A = A A-1 = I 2 8 A 4 2 2 36 A -1 4 36 8 36 2 36 2 36 A -1 A 4 36 8 4 32 36 2 8 36 36 2 4 2 8 8 36 36 36 16 16 36 36 1 0 I 32 4 0 1 36 36 0 0 000 0 0 0 1 0 0 4 1 / 4 0 0 4 0 0 1/ 4 -1 -1 B 0 2 0 B 0 1/ 2 0 BB 0 0 0 0 2 1/ 2 0 0 0 0 0 1 0 I 0 0 0 0 0 6 0 0 1/ 6 000 0 0 6 1/ 6 0 0 1 Computing an Inverse of 2x2 Matrix a12 a A 11 full rank (columns/rows are linearly independent) 22 a21 a22 Determinant of A A a11a22 a12 a21 Note: If A is not full rank (for some value k ): a11 ka12 a21 ka22 A a11a22 a12 a21 ka12 a22 a12 ka22 0 a22 a12 -1 a Thus A does not exist if A is not full rank 22 a 21 11 While there are rules for general r r matrices, we will use computers to solve them A 1 1 A Regression Example: 1 X 1 1 X n 2 X'X X n X i i 1 1 X n X'X 1 1 n n X i X i 1 2 n 2 Xi i 1 n X i i 1 2 1 X n 2 n Xi X 1 i 1 X'X X n 2 Xi X i 1 2 n n 2 Xi n n n i 1 2 2 X'X n X i X i n X i n X i X i 1 n i 1 i 1 i 1 Xi i 1 n X i2 i 1 n n X i i 1 n n 2 Xi X i 1 1 n 2 Xi X i 1 X n Note: X i n X i 1 n i 1 Xi X 2 n 2 n n i 1 i 1 X i2 n X X i2 X i X i 1 2 2 nX 2 Use of Inverse Matrix – Solving Simultaneous Equations AY = C where A and C are matrices of of constants, Y is matrix of unknowns A -1 AY A -1C Y = A -1C Equation 1: 12 y1 6 y2 48 (assuming A is square and full rank) Equation 2: 10y1 2 y2 12 y1 48 Y C Y = A -1C 12 y2 6 2 6 1 2 1 -1 A 12(2) 6(10) 10 12 84 10 12 12 6 A 10 2 6 48 1 96 72 1 168 2 1 2 Y=A C 84 10 12 12 84 480 144 84 336 4 -1 Note the wisdom of waiting to divide by | A | at end of calculation! Useful Matrix Results All rules assume that the matrices are conformable to operations: Addition Rules: AB BA ( A B ) C A ( B C) Multiplication Rules: (AB)C A(BC) C( A B) CA + CB k ( A B) kA kB k scalar Transpose Rules: ( A ') ' A ( A B) ' A ' B ' ( AB) ' B'A' (ABC)' = C'B'A' Inverse Rules (Full Rank, Square Matrices): (AB)-1 = B -1 A -1 (ABC)-1 = C-1B -1 A -1 (A -1 )-1 = A (A')-1 = (A -1 )' Random Vectors and Matrices Shown for case of n=3, generalizes to any n: Random variables: Y1 , Y2 , Y3 Y1 Y Y2 Y3 E Y1 Expectation: E Y E Y2 E Y3 In general: E Y E Yij i 1,..., n; j 1,..., p n p Variance-Covariance Matrix for a Random Vector: Y1 E Y1 2 Y E Y E Y Y E Y ' E Y2 E Y2 Y1 E Y1 Y2 E Y2 Y3 E Y3 Y E Y 3 3 2 Y1 E Y1 E Y2 E Y2 Y1 E Y1 Y3 E Y3 Y1 E Y1 Y E Y Y E Y Y E Y Y E Y Y E Y Y E Y Y E Y Y E Y Y E Y Y E Y 1 1 2 2 1 1 3 3 2 1 2 2 3 3 21 2 2 2 2 3 3 2 2 3 3 31 12 13 22 23 32 32 Linear Regression Example (n=3) Error terms are assumed to be independent, with mean 0, constant variance 2 : E i 0 1 ε 2 3 Y = Xβ + ε 2 i 2 0 E ε 0 0 i , j 0 i j 2 0 0 2 2 σ ε 0 0 2I 2 0 0 E Y E Xβ + ε Xβ E ε Xβ 2 0 0 σ 2 Y σ 2 Xβ + ε σ 2 ε 0 2 0 2 I 2 0 0 Mean and Variance of Linear Functions of Y A matrix of fixed constants Y random vector k n n1 a11 W AY k 1 ak 1 a1n Y1 W1 a11Y1 ... a1nYn random vector: W Wk ak 1Y1 ... aknYn akn Yn E W1 E a11Y1 ... a1nYn a11 E Y1 ... a1n E Yn E W E Wk E ak 1Y1 ... aknYn ak1 E Y1 ... akn E Yn a1n E Y1 a11 AE Y ak 1 akn E Yn E A Y - E Y Y - E Y 'A' = AE Y - E Y Y - E Y ' A' = Aσ σ 2 W = E AY - AE Y AY - AE Y ' = E A Y - E Y A Y - E Y ' = 2 Y A' Multivariate Normal Distribution 12 12 Y1 1 Y 2 2 Y 2 μ = E Y 2 Σ = σ 2 Y 21 1n 2 n Yn n Multivariate Normal Density function: f Y 2 n /2 Σ 1/2 1 exp Y - μ 'Σ -1 Y - μ 2 Yi ~ N i , i2 i 1,..., n Yi , Y j ij i j Note, if A is a (full rank) matrix of fixed constants: W = AY ~ N Aμ, AΣA' 1n 2n 2 n Y ~ N μ, Σ Simple Linear Regression in Matrix Form Simple Linear Regression Model: Yi 0 1 X i i i 1,..., n Y1 0 1 X 1 1 Y X 0 1 2 2 2 Y X n 0 1 n n Defining: Y1 Y Y= 2 Yn 1 X 1 1 X 2 X 1 X n β 0 1 1 ε 2 Y = Xβ + ε n Assuming constant variance, and independence of error terms i : 2 0 0 2 2 2 σ Y = σ ε 0 0 0 0 2I n n 2 Further, assuming normal distributio n for error terms i : Y ~ N Xβ, 2 I 0 1 X 1 X 1 2 since: Xβ 0 E Y 0 1 X n Estimating Parameters by Least Squares Normal equations obtained from: n Q Q , and setting each equal to 0: 0 1 n nb0 b1 X i Yi i 1 n i 1 n n b0 X i b1 X X iYi i 1 i 1 2 i i 1 n Note: In matrix form: X ' X n X i i 1 Xi i 1 n X i2 i 1 n n Y i b i 1 Defining b 0 X'Y n b1 X iYi i 1 X'Xb = X'Y b = X'X X'Y -1 Based on matrix form: Q Y - Xβ ' Y - Xβ = Y'Y - Y'Xβ - β'X'Y + β'X'Xβ n n n n 2 2 Y ' Y 2 0 Yi 1 X iYi n 0 2 0 1 X i 1 X i2 i 1 i 1 i 1 i 1 n n Q 2 Y 2 n 2 X i 0 1 i i 1 i 1 0 2 X ' Y 2 X ' X (Q) n n Q n β 2 2 X Y 2 X 2 X i i 0 i 1 i i 1 i 1 1 i 1 Setting this equal to zero, and replacing with b X ' Xb X ' Y Fitted Values and Residuals ^ ^ Y i b0 b1 X i ei Yi Y i In Matrix form: ^ Y 1 b0 b1 X 1 ^ b b X ^ -1 -1 Y Y 2 0 1 2 Xb = X X'X X'Y = HY H = X X'X X' ^ b b X Y n 0 1 n H is called the "hat" or "projection" matrix, note that H is idempotent (HH = H ) and symmetric(H = H'): HH = X X'X X'X X'X X' X'I X'X X' X X'X X' H -1 -1 -1 -1 ^ ^ Y Y 1 1 Y1 Y 1 ^ Y ^ ^ e Y2 Y 2 2 Y 2 Y - Y = Y - Xb = Y - HY = (I - H)Y ^ ^ Y Y n Yn Y n n ^ H' = X X'X X' ' = X X'X X' = H -1 -1 ^ Note: E Y = E HY = HE Y = HXβ = X X'X X'Xβ = Xβ σ 2 Y = Hσ 2IH' 2 H E e = E I H Y = I H E Y = I H Xβ Xβ - Xβ = 0 σ 2 e = I H σ 2I I H ' 2 I H ^ s Y MSE H 2 -1 s 2 e = MSE I H Analysis of Variance n Total (Corrected) Sum of Squares: SSTO Yi Y i 1 2 n Yi n Yi 2 i 1 n i 1 2 2 n Note: Y ' Y Yi 2 i 1 n Yi i 1 1 Y'JY n n 1 J n n 1 1 1 1 1 SSTO Y ' Y Y'JY Y ' I J Y n n SSE e'e = Y - Xb ' Y - Xb = Y'Y - Y'Xb - b'X'Y + b'X'Xb = Y'Y - b'X'Y = Y' I - H Y since b'X'Y = Y'X' X'X X'Y = Y'HY -1 2 n Yi 1 1 SSR SSTO SSE b'X'Y i 1 Y'HY Y'JY Y ' H J Y n n n Note that SSTO, SSR, and SSE are all QUADRATIC FORMS: Y'AY for symmetric matrices A Inferences in Linear Regression b = X'X X'Y Þ E b = X'X X'E Y = X'X X'Xβ = β -1 -1 -1 σ 2 b = X'X X'σ 2 Y X X'X = σ 2 X'X X'IX X'X = σ 2 X'X -1 Recall: X'X -1 -1 2 1 X n 2 n Xi X i 1 X n 2 Xi X i 1 -1 n 2 Xi X i 1 1 n 2 Xi X i 1 ^ 1 Xh Xh ^ s 2 b MSE X'X ^ s 2 Y h Xh's 2 b Xh MSE Xh' X'X Xh Predicted New Response at X X h : Y h b0 b1 X h Xh'b -1 2 MSE X MSE n n Xi X 2 i 1 s b X MSE n 2 Xi X i 1 X Estimated Mean Response at X X h : Y h b0 b1 X h Xh'b -1 s 2 pred MSE 1 Xh' X'X Xh -1 -1 -1 n 2 Xi X i 1 MSE n 2 Xi X i 1 X MSE 2