Multi-GPU and Stream Programming

advertisement

Multi-GPU and Stream

Programming

Kishan Wimalawarne

Agenda

•

•

•

•

Memory

Stream programming

Multi-GPU programming

UVA & GPUDirect

Memory

• Paged locked memory (Pinned memory)

– Useful in concurrent kernel execution

– Use cudaHostAlloc() and cudaFreeHost() allocate and free

page-locked host memory

• Mapped memory

– A block of page-locked host memory can also be mapped

into the address space of the device by passing flag

cudaHostAllocMapped to cudaHostAlloc()

Zero-Copy

• Zero-Copy enables GPU threads to directly access

host memory.

• Requires mapped pinned (non-pageable) memory.

• Zero copy can be used in place of streams because

kernel-originated data transfers automatically

overlap kernel execution without the overhead of

setting up and determining the optimal number of

streams

• Use cudaSetDeviceFlags() with cudaDeviceMapHost()

Zero-Copy

Stream Programming

Introduction

• Stream programming (pipeline) is a useful parallel

pattern.

• Data transfer from host to device is a major

performance bottleneck in GPU programming

• CUDA provides support for asynchronous data transfer

and kernel executions.

• A stream is simply a sequence of operations that are

performed in order on the device.

• Allow concurrent execution of kernels.

• Maximum number of concurrent kernel calls to be

launched is 16.

Introduction

Asynchronous memory Transfer

• Use cudaMemcpyAsync() instead of

cudaMemcpy().

• cudaMemcpyAsync() – non-blocking data

transfer method uses pinned host memory .

• cudaError_t cudaMemcpyAsync ( void * dst,

const void * src, size_t count,

enum

stream)

cudaMemcpyKind, cudaStream_t

Stream Structures

• cudaStream_t

– Sepcifies a stream in a CUDA program

• cudaStreamCreate(cudaStream_t * stm)

– Instantiate streams

Streaming example

Event processing

• Events are used for

– Monitor device behavior

– Accurate rate timing

• cudaEvent_t e

• cudaEventCreate(&e);

• cudaEventDestroy(e);

Event processing

• cudaEventRecord() records and event associated with

a stream.

• cudaEventElapsedTime() finds the time between two

input events.

• cudaEventSynchronize() blocks until the event has

actually been recorded

• cudaEventQuery() Check status of an event.

• cudaStreamWaitEvent() makes all future work

submitted to stream wait until event reports

completion before beginning execution.

• cudaEventCreateWithFlags() create events with flags

e.g:- cudaEventDefault, cudaEventBlockingSync

Stream Synchronization

• cudaDeviceSynchronize() waits until all preceding

commands in all streams of all host threads have

completed.

• cudaStreamSynchronize() takes a stream as a parameter

and waits until all preceding commands in the given stream

have completed

• cudaStreamWaitEvent() takes a stream and an event as

parameters and makes all the commands added to the

given stream after the call to cudaStreamWaitEvent() delay

their execution until the given event has completed.

• cudaStreamQuery() provides applications with a way to

know if all preceding commands in a stream have

completed.

Multi GPU programming

Multiple device access

• cudaSetDevice(devID)

– Devise selection within the code by specifying the

identifier and making CUDA kernels run on the

selected GPU.

Peer to peer memory Access

• Peer-to-Peer Memory Access

– Only on Tesla or above

– cudaDeviceEnablePeerAccess() to check peer

access

Peer to peer memory Copy

• Using cudaMemcpyPeer()

– works for Geforce 480 and other GPUs.

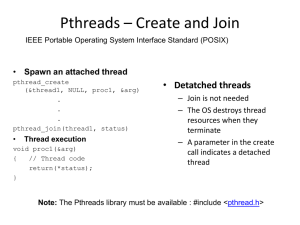

Programming multiple GPUs

• The most efficient way to use multiple GPUs is

to use host threads for multiple GPUs and

divide the work among them.

– E.g- pthreads

• Need to combine the parallelism of multi-core

processor to in conjunction with multiple

GPU's.

• In each thread use cudaSetDevice() to specify

the device to run.

Multiple GPU

• For each computation on GPU create a

separate thread and specify the device a

CUDA kernel should run.

• Synchronize both CPU threads and GPU.

Multiple GPU Example

void * GPUprocess(void *id){

long tid;

tid = (long)id;

if(tid ==0){

cudaSetDevice(tid);

cudaMalloc((void **)&p2 , size);

cudaMemcpy(p2, p0, size, cudaMemcpyHostToDevice );

test<<<10*5024, 1024>>>(p2,tid +2);

cudaMemcpy(p0,p2 , size, cudaMemcpyDeviceToHost );

}else if(tid ==1){

cudaSetDevice(tid);

cudaMalloc((void **)&p3 , size);

cudaMemcpy(p3, p1, size, cudaMemcpyHostToDevice );

test<<<10*5024, 1024>>>(p3,tid +2);

cudaMemcpy(p1,p3 , size, cudaMemcpyDeviceToHost );

}

Multiple GPU Example

#include <pthread.h>

int NUM_THREADS=2;

pthread_t thread[NUM_THREADS];

pthread_attr_t attr;

pthread_attr_init(&attr);

pthread_attr_setdetachstate(&attr, PTHREAD_CREATE_JOINABLE);

for(t=0; t<NUM_THREADS; t++) {

rc = pthread_create(&thread[t], &attr, GPUprocess, (void *)t);

if (rc) {

printf("ERROR; return code from pthread_create() is %d\n", rc);

exit(-1);

}

}

Unified Virtual Address

Space (UVA)

• 64-bit process on Windows Vista/7 in TCC

mode (only on Tesla)

GPUDirect

• Build on UVA for Tesla (fermi) products.

GPUDirect