We are the primitives

advertisement

cheap silicon: myth or reality?

Picking the right data plane hardware for software defined networking

Gergely Pongrácz, László Molnár, Zoltán Lajos Kis, Zoltán Turányi

TrafficLab, Ericsson Research, Budapest, Hungary

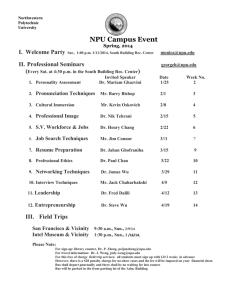

DP CHIP landscape

the usual way of thinking

[Figure: chip families placed along two axes, programmability and performance]

› Generic NP, run-to-completion (SNP, Netronome): lower performance

› Programmable pipeline (NP4)

› Fixed pipeline (Fulcrum, Broadcom/Marvell): higher performance

The main question that is seldom asked: How big is the difference? Assuming same use case and table sizes

first comparison

| Chip name (nm) | Gbps | Mpps | Power / 10G | Type |
|---|---|---|---|---|
| Ericsson SNP 4000 (45) | 200 | 300 | 4W | NPU |
| Cavium Octeon III (28) | 100 | ? | 5W | NPU |
| Tilera Gx8036 (40) | 40 | 60 | 6.25W | NPU |
| Intel X-E5 4650 DPDK (32) | 50 | 80 | 24W | CPU |
| EzChip NP4 (55) | 100 | 180 | 3.5W | PP |
| Marvell Xelerated AX (65) | 100 | 150 | ? | PP |
| EzChip NP5 (28) | 200 | ? | 3W | PP |
| Netronome NFP-6 (22) | 200 | 300 | 2.5W | NPU |
| Intel FM6372 (65) | 720 | 1080 | 1W | Switch |
| BCM56840 (40) | 640 | ? | ? | Switch |
| Marvell Lion 2 (40) | 960 | 720 | 0.5W | Switch |

› NPUs: ~4-5 W / 10G

› CPUs: ~25 W / 10G

› Prog. pipelines: ~3-4 W / 10G

› Switches: ~0.5 W / 10G

So it seems there is a 5-10x difference between “cheap silicon” and programmable devices

the PBB scenario

- modelling summary -

Simple NP/CPU model

[Figure: block diagram of the model, with the blocks below attached to an internal bus]

› I/O: Ethernet (e.g., 10G, 40G), Fabric (e.g., Interlaken), System (e.g., PCIe)

› Processing unit(s) (e.g., pipeline, execution unit)

› Optional accelerators (e.g., RE engines, TCAM, hw queue, encryption)

› On-chip memory (e.g., cache, scratchpad)

› External resource control (e.g., optional TCAM, external memory): external memory (e.g., DDR3), TCAM

[Figure: the same model filled in with example parameter values]

› I/O: 96x10G external ports, fabric, system

› Processing: 256 cores @ 1 GHz

› Accelerators: none

› L1: SRAM, 4B/clock, per core, >128B

› L2: eDRAM, 24 Gtps, shared, >2 MB

› Internal bus

› External memory: 8 MCT, 340 Mtps, >1 GB; low latency RAM (e.g., RLDRAM), high capacity memory (e.g., DDR3)

packet walkthrough

1. read frame from I/O: copy to L2 memory, copy header to L1 memory

2. parse fixed header fields

3. find extended VLAN {sport, S-VID → eVLAN}: table in L2 memory

4. MAC lookup and learning {eVLAN, C-DMAC → B-DMAC, dport, flags}: table in ext. memory

5. encapsulate: create new header, fill in values (no further lookup)

6. send frame to I/O
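
The two lookups and the encapsulation in the walkthrough can be sketched roughly as follows. This is an illustration only, not the assembly code from the paper: the `Frame` fields, the table contents, the `UNKNOWN` fallback and the simplified learning step are all assumptions made for the example.

```python
from dataclasses import dataclass

@dataclass
class Frame:
    sport: int      # ingress port
    s_vid: int      # S-VLAN ID taken from the parsed header
    c_smac: str     # customer source MAC
    c_dmac: str     # customer destination MAC
    payload: bytes

# Step 3 table: {sport, S-VID -> eVLAN}. Entries are made up.
EVLAN_TABLE = {(1, 100): 7}

# Step 4 table: {eVLAN, C-DMAC -> B-DMAC, dport, flags}. Entries are made up.
MAC_TABLE = {(7, "aa:aa:aa:00:00:02"): ("bb:bb:bb:00:00:09", 5, 0)}

# Assumed fallback for an unknown destination: broadcast B-DMAC, no single port.
UNKNOWN = ("ff:ff:ff:ff:ff:ff", None, 0)

def process(frame: Frame, b_smac: str = "bb:bb:bb:00:00:01"):
    """Return (dport, new_header) for one already-parsed frame (steps 3 to 5)."""
    # Step 3: map the ingress port and S-VID to an extended VLAN.
    evlan = EVLAN_TABLE[(frame.sport, frame.s_vid)]
    # Step 4, lookup: find the backbone destination for the customer MAC.
    b_dmac, dport, flags = MAC_TABLE.get((evlan, frame.c_dmac), UNKNOWN)
    # Step 4, learning (simplified): remember the port behind the source MAC.
    MAC_TABLE.setdefault((evlan, frame.c_smac), (b_smac, frame.sport, 0))
    # Step 5: build the outer header from values already at hand, no further lookup.
    header = {"b_dmac": b_dmac, "b_smac": b_smac, "evlan": evlan, "flags": flags}
    return dport, header

frame = Frame(1, 100, "aa:aa:aa:00:00:01", "aa:aa:aa:00:00:02", b"data")
print(process(frame))
```

The point of the sketch is the cost structure: one small table that fits in L2 memory, one large table that needs external memory, and an encapsulation step that needs no memory lookup at all.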

assembly code

› Don’t worry, no time for this

– but the code pieces can be found in the paper

pps/bw calculation

only summary*

› PBB processing on average

– 104 clock cycles

– 25 L2 operations (depends on packet size)

– 1 external RAM operation

› Calculated performance (pps)

– packet size = 750B → 960 Mpps = 5760 Gbps

› cores + L1 memory: 2462 Mpps

› L2 memory: 960 Mpps

› ext. memory: 2720 Mpps

– packet size = 64B → bottleneck moves to cores → 2462 Mpps = 1260 Gbps

* assembly code and detailed calculation are available in the paper
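
The summary numbers can be reproduced with a few lines of arithmetic. The sketch below uses only figures quoted on the slides (256 cores @ 1 GHz, 24 Gtps L2, 8 MCT at 340 Mtps); reading "8 MCT" as eight memory controllers of 340 Mtps each is an assumption, and the variable names are made up for the example.

```python
# Figures quoted on the slides
CORES = 256
CLOCK_MHZ = 1000            # 1 GHz
CYCLES_PER_PACKET = 104
L2_MTPS = 24_000            # 24 Gtps
L2_OPS_PER_PACKET = 25      # at 750B; the slide notes this depends on packet size
EXT_CONTROLLERS = 8         # "8 MCT", assumed to mean 8 memory controllers
EXT_MTPS_EACH = 340
EXT_OPS_PER_PACKET = 1

def limits_mpps():
    """Packet rate each resource could sustain on its own, in Mpps."""
    return {
        "cores + L1 memory": CORES * CLOCK_MHZ / CYCLES_PER_PACKET,
        "L2 memory": L2_MTPS / L2_OPS_PER_PACKET,
        "ext. memory": EXT_CONTROLLERS * EXT_MTPS_EACH / EXT_OPS_PER_PACKET,
    }

def gbps(mpps, packet_bytes):
    """Convert a packet rate in Mpps to bandwidth in Gbps."""
    return mpps * packet_bytes * 8 / 1000

limits = limits_mpps()
bottleneck = min(limits, key=limits.get)

for name, rate in limits.items():
    print(f"{name}: {rate:.0f} Mpps")
print(f"750B: bottleneck is {bottleneck}, {gbps(limits[bottleneck], 750):.0f} Gbps")
# For 64B the slide states that the cores become the bottleneck; the L2
# operation count for 64B is not given, so only the core limit is converted.
print(f"64B: {gbps(limits['cores + L1 memory'], 64):.0f} Gbps at the core limit")
```

The achievable rate is the minimum of the three limits, which is why L2 memory sets the 750B result while the cores set the 64B result.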

Ethernet PBB scenario

overview of results

› Results are theoretical: programmable chips today are designed for more complex tasks with fewer I/O ports

| Chip name | Power | Mpps | Mpps / Watt | Type |
|---|---|---|---|---|
| Ericsson SNP 4000 | 80W | 1080 | 13.5 | NPU |
| Netronome NFP-6 | 50W | 493 | 9.9 | NPU |
| Cavium Octeon III | 50W | 480 | 9.6 | NPU |
| Tilera Gx8036 | 25W | 180 | 7.2 | NPU |
| Intel X-E5 4650 DPDK | 120W | 118 | 1 | CPU |
| EzChip NP4 | 35W | 333 | 9.5 | PP |
| Intel FM6372 | 80W | 1080 | 13.5 | Switch |
| Marvell Lion 2 | 45W | 720 | 16 | Switch |

13-16 vs. 10-13 Mpps / Watt: around 20-30% advantage, 1.25x instead of 10x
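
The Mpps / Watt column is the ratio of the Mpps and Power figures, so it can be recomputed directly. A minimal sketch, using the power and Mpps values from the results slide; the dictionary layout and names are made up for the example.

```python
# (power in W, Mpps, type) per chip, as listed on the results slide
CHIPS = {
    "Ericsson SNP 4000":    (80, 1080, "NPU"),
    "Netronome NFP-6":      (50, 493, "NPU"),
    "Cavium Octeon III":    (50, 480, "NPU"),
    "Tilera Gx8036":        (25, 180, "NPU"),
    "Intel X-E5 4650 DPDK": (120, 118, "CPU"),
    "EzChip NP4":           (35, 333, "PP"),
    "Intel FM6372":         (80, 1080, "Switch"),
    "Marvell Lion 2":       (45, 720, "Switch"),
}

# Efficiency in Mpps per Watt for every chip
efficiency = {name: mpps / watts for name, (watts, mpps, _) in CHIPS.items()}

def best_of(chip_type):
    """Highest Mpps / Watt among chips of the given type."""
    return max(efficiency[n] for n, (_, _, t) in CHIPS.items() if t == chip_type)

for name, value in efficiency.items():
    print(f"{name}: {value:.1f} Mpps / Watt")
print(f"best switch vs. best NPU: {best_of('Switch') / best_of('NPU'):.2f}x")
```

Comparing the best chip of each type is only one way to read the table; the slide's "20-30%" compares ranges rather than single chips.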

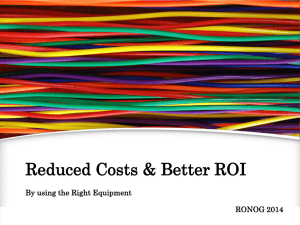

summary and

next steps

I’d have to make it really fast if I spent >8 minutes so far

what we’ve learned

so far…

› Performance depends mainly on the use case, not on the selected hardware solution

– not valid for Intel-like generic CPUs: much lower performance in simple use cases

› but even this might change with manycore Intel products (e.g. Xeon Phi)

– at board/card level the local processor also counts: a known problem for NP4

› Future memory technologies (e.g. HMC, HBM, 3D) might change the picture again

– much higher transaction rate, low power consumption

But! – no free lunch

the hard part: I/O balance

› So far it seems that a programmable NPU would be suitable for all tasks

› BUT! For which use case shall we balance the I/O and the processing complex?

– today we have a (mostly) static I/O built together with the NPU

– there is a >10x difference in packet processing performance between important use cases

› How to solve it?

› Different NPU – I/O flavors: still quite static solution

– but an (almost) always oversubscribed I/O could do the job

› I/O – forwarding separation: modular HW

what is next

ongoing and planned activities

› Prove by prototyping

– use ongoing OpenFlow prototyping activity

– OF switch can be configured to act as PBB

– SNP hardware will be available in our lab in 2013 Q4

– Intel (DPDK) version is ready, first results will be demonstrated at EWSDN 13

› Evaluate the model and make it more accurate

– more accurate memory and processor models

› e.g. calculate with utilization-based power consumption

– identify possible other bottlenecks

› e.g. backplane, on-chip network

thank you!

And let’s discuss these further