Document

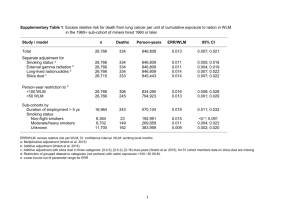

advertisement

zSeries Scalability and Availability

improvements

Latest hardware and software advancements

Fri Sep 26, 2014: 10:15-11:30

Track 2

Speaker: Donald Zeunert (BMC Software)

Abstract / Summary

• Recent IBM system and subsystem scalability

improvements allow significant consolidation.

– This save CPU and Memory resources

– Avoids creating more server instances to manage

• Bottlenecks - Faster CPUs mean everything else

relatively slower

– Need to look at what are the bottlenecks and what did we ignore

when it was released

Areas of improvements

• Performance / Scalability

– Hardware, Microcode, PR/SM

– Software – z/OS, CICS, DB2

• Availability

– Software / hardware

IT Budgets – Save $

• Need to look at

technology

impact on

Software

License Costs?

• New hardware

or software

costs money

– Does it save

me any?

Relative speed of devices

CPU faster everything else relatively

slower

• RAM is now slow

– > CHIP Cache on

z196, EC12

• Paging devices are

slower

– EC12 Flash memory

Topic 1 – Hardware /

Microcode Performance

• zEC12 Benefits

– Workloads may use fewer MSUs

– Warning track

– Other exclusives

• CF Sharing - Thin interrupts

• Hiperdispatch

– Why do we need ?

– Parked engines

z10 vs zEC12 Chip Cache

• Each

generation

CPs getting

– more levels

of chip

cache

– More MB at

each level

• Chip cache

hits = fewer

CPU secs

zEC12

RNI = Ratio of Chip /

local sourcing to total

sourcing (Local +

Remote)

Disclaimer – “Your Mileage

may vary”

Migrating from gasoline Exotic to electric supercar will improve MPG

But what if you also have an 18-wheeler

zPCR Workload RNI

Characteristics

zEC12 – Workloads may use fewer MSUs

– Onlines may get better response, use significantly less CPU secs

and MSUs. But does this help my hardware and software bills?

• Yes, if onlines are driving your box capacity and 4HRA

– Step 1 – Run or find your last Sub-Capacity Reporting Tool (SCRT)

report and determine what hour / shift is the peak(s).

– Step 2 – Determine 4HRA contributors % Batch, CICS, etc.

– Step 3 – Look at zPCR chart for RNI (High?) of your workload

– Step 4 – If workload has high RNI run zCPR model upgrade to zEC2

using your Hardware Capacity SMF 113s Cache stats

RNI by Hour correlated with 4HRA

Day shift

OLTP High

RNI –

matches

4HRA

Peak 4HRA

High RNI

at peak

RNI

3:00 AM

Night shift –

4HRA lower

RNI, not as

CHIP cache

constrained

MSU

1:00PM

zEC12 – Warning Track Interrupt

More consistent

response time

PR/SM CP

Stealing

Old Way

New Way - Warning Track Interupt

z/OS is not directly involved in EC12 presents z/OS "Warning Track"

the undispatching of a logical interrupt when it wants to undispatch a

processor from physical CP. LCP logical processor.

saved status info is stored off This gives z/OS a grace period to

z/OS

undispatch (save status) the workunit.

Pro

If undispatched in grace period then z/OS

can dispatch it on another logical

processor.

Con

Any pending work on that LCP If grace period expires before z/OS returns

would remain undispatched logical CP to PR/SM, PR/SM undispatches

until that LCP is put back on

the logical CP. Then it works like before.

any PCP

Warning Track - %

Successful and Rate

• High rate of WTIs / second – (typical low weight / overcommited)

• Logical removed from Physical successfully > 98% of the time

• making work eligible to be re-dispatched on different logical.

• Provides more consistent OLTP response time

WTI Metrics are in SMF70s, support to collect in;

CMF PTFs BQM0868 (5.8) or BQM0867 (5.9) or

RMF APAR OA37803

zEC12 – Other exclusives

• Storage Class Memory (SCM) – AKA Flash Express

– PCIe - 1.4 TB of memory per mirrored card pair

• PR/SM Absolute Capping

– Expressed in terms of 1/100ths of a processor (0.01 to 255.0)

• Smoother capping

• 4HRA Max Spike(s)

• Data Compression Express (zEDC)

– (PCIe) device – Offload software compression

• SMF Logstreams

– Get Softinflate (OA41156 ) if machines w/o zEDC

• Numerous other exploiters

Type

CF Thin interrupts – Improved

Service times

Parm

CF Polling

Dynamic CF Dispatching

Coupling Thin Interrupts

DYNDISP=NO

DYNDISP=YES

DYNDISP=THIN

LPAR Time Slicing

Dispatch

algorithm

Pros

Cons

CF Time based

Event driven Dispatching algorithm for CF

CF releases engine if no

engine sharing

work left.

Best performance More effective engine

Improved Sync

for dedicated CF, sharing than polling

Service times.

not suitable for

loop. CF now

Thin interrupt to dispatch

shared CF

managing slice.

processor when new work

arrives

Holds CF processor Uses whole slice even

Requires z/OS 1.12 or

yields very poor / if no work, can not be

higher w/ PTFs and CFCC

erratic Sync Service interupted / stolen

microcode level 19

times

High Sync times = GCP Spin loop = wasted MSUs = contribute 4HRA

Logical / Physical Guaranteed

SJS* LPAR weight < 10% of 16 CPs so

guaranteed < 1.6 CPs, actually max is 2 -> 3

These LPARs using about twice guarantee

Using White Space /

more than guaranteed

share.

Impacting 4HRA?

Guaranteed Share

calculations

Name LP CT

VMR

4

SYSP

3

VM4

2

VM5

2

VM9

2

DB2A

3

DB2B

3

SYSN

3

SJSE

3

SYSM

3

SJSB

2

SJSC

3

SJSD

3

ESAJ

3

IMSA

3

SJSH

2

IOC2

1

Total

Wgt

500

500

500

500

500

400

400

400

300

300

300

250

250

200

150

50

50

5550

Rel Shr Guar CPs %MSU Over commit

9.01%

1.44

36%

178%

9.01%

1.44

48%

108%

9.01%

1.44

72%

39%

9.01%

1.44

72%

39%

9.01%

1.44

72%

39%

7.21%

1.15

38%

160%

7.21%

1.15

38%

160%

7.21%

1.15

38%

160%

5.41%

0.86

29%

247%

5.41%

0.86

29%

247%

5.41%

0.86

43%

131%

4.50%

0.72

24%

316%

4.50%

0.72

24%

316%

3.60%

0.58

19%

420%

2.70%

0.43

14%

594%

0.90%

0.14

7%

1288%

0.90%

0.14

14%

594%

• %MSU is % of 1 CP so

work runs 100-%MSU

slower than if full CP

– VMR CPs are 64%

slower than full due

to over commit

– Unless using

HiperDispatch with

parked CPs

– Warning track helps

• If not over commit

can’t exceed # of CPs to

LPAR

– How much white

space do you need?

HiperDispatch – view of over

commit

Guaranteed

0.86

0.72

1.15

Lots of Parked Med/Low = slower effective MSUs and overhead

Performance / Scalability

improvements in Software

How does IBM charge for

sub-capacity?

Sub-Cap Reporting Tool (SCRT)

4448 MSUs - CEC

utilization of a LPAR

w/ DB2 licensed

199 MSUs – DB2

Service class CPU

(not complete DB2)

But not DB2 peak

• IBM MLC costs

30% of IT budget

• Reducing 4HRA

MSUs lowers bills

– Paid on peak LPAR

where product(s)

run

– Less GCP MSUs

• zIIP

– LPAR consolidation

DB2 Software Performance /

Scalability

• DB2 v10 / 11 – ( $/ MSU higher, but fewer MSUs?)

– Max # concurrent threads,

– zIIP offload sequential prefetch (batch)

• Watch for zIIP overcommit / zIIP on GCP

– IDAA – offload and WLM goal awareness

– V11 DDF Enclave classification enhancements

• Also requires z/OS 2.1 WLM

• Package Name: 128 characters (instead of 8)

• Procedure Name: 128 characters (instead of 18)

DB2 V10 – Seq prefetch zIIP

overcommit

• DB2 V10 Sequential

prefetch zIIP

eligibility can

overcommit zIIPs

• Monitor zIIP > 50%

potential for GCP

• Monitor DB2 WLM

Service classes w/

SMF72s or DBM1

jobs w/ SMF30s for

zIIP eligible on GCP

DB2 V10 – 10x more Threads per

SSID

• Higher concurrent threads = fewer SSIDs / LPAR

Software Performance /

Scalability

• CICS –

– TOR CPU Starvation

– Threadsafe vs QR, fewer regions = less function shipping

= Save CPU & memory

• Convert via 80/20 rule, and whatever is left on QR runs faster

• Target with CICS stats - #context switches, CPU secs

CICS Scalability - L8 running

threadsafe

Lower

QR

TCB

CPU

Originally DB2 was only OPENAPI TRUE

Now MQ, CICS/DLI, native sockets

• Non-threadsafe program

calls DB2, CICS switches

from the QR TCB to an

open TCB, and back to

after DB2 request

• Threadsafe Program stays

on Open TCB until nonthreadsafe CICS API call

• Force to OTE / L8 w/o

OPENAPI TRUE call

– CONCURRENCY(THREADS

AFE) and API=OPENAPI

CICS TOR CPU Starvation / responsiveness

• Problem from TORs

and AORs running at

the same dispatch

priority.

– AORs heavily

consumes CPU.

– TORs need to wait

too long to receive

work and return

results to the caller

• Especially when

large # of

transactions enter

AORs w/o TOR

26

• Old Circumventions: Move TORs

to a service class with higher

importance than AORs

– Option 1 - : Exempt all regions from being

managed by response time goals and classify

TORs to a service class with higher

importance than AORs.

• Disadvantages:

• Loose WLM Server management of variable

dispatching priorities, memory protection,

etc.

• No response time data available in Service

class reports

– Option 2 - Exempt only AORs and move them

to a service class with lower importance than

the CICS service classes with response time

goals.

• Disadvantage:

• WLM Server Mgmt lost

• WLM SrvCls BTE reports for highest CPU

consumption eliminated.

CICS – Work Manager / Consumer Model

Allows CICS to use

similar model to WAS

with DB2 / DDF.

WLM classification both

from APAR OA35428

•

•

•

Use NEW WLM SrvCls classification

option “BOTH” for TORs

Define a STC service class for

TORs which has a higher

importance than the AORs response

time goals

Retain “Manage Regions by Goals

of Transaction” for AORs.

•

•

27

TORs managed towards the goals of the STC’s

service class

– WLM ensures bookkeeping of transaction

completions for CICS response time for

service class

– CICS transactions are managed towards CICS

response time goals and the

AORs Response time goal management

unchanged

Software Performance /

Scalability

• IMS 13 - 117K trans / second ,

– 4x Max # PSTs,

– IMS Connect WLM routing,

• z/OS –

– CF "fair queueing" algorithm for queued CF requests

• Selection FIFO prior to APAR (OA41203) z/OS 1.12+

• Burst to single structure could negatively affect all structures.

• Now allows low volume high priority signaling to get

processed

– SMF logstreams, SMF Compress (zEDC),

– VSAM RLS based User Catalogs (enqueues < CPU)

• IP - RoCE (Remote Direct Memory Access over

Converged Ethernet)

z/OS 2.1 VSAM RLS User

Catalog Benchmarks

Non-VSAM Delete Performance

RLS Improve %

NonRLS RLS

Elapsed

Min

CPU Sec

80.42

1269.3

8.42

298.7

89.51 • Help batch elapsed

76.46 time when high # of

temporary cataloged

datasets

Non-VSAM Define Performance

RLS Improve %

NonRLS

RLS

Elapsed

Min

CPU Sec

48.84

685.6

21.42

130.8

56.13

76.46

CPU measured in the CATALOG, GRS, SMSVSAM,

and XCFAS address spaces. Source:

Terri Menendez of IBM RLS/VSAM/Catalog R&D

– Reduce batch

window squeeze

– Possibly reduce

4HRA MSUs by

moving peak

Monitor VSAM RLS for User

Catalogs

• Monitor z/OS Health

checkers for RLS

– Enable RLS HCs

– 8 RLS Msgs that go

to MVS console

– CF IGWLOCK00

• Monitor RLS

– See SHARE RLS

Performance

sessions for what to

monitor

• True / False

contention

• DSN false

invalidates

RLS stats in SMF 42s, BP in Subtype 19

Availability improvements

from Hardware / Software

Topics - Availability

• z/OS –

– V2.1 Serial Coupling Facility structure rebuild

• Processing, re-designed to help improve performance and

availability by rebuilding coupling facility structures more

quickly and in priority order.

– Previously all structures rebuilt in parallel causing contention

– Now critical systems structures recovered 1st and other

structures optionally prioritized by policy

– zAware – Analytics – Detects anomalies avoid outages

• MQ ShrQ CF full avoidance –

– EC12 Storage Class Memory (SCM) (AKA- Flash Memory)

• May hurt performance as slower than CF memory

Questions

SHARE Sessions with more

details

• 15841: EWCP: Project Open and IBM ATS Hot Topics

– Monday, August 4, 2014: 1:30 PM-2:30 PM Room 303

– Speakers: Kathy Walsh(IBM Corporation)

• 15806: IBM zEnterprise EC12 and BC12 Update

– Tuesday, August 5, 2014: 10:00 AM-11:00 AM Room 310

– Speaker: Harv Emery(IBM Corporation)

• 15105: z/OS Parallel Sysplex z/OS 2.1 Update (Annaheim)

– https://share.confex.com/share/122/webprogram/Session15105.html

• 14142: Unclog Your Systems with z/OS 2.1 – Something New

and Exciting for Catalog (VSAM RLS UCATs) (Boston)

– http://proceedings.share.org/conference/abstract.cfm?abstract_id=268

22

谢谢

Backup Slides

z/OS 1.12 – Servers w/ no

active enclaves

• Controlled by new IEAOPT Parameter

• ManageNonEnclaveWork = {No |Yes}

– No: (default) Non enclave work is managed based on the most

important enclave.

• Doesn’t work well when no active enclaves and other work to

do

• Example; Significant work unrelated to an enclave:

– Garbage collection for a JVM (WAS)

– Yes: (new / recommended) Non-enclave work is managed

towards the goals of the address space external service class

• Enclave managed address spaces service class goals /

importance is more important than it used to be.

– Verify high enough before switching to Yes

SMF70 - Warning-TrackInterrupt Metrics

SMF RECORD 70 SUBTYPE 1

Field Name

The number of times PR/SM issued a warning-track

SMF70WTS

interruption to a logical processor and z/OS was able

to return the logical processor within the grace period.

The number of times PR/SM issued a warning-track

SMF70WTU

interruption to a logical processor and z/OS was

unable to return the logical processor within the grace

period.

Amount of time in milliseconds that a logical processor SMF70WTI

was yielded to PR/SM due to warning-track

processing.

Offset

x50

x54

x58

Metric of interest

% WTI Successful = SMF70WTS / (SMF70WTS + SMF70WTU)

CMF PTFs BQM0868 (5.8) or BQM0867 (5.9)

RMF APAR OA37803

SMF 42 – SMSVSAM - VSAM

RLS

• Subtype 15: Storage Class Response Time Summary

• Subtype 16: Dataset Response Time Summary

– Only generated if DSN Monitoring is enabled

• using the V SMS,MONDS command

– Remembered by SMSplex, not available via Parm

• Subtype 17: Coupling Facility Lock Structure Usage

• Subtype 18: CF Cache Partition Usage

• Subtype 19: Local Buffer Manager LRU Statistics