Genigraphics Research Poster Template 24x36

advertisement

Automated Bias Detection in Journalism

Richard Lee, James Chen, Jason Cho

MOTIVATION

PROBLEM STATEMENT

RESULTS, CONT.

60

• Be able to detected bias from parallel

• Assuming we’re given parallel corpuses,

corpuses (collections of machine-readable

identify biased sentences. From there,

texts)

identify biased articles.

bias detection has never been done.

and

sarcasm

• We assume that we’ve already given

annotated corpuses to play with. In the

• Previous research in NLP, eg, annotation

systems

40

Percentage

• To the best of our knowledge, automated

50

detection.

Applications in journalism, politics, rhetoric,

future and in practice, we won’t have such a

Positive

Neutral

Negative

30

20

luxury.

10

• Data borrowed from earlier study[1]:

0

• Parallel

linguistics, law, education.

• Increased objectivity in news articles

topics:

2008

Neutral

political

Liberal

Conservative

Bias Type

Chart 1. Sentiment-Bias correlation analysis; shows little/no correlation

atmosphere

100%

• Drawn from political blog posts.

and educational resources.

• Assisted grading and bias-flagging.

90%

• Match sentences/articles with three types of

80%

70%

bias: liberal, neutral, or conservative.

APPROACH

• Some existing research directed towards

related areas:

• Used existing software to streamline design

conservative and liberal

• AlchemyAPI

•

blogs[2]

SVMlight

sentiment

research

directly

permission)

0%

handles classification of texts:

• Bag of words approach; all the unique words

in a document are given an index. The

from

“Shedding light… on biased language”

20%

10%

data (patterns to build off).

tackling automated bias detection.

(with

simple

con-neg lib-neg neu-neg con-neu lib-neu neu-neu con-pos lib-pos neu-pos

Bias-Sentiment

Chart 2. Bias-Sentiment with word count analysis; sentiment has little impact

provided it is given sufficient training

such as sarcasm[4]

data

for

• In lay terms, it does the guess work,

• Detection of other linguistic constructs,

existing

McCain

Obama

40%

analysis

• Automated annotation[3]

• Borrowed

50%

30%

• Quantification of media bias using

no

60%

:

• Lexical bias indicators[1]

• However,

Word Count

BACKGROUND

documents are then reconstructed from

CONCLUSION

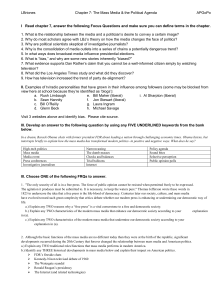

• Three categories, baseline accuracy is

33.33% (assuming completely random).

• Using simple bag-of-words approach we

achieved ~40% accuracy for three-way

these indexes as vectors. For example:

• John likes to watch movies. Mary

• Variety of NLP suites already popular, eg,

likes too. John also likes to

NLTK

classification.

• Using bag of words approach for binary

classification, we achieve ~60% accuracy.

watch football games.

• Consulted with Professor Eric K. Meyer, of

• {"John": 1, "likes": 2, "to": 3,

the UIUC College of Media.

FUTURE WORKS

"watch": 4, "movies": 5, "also":

• Topic framing, eg, estate tax vs. death

6,

tax.

"football":

7,

"games":

8,

• Bias != subjectivity

"Mary": 9, "too": 10}

• Choice of source, such as cherry-

• Eg, “The second amendment protects

• [1, 2, 1, 1, 1, 0, 0, 0, 1, 1]

picked quotes and data points.

gun owners’ rights in America,” versus

• [1, 1, 1, 1, 0, 1, 1, 1, 0, 0][5]

• Choice of words, eg, fetus vs. unborn

RESULTS

baby.

Liberal

vs.

Neutral

Error

39%

Cons.

vs.

Neutral

39.9%

Liberal

&

Cons.

vs.

Neutral

Liberal

vs.

Cons.

vs.

Neutral

39%

61.3%

“I think Inception was quite overrated.”

• More advanced bias detection techniques

Table1. Errors from classifier; bag-of-words experiences difficulties during textual

classifications due to the high amount of overlapping features present in all

datasets

• Smarter “dictionary” of very biased

topics

• Ie, creating a list of words that give

their sentences added priority when

weighing sentiment

• Summing sentiment by topic, etc.

References

1. T. Yano, P. Resnik, and N. Smith. 2010. Shedding (a thousands points of) light on biased language.

2. Y. R. Lin, J. P. Bagrow, and D. Lazer. 2011. More than ever? Quantifying media bias in networks.

3. L. Herzig, A. Nunes, and B. Snir. 2011. An annotation scheme for automated bias detection in Wikipedia.

4. R. González-Ibáñez, S. Muresan, N. Wacholder. Identifying sarcasm in Twitter: A closer look.

5. https://en.wikipedia.org/wiki/Bag-of-words_model