Chapter 13 PowerPoint


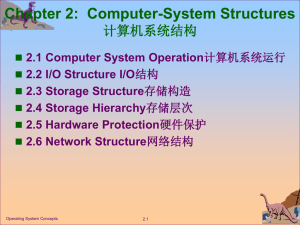

Operating System Concepts, Chapter 13
CS 355 Operating Systems
Dr. Matthew Wright

I/O Hardware
• Computers support a wide variety of I/O devices, but common concepts apply to all:
  – Port: connection point for a device
  – Bus: set of wires that connects to many devices, with a protocol specifying how information can be transmitted over the wires
  – Controller: a chip (or part of a chip) that operates a port, a bus, or a device
• How can the processor communicate with a device?
  – Special instructions allow the processor to transfer data and commands to registers on the device controller.
  – The device controller may support memory-mapped I/O:
    – The same address bus is used for both memory and device access.
    – The same CPU instructions can access memory locations or devices.

Typical PC Bus Structure (figure)

Polling and Handshaking
• How does a processor know when a device has data to transmit?
• Polling: the CPU repeatedly checks the status register of a device to determine whether the device needs service.
  – Polling could be efficient if the device is very fast.
  – Polling is usually inefficient, since it is basically busy waiting.
• When a device is ready, a connection is established through a technique called handshaking:
  – Handshaking is an exchange of control information in order to establish a connection.
  – CPU and device verify that the other is ready for the transmission.
  – After the transmission, they verify that the transmission was received.
• Since polling is inefficient, what alternatives do we have?
  – Interrupts!

Interrupts
• Basic interrupt mechanism:
  – A device controller raises an interrupt by asserting a signal on the interrupt-request line.
  – CPU catches the interrupt: after each instruction, the CPU checks the interrupt-request line.
  – CPU dispatches the interrupt handler: CPU saves its current state and jumps to the interrupt-handler routine at a fixed memory address.
  – Interrupt handler clears the interrupt: determines how to take care of the interrupt, then returns the CPU to its state prior to the interrupt.
• Modern OSs provide more sophisticated interrupt handling:
  – Interrupt handling can be deferred during critical processing.
  – The proper interrupt handler must be dispatched without polling all devices to see which one raised the interrupt.
  – Multilevel interrupts allow the OS to distinguish between high- and low-priority interrupts and respond appropriately.

Interrupts
• In modern hardware, interrupt-controller hardware helps the CPU handle interrupts.
• Most CPUs have two interrupt request lines:
  – Nonmaskable interrupt: for events such as unrecoverable memory errors
  – Maskable interrupt: can be disabled during critical instruction sequences that must not be interrupted; used by device controllers to request service
• Interrupts include an address: a number that selects a specific interrupt-handling routine from a small set.
  – Interrupt vector: a table containing the memory addresses of specific interrupt handlers
  – The interrupt vector might not have space for every interrupt handler.
  – Interrupt chaining: each element in the interrupt vector points to a list of interrupt handlers
• Interrupt priority levels: allow the CPU to defer handling low-priority interrupts
• Interrupt mechanism also handles:
  – Exceptions, such as division by zero
  – System calls (software interrupts)

Intel Processor Event-Vector Table (figure)

Direct Memory Access
• For large transfers it would be wasteful to make the CPU handle each byte (or each word) of data to be transferred (this is called programmed I/O, or PIO).
  – Instead, computers include a direct-memory-access (DMA) controller that does most of the work.
  – The CPU sets up a memory block and gives its address to the DMA controller.
  – The DMA controller manages the data transfer directly, while the CPU does other work.
  – The DMA controller interrupts the CPU when the transfer is finished.
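The DMA sequence above can be imitated in user space. The following is only a toy sketch, not real driver code: a Python thread plays the DMA controller, a `bytearray` plays the memory block, and a callback plays the completion interrupt. The names `SimulatedDmaController`, `start_transfer`, and `interrupt_handler` are hypothetical and exist only for this illustration.

```python
import threading


class SimulatedDmaController:
    """Toy stand-in for a DMA controller; a worker thread plays the hardware."""

    def start_transfer(self, device_data, buffer, on_complete):
        def run():
            # Copy the whole block without involving the "CPU" (the main thread).
            buffer[:len(device_data)] = device_data
            # Calling the callback plays the role of the completion interrupt.
            on_complete(len(device_data))

        worker = threading.Thread(target=run)
        worker.start()
        return worker


def demo():
    device_data = bytes(range(256)) * 16   # 4096 bytes "on the device"
    buffer = bytearray(len(device_data))   # memory block set up by the CPU
    done = threading.Event()
    transferred = []

    def interrupt_handler(count):
        # Record how many bytes arrived and wake up the waiting "CPU".
        transferred.append(count)
        done.set()

    dma = SimulatedDmaController()
    worker = dma.start_transfer(device_data, buffer, interrupt_handler)

    other_work = sum(range(1000))          # the CPU does unrelated work meanwhile

    done.wait()                            # resume once the "interrupt" has fired
    worker.join()
    return bytes(buffer) == device_data, transferred[0], other_work


if __name__ == "__main__":
    print(demo())
```

The point of the sketch is the division of labor: the main thread hands over a buffer and continues computing, and it learns about completion through a notification rather than by copying each byte itself.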
• When the DMA controller is transferring data on the memory bus, the CPU cannot access main memory.
  – This is called cycle stealing.
  – The CPU can still access its primary and secondary caches.
  – Direct virtual memory access (DVMA) allows the computer to transfer between two memory-mapped devices without the CPU or main memory.

Application I/O Interface
• How can the OS treat a variety of I/O devices in a standard, uniform way?
  – We abstract away device details, identifying a few general kinds.
  – Each kind is accessed through a standard interface.
  – Device drivers are tailored to specific devices but interact with the OS through one of the standard interfaces.
• Devices vary on many dimensions, including:
  – Data-transfer mode: bytes (e.g. terminal) or blocks (e.g. disk)
  – Access method: sequential (e.g. modem) or random access (e.g. flash memory card)
  – Transfer schedule: synchronous (predictable response times, e.g. tape) or asynchronous (unpredictable response times, e.g. keyboard)
  – Sharing: sharable (e.g. keyboard) or dedicated (e.g. tape)
  – Device speed: a few bytes per second to gigabytes per second
  – I/O direction: read only, write only, read-write

Application I/O Interface

Block and Character Devices
• Block-device interface captures all aspects necessary for accessing disk drives and other block-oriented devices.
  – Commands include read(), write(), and seek().
  – Applications don't have to deal with the low-level operation of the devices.
  – OS may let an application access the device directly, without buffering or locking; this is raw I/O or direct I/O.
• Character-stream interface is used for devices such as keyboards.
  – Commands, such as get() and put(), work with one character at a time.
  – Libraries can be used to offer line access and editing.

Network Devices
• Most systems provide a network I/O interface different from the disk I/O interface.
• UNIX and Windows use the network socket interface.
  – System calls allow for socket creation, connecting a local socket to a remote address, listening on a socket, and sending and receiving packets through a socket.
  – The select() call returns information about which sockets have packets waiting to be received, which eliminates polling and busy waiting.
• UNIX provides many more structures to help with network I/O.
  – These include half-duplex pipes, full-duplex FIFOs, full-duplex STREAMS, message queues, etc.
  – For details, see Appendix A.9 that accompanies the book.

Clocks and Timers
• Hardware clocks and timers provide three basic functions:
  – Get the current time.
  – Get the elapsed time.
  – Set a timer to trigger operation X at time T.
• Hardware clock can often be read from a register, which offers accurate measurements of time intervals.
• A programmable interval timer is used to measure elapsed time and trigger operations.
  – It can be set to wait a certain amount of time and then trigger an interrupt.
  – Scheduler uses this to preempt a process at the end of its time slice.
  – Network subsystem uses this to stop operations that are proceeding too slowly.
  – OS can allow other processes to use timers.
  – OS simulates virtual timers if there are more timer requests than hardware clock channels.

Blocking and Nonblocking I/O
• Blocking: process suspended until I/O completes
  – Easy to use and understand
  – Insufficient for some needs (e.g. a program that continues processing while waiting for input)
• Nonblocking: I/O call returns as much as available
  – Can be implemented via multithreading (one thread blocks, another thread continues running)
  – Nonblocking call returns quickly, indicating how many bytes were transferred
• Asynchronous: process runs while I/O executes
  – Asynchronous call returns immediately, without waiting for I/O
  – Completion of I/O is later communicated to the application through a memory location or software interrupt
  – Can be difficult to use
• Difference: a nonblocking call returns immediately with whatever data are available; an asynchronous call also returns immediately, but it requests a transfer that is performed in its entirety and completes at some later time.

Kernel I/O Subsystem
The I/O subsystem handles many services for applications and for the other parts of the kernel. These services include:
• Management of name space for files and devices
• Access control to files and devices
• Operation control (e.g. a modem cannot seek())
• File-system space allocation
• Device allocation
• Buffering, caching, and spooling
• Device-status monitoring, error handling, failure recovery
• Device-driver configuration and initialization

Kernel I/O Subsystem: Scheduling
• The kernel schedules I/O in order to improve overall system performance.
  – Example: disk scheduling
• The OS maintains a wait queue for each device.
  – I/O scheduler may rearrange a queue to lower the average response time or to give priority to certain requests.
• OS also maintains a device-status table with information about each device.

Kernel I/O Subsystem: Buffering
• A buffer is a memory area that stores data being transferred.
• Why use buffering?
  – Producer and consumer of a data stream often operate at different speeds.
  – Producer and consumer may have different data-transfer sizes.
  – Buffering supports copy semantics for application I/O.
• Copy semantics:
  – Rules governing how data is to be copied.
  – Example: If a block is being copied from memory to disk, and a program wants to write to that block, copy semantics may specify that the version of data written to disk is the version that existed when the write() call was made.
  – The write() call could quickly copy the block into a buffer before returning control to the application.
• Double buffering: uses two buffers to provide seamless transfers
  – Device fills first buffer with data.
  – While the contents of the first buffer are being transferred to disk, incoming data from the device is written to the second buffer.
  – First buffer may be re-used while contents of second buffer are transferred.

Device Speeds
Sun Enterprise 6000 device-transfer rates (logarithmic scale) (figure)

Kernel I/O Subsystem: Spooling
• A spool is a buffer that holds output for a device, such as a printer, that can only accept one data stream at a time.
  – OS intercepts all data sent to the printer.
  – Each print job is spooled to a separate disk file.
  – When one print job finishes, the next spool file is sent to the printer.
• OS provides a control interface that enables users to view and modify the queue.
• Exclusive access: Some operating systems allow an application to gain exclusive access to a device.
  – System calls to allocate and deallocate a device
  – System calls to wait until a device becomes available
  – In these cases, it is up to the applications to avoid deadlock.

Kernel I/O Subsystem: Error Handling
• Devices and I/O transfers can fail in many ways.
  – Transient failure: the operation may succeed if re-tried (e.g. network overloaded, try again soon)
  – Permanent failure: requires intervention (e.g. hardware failure)
• Operating system can often deal with transient failures by attempting the transfer again.
• I/O system call returns information to help with failure analysis:
  – Such system calls generally return a success/failure status bit.
  – UNIX returns an integer variable named errno that contains an error code to identify the error.
• Some hardware returns detailed error information, such as the SCSI protocol, which returns three levels of detail:
  – Sense key: identifies general nature of the failure (e.g. hardware error)
  – Additional sense code: category of failure (e.g. bad command parameter)
  – Additional sense-code qualifier: gives even more detail (e.g. specific command parameter that was in error)
  – Many SCSI devices maintain internal error logs.

Kernel I/O Subsystem: Protection
• A user process may accidentally or purposely attempt to disrupt the system by attempting to issue illegal I/O instructions.
• I/O instructions are defined as privileged instructions.
  – Users must issue I/O instructions through the operating system.
  – System calls allow users to request I/O instructions.
  – OS checks the validity of any instruction before performing it.
• Memory-mapped and I/O port memory locations are protected from user access.
  – An application may need direct access to memory-mapped locations.
  – The OS carefully controls which applications have access to such memory.

Kernel I/O Data Structures
• Kernel uses various data structures to keep state information about I/O components.
  – Open-file table tracks open files.
  – Other similar structures track network connections, character-device communications, and other I/O activities.

UNIX I/O kernel structure (figure)

Transforming I/O Requests to Hardware Operations
• How does the operating system connect an application request to a specific hardware device?
• For example, if an application requests data from a file, how does the OS find the device that contains the file?
• MS-DOS:
  – File names are preceded by a device identifier, such as C:.
  – A table maps drive letters to specific port addresses.
• UNIX:
  – Device names are incorporated into regular file-system name space.
  – UNIX maintains a mount table that associates path names with specific devices.
  – Since devices have file names, the same access-control system applies to devices as well as files.

Example: A Blocking Read Request
1. Process issues a blocking read() system call for a previously-opened file.
2. Kernel system-call code checks parameters for correctness and determines whether physical I/O must be performed.
3. Process is removed from run queue, placed in wait queue, and I/O request is scheduled. Kernel I/O subsystem sends a request to the device driver.
4. Device driver allocates kernel buffer space to receive data, then sends commands to device controller by writing into device-control registers.
5. Device controller operates device hardware to perform data transfer.
6. DMA controller generates an interrupt when the transfer completes.
7. Interrupt handler receives interrupt (via interrupt-vector table) and signals the device driver.
8. Device driver receives the signal, determines which I/O request has completed, and signals the kernel I/O subsystem that the request has completed.
9. Kernel transfers data or return codes to the address space of the requesting process and moves the process back to the ready queue.
10. When the scheduler assigns the process to the CPU, the process resumes execution at the completion of the system call.

Performance
• I/O is a major factor in system performance:
  – Requires many context switches
  – Loads memory bus as data are copied between controllers, buffers, and memory locations
• To improve I/O efficiency, we can:
  – Reduce the number of context switches.
  – Reduce the number of times that data must be copied in memory while passing between device and application.
  – Reduce the frequency of interrupts by using large transfers, smart controllers, and polling (if busy waiting can be minimized).
  – Increase concurrency by using DMA or channels to offload simple data copying from the CPU.
  – Move processing primitives into hardware to allow their operation in device controllers to be concurrent with CPU and bus operation.
  – Balance CPU, memory subsystem, bus, and I/O performance to avoid overloading one area.
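The advice to use large transfers can be illustrated from user space. The sketch below is an illustration under stated assumptions, not a benchmark: it counts `os.read()` calls rather than hardware interrupts, on the assumption that each call is a system call and therefore a crossing into the kernel. The helper names `count_reads` and `demo` and the chosen chunk sizes are hypothetical.

```python
import os
import tempfile


def count_reads(path, chunk_size):
    """Read the whole file with os.read() and return (calls, bytes_read)."""
    fd = os.open(path, os.O_RDONLY)
    calls = 0
    total = 0
    try:
        while True:
            chunk = os.read(fd, chunk_size)  # one system call per iteration
            calls += 1
            if not chunk:                    # empty result signals end of file
                break
            total += len(chunk)
    finally:
        os.close(fd)
    return calls, total


def demo(size=64 * 1024):
    # Create a temporary file of the requested size to read back.
    with tempfile.NamedTemporaryFile(delete=False) as f:
        f.write(b"x" * size)
        path = f.name
    try:
        small = count_reads(path, 512)        # many small transfers
        large = count_reads(path, 16 * 1024)  # a few large transfers
    finally:
        os.remove(path)
    return small, large


if __name__ == "__main__":
    small, large = demo()
    print("512-byte reads:", small)
    print("16 KiB reads:  ", large)
```

Both runs move the same number of bytes, but the larger chunk size needs far fewer calls into the kernel, which is the same trade-off the slide describes for interrupts and context switches.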