Christine Chalk


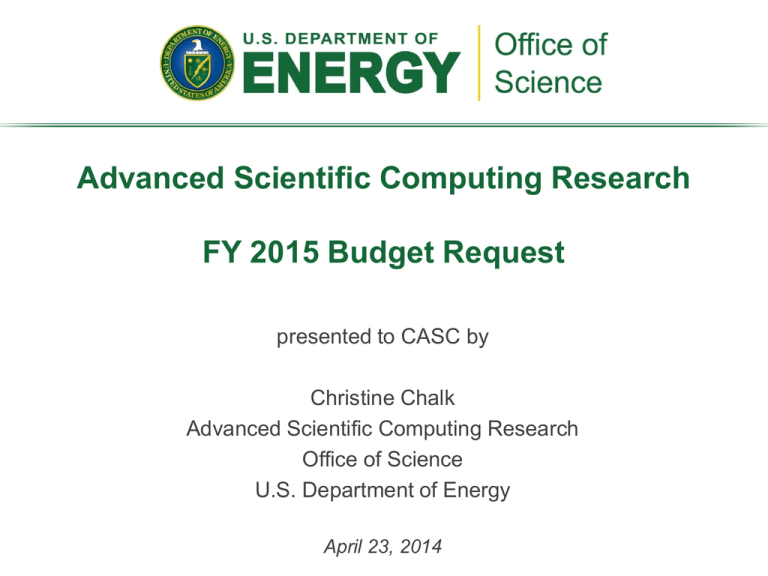

Advanced Scientific Computing Research FY 2015 Budget Request
Presented to CASC by Christine Chalk, Advanced Scientific Computing Research, Office of Science, U.S. Department of Energy
April 23, 2014

FY 2015 ASCR Budget

• Investment Priorities
– Exascale: Conduct research, development, and design efforts in hardware, software, and mathematical technologies that will produce exascale systems in 2022.
– Large Scientific Data: Prepare today’s scientific and data-intensive computing applications to migrate to and take full advantage of emerging technologies from research, development and design efforts.
– Facilities: Acquire and operate more capable computing systems, from multi-petaflop through exascale computing systems, that incorporate technologies emerging from research investments.
• Specific Increases (dollars in K; includes SBIR)
– Mathematical, Computational, and Computer Sciences Research: $10,428
– High Performance Computing and Network Facilities: $52,479

ASCR Budget Overview ($K)

| Tags | Activity | FY 2013 Current Approp. (prior to SBIR/STTR) | FY 2014 Enacted Approp. | FY 2015 President’s Request | FY15 vs. FY14 |
|---|---|---|---|---|---|
| Exascale, Data | Applied Mathematics | 43,341 | 49,500 | 52,155 | +2,655 |
| Exascale, Data | Computer Science | 44,299 | 54,580 | 58,267 | +3,687 |
| Exascale, Data | Computational Partnerships (SciDAC) | 41,971 | 46,918 | 46,918 | +0 |
| Data | Next Generation Networking for Science | 11,779 | 15,931 | 19,500 | +3,569 |
| | SBIR/STTR | 4,924 | 5,518 | 6,035 | +517 |
| | Total, Mathematical, Computational, and Computer Sciences Research | 146,314 | 172,447 | 182,875 | +10,428 |
| | High Performance Production Computing (NERSC) | 62,000 | 65,605 | 69,000 | +3,395 |
| | Leadership Computing Facilities | 146,000 | 160,000 | 184,637 | +24,637 |
| | Research and Evaluation Prototypes | 24,000 | 37,784 | 57,934 | +20,150 |
| | High Performance Network Facilities and Testbeds (ESnet) | 31,610 | 32,608 | 35,000 | +2,392 |
| | SBIR/STTR | 7,854 | 9,649 | 11,554 | +1,905 |
| | Total, High Performance Computing and Network Facilities | 271,464 | 305,646 | 358,125 | +52,479 |
| | Total, Advanced Scientific Computing Research | 417,778 | 478,093 | 541,000 | +62,907 |

The slide also marks rows in the facilities block with “Exascale” and “Data” tags; the extracted text does not preserve which rows they mark.

ASCR FY 2015 Budget Highlights* ($K)

• Exascale Crosscut: 91,000†
Continue strategic investments to address the challenges of the next generation of computing to ensure that DOE applications continue to efficiently harness the potential of commercial hardware.
• Facilities Increase: +30,424
Begin preparations for 75-200 petaflop upgrades at each Leadership Computing Facility; support the move of NERSC resources into the new Computational Research and Theory building, the expansion of ESnet to support SC facilities and experiments in the US and Europe, and the creation of a Computational Science Post Doctoral Training program at the LCFs and NERSC.
• Data Intensive Science Increase: +9,911
Continue building a portfolio of research investments that address the specific challenges from the massive data expected from DOE mission research, including research at current and planned DOE scientific user facilities and research to develop novel mathematical analysis techniques to understand and extract meaning from these massive datasets.
* Does not include increases in SBIR
† FY 2014 crosscut for Exascale was $76,364K

Facilities

Leadership Computing

Goal: Accelerate discovery science and energy-technology innovation through use of cutting-edge HPC systems
• Strategies:
– Focus capability computing on high-priority, high-payoff applications
– Facilitate computing on multiple emerging architectures to exploit architectural diversity and to mitigate risk
– Leverage commodity hardware (CPUs, memory, storage, and interconnects)
– Strive to achieve “balance” in systems among compute, memory, and storage
– Ensure applications readiness as new systems come online
– Incorporate state-of-the-art software tools, techniques, and algorithms developed by ASCR research in applied mathematics and computer science to ensure performance and usability
• Exigencies:
– Machines often are “experimental” in nature, even though based on commodity hardware
– Significant power and infrastructure needs
– Special skills needed (to achieve initial and sustain ongoing operations, to program and use)
– Relatively small number of applications/users

FY 2015 ASCR Facility Investments ($K)

• NERSC (High Performance Production Computing) (+3,395):
– Operate optimally (over 90% scheduled availability)
– Move to the Computational Research and Theory Building back on the LBNL campus
– Initiate a post-doctoral training program for high-end computational science and engineering
• LCFs (+13,320 ALCF; +$11.3M OLCF)
– Operate optimally (over 90% scheduled availability)
– Prepare for planned 75-200 petaflop upgrades in the 2017-2018 timeframe
  • Purchase and install long-lead-time items such as cooling towers, chillers, transformers, heat exchangers, pumps, etc.
– Initiate a post-doctoral training program for high-end computational science and engineering
• High Performance Network Facilities and Testbeds (+$2.3M)
– Operate optimally (99.99% reliability)
– Coordinate with other agencies to ensure the availability of the next generation of optical networking from domestic sources
– Expand the 100 Gb/s network to support interim traffic growth

Leadership Computing Facilities (+$24,637 in $K)

Leadership Computing Facilities (LCF) Mission: Provide the computational and data science resources required to solve the most challenging scientific and engineering problems
• Two architectures to address the diverse and growing computational needs of the scientific community.
• Projects receive computational resources typically 100x greater than generally available.
• The Leadership Computing Facilities at Argonne (ALCF) and Oak Ridge (OLCF) completed upgrades in FY 2013. Currently the ALCF has a 10 PF IBM Blue Gene and the OLCF has a 27 PF Cray XK7.
• Even with the increased resources, requests for allocations on the LCFs from both the Innovative and Novel Computational Impact on Theory and Experiment (INCITE) program and the ASCR Leadership Computing Challenge (ALCC) remain oversubscribed by a factor of 3, and oversubscription is expected to grow.
• Planning, site preparation and delivery of the next upgrades take 4-5 years. The LCFs are working with LLNL on a joint procurement for resources in the 2017-2018 timeframe.

The process for the next LCF upgrades has started
• Mission need statement (CD-0) signed January 2013 for the delivery of 150-400 petaflops (PF) (75-200 PF at each LCF to provide architectural diversity). Upgrades in this range would increase the capability of the LCFs by a factor of 4-10.
• The Acquisition Strategy (CD-1/CD-3a) was approved in October 2013, allowing the labs to finalize a Request for Proposals for the upgrades.
• RFP released in January 2014; proposals received February 2014 and under review.
• Baseline approval and final contract negotiations with selected vendors are anticipated in Q1 FY15.

Mission Need for LCF 2017-2018 Upgrades

Science challenges that can be tackled with proposed upgrades:
• Energy Storage: Develop multiscale, atoms-to-devices, science-based predictive simulations of cell performance characteristics, safety, cost, and lifetime for various energy storage solutions, along with design optimizations at all hierarchies of battery (battery materials, cell, pack, etc.).
• Nuclear Energy: Develop integrated performance and safety codes with improved uncertainty quantification and bridging of time and length scales. Implement next-generation multiphysics, multiscale models. Perform accurate full reactor core calculations with 40,000 fuel pins and 100 axial regions.
• Combustion: Develop fuel-efficient engines through 3D simulations of high-pressure, low-temperature, turbulent lifted diesel jet flames with biodiesel or rate controlled compression ignition with fuel blending of alternative C1-C2 fuels and n-heptane. Continue to explore the limits of high-pressure, turbulent combustion with increasing Reynolds number.
• Fusion: Perform integrated first-principles simulation including all the important multiscale physical processes to study fusion-reacting plasmas in realistic magnetic confinement geometries.
• Electric Grid: Optimize the stabilization of the energy grid while introducing renewable energy sources; incorporate more realistic decisions based on available energy sources.
• Accelerator Design: Simulate ultra-high gradient laser wakefield and plasma wakefield accelerator structures.
• Catalysis Design: Enable end-to-end, system-level descriptions of multifunctional catalysis including uncertainty quantification and data-integration approaches to enable inverse problems for catalytic materials design.
• Biomass to Biofuels: Simulate the interface and interaction between 100-million-atom microbial systems and cellulosic biomass, understanding the dynamics of enzymatic reactions on biomass. Design superior enzymes for conversion of biomass.
• High resolution climate modeling: Simulate high resolution events by incorporating scale-aware physics that extends from hydrostatic to nonhydrostatic dynamics. Incorporate cloud resolving simulation codes that couple with a dynamically responding surface.
• Rapid climate and earth system change: Adequately simulate physical and biogeochemical processes that drive nonlinear responses in the climate system, e.g., rapid increases of carbon transformations and cycling in thawing permafrost; ice sheet grounding line dynamics with ocean coupling that lead to rapid sea level rise; dynamics of teleconnections and system feedbacks within e.g. the (meridional) ocean circulation that alter global temperature and precipitation patterns.

Anticipated LCF System Upgrades

| System attributes | ALCF/Mira (2013) | OLCF/Titan (2013) | “2017” |
|---|---|---|---|
| System peak | 10 PF | 27 PF | 75-200 petaflop/sec |
| Power | 4.8 MW (max), 2.8 MW (typical) | 8.2 MW (max), 5.5 MW (typical) | 15-20 MW (max), 12-16 MW (typical) |
| System memory | 0.8 PB | 0.7 PB | 4 PB |
| Node performance | 204.8 GF | 1,452 GF | 2-10 TF |
| Node memory BW | 42.6 GB/s | Opteron: 51.2 GB/s; Kepler: 180 GB/s | Up to 1 TB/s to fast memory; up to 500 GB/s to bulk memory |
| Node concurrency | 16 cores, each with 4 hardware threads | Opteron: 16; Kepler: 2,688 CUDA cores | > 60 cores |
| System size (nodes) | 49,152 nodes | 18,700 nodes | 8,000-50,000 nodes |
| Total node interconnect BW | 20 GB/s | 6.4 GB/s | > 25 GB/s |

ALCF Upgrade Requirements

Space: Theory and Computing Sciences Building Expansion
• 15,000 SF for computer and 15,000 SF for mechanical, electrical and plumbing
• Adjacent to laboratory-planned Electrical Substation and Chilled Water Plant expansion
• Raised floor load will be 750 PSF
Power:
• 15-30 MW peak of power and equivalent
• Computer 18.5 MW
• Data storage 1.0 MW
• Computational infrastructure 0.5 MW
Cooling:
• Cooling water supply at 80° F
• Two cooling towers provide cooling when ambient temperature is ≤ 73° F
• Chillers supplement cooling when ambient temperature is > 73° F

OLCF Upgrade Requirements

Space: Computational Sciences Building Expansion, 1st floor
• 10,000 SF of unfinished shell space
• Adjacent to planned Central Energy Plant expansion
• Slab on grade, so no floor loading issues
Power:
• 20 MW peak technical power
• Computer 18.5 MW
• Data storage 1.0 MW
• Computational infrastructure 0.5 MW
Cooling:
• 20 MW (5,700 tons) total cooling required
• Chilled water temps 55-65° F
• Humidity control in computer center
• N+1 chillers for reliability/maintainability

Post-Doc Training Program

• The Nation needs a highly trained workforce of computational scientists to perform simulations across fields ranging from national security to basic energy science to engineering.
• ASCR facilities report that it takes up to 18 months to locate and recruit qualified computational scientists.
• Numerous Advanced Scientific Computing Advisory Committee (ASCAC) reports have recommended investing more to build workforce capabilities.
• To address these needs, ASCR proposes a Computational Scientists for Energy, Environment and National Security (CSEEN) Training program
– Sited at ASCR facilities to attract a diverse set of candidates
– Because of increasing processor count and architectural diversity in future computing resources, many application codes and algorithms will need to be rewritten. CSEEN post docs will have access to the leading edge computers as well as experienced staff at the ASCR facilities to broaden their computational skillset.
– Each facility is associated with a university with a strong computational science program. If there is a perceived gap in a CSEEN post doc’s background, there is support for additional course work.
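
The OLCF power and cooling figures quoted earlier (20 MW peak technical power, about 5,700 tons of cooling) can be cross-checked with a few lines of arithmetic. The sketch below is illustrative only: the conversion factor of roughly 3.517 kW per ton of refrigeration is an assumption brought in from standard engineering practice, not a figure from the slides, and the names are hypothetical.

```python
# Figures quoted on the OLCF upgrade requirements slide (MW).
LOADS_MW = {
    "computer": 18.5,
    "data_storage": 1.0,
    "computational_infrastructure": 0.5,
}
PEAK_TECHNICAL_POWER_MW = 20.0
QUOTED_COOLING_TONS = 5700

# Assumption (not from the slides): one ton of refrigeration is
# 12,000 BTU/h, which is approximately 3.517 kW of heat removal.
KW_PER_TON = 3.517


def total_load_mw(loads):
    """Sum the component electrical loads in MW."""
    return sum(loads.values())


def cooling_tons(heat_mw, kw_per_ton=KW_PER_TON):
    """Convert a heat load in MW to tons of refrigeration."""
    return heat_mw * 1000.0 / kw_per_ton


if __name__ == "__main__":
    total = total_load_mw(LOADS_MW)
    tons = cooling_tons(total)
    print(f"Component loads sum to {total:.1f} MW "
          f"(quoted peak: {PEAK_TECHNICAL_POWER_MW:.1f} MW)")
    print(f"Equivalent cooling: {tons:.0f} tons "
          f"(quoted: {QUOTED_COOLING_TONS} tons)")
```

Under that assumption, the three component loads add up to the quoted 20 MW, and the converted cooling load lands within about 1% of the rounded 5,700-ton figure.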

R&E Prototypes

• FastForward: In FY12, the Research and Evaluation Prototypes activity worked with LLNL to award $95M (total, including cost sharing, over two years) for innovative R&D on critical technologies (memory, processors and storage) needed to deliver next generation capabilities within a reasonable energy footprint.
– Funded Projects:
  • AMD: processors and memory for extreme systems;
  • IBM: memory for extreme systems;
  • Intel Federal: energy efficient processors and memory architectures;
  • Nvidia: processor architecture for exascale computing at low power; and
  • Whamcloud: storage and I/O (input/output); bought by Intel.
• FY 2015 increases support taking FastForward research to the next level
– Lab/vendor partnerships (+12,216)
  • Develop prototypes of the most promising mid-term technologies from the Fast Forward program for further testing
– Nonrecurring engineering (+7,934)
  • Incorporate near-term technologies from Fast Forward into planned facility upgrades at NERSC, ALCF and OLCF.

Non Recurring Engineering (NRE) Costs

• Component of Research and Evaluation Prototypes
• Previous R&E investments beginning in 2007-2008
• Support of IBM-ANL-LLNL R&D contract for design and development of the IBM Blue Gene P/Q systems
• Support of Cray component of DARPA HPCS program
• Support to develop and scale compilers, storage systems and debuggers to support use of graphics processing units
• Because of vendor development schedules, the earliest that results from today’s Fast Forward investments can be in ASCR systems is 2020. Thus, NRE funds will be used to
– Scale up proposed systems on vendors’ roadmaps for deployment in the LCFs in 2017-2018
– Harden near-term vendor research for use in proposed systems to move the architectures closer to pre-exascale.

LCF Staff

• Over the past 10 years, each LCF has created a staff experienced in deploying and operating “serial 1” supercomputers to deliver scientific discovery.
• Because of their extremely specialized skill sets, many LCF staff members occupy leadership roles in international standards activities, users groups, and development groups; are regularly engaged by national and international advisory panels on high-performance scientific computing; and are asked to play leadership roles in scientific and technical meetings to more broadly share knowledge on progress in HPC.
• They have created a new model for providing user support, in which each project is assigned its own specialist to ensure scientific discovery through the effective use of LCF systems and emerging systems by
– assessing and improving the algorithms used by the applications;
– helping optimize code for the users’ applications;
– streamlining the work flow; and
– solving any computer issues that arise.
• A recent independent review of the LCFs’ upgrade plans found that the LCF organizations have a demonstrated track record of multiple, successful deployments of new machines, machine upgrades, and development of necessary infrastructure and ancillary facilities to house and maintain leadership computing operations.

Innovative and Novel Computational Impact on Theory and Experiment

INCITE is an annual, peer-review allocation program that provides unprecedented computational and data science resources
• 5.8 billion core-hours awarded for 2014 on the 27-petaflops Cray XK7 “Titan” and the 10-petaflops IBM BG/Q “Mira”
• Average award: 78 million core-hours on Titan and 88 million core-hours on Mira in 2014
• INCITE is open to any science domain
• INCITE seeks computationally intensive, large-scale research campaigns

Call for Proposals: The INCITE program seeks proposals for high-impact science and technology research challenges that require the power of the leadership-class systems. Allocations will be for calendar year 2015. April 16 – June 27, 2014

Contact information: Julia C. White, INCITE Manager, whitejc@DOEleadershipcomputing.org

Leadership-class resources at INCITE

INCITE Eligibility Info
INCITE is for researchers who have capability, time-to-solution, or computer architecture and data infrastructure requirements that can’t be met by any other resource. INCITE is open to researchers worldwide; US collaboration is encouraged but not required. DOE funding is not required.

INCITE Access Details
• Early access to prepare for INCITE: See the ALCF and OLCF Director’s Discretionary programs.
– ALCF: www.alcf.anl.gov
– OLCF: www.olcf.ornl.gov
• INCITE information
– www.doeleadershipcomputing.org

Exascale and Data Intensive Science

Exascale Computing

• In partnership with NNSA
• “All-in” approach:
– Mission-critical applications for national security & extreme scale science
– System software/stacks
– Acquisition of computer systems
• Exaflops sustained performance
– Approximate peak power 20-30 MW
• Productive system
– Usable by a wide variety of scientists
– “Easier” to develop software & to manage the system
• Based on marketable technology
– Not a “one off” system
– Scalable, sustainable technology
• Major step in architectural complexity; not business as usual
• Deployment in ~2023

Path Toward Exascale: $91 million in FY 2015 ($K)

| Activity | FY 2013 Current Approp. | FY 2014 Enacted Approp. | FY 2015 President’s Request | FY 2015 Exascale Investments |
|---|---|---|---|---|
| Mathematics, Computational and Computer Science Research | | | | |
| Applied Mathematics | 43,341 | 49,500 | 52,155 | |
| Exascale: Uncertainty Quantification | 5,000 | 5,000 | 5,000 | Continues support for awards made in 2013 on “UQ Methods for Extreme-Scale Science.” These efforts will improve the fidelity and predictability of DOE simulations. |
| Computer Science | 44,299 | 54,580 | 58,267 | |
| Exascale: Extreme Scale, Advanced Architectures | 12,580 | 17,580 | 20,000 | Supports new research addressing in situ methods, workflows, and proxy applications for data management, processing, analysis and visualization; continues support for research into advanced architectures, software environments and operating systems. |
| Computational Partnerships (SciDAC) | 41,971 | 46,918 | 46,918 | |
| Exascale: Co-Design | 12,705 | 16,000 | 16,000 | Continues support for Co-Design activities, including data intensive science partnerships started in FY 2014. |
| High Performance Computing and Network Facilities | | | | |
| Research and Evaluation Prototypes | 24,000 | 37,784 | 57,934 | Note: $7.9M in FY 15 is for non recurring engineering costs for facility upgrades. |
| Exascale: Platform R&D and Critical Technologies | 24,000 | 37,784 | 50,000 | Initiates conceptual design studies for prototypical exascale systems from application workflow to hardware structure and system software; continues support for Fast Forward investments in critical technologies and Design Forward investments in system-level engineering efforts with high performance computer vendors. |
| Exascale Total | 54,285 | 76,364 | 91,000 | |

Office of Science and “Big Data”

• Historically, many have not viewed SC as a player in “big data”
• However, examples within SC abound:
– Data from large-scale experiments (HEP, BES); medium-scale experiments (BER)
– Observational data (BER/Climate, BER/Environment, HEP)
– Simulation results (BER/Climate, BES, HEP, NP, FES)
• SC has significant infrastructure devoted to data
– ASCR: NERSC and the Leadership Computing Facilities
– HEP: data architecture devoted to LHC
• Big data and big computing go hand-in-hand; one cannot be had without the other
• Workflows are emerging at large experimental facilities that join with high-end computing
– ALS, APS, LCLS, SNS

Mission: Extreme Scale Science Data Explosion

• Genomics: Data volume increases to 10 PB in FY21
• High Energy Physics (Large Hadron Collider): 15 PB of data/year
• Light Sources: Approximately 300 TB/day
• Climate: Data expected to be hundreds of 100 EB

Driven by exponential technology advances

Data sources
• Scientific Instruments
• Scientific Computing Facilities
• Simulation Results
• Observational data

Big Data and Big Compute
• Analyzing Big Data requires processing (e.g., search, transform, analyze, …)
• Extreme scale computing will enable timely and more complex processing of increasingly large Big Data sets

ASCR Research Investments in “Big Data”

• Applied Math (+$2,655):
– Development of mathematical algorithms that accommodate the spatial and temporal variation in data, account for the characteristics of sensors as needed, and adaptively reduce data
– Development of new compression techniques
• Computer Science (+3,687)
– Develop a new paradigm for generating and executing dynamic workflows, including the development of new workflow engines and languages that are semantically rich and allow interoperability or interchangeability across many environments
– Development of scalable and interactive visualization methods for ensembles, multivariate and multiscale data
– Define components and associated Application Programming Interfaces for storing, annotating and accessing scientific data; support development of standards
• Next Generation Networking for Science (+$3,569)
– Develop new methods for scheduling data movement over the WAN, including an understanding of replication policies, data architectures and subset access mechanisms
– Create new methods for rapid and scalable collaborative analysis and interpretation
– Construct a cyber framework that supports complex real-time analysis and knowledge navigation, integration and creation processes.
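
To relate the data volumes on the Data Explosion slide to the networking investments, it can help to express them as sustained transfer rates. The sketch below is a rough illustration, not part of the briefing: it assumes decimal units (1 TB = 10^12 bytes, 1 PB = 10^15 bytes), a 365-day year, and perfectly even transfer over time, and the function names are hypothetical. The 100 Gb/s figure is the network speed mentioned on the facility investments slide.

```python
SECONDS_PER_DAY = 86_400
SECONDS_PER_YEAR = 365 * SECONDS_PER_DAY  # assumption: non-leap year
BITS_PER_BYTE = 8
TB = 10**12  # assumption: decimal terabyte
PB = 10**15  # assumption: decimal petabyte


def sustained_gbps(num_bytes, seconds):
    """Average rate in gigabits per second needed to move num_bytes in seconds."""
    return num_bytes * BITS_PER_BYTE / seconds / 1e9


def link_share(rate_gbps, link_gbps=100.0):
    """Fraction of a link of the given capacity consumed by a sustained rate."""
    return rate_gbps / link_gbps


# Volumes quoted on the slide.
light_sources_gbps = sustained_gbps(300 * TB, SECONDS_PER_DAY)
lhc_gbps = sustained_gbps(15 * PB, SECONDS_PER_YEAR)

if __name__ == "__main__":
    for name, rate in [("Light sources (300 TB/day)", light_sources_gbps),
                       ("LHC (15 PB/year)", lhc_gbps)]:
        print(f"{name}: {rate:.2f} Gb/s sustained, "
              f"{link_share(rate):.1%} of a 100 Gb/s link")
```

Under these assumptions, 300 TB/day corresponds to roughly 28 Gb/s sustained and 15 PB/year to just under 4 Gb/s. Real traffic is bursty, so peak demand would be higher than these averages.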

SC/NNSA Partnership

ASCR-NNSA Partnership

The DOE Exascale Program is a partnership between the Office of Science and NNSA

Joint:
• Management via 2011 MOU between the DOE Office of Science and NNSA
• Program planning and execution of technical R&D
– Fast Forward, Design Forward
• Development of Exascale Roadmap
• Procurements of major systems
• Lab executive engagement via “E7”
– ANL, LANL, LBNL, LLNL, ORNL, PNNL, SNL
• Periodic PI meetings and workshops

Joint Procurements with NNSA

• In January 2013, the DOE Office of Science approved the mission need for LCF upgrades to be installed in 2017-2018
• Because of the timing of acquisitions, ANL and ORNL, along with Lawrence Livermore National Laboratory, will collaborate on a joint procurement for upgrades.
• Will result in two diverse platforms for the LCFs
• Collaboration is a win-win for all parties:
– Reduces the number of RFPs vendors have to respond to
– Allows pooling of R&D funds
– Supports sharing technical expertise between Labs
– Should improve the number and quality of proposals
– Strengthens the alliance between SC/NNSA on road to exascale
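
As a cross-check on the budget figures quoted throughout this briefing, the subtotals and totals in the ASCR Budget Overview and Path Toward Exascale tables can be recomputed from their rows. The sketch below is a minimal illustration with hypothetical names. The last two checks are observations rather than statements from the slides: the Facilities and Data Intensive Science highlight figures happen to equal the sums of the row increases shown in the comments.

```python
# Amounts in $K, copied from the budget tables.
# Each tuple is (FY 2013, FY 2014 enacted, FY 2015 request).
RESEARCH = {
    "Applied Mathematics": (43_341, 49_500, 52_155),
    "Computer Science": (44_299, 54_580, 58_267),
    "SciDAC": (41_971, 46_918, 46_918),
    "Next Generation Networking": (11_779, 15_931, 19_500),
    "SBIR/STTR": (4_924, 5_518, 6_035),
}
RESEARCH_TOTAL = (146_314, 172_447, 182_875)

FACILITIES = {
    "NERSC": (62_000, 65_605, 69_000),
    "LCF": (146_000, 160_000, 184_637),
    "R&E Prototypes": (24_000, 37_784, 57_934),
    "ESnet": (31_610, 32_608, 35_000),
    "SBIR/STTR": (7_854, 9_649, 11_554),
}
FACILITIES_TOTAL = (271_464, 305_646, 358_125)
ASCR_TOTAL = (417_778, 478_093, 541_000)

EXASCALE = {
    "Uncertainty Quantification": (5_000, 5_000, 5_000),
    "Advanced Architectures": (12_580, 17_580, 20_000),
    "Co-Design": (12_705, 16_000, 16_000),
    "Platform R&D": (24_000, 37_784, 50_000),
}
EXASCALE_TOTAL = (54_285, 76_364, 91_000)


def column_sums(rows):
    """Sum each fiscal-year column across a dict of budget rows."""
    return tuple(sum(col) for col in zip(*rows.values()))


def fy15_increase(row):
    """FY 2015 request minus FY 2014 enacted for one row."""
    return row[2] - row[1]


def check_all():
    """Return each named consistency check mapped to True or False."""
    combined = tuple(r + f for r, f in zip(RESEARCH_TOTAL, FACILITIES_TOTAL))
    return {
        "research subtotal": column_sums(RESEARCH) == RESEARCH_TOTAL,
        "facilities subtotal": column_sums(FACILITIES) == FACILITIES_TOTAL,
        "ASCR total": combined == ASCR_TOTAL,
        "exascale crosscut": column_sums(EXASCALE) == EXASCALE_TOTAL,
        "ASCR increase": fy15_increase(ASCR_TOTAL) == 62_907,
        # Observation: NERSC + LCF + ESnet increases equal the +30,424 highlight.
        "facilities highlight": sum(
            fy15_increase(FACILITIES[k]) for k in ("NERSC", "LCF", "ESnet")
        ) == 30_424,
        # Observation: math + CS + networking increases equal the +9,911 highlight.
        "data highlight": sum(
            fy15_increase(RESEARCH[k])
            for k in ("Applied Mathematics", "Computer Science",
                      "Next Generation Networking")
        ) == 9_911,
    }


if __name__ == "__main__":
    for name, ok in check_all().items():
        print(f"{name}: {'OK' if ok else 'MISMATCH'}")
```

Keeping the rows as plain tuples makes it easy to add another fiscal year or another subtotal without changing the checking logic.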