CERN Data Centre Evolution - SDCD2012


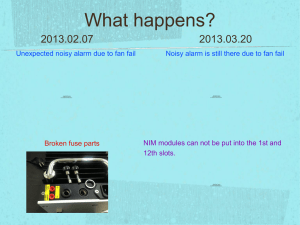

CERN Data Centre Evolution
Gavin McCance, CERN (gavin.mccance@cern.ch, @gmccance)
SDCD12: Supporting Science with Cloud Computing
Bern, 19th November 2012

What is CERN?
• Conseil Européen pour la Recherche Nucléaire
  – aka European Laboratory for Particle Physics
• Between Geneva and the Jura mountains, straddling the Swiss-French border
• Founded in 1954 with an international treaty
• Our business is fundamental physics: what is the universe made of, and how does it work?

Answering fundamental questions…
• How do we explain why particles have mass? We have theories and accumulating experimental evidence. Getting close…
• What is 96% of the universe made of? We can only see 4% of its estimated mass!
• Why isn't there anti-matter in the universe? Nature should be symmetric…
• What was the state of matter just after the « Big Bang »? Travelling back to the earliest instants of the universe would help…

The Large Hadron Collider (LHC) tunnel

Data Centre by Numbers
• Hardware installation & retirement
  – ~7,000 hardware movements/year; ~1,800 disk failures/year
• Racks and servers
  – Racks: 828
  – Servers: 11,728
  – Processors: 15,694
  – Cores: 64,238
  – HEPSpec06: 482,507
  – Memory modules: 56,014
  – Memory capacity (TiB): 158
• Disk
  – Disks: 64,109
  – Raw disk capacity (TiB): 63,289
  – RAID controllers: 3,749
• Tape
  – Tape drives: 160
  – Tape cartridges: 45,000
  – Tape slots: 56,000
  – Tape capacity (TiB): 73,000
• Network
  – High-speed routers (640 Mbps → 2.4 Tbps): 24
  – Ethernet switches: 350
  – 10 Gbps ports: 2,000
  – Switching capacity: 4.8 Tbps
  – 1 Gbps ports: 16,939
  – 10 Gbps ports: 558
• Power
  – IT power consumption: 2,456 kW
  – Total power consumption: 3,890 kW
• Processor models (chart): Xeon L5520 33%, Xeon E5410 16%, Xeon E5345 14%, Xeon 5160 10%, Xeon L5420 8%, Xeon E5335 7%, Xeon E5405 6%, Xeon 3GHz 4%, Xeon 5150 2%
• Disk vendors (chart): Western Digital 59%, Hitachi 23%, Seagate 15%, Fujitsu 3%, HP 0%, Maxtor 0%, Other 0%

Current infrastructure
• Around 12k servers
  – Dedicated compute, dedicated disk servers, dedicated service nodes
  – Majority Scientific Linux (RHEL5/6 clone)
  – Mostly running on real hardware
  – Over the last couple of years, we've consolidated some of the service nodes onto Microsoft Hyper-V
  – Various other virtualisation projects exist
• In 2002 we developed our own management toolset
  – Quattor / CDB configuration tool
  – Lemon computer monitoring
  – Open source, but a small community

• Many diverse applications ("clusters")
• Managed by different teams (CERN IT + experiment groups)

New data centre to expand capacity
• Data centre in Geneva is at the limit of its electrical capacity, 3.5 MW
• New centre chosen in Budapest, Hungary
• Additional 2.7 MW of usable power
• Hands-off facility
• Deploying from 2013 with a 200 Gbit/s network link to CERN

Time to change strategy
• Rationale
  – Need to manage twice as many servers as today
  – No increase in staff numbers
  – Tools becoming increasingly brittle; they will not scale as-is
• Approach
  – CERN is no longer a special case for compute
  – Adopt an open source tool chain model
  – Our engineers rapidly iterate:
      • Evaluate solutions in the problem domain
      • Identify functional gaps and challenge old assumptions
      • Select a first choice, but be prepared to change in future
  – Contribute new functionality back to the community

Building Blocks (diagram): mcollective, yum; Bamboo; Puppet; AIMS/PXE; Foreman; JIRA; OpenStack Nova; git; Koji, Mock; Yum repo Pulp; Active Directory / LDAP; Lemon / Hadoop; Hardware database; Puppet-DB

Choose Puppet for Configuration
• The tool space has exploded in the last few years
  – In configuration management and operations
• Puppet and Chef are the clear leaders for 'core tools'
• Many large enterprises now use Puppet
  – Its declarative approach fits what we're used to at CERN
  – Large installations: friendly, wide-based community
  – You can buy books on it
  – You can employ people who know it better than we do

Puppet Experience
• Excellent: basic Puppet is easy to set up and scales up well
• Well documented; configuring services with it is easy
• Handles our cluster diversity and dynamic clouds well
• Lots of resources ("modules") online, though of varying quality
• Large, responsive community to help
• Lots of nice tooling for free
  – Configuration version control and branching: integrates well with git
  – Dashboard: we use the Foreman dashboard
• We're moving all our production services over in 2013

Preparing the move to cloud
• Improve operational efficiency and flexibility
  – Dynamic demand for multiple operating systems
  – Dynamic temporary load spikes for special activities
  – Hardware interventions on machines running long-running programs (live migration)
• Improve resource efficiency
  – Exploit idle resources, especially those waiting on disk and tape I/O
  – Highly variable load such as interactive or build machines
• Enable cloud architectures
  – Gradual migration from traditional batch + disk to cloud interfaces and workflows
• Improve responsiveness
  – Self-service with coffee-break response time

What is OpenStack?
• OpenStack is a cloud operating system that controls large pools of compute, storage, and networking resources throughout a datacenter, all managed through a dashboard that gives administrators control while empowering their users to provision resources through a web interface

Service Model
• Pets are given names like pussinboots.cern.ch
  – They are unique, lovingly hand-raised and cared for
  – When they get ill, you nurse them back to health
• Cattle are given numbers like vm0042.cern.ch
  – They are almost identical to other cattle
  – When they get ill, you get another one
• Future application architectures should use cattle, but pets with strong configuration management are viable and still needed

Basic OpenStack Components
(diagram: HORIZON, KEYSTONE, GLANCE (Image, Registry), NOVA (Compute, Scheduler, Volume, Network))
• Each component has an API and is pluggable
• Other non-core projects interact with these components

Supporting the Pets with OpenStack
• Network
  – Interfacing with legacy site DNS and IP management
  – Ensuring Kerberos identity before VM start
• Puppet
  – Ease our users' adoption of configuration management tools
  – Exploit mcollective for orchestration/delegation
• External block storage
  – Currently using nova-volume with a Gluster backing store
• Live migration to maximise availability
  – KVM live migration using Gluster
  – KVM and Hyper-V block migration

Current Status of OpenStack at CERN
• Working on an Essex code base from the EPEL repository
  – Excellent experience with the Fedora cloud-sig team
  – cloud-init for contextualisation, oz for images with RHEL/Fedora
• Components
  – Current focus is on Nova with KVM and Hyper-V
  – Keystone running with Active Directory, and Glance for Linux and Windows images
• Pre-production facility with around 200 hypervisors and 2,000 VMs, integrated with CERN infrastructure
  – Used for simulation of magnet placement with LHC@Home, and for batch physics programs

Next Steps
• Deploy into production at the start of 2013, with Folsom running production services and compute on top of OpenStack IaaS
• Support multi-site operations with the second data centre in Hungary
• Exploit new functionality
  – Ceilometer for metering
  – Bare metal for non-virtualised use cases such as high I/O servers
  – X.509 user certificate authentication
  – Load balancing as a service
Ramping up to 15K hypervisors with 100K VMs by 2015

Conclusions
• The CERN computer centre is expanding
• We're in the process of refurbishing the tools we use to manage the centre, based on OpenStack for IaaS and Puppet for configuration management
• Production at CERN in the next few months on Folsom
  – Gradual migration of all our services
• Community is key to shared success
  – CERN contributes and benefits

BACKUP SLIDES

Training and Support
• Buy the book rather than rely on guru mentoring
• Follow the mailing lists to learn
• Newcomers are rapidly productive (and often know more than us)
• Community and enterprise support mean we're not on our own

Staff Motivation
• Skills remain valuable outside CERN when an engineer's contract ends

When communities combine…
• OpenStack's many components and options make configuration complex out of the box
• The Puppet Forge module from Puppet Labs does our configuration
• The Foreman adds OpenStack provisioning, taking a user from the kiosk to a configured machine in 15 minutes

Foreman to manage Puppetized VM

Active Directory Integration
• CERN's Active Directory
  – Unified identity management across the site
  – 44,000 users
  – 29,000 groups
  – 200 arrivals/departures per month
• Full integration with Active Directory via LDAP
  – Uses the OpenLDAP backend with some particular configuration settings
  – Aim for minimal changes to Active Directory
  – 7 patches submitted around hard-coded values and additional filtering
• Now in use in our pre-production instance
  – Map project roles (admins, members) to groups
  – Documentation in the OpenStack wiki

What are we missing (or haven't found yet)?
• Best practice for
  – Monitoring and KPIs as part of core functionality
  – Guest disaster recovery
  – Migration between versions of OpenStack
• Roles within multi-user projects
  – VM owners allowed to manage their own resources (start/stop/delete)
  – Project admins allowed to manage all resources
  – Other members should not have elevated rights over other members' VMs
• Global quota management for a non-elastic private cloud
  – Manage resource prioritisation and allocation centrally
  – Capacity management / utilisation for planning

Opportunistic Clouds in online experiment farms
• The CERN experiments have farms of 1000s of Linux servers close to the detectors that filter the 1 PB/s down to the 6 GB/s recorded to tape
• When the accelerator is not running, these machines are currently idle
  – The accelerator has regular maintenance slots of several days
  – Long Shutdown due from March 2013 to November 2014
• One of the experiments is deploying OpenStack on its farm
  – Simulation (low I/O, high CPU)
  – Analysis (high I/O, high CPU, high network)

New architecture data flows
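The backup-slide wish-list item "Roles within multi-user projects" describes an authorisation rule precise enough to write down, and the Active Directory slide adds the idea of mapping project roles (admins, members) to groups. The following is a minimal Python sketch of that rule under stated assumptions; it is not Keystone's or Nova's actual policy mechanism. The names `Role`, `VM`, `role_from_groups` and `may_manage`, the group names such as `proj-admins`, and the per-VM `owner` field are all hypothetical illustrations.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Set


class Role(Enum):
    ADMIN = "admin"
    MEMBER = "member"


@dataclass(frozen=True)
class VM:
    name: str
    owner: str  # hypothetical: the user who created the VM


# The lifecycle actions named on the slide (start/stop/delete).
ACTIONS = {"start", "stop", "delete"}


def role_from_groups(groups: Set[str], admin_group: str,
                     member_group: str) -> Optional[Role]:
    """Map a user's directory groups to a project role; admin wins over member."""
    if admin_group in groups:
        return Role.ADMIN
    if member_group in groups:
        return Role.MEMBER
    return None  # not part of the project at all


def may_manage(user: str, groups: Set[str], vm: VM, action: str,
               admin_group: str, member_group: str) -> bool:
    """Decide whether `user` may perform `action` on `vm` within one project."""
    if action not in ACTIONS:
        raise ValueError(f"unknown action: {action!r}")
    role = role_from_groups(groups, admin_group, member_group)
    if role is Role.ADMIN:
        return True  # project admins may manage all resources
    if role is Role.MEMBER:
        return vm.owner == user  # members may manage only the VMs they own
    return False  # non-members get no rights


if __name__ == "__main__":
    vm = VM("vm0042.cern.ch", owner="alice")
    print(may_manage("alice", {"proj-members"}, vm, "stop",
                     "proj-admins", "proj-members"))    # prints True
    print(may_manage("bob", {"proj-members"}, vm, "delete",
                     "proj-admins", "proj-members"))    # prints False
    print(may_manage("carol", {"proj-admins"}, vm, "delete",
                     "proj-admins", "proj-members"))    # prints True
```

Checking the admin group first means a user who belongs to both groups gets admin rights, while anyone in neither group is refused outright; an unknown action raises an error rather than being silently denied.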