Multicore/Manycore Processors - St. Francis Xavier University

advertisement

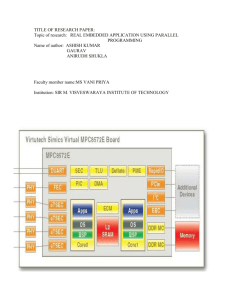

Joram Benham April 2, 2012 Introduction Motivation Multicore Processors Overview, CELL Advantages of CMPs Throughput, Latency Challenges Future of Multicore Multicore processors Several/many cores on the same chip Dual/quad core – two/four cores AKA Chip-multiprocessors (CMPs) Instruction-Level Parallelism Pipelining – split execution into stages Superscalar – issue multiple instruction each cycle Out-of-order execution Branch prediction Take advantage of implicit program parallelism – instruction independence 1. 2. 3. Limited amount of implicit parallelism in sequentially designed/coded programs Circuitry for pipelining becomes complex after 10-20 stages Power – circuitry for ILP exploitation results in exponentially more power being used Intel processor power over time. Power in Watts on y-axis, years on x-axis. AKA Multicore/Manycore Processor Getting harder to build better uniprocessors CMPs are less difficult Can reuse/modify old designs Add modified copies to same chip Requires a paradigm shift From Von Neumann model to parallel programming model Thread-level parallelism + instruction-level parallelism CELL CMP – heterogeneous Developed by Sony, Toshiba, IBM Built for Sony’s PlayStation 3 Contains 9 cores 1 Power Processing Element (PPE) 8 Synergistic Processing Elements (SPEs) Throughput, Latency Web-server throughput Handle many independent service requests Collections of uniprocessor servers used Then, multiprocessor systems CMP approach Use less power for communication Reducing clock-speeds General rule: “The simpler the pipeline, the lower the power.” Simple cores – less power used Less speed, but more cores available to handle requests Comparison of power usage by equivalent narrow issue/in-order processors, and wide-issue/out-of-order processors on throughput-oriented software. Server applications: High thread-level parallelism Lower instruction-level parallelism, high cache miss rates Results in idle processor time on uniprocessors Hardware multithreading Coarse-grained: stalls trigger switches Fine-grained: switch threads continuously Simultaneous: Run multiple threads using superscalar issuing More cores = higher total hardware thread count What kind of cores should be added? Fewer larger, more complex cores ▪ Individual threads complete faster Many smaller, simpler cores ▪ Slightly slower – but more cores means more threads, and higher throughput Latency is more important in some programs E.g. Desktop applications, compilation CMPs are closer together on chip – less communication time Two ways CMPs help with latency Parallelize the code for responsive applications Run sequential applications on their own hardware threads – no competition between threads Power and Temperature, Cache Coherence, Memory Access, Paradigm Shift, Starvation In theory: two cores on the same chip = twice as much power + lots of heat Solutions: Reduce core clock speeds Implement a power control unit CELL chip-multiprocessor thermal diagram. Multiple cores, independent local caches Load same block of main memory into cache – may result in data inconsistency Cache coherence schemes Snooping: Watch the communication bus Directory-based: Keep track of which memory locations are being shared in multiple caches We need more memory to share among multicore processors 64-bit processors – helps address the issue: more addressable memory Useless if we cannot access it quickly Disk speed slows everyone down “To use multicore, you really have to use multiple threads. If you know how to do it, it's not bad. But the first time you do it there are lots of ways to shoot yourself in the foot. The bugs you introduce with multithreading are so much harder to find.” Have to educate programmers Convince them to make their programs concurrent Sequential programs will not use all cores Some cores “starve” Shared cache usage One core evicts another core’s data Other core has to keep accessing main memory Multicore, Manycore, Hybrids Instruction-level parallelism reaching its limits CMPs help with throughput and latency Two types of CMP will emerge “Manycore”: large number of small, simple cores, targets at servers/throughput “Multicore”: fewer, faster superscalar cores for very latency sensitive programs “Hybrids”: heterogeneous combinations Hammond, L., Laudon, J., Olukotun, K. Chip Multiprocessor Architecture: Techniques to Improve Throughput and Latency. Morgan and Claypool, 2007. Hennessy, J. L., Patterson, D. A. Computer Architecture: A Quantitative Approach. San Francisco: Morgan Kaufmann Publishers, 2007. Mashiyat, A. S. “Multi/Many Core Systems.” St. Francis Xavier University course presentation, 2011. Schauer, Bryan. “Multicore Processors – A Necessity.” Proquest Discovery Guides. September 2008. Web. Accessed April 2 2012. <http://www.csa.com/discoveryguides/multicore/review.pdf>