Dr. David Treder – Data Analysis Utilizing the New

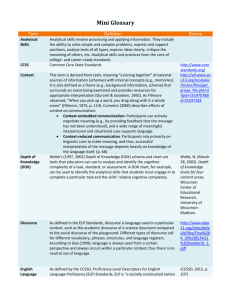

Michigan’s Accountability System

What Does It Take To Be A Green School?

There were 98 “Green” Schools. Or is it 96?
The Downloadable Database now has two new green schools:
-- 9th Grade Transition & Alternative Learning Center, Inkster
-- Clare-Gladwin Area School
So, we’ll go with 96 Green Schools.

Couple Quick Factoids
• Only 7 had a Top-to-Bottom Ranking
• Only 13 had AMO targets

96 Green Schools
28 - Had ZERO possible points (brand new in 2012-13, so no proficiency data, no SIP requirement, no REP data requirement)
96 – 28 = 68

96 Green Schools
24 - Opened or reconfigured in Fall 2011, so their AMO was zero (AMOs were based on 2011-12 FAY percent proficient; these schools had none, so their AMO was actually set at the percent proficient in 2012-13)
68 – 24 = 44

Opened in 2011 (points awarded for zero proficient)

96 Green Schools
29 - Fewer than 30 FAY students (small schools)
- no Bottom 30% subgroups
44 – 29 = 15

AMO Targets for Small Schools
• A 95% confidence interval is used
• Compares school proficiency against a statewide target, based on the number of FAY students:
  > sqrt((p * (1 - p)) / n)
  p = percent proficient, n = number of FAY students
• The confidence interval is put around the TARGET, not the “sample” (Pct Prof of FAY kids).
• So, with 23 FAY kids:
  > 1.96 * sqrt((.14 * (1 - .14)) / 23) = 14.2%
  > Bottom end of the Math Target “Confidence Interval” = -0.2% (the 14% target minus 14.2%)
  > So, Zero Percent Proficient means the school made the target

96 Green Schools
6 - No AMO, with > 30 in 2012
- “Target” becomes the small school target
- Again, the Bottom 30% subgroup can be awarded 2 pts. with no students proficient
15 – 6 = 9
(Not sure why the Bottom 30% gets a green; a sample size of 12 is the point where zero kids will get you a green.)

96 Green Schools
2 - Closed in 2012, no data available
9 – 2 = 7

Closed 2012 (same for all subjects)

96 Green Schools
6 - AMO targets for Math & Reading only (no Bottom 30% in Science or Soc. Studies)
7 – 6 = 1

And the School Left Standing:
Webster Elementary, Livonia School District
Proficiency Rates
100% - Math, Soc. Studies, and Writing
99.6% - Reading
94% - Science (Bottom 30%, 82% proficient)

“To have ANY students proficient in the Bottom 30% subgroup, a school needs to have 70% proficient in the ALL Students group”
This is not exactly accurate…

Bottom 30 Math     3            3
Math Std Error     46           50
Math Z Score       -1.609       -1.694
Math Proficient    Provisional  Provisional

But Pretty Close …
Genesee ISD-Wide Data
Math
- 32,337 FAY/Valid Math Scores
- Bottom 30%, proficient
  2.8% growth
  1.0% provisional

And REALLY Close in Science
- 13,399 Valid/FAY Science Scores
- 0.1% (20 students) Bottom 30%, Provisionally Proficient
- all but 1 (from a gifted/magnet program) had a SEM of > 44 (13 had the maximum SEM of 50)

So, you want to be a Green School next year?
It’s best, this year, you look like this
Arvon Township School
21798 Skanee Road
Skanee, MI 49962
http://www.arvontownshipschool.org/about.php

Or This….

Accountability for Title III/Limited English Proficient (LEP) Students
-- AMAOs (Annual Measurable Achievement Objectives)

AMAO 1: Progress
The district must demonstrate that the percentage of its students making “progress” on the English Language Proficiency Assessment (ELPA) meets or exceeds the current year’s target.

Progress Calculation
The AMAO 1 progress calculation is done in the following manner for each district:
1. Calculate the number of EL students in the district.
2. Calculate the number of those students gaining at least 4 points* on the ELPA scale from the previous year (or a prior year).
3. Divide the result of (2) by the result of (1) to obtain the percentage of students in each district making sufficient progress over the past year.
4. A district is identified as making sufficient progress if the target percentage of its EL students gained a minimum of 4 points from the previous year’s (or a prior year’s) ELPA.
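The four steps of the progress calculation can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the state's actual procedure: the `ELStudent` record, the function names, the sample scores, and the 40% target are all hypothetical, and it assumes that students with no earlier ELPA score still count in the step 1 denominator.

```python
from dataclasses import dataclass
from typing import List, Optional

MIN_GAIN = 4  # ELPA scale points; the cutoff stated in the progress calculation


@dataclass
class ELStudent:
    """Hypothetical record for one EL student."""
    current_score: int
    prior_score: Optional[int]  # None when no earlier ELPA score exists (assumption)


def pct_making_progress(students: List[ELStudent]) -> float:
    """Steps 1-3: percent of a district's EL students gaining at least MIN_GAIN points."""
    total = len(students)  # step 1: number of EL students in the district
    if total == 0:
        return 0.0
    gained = sum(  # step 2: students gaining at least 4 points
        1
        for s in students
        if s.prior_score is not None and s.current_score - s.prior_score >= MIN_GAIN
    )
    return 100.0 * gained / total  # step 3: result of (2) divided by result of (1)


def meets_amao1(students: List[ELStudent], target_pct: float) -> bool:
    """Step 4: the district makes sufficient progress if it meets or exceeds the target."""
    return pct_making_progress(students) >= target_pct


# Made-up scores: gains of 5, 2, and 4 points, plus one student with no prior score.
district = [
    ELStudent(560, 555),
    ELStudent(590, 588),
    ELStudent(610, 606),
    ELStudent(600, None),
]
print(f"{pct_making_progress(district):.1f}% made progress")
print("Meets a hypothetical 40% target:", meets_amao1(district, 40.0))
```

Two of the four made-up students gain at least 4 points, so the sketch reports 50.0% and meets the hypothetical 40% target.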
*The rationale for choosing a cutoff of 4 points is that it is larger than the overall standard error of measurement along the ELPA scale, indicating that if a student made at least that much progress, it is attributable to student gains rather than measurement error.

Statewide Mean Scale Scores, and Mean Across-Year “Growth” from Year to Year

The first five columns are the State-Wide Mean Scale Score by year. The last four columns are across-year growth: Year 2 Grade - Year 1 (Grade - 1).

| GRADE | 2009 | 2010 | 2011 | 2012 | 2013 | 2009-2010 | 2010-2011 | 2011-2012 | 2012-2013 |
|---|---|---|---|---|---|---|---|---|---|
| K | 531 | 525 | 525 | 526 | 529 | | | | |
| 1 | 559 | 556 | 556 | 559 | 560 | 25 | 31 | 34 | 34 |
| 2 | 586 | 586 | 591 | 593 | 590 | 27 | 35 | 37 | 31 |
| 3 | 607 | 606 | 608 | 607 | 609 | 20 | 22 | 16 | 17 |
| 4 | 620 | 621 | 622 | 622 | 622 | 14 | 16 | 14 | 15 |
| 5 | 629 | 630 | 632 | 632 | 632 | 10 | 11 | 10 | 10 |
| 6 | 614 | 614 | 615 | 615 | 617 | -15 | -15 | -17 | -16 |
| 7 | 621 | 629 | 623 | 624 | 623 | 15 | 9 | 9 | 8 |
| 8 | 628 | 629 | 632 | 631 | 630 | 8 | 3 | 8 | 6 |
| 9 | 631 | 628 | 632 | 632 | 633 | 0 | 3 | 0 | 3 |
| 10 | 637 | 637 | 640 | 639 | 639 | 6 | 12 | 7 | 7 |
| 11 | 643 | 643 | 646 | 645 | 643 | 6 | 9 | 5 | 4 |
| 12 | 643 | 644 | 648 | 647 | 643 | 1 | 5 | 1 | -2 |

2006 Technical Manual, with Standard Setting
2006 Technical Manual, with Standard Setting, and 1st Years’ Scores
2010 Technical Manual: Mean Scale Scores
2013 Technical Manual: Mean Scale Scores

Purpose and Recommended Use

2007 Tech Manual:
Because test results provide students, teachers, and parents with an objective report of each student’s strengths and weaknesses in the English language skills of listening, speaking, reading, and writing, the MI-ELPA helps determine whether these students are making adequate progress toward English language proficiency. Year-to-year progress in language proficiency can also be measured and documented after the MI-ELPA vertical scale is successfully established.

2010 & 2013 Tech Manual:
This vertical development of the language tested allows the test to differentiate more finely among students at different stages of language acquisition. Because test results provide students, teachers, and parents with an objective report of each student’s strengths and weaknesses in the English language skills of speaking, listening, reading, writing, and comprehension, the ELPA helps determine whether these students are making adequate progress towards English language proficiency.

A number of extensive, well-documented Technical Manuals have been done … but I don’t see anything on how the vertical scale was “successfully established.”

DESCRIPTIVE STATISTICS AT ITEM AND TEST LEVELS
4.1 Item-Level Descriptive Statistics
4.2 Higher-Level Descriptive Statistics
RELIABILITY
5.1 Classical True Score Theory
    Internal Consistency Reliability
    Standard Error of Measurement (SEM) within CTT Framework
5.2 Conditional SEM within the IRT Framework
5.3 Inter-Rater Reliability
5.4 Quality (or Reliability) of Classification Decisions at Proficient Cuts
CALIBRATION, EQUATING, AND SCALING
6.1 The Unidimensional Rasch and Partial Credit Models
6.2 Calibration of the Spring 2013 ELPA
    Stability of Anchor Items
6.3 Scale Scores for the ELPA
6.4 Test Characteristic Curves for the ELPA by Assessment Level
IRT STATISTICS
7.1 Rasch Statistics
7.2 Evidence of Model Fit
7.3 Item Information
8.1 Validity Evidence of the ELPA Test Content
    Relation between ELPA and Michigan English Language Proficiency (ELP) Standards
    Validity of the Presentation of the Listening Stimulus
8.2 Validity Evidence of the Internal Structure of the ELPA
8.3 Validity Evidence of the External Structure of the ELPA
    Relation between the ELPA and the MEAP Subject Tests
    Relation between the ELPA and the MME Subject Tests
    Distribution of Student Classification across Performance Levels