Factor 2. Effective Administration

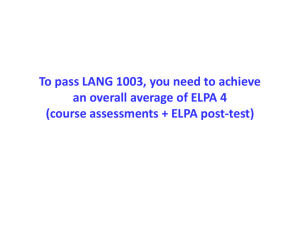

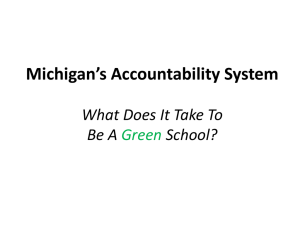

INVESTIGATING THE RELIABILITY AND VALIDITY OF HIGH-STAKES ESL TESTS
Dr. Paula Winke, Michigan State University, winke@msu.edu
March 22, 2013, TESOL Dallas

Background
Tests must be valid. Validity = evidence that a test actually measures what it is intended to measure.
[Image with caption: "I can fly planes!"]

Validity can be measured:
• Quantitatively (narrow): psychometric measures of reliability. Tests should be reliable and consistent.
• Qualitatively (broad): social, ethical, practical, and consequential considerations (e.g., Messick, 1980, 1989, 1994; Moss, 1998; McNamara & Roever, 2006; Ryan, 2002; Shohamy, 2001, 2006). Tests should be fair, meaningful, cost efficient, developmentally appropriate, and do no harm.

K-12, NCLB: ELPA in Michigan

Who determines reliability & validity?
• Test developers
• Researchers
• Test stakeholders
Validity is a multifaceted concept that includes value judgments and social consequences (Messick, 1980, 1989, 1994, 1995; McNamara & Roever, 2006).
“Justifying the validity of test use is the responsibility of all test users.” (Chapelle, 1999, p. 258)

Study 1: The ELPA in Michigan
The purpose of this study was to evaluate the perceived effectiveness of the English Language Proficiency Assessment (ELPA), which is used in the state of Michigan to fulfill the No Child Left Behind (NCLB) requirements. In particular, I wanted to look at the test validity of the ELPA.
Goal of this Study
Investigate the views of teachers and school administrators (educators) on the administration of the ELPA in Michigan.

ELPA in Michigan
• Administered to 70,000 English Language Learners (ELLs) in K-12 annually since spring of 2006
• Fulfillment of No Child Left Behind (NCLB) and Federal Title I and Title III requirements

The ELPA
Based on standards adopted by the State
• Subtests: Listening, Reading, Writing, Speaking, Comprehension
• Scoring: Basic, Intermediate, Proficient

Levels of the 2007 ELPA
• Level I for kindergarteners
• Level II for grades 1 and 2
• Level III for grades 3 through 5
• Level IV for grades 6 through 8
• Level V for grades 9 through 12

ELPA Technical Manual
The 2006 MI-ELPA Technical Manual claimed the ELPA is valid:
A. Item writers were trained
B. Items and test blueprints were reviewed by content experts
C. Item discrimination indices were calculated
D. Item response theory was used to measure item fit and correlations among items and test sections
The validity argument did not consider the test’s consequences, fairness, meaningfulness, or cost and efficiency, all of which are part of a test’s validation criteria (Linn et al., 1991).

Research Questions
1. What are educators’ opinions about the ELPA and its administration?
2. Do educators’ opinions vary according to the demographic or teaching environment in which the ELPA was administered?
267 Participants

| Educator Type | Number | Percentage |
| --- | --- | --- |
| Teachers of ESL & Language Arts | 166 | 62.2% |
| Teachers of English Literature & Other Subjects | 5 | 1.9% |
| School Principals & Administrators | 62 | 23.2% |
| Others | 30 | 11.2% |
| Not Identified | 4 | 1.5% |
| Total | 267 | 100% |

Materials
3-part online survey
• Part 1: 6 items on demographic information
• Part 2: 40 belief statements + comments
• Part 3: 5 open-ended questions

Procedure
• ELPA Testing Window: 3/19-4/27
• Online Survey Window: 3/29-5/20
• MITESOL Listserv email sent 3/29
• Names and emails culled off Web 3/30-4/7
• Reminder email to MITESOL and culled list sent 5/14

Analysis
• Quantitative: factor analysis of the data generated from the 40 belief items; derived factor scores and used those to see if the ELL demographic or teaching environment affected survey outcomes.
• Qualitative: deductive-analytic, qualitative analysis of answers to the open-ended questions and comments on the 40 closed items.

How Factor Analysis Works
[Diagram: Items 1 to 10, with the labels Factor 1, Factor 2, Speaking ability, and Listening ability]
• Factor analysis runs correlations among the answered items to see what items are related, that is, what questions tap into the same underlying construct.
• After items are clustered together with other items that were answered in the same way, we can look at the items in the cluster and label the cluster: what is the theme of the cluster?
• Items that don’t correlate with any larger factor (that don’t fit into a cluster) can be dropped from further analysis or discussion.

Results: 1. Factor Analysis

| Factor | Alpha | Mean | SE | SD |
| --- | --- | --- | --- | --- |
| 1. Reading and writing tests | 0.9 | 5.59 | 0.15 | 2.54 |
| 2. Effective administration | 0.88 | 7.34 | 0.15 | 2.77 |
| 3. Impacts | 0.88 | 5.43 | 0.15 | 2.61 |
| 4. Speaking test | 0.9 | 5.88 | 0.15 | 2.49 |
| 5. Listening test | 0.88 | 5.64 | 0.15 | 2.62 |

[Figure: ANOVA results, Factor 2 (effective administration) by ELL concentration (less than 5%, 5 to 25%, more than 25%); series: upper bound, mean, lower bound; labeled values: -0.97, -1.99, -2.14]

[Figure: ANOVA results, Factor 4 (speaking test) by ELL concentration (less than 5%, 5 to 25%, more than 25%); series: upper bound, mean, lower bound; labeled values: 0.19, -0.27, -0.71]

Qualitative Results
A. Perceptions on the ELPA subtests: Factor 1 (Reading and writing), Factor 4 (Speaking), Factor 5 (Listening)
B. Logistics: Factor 2 (Effective Administration)
C. Test impacts: Factor 3 (Impacts)

A. Perceptions on the ELPA subtests (Factors 1, 4 and 5)
65 educators wrote that the test was too difficult for lower grade levels. Out of the 145 responders who administered ELPA Level I, the test for kindergarteners, 30 commented that it was too hard or inappropriate. Most of these comments centered on the reading and writing portions of the test.

Example 1: Having given the ELPA grades K-4, I feel it is somewhat appropriate at levels 1-4. However I doubt many of our American, English speaking K students could do well on the K test. This test covered many literacy skills that are not part of our K curriculum. It was upsetting for many of my K students. The grade 1 test was very difficult for my non-readers who often just stopped working on it. I felt stopping was preferable to having them color in circles randomly.
Educator 130, ESL teacher, administered Levels I, II, & III.

Educators found the speaking subsection of the ELPA too subjective.
31 educators wrote that the rubrics did not contain example responses or did not provide enough levels to allow for an accurate differentiation among student abilities.
4 educators wrote that ELLs who were shy or did not know the test administrator did poorly on the speaking test.
15 educators mentioned problems with two-question prompts that were designed to elicit two-part responses; some learners only answered the second question, resulting in low scores.

Example 2: [I]t [the rubric] focuses on features of language that are not important and does not focus on language features that are important; some items test way too many features at a time; close examination of the rubric language makes it impossible to make a decision to give 2 or 3 points because it is too subjective and not quantifiable enough because too many features are being assessed at a time.
Educator 79, ESL teacher, administered Levels I, II, & III.

Example 3: For some this was adequate but for those students who are shy it didn't give an accurate measure at all of their true ability. I have a student who is an excellent English speaker but painfully shy and she scored primarily zeros because she was nervous and too shy to speak.
Educator 30, ESL teacher, administered Levels III & IV.

Example 4: When you say in succession: "What would you say to give your partner directions? What pictures would be fun to make?" The students are only going to answer the last question and 100% of mine did! So it is already half wrong. Too many questions had a two part answer but only one bubble to fill in how they did. Not very adequate to me!
Educator 133, ESL and bilingual teacher, administered Levels I & II.

30 of the 151 educators who administered lower levels of the ELPA commented that the listening section was unable to hold the students’ attention because it was too long and repetitive or had bland topics.
9 complained of the reliance on memory and reading skills to answer questions.

Example 5: Some of the stories were very long for kindergarten and first graders to sit through and then they were repeated! The kids didn't listen well the second time it was read and had trouble with the questions because of the length of the story.
Educator 176, Reading recovery and literacy coach, administered Levels I, II, & III.

Example 6: The kindergarten level is where I saw the most difference between actual ability and test results. Here students could give me the correct answer orally but mark the wrong answer in the test booklet.
Educator 80, ESL teacher, administered Levels I, II, & III.

B. Effective Administration (Factor 2)
• There was not adequate space or time for testing.
• Test materials did not arrive on time or were missing.
• Other national and state tests were being conducted at the same time, which overburdened the test administrators and the ELLs.

Example 7: There are only six teachers to test over 600 students in 30 schools. We had to [administer the ELPA] ourselves because it was during IOWA testing and that was where the focus was. Because we are itinerant in our district we were given whatever hallway or closet was available.
Educator 249, ESL teacher, administered all Levels.

Example 8: The CD's and cassettes were late. Several of the boxes of materials were also late and I spent a lot of time trying to track them. NONE of the boxes came to the ESL office as requested. It was difficult with four grades in a building to find the right time to pull students and not mess with classwork and schedules.
Educator 151, school administrator, administered all Levels.

C. Impacts (Factor 3)
49 educators wrote that the test did not directly impact the ESL curricular content.
86 educators wrote that the test reduced the quantity and quality of ESL services during all or part of the ELPA test window.
79 educators commented on the negative psychological impact on students.
7 out of the 79 wondered about how much money and resources the ELPA cost Michigan.

Example 9: I feel it makes those students stand out from the rest of the school because they have to be pulled from their classes. They thoroughly despise the test. One student told me and I quote, "I wish I wasn't Caldean* so I didn't have to take this" and another stated she would have her parents write her a note stating that she was not Hispanic (which she is).
Educator 71, Title 1 teacher, administered Levels I, II, III, & IV.
*Chaldeans speak Aramaic and are from the part of Iraq originally called Mesopotamia. As Christians, they are a minority among Iraqis. Many have immigrated to Michigan.

Example 10: This test took too long to administer and beginning students, who did not even understand the directions, were very frustrated and in some cases crying because they felt so incapable.
Educator 109, ELL and resource room teacher, administered Levels II & III.

Example 11: Just that the ELPA is a really bad idea and bad test... we spend the entire year building students confidence in using the English language, and the ELPA successfully spends one week destroying it again.
Educator 171, ESL teacher, administered Level IV.

Discussion
Research question 1: What are educators’ opinions about the ELPA and its administration?
Answers:
• Q1. 1. Difficult for lower grade students
• Q1. 2. Speaking tests are problematic for youngsters
• Q1. 3. Logistic problems with the administration

Q1. 1. Difficult for lower grade students
The educators may be right. What do we know about young language learners?
The attention span of young learners in the early years of schooling is short, 10 to 15 minutes. They are easily diverted and distracted.
They may drop out of a task when they find it difficult, though they are often willing to try a task in order to please the teacher (McKay, 2006, p. 6).
• Children under 8 are unable to use language to talk about language. (They have no metalanguage.) (McKay, 2006)
• Children do not develop the ability to read silently to themselves until between the ages of 7 and 9 (Puckett & Black, 2000). Before that, children are just starting to understand how writing and reading work.
• Children between 5 and 7 are only beginning to develop feelings of independence. They may become anxious when separated from familiar people and places. Having unfamiliar adults administer tests in unfamiliar settings might create a testing environment that is not “psychologically safe” (McKay, 2006) for children.
• Children between 5 and 7 are still developing their gross and fine motor skills (McKay, 2006). They may not be able to fill in bubble answer sheets.

Q1. 1. Difficult for lower grade students: Recommendations
• Test administrators should read directions aloud and be allowed to clarify them if necessary.
• Students should be allowed to give verbal answers in the listening and reading subsections.
• Better still would be a test that could be stopped if the educator felt it should stop.

Computer Adaptive Testing for kids?
May be extremely problematic…
http://www.upi.com/blog/2013/03/04/Seattle-teacher-revolt-other-districts-join-fight-against-high-stakes-tests/2741362401320/

Q1. 2. Speaking tests are unreliable: Recommendations
• Stop the standardized, high-stakes, oral proficiency testing of young children.
• If it must be done, use a puppet to reduce test anxiety and stranger-danger.
• Allow non-verbal responses to count as responses.

Q1. 3. Effective Administration
What do test logistics have to do with validity?
A test cannot be reliable if the physical context of the exam is not in order (Brown, 2004). Materials and equipment should be ready, audio should be clear, and the classroom should be quiet, well lit, and a comfortable temperature.
If a test is not reliable, it is not valid (Bachman, 1990; Brown, 2004; Chapelle, 1999; McNamara, 2000).

Q1. 3. Effective Administration: Recommendations
Test administrators should be required to flag tests given under unfavorable conditions and provide information to the testing agency about the situation. This could spur improvements in the conditions of future test administrations, especially if districts and states were held accountable for providing adequate evidence of fair test conditions.

Discussion
The unintended effect of reduction in ELL services raises the question of whether the ELPA program in Michigan is feasible (Nevo & Shohamy, 1986). Feasibility standards are intended to “ensure that a testing method will be realistic, prudent and frugal” (Shohamy, 2001, p. 152). It may be unrealistic to expect ESL teachers with limited resources to administer the existing ELPA within the given time frame.

Language tests can affect students’ psychological wellbeing by mirroring back to students a sense of how society views them and how they should view themselves (Crooks, 1998; Shohamy, 2000, 2001, 2006; Spratt, 1995; Taylor, 1994). The test does not recognize the ELLs’ heritage languages or cultural identities, perhaps communicating that these are unimportant to their education and thus to society (Schmidt, 2000).

Recommendation 1 of 4
Collect validity data anonymously from educators immediately after the test is administered. Educators can complete a survey about the test logistics, their perceptions of the test, and the test’s impacts on the curriculum and students’ psyches.
Sampling issues can be avoided if all educators are required to complete the surveys. If educators know in advance that they will be asked about the validity of the test, they may feel more ownership over the testing process.

Recommendation 2 of 4
Use an outside evaluator to construct and conduct the validity survey. States and for-profit test agencies like Harcourt Assessment, Pearson, or any testing body have an incentive to avoid criticizing the NCLB tests they manage. It is better to have a neutral party conduct the survey, summarize results and present them to the public, and perhaps suggest ways to improve the test (Ryan, 2002).

Recommendation 3 of 4
Use the validity data to form research questions. These questions should be answered by literature reviews and additional data collection. The answers will shed light on why certain aspects of the test went wrong or were viewed negatively by the educators.

Recommendation 4 of 4
Publish all validity evidence publicly. Presenting the data in open forums, on the web, at conferences, and in the test’s technical manual will encourage discussion about the uses of the test and the inferences that can be drawn from it. Disseminating validity data may also increase trust in the state and the corporate and non-profit testing agencies it hires.

The way forward in Michigan?
• The ELPA is not feasible.
• Michigan just joined WIDA.
• The ELPA is being given now (right now!) in Michigan, but this will be the last year of its administration.
• WIDA assessments do not give reading or writing tests to young children still working on pre-literacy skills. (Yeah!)
• Yet still, the ELL WIDA tests are not normed on like-aged children who are native speakers of English.
• There is much work to be done.

Questions? THANK YOU!
Dr. Paula Winke, Michigan State University, winke@msu.edu

Clarification on two kinds of scales, with correlated or uncorrelated indices!
http://www.stat-help.com/factor.pdf (This is the overview paper I gave out a few weeks ago.)
1. These three observed variables are effect indicators. They are appropriate for FA. Self-esteem causes the scores on the three indicator variable scales. This is modeled with factor analysis. We expect these three to correlate because they are all caused by self-esteem.
[Diagram: Self Esteem, with indicators "Worth", "Much proud", and "Good as others"]
2. These three indicator variables are what cause stress. Note that these three things may not correlate. One can use Principal Components Analysis to investigate this type of structure.
[Diagram: Stress, with indicators "Job probs", "Home probs", and "No coping skills"]

Factor Analysis How-to Papers
Costello, A. B., & Osborne, J. W. (2005). Best practices in exploratory factor analysis: Four recommendations for getting the most from your analysis. Practical Assessment, Research & Evaluation, 10(7). Available online: http://pareonline.net/getvn.asp?v=10&n=17
DiStefano, C., Zhu, M., & Mîndrilă, D. (2009). Understanding and using factor scores: Considerations for the applied researcher. Practical Assessment, Research & Evaluation, 14(20). Available online: http://pareonline.net/getvn.asp?v=14&n=20
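
A worked illustration may help with the point that effect indicators are expected to correlate, and with reading the Alpha column in the factor table. The sketch below assumes that column reports Cronbach's alpha, a standard internal-consistency statistic; the talk does not say how its values were computed or with which software. The function name `cronbach_alpha` and both data sets are hypothetical and are not the ELPA survey responses.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Return Cronbach's alpha for one cluster of survey items.

    `responses` holds one row per respondent; each row lists that
    respondent's score on every item in the cluster.
    """
    n_items = len(responses[0])
    if n_items < 2 or len(responses) < 2:
        raise ValueError("need at least 2 items and 2 respondents")
    # Transpose rows (respondents) into columns (items).
    item_columns = list(zip(*responses))
    sum_item_variances = sum(variance(col) for col in item_columns)
    # Variance of each respondent's total score across the items.
    total_variance = variance([sum(row) for row in responses])
    return (n_items / (n_items - 1)) * (1 - sum_item_variances / total_variance)


# Hypothetical data for illustration only.
# Items that rise and fall together, as effect indicators of one construct would.
effect_like = [[1, 1, 1], [2, 2, 2], [3, 3, 3], [4, 4, 4]]
# Items that are uncorrelated with each other.
causal_like = [[1, 1], [2, 1], [1, 2], [2, 2]]

print(round(cronbach_alpha(effect_like), 2))  # 1.0
print(round(cronbach_alpha(causal_like), 2))  # 0.0
```

Under that assumption, values such as the 0.88 to 0.9 reported in the factor table sit close to the perfectly consistent end of this range, while a cluster of uncorrelated items would score near zero.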