Instrumental Conditioning Foundations


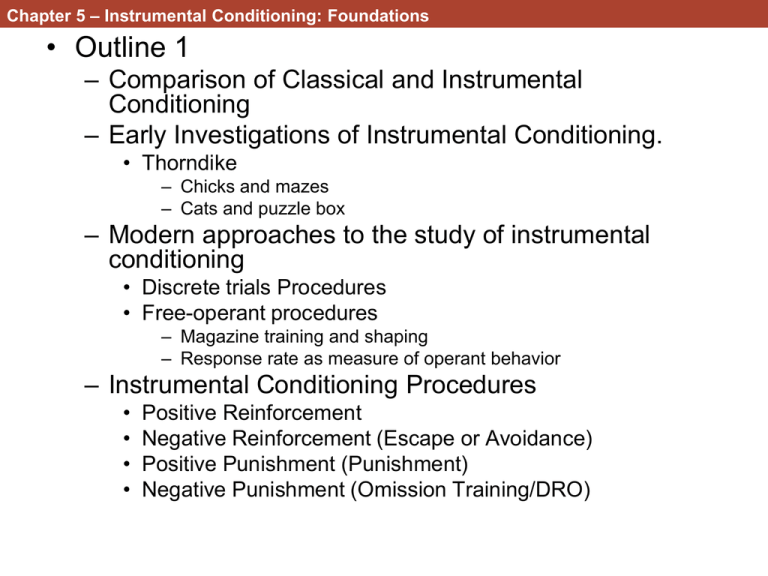

Chapter 5 – Instrumental Conditioning: Foundations

• Outline 1
  – Comparison of Classical and Instrumental Conditioning
  – Early Investigations of Instrumental Conditioning
    • Thorndike
      – Chicks and mazes
      – Cats and puzzle box
  – Modern approaches to the study of instrumental conditioning
    • Discrete-trial procedures
    • Free-operant procedures
      – Magazine training and shaping
      – Response rate as a measure of operant behavior
  – Instrumental Conditioning Procedures
    • Positive Reinforcement
    • Negative Reinforcement (Escape or Avoidance)
    • Positive Punishment (Punishment)
    • Negative Punishment (Omission Training/DRO)

• Comparison of Classical and Instrumental Conditioning
  – Classical = S-S relationship
    • Light-shock: elicits fear
    • Tone-food: elicits salivation
    • In classical conditioning there is no response requirement.
  – Instrumental = R-S relationship
    • We will refer to it as R-O
    • The behavior (response) is instrumental in producing the outcome
      – Press lever → get food
      – Pull lever → get money
    • In instrumental conditioning a particular response is required
• Keep in mind that classical and instrumental conditioning are approaches to understanding learning
  – They are not completely different kinds of learning.
  – Many learning situations could be described by either approach.
    • Child touches hot stove
      – CS, US, UR, CR?
      – Pavlovian = fear of the stove
      – Instrumental = less likely to approach the stove
    • Conditioned taste aversion
      – S-S = taste → LiCl
      – R-O = drink liquid → get sick (punished)

• Early Investigations of Instrumental Conditioning
• Edward Lee Thorndike (American)
  – Worked at the same time as Pavlov
    • Late 1800s to early 1900s
  – Interested in animal intelligence
• In the late 19th century, many people believed that animals reasoned like people do
  – Romanes
    • Stories of amazing abilities of animals
    • Biased reporting?
      – Report interesting behavior; ignore stupid behavior

• Thorndike (1898)
  – “Dogs get lost hundreds of times and no one ever notices it or sends an account of it to a scientific magazine, but let one find his way home from Brooklyn to Yonkers and the fact immediately becomes a circulating anecdote. Thousands of cats on thousands of occasions sit helplessly yowling, and no one takes thought of it or writes to his friend, the professor; but let one cat claw at the knob of a door supposedly as a signal to be let out, and straightway this cat becomes representative of the cat-mind in all the books…In short, the anecdotes give really the…supernormal psychology of animals.”
• Thorndike attempted to understand normal, or ordinary, animal intelligence.
  – Chicks in a maze
  – Cats in a box
    • Puzzle box video
• Thorndike tested many animals: chicks, cats, dogs, fish, and monkeys.
  – Little evidence for reasoning
    • Instead, learning seemed to result from trial and accidental success

• Modern approaches to the study of instrumental conditioning
  – Discrete-trial procedures
    • Like Thorndike's work, but with simpler mazes
      – Figure 5.3
        » Straight alleyway (running speed)
        » T-maze (errors)
      – Later
        » Radial arm maze
        » Morris water maze
    • Note: each run is separated by an intertrial interval
      – Just like in Pavlovian conditioning
    [Images: 8-arm maze; Morris water maze]
  – Free-operant procedures
    • There are no "trials"
    • The animal is allowed to behave freely
• Skinner box: an automated method for gathering data from animals
  [Images: Skinner; rat in operant chamber]
• Skinner used these boxes to study operant conditioning
  – Operant
    • Any response that "operated" on the environment
    • Defined in terms of the effect it has on the environment
      – Pressing a lever for food
        » It doesn't matter how the rat does it: right paw, left paw, tail
        » As long as it actuates the switch
      – Similar to opening a door
        » It doesn't matter which hand you use
        » Or your foot (when carrying groceries)
        » Just as long as the result is achieved
• Magazine training and shaping
  – First, the animal has to learn that food is delivered to the food cup (magazine training)
  – Then it is trained by successive approximations (shaping)
    • Shaping a rat
• Response rate as a measure of operant behavior
  – In a free-operant situation there are no measures such as percent correct or errors.
  – Skinner used response rate as a primary measure.
  – We will see later that different schedules of reinforcement affect response rate in different ways.

• Instrumental Conditioning Procedures
• First, some terminology
  – Positive
    • Behavior → stimulus applied
  – Negative
    • Behavior → stimulus removed
  – Reinforcement
    • Behavior increases
  – Punishment
    • Behavior decreases
• 2 x 2 table

| | Reinforcement (behavior increases) | Punishment (behavior decreases) |
|---|---|---|
| Positive (stimulus applied) | Positive reinforcement | Positive punishment |
| Negative (stimulus removed) | Negative reinforcement | Negative punishment |

• Application: Box 5.2 in the book
  – Woman with an intellectual disability
    • Head-banging behavior
    • Possibly maintained by attention (a reward)
  – Change the contingencies
    • Ignore head banging
    • Give social rewards when she is not head banging
  – Procedure?
    • Negative punishment
    • Differential Reinforcement of Other Behavior (DRO)
  – ABAB design
• Your book uses somewhat different terminology (book term = our term)
  – Positive reinforcement = positive reinforcement (same)
  – Escape or avoidance = negative reinforcement
  – Punishment = positive punishment
  – Omission training/DRO = negative punishment
• We will use my terminology.

• Outline 2
  – Fundamental Elements of Instrumental Conditioning
    • The Response
      – Behavioral variability vs. stereotypy
      – Relevance or belongingness
      – Behavior systems and constraints on instrumental conditioning
    • The Reinforcer
      – Quantity and quality of the reinforcer
      – Shifts in reinforcer quality or quantity
    • The Response-Outcome Relation
      – Temporal relation
      – Contingency
      – Skinner's superstition experiment
      – The triadic design
      – Learned helplessness hypothesis

• Behavioral variability vs. stereotypy
  – Thorndike emphasized that reinforcement increases the likelihood of the behavior being repeated in the future
    • Uniformity (stereotypy)
    • This is often true
  – You can, however, increase response variability by requiring it
    • Page & Neuringer (1985)
    • Two keys (50 trials per session)
      – Novel group
        » Peck 8 times
        » Do not repeat a pattern that was used in the last 50 trials
        » Example patterns: LRLRLRLR, RLRLRLRL, LLRRLLRR, LLLLRRRR
      – Control group
        » RF for any 8 pecks
        » It doesn't matter how they do it
    • Figure 5.8
  – Reward and originality (creativity)? Box 5.3

• Relevance or Belongingness
  – We have discussed this in classical conditioning
    • Bright-noisy-tasty water
    • Pigeons peck differently for water and grain
  – It has also been studied extensively in the instrumental literature
    • Originally noted by Thorndike
      – Cat in the puzzle box
      – Tried to train cats to yawn, or to scratch themselves, to escape
      – Did not go well
• The Brelands, "The Misbehavior of Organisms" (1961)
  – A play on Skinner's "The Behavior of Organisms" (1938)
  – Students of Skinner
    • Trained animals to do tricks as advertising gimmicks
  – Raccoon and coin(s)
    • Shaped to pick up a coin
    • Then to place it in a bank
    • Then 2 coins
  – Pig and wooden coin
• Instinctive drift
  – Arbitrarily established responses drift toward more innately organized behavior (instinct)
    • The arbitrary operant: place coin in bank
  – Instinctive drift: species-specific behaviors related to food consumption
    • Wash food (raccoon)
    • Root for food (pig)

• Behavior Systems and Constraints on Instrumental Conditioning
  – Behavior systems (Timberlake)
    • The response that occurs in a learning episode is related to the particular behavior system that is active at the time
    • If you are a hungry rat and food is the reward
      – Behaviors related to foraging will increase
    • If you are a male quail maintained on a light cycle that indicates mating season, and access to a female is being offered
      – Mating behaviors will be elicited
• Behavior systems, continued
  – The effectiveness of any procedure for increasing an instrumental response depends on the compatibility of that response with the behavior system currently activated
    • Rats pressing levers for food?
    • Pigeons pecking keys for food?
      – Very easy to train
    • Even works for fish
      – Easy
        » Bite a stimulus associated with a rival male
        » Swim through hoops for a stimulus associated with a female
      – Difficult
        » Bite a stimulus associated with access to a female
        » Swim through hoops for access to a rival male

• The Reinforcer
  – How do qualities of the reinforcer affect instrumental conditioning?
  – Quantity and quality of the reinforcer
    • Just like in Pavlovian conditioning
      – More intense US → better conditioning
    • Trosclair-Lasserre et al. (2008)
      – Taught a child with autism to press a button for social reward
        » Praise, hugs, stories
      – Durations of social reward
        » 10 s, 105 s, 120 s
      – Progressive-ratio schedule
    • Magnitude of RF and drug abstinence
      – Perhaps not surprising
        » The more you pay, the better addicts do.
• Shifts in Reinforcer Quality or Quantity
  – How well a reinforcer works depends on what subjects are used to receiving
  – Mellgren (1972)
    • Straight alleyway
      – Phase 1
        » Half found 2 pellets (low reward)
        » Half found 22 pellets (high reward)
      – Phase 2
        » Half of each group switched to the opposite condition
        » The other half stayed the same
    • 4 conditions
      – H-H: high-RF control
      – L-L: low-RF control
      – L-H: positive contrast
      – H-L: negative contrast
    • Results: Figure 5.10 [note: in the text, Domjan relabels the conditions as Small (S) and Large (L) rewards]

• Response-Outcome Relation
  – Temporal relation?
    • Contiguity
  – Causal relation?
    • Contingency
  – The two relations are independent of each other
    • Contiguous doesn't mean contingent
      – You wake up; the sun rises
    • Contingent isn't always contiguous
      – Submit tax returns; wait a few weeks for the money
• Effects of the temporal relationship
  – It is hard to get animals to respond if there is a long delay between response and reward
  – Delay experiments are tough to run with a free-operant procedure
    • Allow bar pressing during the "delay"?
      – Some bar presses are likely to fall close to RF
    • Disallow bar pressing after the initial bar press?
      – Delay RF?
  – In the study in the book (Fig. 5.11), each bar press produced RF after a fixed, specified delay
    • For some animals the delay was short: 2-4 s
    • For others it was long: 64 s
    • Example (16 s delay)
      – Bar press 1 at 1 s (RF1 at 17 s)
      – Bar press 2 at 3 s (RF2 at 19 s)
        » 14 s before RF1
      – Bar press 3 at 12 s (RF3 at 28 s)
        » 5 s before RF1
      – There will still be some "accidental" contiguity
    • The graph shows how responding is affected by the delays actually experienced
• Why are animals so sensitive to the delay between response and outcome?
  – Delay makes it difficult to determine which behavior actually caused the RF
    • Press lever (RF contingent, delivered 20 s later)
      – Scratch ear
      – Dig in bedding on the floor
      – Rear up
      – Clean face
        » Reward
    • All of the other behaviors are more contiguous with RF than lever pressing is
  – A marking procedure can help maintain responding over a long delay
    • Provide a light or click after the "target" response
      – Lever press → click → 20 s → food
    • Helps animals bridge the gap

• Response-Reinforcer Contingency
  – Skinner thought contiguity was more important than contingency
    • Superstition experiment
    • Superstition and bowling video from YouTube
      – Relevant content begins at 3:12
• Reinterpretation of the Superstition Experiment
  – Behavior systems again
    • Different kinds of responses occur when periodic RF is used
      – Focal search
        » Behaviors near the food cup as the time for RF approaches
        » "I know it's coming"
      – Post-food focal search
        » Again, activity near the cup
        » "Did I miss any?"
      – General search
        » Move away from the cup
        » This is probably when Skinner saw the turning and head-tossing behaviors
        » "I have to wait, might as well look around"
        » "I am also a bit frustrated"
    • There is evidence for these patterns of responding
      – Staddon and Simmelhag (1971)
    • There is also evidence that slightly different patterns emerge with food vs. water RF

• Effects of the Controllability of Reinforcers
  – Is having control a good thing?
  – We briefly mentioned the Brady "executive monkey" study earlier
    • That study implied that having operant control over outcomes could be bad for the animal
    • That study was confounded
  – Better evidence for the effects of control over outcomes comes from the learned helplessness literature
• Learned helplessness hypothesis
  – Animals learn that they have no control over the shock
  – This creates an expectation
    • They expect shock regardless of their behavior
  – Implications
    1. This expectation reduces motivation to make an instrumental response.
    2. Even if they do escape or avoid shock, it is harder for them to associate their behavior with the outcome.
      • Shock was independent of behavior in the past
      • Similar to the US-preexposure effect
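
The 2 x 2 terminology table and the book-to-lecture term mapping in these notes can be restated as a small lookup. This is a minimal sketch for study purposes, not anything from the book: the names `Change`, `Effect`, `classify`, and `BOOK_TO_LECTURE` are hypothetical. The function only names a cell of the table; it does not judge from data whether a behavior actually increased or decreased.

```python
from enum import Enum


class Change(Enum):
    """What the response does to the stimulus (the positive/negative axis)."""
    APPLIED = "stimulus applied"   # positive
    REMOVED = "stimulus removed"   # negative


class Effect(Enum):
    """What happens to the behavior (the reinforcement/punishment axis)."""
    INCREASES = "behavior increases"  # reinforcement
    DECREASES = "behavior decreases"  # punishment


def classify(change: Change, effect: Effect) -> str:
    """Return the lecture's name for one cell of the 2 x 2 table."""
    sign = "Positive" if change is Change.APPLIED else "Negative"
    kind = "Reinforcement" if effect is Effect.INCREASES else "Punishment"
    return f"{sign} {kind}"


# Book terminology -> lecture terminology, as listed in the notes.
BOOK_TO_LECTURE = {
    "Positive Reinforcement": "Positive Reinforcement",
    "Escape or Avoidance": "Negative Reinforcement",
    "Punishment": "Positive Punishment",
    "Omission Training/DRO": "Negative Punishment",
}


if __name__ == "__main__":
    # Box 5.2 as classified in the notes: attention is withheld (removed)
    # after head banging, and head banging decreases.
    print(classify(Change.REMOVED, Effect.DECREASES))  # Negative Punishment
```

Keeping the two axes as separate enums mirrors the point of the table: "positive/negative" describes the stimulus operation and "reinforcement/punishment" describes the effect on behavior, so neither word alone names a procedure.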
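
The Page & Neuringer (1985) variability requirement in these notes works like a simple algorithm: reinforce a trial only if its 8-peck left/right pattern differs from every pattern in the preceding 50 trials. The Python sketch below assumes that patterns are strings of `L` and `R`, and that every trial (reinforced or not) enters the 50-trial memory. That second point is an assumption, and the class and function names are hypothetical, not taken from the study.

```python
from collections import deque

PECKS_PER_TRIAL = 8   # each trial is 8 pecks on the left (L) or right (R) key
WINDOW_TRIALS = 50    # a pattern must differ from those of the last 50 trials


def _check(pattern: str) -> None:
    """Reject anything that is not exactly 8 pecks on keys L and R."""
    if len(pattern) != PECKS_PER_TRIAL or set(pattern) - {"L", "R"}:
        raise ValueError(f"expected {PECKS_PER_TRIAL} pecks of 'L'/'R', got {pattern!r}")


class NoveltyContingency:
    """Novel group: reinforce only patterns absent from the recent window."""

    def __init__(self, window: int = WINDOW_TRIALS) -> None:
        # deque(maxlen=...) discards the oldest pattern automatically
        self.recent = deque(maxlen=window)

    def trial(self, pattern: str) -> bool:
        _check(pattern)
        reinforced = pattern not in self.recent
        # Assumption: every trial enters the window, reinforced or not.
        self.recent.append(pattern)
        return reinforced


def control_trial(pattern: str) -> bool:
    """Control group: any 8 pecks earn RF, however they are distributed."""
    _check(pattern)
    return True


if __name__ == "__main__":
    bird = NoveltyContingency()
    for p in ["LRLRLRLR", "RLRLRLRL", "LRLRLRLR", "LLRRLLRR"]:
        print(p, bird.trial(p))
    # The third trial repeats the first pattern, so it is not reinforced.
```

Because each of the 8 pecks can go to either key, there are 2^8 = 256 possible patterns. A bird can therefore always find one that was not used in the last 50 trials, which is what makes the requirement learnable.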
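
The 16 s delay example under "Effects of the temporal relationship" can be checked with a few lines of arithmetic. In this sketch each press schedules its own reinforcer a fixed delay later. The "experienced" delay for a press is assumed to be the time until the next reinforcer actually delivered, whichever press scheduled it. That definition and the function names are illustrative assumptions, not the exact measure plotted in Fig. 5.11.

```python
DELAY_S = 16  # programmed response-reinforcer delay (seconds), as in the example


def reinforcer_times(presses: list[float], delay: float = DELAY_S) -> list[float]:
    """Each bar press schedules its own reinforcer exactly `delay` s later."""
    return [t + delay for t in presses]


def experienced_delays(presses: list[float], delay: float = DELAY_S) -> list[float]:
    """Time from each press to the next reinforcer delivered after it.

    Assumption: the next reinforcer counts even if an earlier press scheduled
    it, which is how "accidental" contiguity shortens the experienced delay.
    """
    deliveries = sorted(reinforcer_times(presses, delay))
    return [min(rf for rf in deliveries if rf >= t) - t for t in presses]


if __name__ == "__main__":
    presses = [1, 3, 12]  # bar presses at 1 s, 3 s, and 12 s
    print(reinforcer_times(presses))    # [17, 19, 28]
    print(experienced_delays(presses))  # [16, 14, 5]
```

Only the first press experiences the full 16 s. The presses at 3 s and 12 s are followed by RF1 after 14 s and 5 s, reproducing the numbers in the example.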