AAPS-3 - Pacific University


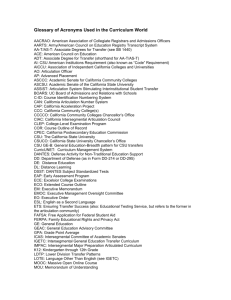

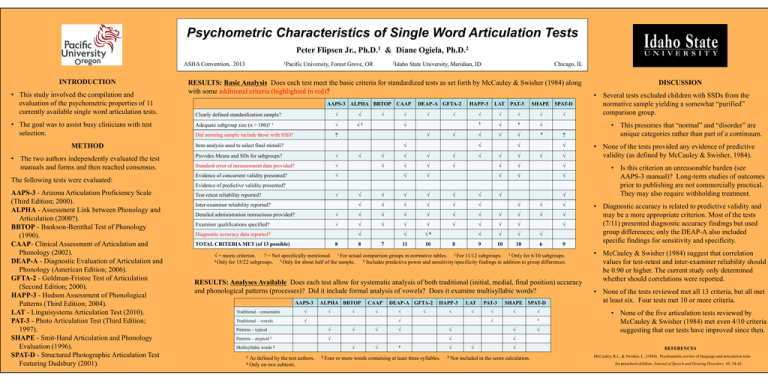

Psychometric Characteristics of Single Word Articulation Tests

Peter Flipsen Jr., Ph.D.1 & Diane Ogiela, Ph.D.2
1Pacific University, Forest Grove, OR
2Idaho State University, Meridian, ID
ASHA Convention, 2013, Chicago, IL

INTRODUCTION

• This study involved the compilation and evaluation of the psychometric properties of 11 currently available single word articulation tests.
• The goal was to assist busy clinicians with test selection.

METHOD

• The two authors independently evaluated the test manuals and forms and then reached consensus.
• The following tests were evaluated:
  AAPS-3 - Arizona Articulation Proficiency Scale (Third Edition; 2000).
  ALPHA - Assessment Link between Phonology and Articulation (2000?).
  BBTOP - Bankson-Bernthal Test of Phonology (1990).
  CAAP - Clinical Assessment of Articulation and Phonology (2002).
  DEAP-A - Diagnostic Evaluation of Articulation and Phonology (American Edition; 2006).
  GFTA-2 - Goldman-Fristoe Test of Articulation (Second Edition; 2000).
  HAPP-3 - Hodson Assessment of Phonological Patterns (Third Edition; 2004).
  LAT - Linguisystems Articulation Test (2010).
  PAT-3 - Photo Articulation Test (Third Edition; 1997).
  SHAPE - Smit-Hand Articulation and Phonology Evaluation (1996).
  SPAT-D - Structured Photographic Articulation Test Featuring Dudsbury (2001).

RESULTS: Basic Analysis

Does each test meet the basic criteria for standardized tests as set forth by McCauley & Swisher (1984) along with some additional criteria (highlighted in red)?

Criteria (table rows):
• Clearly defined standardization sample?
• Adequate subgroup size (n > 100)? 1
• Did norming sample include those with SSD?
• Item analysis used to select final stimuli?
• Provides means and SDs for subgroups?
• Standard error of measurement data provided?
• Evidence of concurrent validity presented?
• Evidence of predictive validity presented?
• Test-retest reliability reported?
• Inter-examiner reliability reported?
• Detailed administration instructions provided?
• Examiner qualifications specified?
• Diagnostic accuracy data reported?

Tests (table columns): AAPS-3, ALPHA, BBTOP, CAAP, DEAP-A, GFTA-2, HAPP-3, LAT, PAT-3, SHAPE, SPAT-D

[Table: √ and ? entries for each criterion by test; the cell-by-cell layout is not recoverable from the extracted text.]

TOTAL CRITERIA MET (of 13 possible): the eleven totals, in descending order, were 11, 10, 10, 10, 9, 9, 8, 8, 8, 7, and 6.

√ = meets criterion. ? = Not specifically mentioned.
1 For actual comparison groups in normative tables.
2 For 11/12 subgroups.
3 Only for 6/10 subgroups.
4 Only for 15/22 subgroups.
5 Only for about half of the sample.
6 Includes predictive power and sensitivity/specificity findings in addition to group differences.

RESULTS: Analyses Available

Does each test allow for systematic analysis of both traditional (initial, medial, final position) accuracy and phonological patterns (processes)? Did it include formal analysis of vowels? Does it examine multisyllabic words?

Analyses (table rows):
• Traditional – consonants
• Traditional – vowels
• Patterns – typical 1
• Patterns – atypical 1
• Multisyllabic words 2

Tests (table columns): AAPS-3, ALPHA, BBTOP, CAAP, DEAP-A, GFTA-2, HAPP-3, LAT, PAT-3, SHAPE, SPAT-D

[Table: √ entries for each analysis by test; the cell-by-cell layout is not recoverable from the extracted text.]

1 As defined by the test authors.
2 Four or more words containing at least three syllables.
3 Not included in the score calculation.
4 Only on two subtests.

DISCUSSION

• Several tests excluded children with SSDs from the normative sample, yielding a somewhat “purified” comparison group.
• This presumes that “normal” and “disorder” are unique categories rather than part of a continuum.
• None of the tests provided any evidence of predictive validity (as defined by McCauley & Swisher, 1984).
• Is this criterion an unreasonable burden (see AAPS-3 manual)? Long-term studies of outcomes prior to publishing are not commercially practical. They may also require withholding treatment.
• Diagnostic accuracy is related to predictive validity and may be a more appropriate criterion. Most of the tests (7/11) presented diagnostic accuracy findings but used group differences; only the DEAP-A also included specific findings for sensitivity and specificity.
• McCauley & Swisher (1984) suggest that correlation values for test-retest and inter-examiner reliability should be 0.90 or higher. The current study only determined whether such correlations were reported.
• None of the tests reviewed met all 13 criteria, but all met at least six. Four tests met 10 or more criteria.
• None of the five articulation tests reviewed by McCauley & Swisher (1984) met even 4/10 criteria, suggesting that our tests have improved since then.

REFERENCES

McCauley, R. J., & Swisher, L. (1984). Psychometric review of language and articulation tests for preschool children. Journal of Speech and Hearing Disorders, 49, 34-42.
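
SUPPLEMENTARY ILLUSTRATION

The discussion distinguishes diagnostic accuracy reported as group differences from accuracy reported as sensitivity and specificity. As a rough sketch of the latter, the Python snippet below computes both values from a 2x2 classification table. The function name `diagnostic_accuracy` and all counts are hypothetical and chosen only for illustration; they are not taken from any test manual or from this study.

```python
def diagnostic_accuracy(true_pos, false_neg, true_neg, false_pos):
    """Return (sensitivity, specificity) from 2x2 classification counts.

    sensitivity: proportion of children with a disorder whom the test flags.
    specificity: proportion of children without a disorder whom the test clears.
    """
    disordered = true_pos + false_neg
    typical = true_neg + false_pos
    if disordered == 0 or typical == 0:
        raise ValueError("each group needs at least one child")
    return true_pos / disordered, true_neg / typical


# Hypothetical counts: 50 children with SSD, 100 without.
sens, spec = diagnostic_accuracy(true_pos=45, false_neg=5,
                                 true_neg=90, false_pos=10)
print(f"sensitivity = {sens:.2f}, specificity = {spec:.2f}")
```

With these made-up counts, the test identifies 45 of 50 children with SSD and clears 90 of 100 children without, which is information that a significant group difference alone does not convey.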