Introduction to Terrier

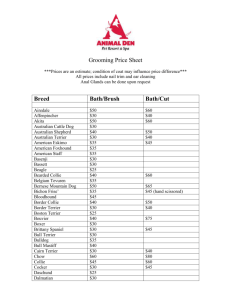

Existing IR Platforms
• Academic:
  – Terrier
  – Zettair
  – Lemur/Indri
• Non-Academic:
  – Lucene/Nutch
  – Xapian

Terrier:
• Flexible and ideal for experimentation
• Rapid development of new research ideas
• Not just one model
  – Implements various modern, state-of-the-art IR models
• Proven retrieval effectiveness

Open Source Terrier
• Why open source? Terrier is a community project:
  – you use it and benefit
  – you contribute
  – everyone benefits
• Cross-platform: developed in Java
  – runs on Windows, *nix and Mac OS X
• Indexing and querying APIs
  – Easy to extend and adapt for new applications
  – Modular architecture
  – Simple to start working with
  – Many configuration options

What's in Terrier
• Scripts to start Terrier
• Compiled Java files
• Documentation
• Configuration files
• Stopwords and tests
• Java source
• index/ and results/ directories

Compiling Terrier
• To use your own code with Terrier, add your jar file or class folder to the CLASSPATH environment variable
• If you need to alter Terrier's own code, you have to recompile it with one of:
  – bin/compile.sh
  – bin/compile.bat
  – ant
• In Eclipse, you will need the Antlr plugin to compile

File -> New -> Project

Using Terrier
Terrier comes with three applications:
• Desktop Terrier
• Interactive Terrier
• Batch (TREC) Terrier

Desktop Terrier
• SimpleFileCollection
  – Simple text, PDF, MS Word, MS PowerPoint, MS Excel, HTML, XML, XHTML, etc.
• Java Swing GUI
• Comes with Terrier

Interactive Terrier

Batch (TREC) Terrier: Indexing, Retrieval, Evaluation
• ./bin/trec_terrier.sh -i
  – add -H for Hadoop indexing
• ./bin/trec_terrier.sh -r -Dtrec.model=PL2
  – -Dtrec.model selects the weighting model; classical models such as TF-IDF and BM25 are also available
  – add -q for query expansion (Bo1, Bo2 and KL)
• ./bin/trec_terrier.sh -e
  – reports measures such as P@20, P@30, etc.

Indexing

Term Pipelining
• In Terrier, each token from a document is passed through the Term Pipeline
• Each Term Pipeline stage can either:
  – Transform the term, e.g. stemming, such as Porter's English stemmer
  – Drop the term, e.g. stopword removal

Example
• Original text
• Tokenisation
• Stopword removal
• Stemming

Indexing API

Retrieval in IR

Scoring Documents
• A simple model for scoring documents against a query is TF.IDF:
• Another is Language Modelling (Hiemstra): the weight w(t,d) of query term t in document d reflects how much the term's distribution in d differs from its distribution in the whole collection

Weighting Models in Terrier
• Terrier provides many state-of-the-art document weighting models:
  – TF-IDF (with length normalisation, aka BM11)
  – Lemur's TF-IDF
  – Okapi BM25
  – Hiemstra and Ponte & Croft language models
• All are in org.terrier.matching.models

Score Documents
• TAAT: Term-At-A-Time
• DAAT: Document-At-A-Time, advantageous for retrieving from large indices

Query Expansion
• Why use query expansion?
  – To achieve better retrieval performance
• How to use it?
  – Add the -q parameter to your command
• Terrier's QE is a pseudo-relevance feedback technique that:
  – Expands the query by adding new query terms
  – Re-weights the query terms (KL, Bo1, Bo2)

Expanding the Query
• The added query terms are meant to be related to the topic
• QE brings more information to the query
• It helps to retrieve more relevant documents, but it can also bring in noise

Extending Retrieval Use Cases: Document Priors
• Assumption: you have a file containing PageRank scores for each document in the collection
• Integrate them with the retrieval score as:
• How: use a DocumentScoreModifier
  – Modifies retrieval scores at the end of matching

Evaluation
• How well did the system perform?
• List the qrels file containing the relevance assessments in etc/trec.qrels
• Evaluate all the result files in the var/results directory
• The resulting .eval file contains the usual evaluation measures: P@10, P@20, etc.

Data Structures Builders
• Lexicon
• DocumentIndex
• CollectionStatistics
• DirectIndex
• InvertedIndex
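The Term Pipelining slide describes each token passing through a chain of stages, each of which either transforms the term or drops it. The sketch below models that chain with a hypothetical `Stage` interface (it is not Terrier's own pipeline API) and applies stopword removal before stemming, as in the Example slide. `SuffixStemStage` is a toy suffix stripper standing in for a real stemmer. It is not Porter's algorithm.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.Set;

// Hypothetical stage interface for illustration only; Terrier's own pipeline classes differ.
interface Stage {
    /** Returns the (possibly transformed) term, or null to drop it. */
    String process(String term);
}

// Drops any term found in the stopword set.
class StopwordStage implements Stage {
    private final Set<String> stopwords;

    StopwordStage(Set<String> stopwords) {
        this.stopwords = stopwords;
    }

    public String process(String term) {
        return stopwords.contains(term) ? null : term;
    }
}

// Toy suffix stripper standing in for a real stemmer; it is NOT Porter's algorithm.
class SuffixStemStage implements Stage {
    private static final String[] SUFFIXES = {"ing", "es", "s"};

    public String process(String term) {
        for (String suffix : SUFFIXES) {
            // Keep at least three characters of stem so short words are left alone.
            if (term.length() >= suffix.length() + 3 && term.endsWith(suffix)) {
                return term.substring(0, term.length() - suffix.length());
            }
        }
        return term;
    }
}

class TermPipelineDemo {
    // Tokenise on non-alphanumerics, then push each token through every stage in order.
    static List<String> run(String text, List<Stage> stages) {
        List<String> terms = new ArrayList<>();
        for (String token : text.toLowerCase(Locale.ROOT).split("[^a-z0-9]+")) {
            if (token.isEmpty()) {
                continue; // split() can yield a leading empty token
            }
            String term = token;
            for (Stage stage : stages) {
                term = stage.process(term);
                if (term == null) {
                    break; // a stage dropped the term; later stages never see it
                }
            }
            if (term != null) {
                terms.add(term);
            }
        }
        return terms;
    }

    public static void main(String[] args) {
        List<Stage> stages = List.of(
                new StopwordStage(Set.of("the", "of", "and", "a")),
                new SuffixStemStage());
        // prints [index, document, queri]
        System.out.println(run("The indexing of documents and queries", stages));
    }
}
```

Returning `null` to mean "drop" lets any stage end the chain early, so a dropped stopword never reaches the stemmer.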
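The Scoring Documents slide names TF.IDF, but its formula did not survive. As an assumption, this sketch uses one common plain variant, score(d, Q) = Σ over query terms t of tf(t, d) · ln(N / df(t)). It omits the length normalisation that the Weighting Models slide attributes to Terrier's TF-IDF. The class and method names are illustrative.

```java
import java.util.List;
import java.util.Map;

class TfIdfDemo {
    /**
     * Scores one document with a plain TF.IDF variant (an assumption):
     * sum over query terms of tf(t,d) * ln(N / df(t)).
     */
    static double score(List<String> query, Map<String, Integer> docTf,
                        Map<String, Integer> docFreq, int numDocs) {
        double score = 0.0;
        for (String term : query) {
            int tf = docTf.getOrDefault(term, 0);
            int df = docFreq.getOrDefault(term, 0);
            if (tf == 0 || df == 0) {
                continue; // term absent from the document or unknown to the collection
            }
            score += tf * Math.log((double) numDocs / df);
        }
        return score;
    }

    public static void main(String[] args) {
        Map<String, Integer> docFreq = Map.of("terrier", 10, "retrieval", 100);
        Map<String, Integer> docTf = Map.of("terrier", 3, "retrieval", 1);
        // 3 * ln(1000/10) + 1 * ln(1000/100): the rarer term "terrier" dominates.
        System.out.println(score(List.of("terrier", "retrieval"), docTf, docFreq, 1000));
    }
}
```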
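For the language-modelling approach attributed to Hiemstra on the Scoring Documents slide, here is a minimal sketch. It assumes the commonly cited form score(d, Q) = Σ ln(1 + λ·P(t|d) / ((1 − λ)·P(t|C))), with maximum-likelihood estimates P(t|d) = tf/|d| and P(t|C) = cf/T, where T is the number of tokens in the collection. A term then adds more to the score the more its rate in d exceeds its collection-wide rate. The smoothing weight λ = 0.15 used below is an arbitrary illustrative value, not a documented Terrier default.

```java
import java.util.List;
import java.util.Map;

class HiemstraLmDemo {
    /**
     * Language-modelling score in the style of Hiemstra (assumed form):
     * sum over query terms of ln(1 + (lambda * tf/docLen) / ((1 - lambda) * cf/T)).
     */
    static double score(List<String> query, Map<String, Integer> docTf, int docLen,
                        Map<String, Long> collectionFreq, long collectionTokens, double lambda) {
        double score = 0.0;
        for (String term : query) {
            int tf = docTf.getOrDefault(term, 0);
            long cf = collectionFreq.getOrDefault(term, 0L);
            if (tf == 0 || cf == 0) {
                continue; // absent from d: contributes ln(1) = 0
            }
            double inDoc = (double) tf / docLen;                   // P(t | d)
            double inCollection = (double) cf / collectionTokens;  // P(t | C)
            score += Math.log(1 + (lambda * inDoc) / ((1 - lambda) * inCollection));
        }
        return score;
    }

    public static void main(String[] args) {
        // lambda = 0.15 is an illustrative choice.
        System.out.println(score(List.of("terrier"), Map.of("terrier", 2), 100,
                Map.of("terrier", 10L), 100_000L, 0.15));
    }
}
```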
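Okapi BM25 from the Weighting Models slide can be sketched in one widely used form. It uses an IDF of ln((N − df + 0.5) / (df + 0.5)) and the illustrative parameters k1 = 1.2 and b = 0.75. Terrier's own BM25 class may differ in details such as log base and query-term weighting, so treat this as an illustration of the formula rather than of Terrier's code.

```java
class Bm25Demo {
    // Illustrative parameter values; k1 and b are tunable.
    static final double K1 = 1.2;
    static final double B = 0.75;

    /** IDF form used in many BM25 descriptions; negative when df > N/2. */
    static double idf(long numDocs, long df) {
        return Math.log((numDocs - df + 0.5) / (df + 0.5));
    }

    /** BM25 contribution of one query term to one document. */
    static double termScore(int tf, int docLen, double avgDocLen, long numDocs, long df) {
        // Length normalisation: a document longer than average needs more
        // occurrences of a term to reach the same score.
        double norm = K1 * (1 - B + B * docLen / avgDocLen);
        return idf(numDocs, df) * tf * (K1 + 1) / (tf + norm);
    }

    public static void main(String[] args) {
        // Same term statistics: an average-length document, then one three times longer.
        System.out.println(termScore(2, 100, 100.0, 1000, 10));
        System.out.println(termScore(2, 300, 100.0, 1000, 10));
    }
}
```

The parameter b controls how strongly document length is normalised. At b = 1 normalisation is full, which is usually described as the BM11 case mentioned on the slide, and at b = 0 length is ignored.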
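The Score Documents slide contrasts term-at-a-time (TAAT) and document-at-a-time (DAAT) evaluation. This sketch scores the same small in-memory posting lists both ways, using a simple weight × tf contribution per term as an assumed scoring function, and shows that both strategies reach the same totals by different traversal orders.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class TaatDaatDemo {
    /** One posting: a document id and the term's frequency in that document. */
    static final class Posting {
        final int docId;
        final int tf;

        Posting(int docId, int tf) {
            this.docId = docId;
            this.tf = tf;
        }
    }

    // Term-at-a-time: walk each posting list in full, adding partial scores
    // into one accumulator per document.
    static Map<Integer, Double> taat(List<List<Posting>> lists, double[] weights) {
        Map<Integer, Double> accumulators = new TreeMap<>();
        for (int i = 0; i < lists.size(); i++) {
            for (Posting p : lists.get(i)) {
                accumulators.merge(p.docId, weights[i] * p.tf, Double::sum);
            }
        }
        return accumulators;
    }

    // Document-at-a-time: one cursor per docId-sorted list; always move to the smallest
    // docId under any cursor and finish that document's score before moving on.
    static Map<Integer, Double> daat(List<List<Posting>> lists, double[] weights) {
        Map<Integer, Double> scores = new TreeMap<>();
        int[] cursor = new int[lists.size()];
        while (true) {
            int current = Integer.MAX_VALUE;
            for (int i = 0; i < lists.size(); i++) {
                if (cursor[i] < lists.get(i).size()) {
                    current = Math.min(current, lists.get(i).get(cursor[i]).docId);
                }
            }
            if (current == Integer.MAX_VALUE) {
                break; // every list is exhausted
            }
            double score = 0.0;
            for (int i = 0; i < lists.size(); i++) {
                List<Posting> list = lists.get(i);
                if (cursor[i] < list.size() && list.get(cursor[i]).docId == current) {
                    score += weights[i] * list.get(cursor[i]).tf;
                    cursor[i]++;
                }
            }
            // The score is final here, so an engine could push it straight into a top-k heap.
            scores.put(current, score);
        }
        return scores;
    }

    static List<List<Posting>> sampleLists() {
        List<List<Posting>> lists = new ArrayList<>();
        lists.add(List.of(new Posting(1, 2), new Posting(3, 1), new Posting(5, 4)));
        lists.add(List.of(new Posting(3, 2), new Posting(4, 1)));
        return lists;
    }

    public static void main(String[] args) {
        double[] weights = {1.0, 2.0};
        // both print {1=2.0, 3=5.0, 4=2.0, 5=4.0}
        System.out.println(taat(sampleLists(), weights));
        System.out.println(daat(sampleLists(), weights));
    }
}
```

TAAT keeps a partial score for every candidate document until all lists are read. DAAT holds only one cursor per query term and completes each document's score immediately, which is one reason it is often preferred for large indices and for top-k pruning.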
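The Query Expansion slides describe pseudo-relevance feedback that weights candidate terms from the top-ranked documents with Bo1, Bo2 or KL. This sketch ranks candidate terms using the Bo1 weight as it is usually stated: w(t) = tf_x · log2((1 + P_n) / P_n) + log2(1 + P_n), with P_n = F / N. Here tf_x is the term's frequency in the feedback documents, F its frequency in the whole collection, and N the number of documents. The method names and the data are hypothetical. A full implementation would also merge these weights with the original query terms rather than only returning new candidates.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class Bo1ExpansionDemo {
    static double log2(double x) {
        return Math.log(x) / Math.log(2);
    }

    /** Bo1 weight (as usually stated): tfx * log2((1 + Pn) / Pn) + log2(1 + Pn), Pn = F / N. */
    static double bo1(long tfx, long collectionFreq, long numDocs) {
        double pn = (double) collectionFreq / numDocs;
        return tfx * log2((1 + pn) / pn) + log2(1 + pn);
    }

    /** Ranks the terms of the feedback documents by Bo1 weight and keeps the best `count`. */
    static List<String> expansionTerms(List<Map<String, Integer>> feedbackDocs,
                                       Map<String, Long> collectionFreq,
                                       long numDocs, int count) {
        Map<String, Long> tfx = new HashMap<>();
        for (Map<String, Integer> doc : feedbackDocs) {
            doc.forEach((term, tf) -> tfx.merge(term, tf.longValue(), Long::sum));
        }
        Map<String, Double> weight = new HashMap<>();
        // Assumes collection statistics exist for every feedback term (F > 0).
        tfx.forEach((term, f) -> weight.put(term, bo1(f, collectionFreq.get(term), numDocs)));

        List<String> terms = new ArrayList<>(weight.keySet());
        // Highest weight first; ties broken alphabetically for a deterministic order.
        terms.sort(Comparator.comparing((String t) -> weight.get(t)).reversed()
                .thenComparing(Comparator.<String>naturalOrder()));
        return new ArrayList<>(terms.subList(0, Math.min(count, terms.size())));
    }

    public static void main(String[] args) {
        List<Map<String, Integer>> feedback = List.of(
                Map.of("terrier", 3, "java", 2, "the", 5),
                Map.of("terrier", 2, "index", 1, "the", 4));
        Map<String, Long> cf = Map.of("terrier", 50L, "java", 500L, "index", 300L, "the", 900_000L);
        // prints [terrier, java]
        System.out.println(expansionTerms(feedback, cf, 100_000L, 2));
    }
}
```

Although "the" is the most frequent term in the feedback documents, its very high collection frequency gives it the lowest Bo1 weight. This is how the weighting keeps common words from flooding the expanded query.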
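The combination formula on the Document Priors slide is not preserved. Assuming a log-linear combination score'(d) = score(d) + ω · ln(PageRank(d)) with a hypothetical weight ω, this sketch shows what a score modifier applied at the end of matching could do: adjust every score, then re-rank. It is a standalone illustration and does not implement Terrier's DocumentScoreModifier interface.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;

class DocumentPriorDemo {
    // Floor for documents with no (or zero) PageRank, so log() stays finite (an assumption).
    static final double MIN_PRIOR = 1e-9;

    /**
     * Adds omega * ln(prior(d)) to each retrieval score in place, then returns the
     * document ids re-ranked by the updated scores. The combination rule and omega
     * are hypothetical choices for illustration.
     */
    static List<Integer> applyPrior(int[] docIds, double[] scores,
                                    Map<Integer, Double> pageRank, double omega) {
        Integer[] order = new Integer[docIds.length];
        for (int i = 0; i < docIds.length; i++) {
            double prior = Math.max(pageRank.getOrDefault(docIds[i], MIN_PRIOR), MIN_PRIOR);
            scores[i] += omega * Math.log(prior);
            order[i] = i;
        }
        Arrays.sort(order, (a, b) -> Double.compare(scores[b], scores[a])); // descending
        List<Integer> ranking = new ArrayList<>();
        for (int i : order) {
            ranking.add(docIds[i]);
        }
        return ranking;
    }

    public static void main(String[] args) {
        int[] docIds = {10, 20, 30};
        double[] scores = {5.0, 4.8, 3.0};
        Map<Integer, Double> pageRank = Map.of(10, 0.001, 20, 0.5, 30, 0.2);
        // prints [20, 30, 10]: document 10's tiny PageRank pushes it from first to last
        System.out.println(applyPrior(docIds, scores, pageRank, 0.5));
    }
}
```

With ω = 0 the original ranking is unchanged, so ω is the knob that trades content relevance against link-based authority.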
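The Evaluation slide refers to a qrels file and measures such as P@10 and P@20. This sketch reads relevance judgements in the standard four-column TREC qrels layout (topic, iteration, docno, relevance) and computes precision at k and average precision for one ranked list, using the usual textbook definitions. It is not Terrier's evaluation code, and the docnos are made up.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Set;

class EvalDemo {
    /** Relevant docnos for one topic, from TREC qrels lines "topic iteration docno relevance". */
    static Set<String> relevantDocs(List<String> qrelsLines, String topic) {
        Set<String> relevant = new HashSet<>();
        for (String line : qrelsLines) {
            String[] f = line.trim().split("\\s+");
            if (f.length == 4 && f[0].equals(topic) && Integer.parseInt(f[3]) > 0) {
                relevant.add(f[2]);
            }
        }
        return relevant;
    }

    /** P@k: relevant documents in the top k, divided by k (even if fewer were retrieved). */
    static double precisionAt(int k, List<String> ranking, Set<String> relevant) {
        int hits = 0;
        for (int i = 0; i < Math.min(k, ranking.size()); i++) {
            if (relevant.contains(ranking.get(i))) {
                hits++;
            }
        }
        return (double) hits / k;
    }

    /** Average precision: sum of P@rank at each relevant hit, over the number of relevant docs. */
    static double averagePrecision(List<String> ranking, Set<String> relevant) {
        if (relevant.isEmpty()) {
            return 0.0;
        }
        int hits = 0;
        double sum = 0.0;
        for (int i = 0; i < ranking.size(); i++) {
            if (relevant.contains(ranking.get(i))) {
                hits++;
                sum += (double) hits / (i + 1);
            }
        }
        return sum / relevant.size();
    }

    public static void main(String[] args) {
        List<String> qrels = List.of("401 0 d1 1", "401 0 d3 2", "401 0 d4 0", "402 0 d9 1");
        Set<String> relevant = relevantDocs(qrels, "401");
        List<String> ranking = List.of("d1", "d2", "d3", "d4");
        System.out.println("P@2=" + precisionAt(2, ranking, relevant)
                + " AP=" + averagePrecision(ranking, relevant));
    }
}
```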
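The Data Structures Builders slides list the Lexicon, DocumentIndex, CollectionStatistics, DirectIndex and InvertedIndex. This toy in-memory index shows what each holds and how one pass over tokenised documents fills all of them. The field and class names are hypothetical and much simpler than Terrier's compressed on-disk structures.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class ToyIndex {
    /** Lexicon entry: term id, document frequency (df) and collection frequency (cf). */
    static final class LexiconEntry {
        final int termId;
        int df;
        long cf;

        LexiconEntry(int termId) {
            this.termId = termId;
        }
    }

    final Map<String, LexiconEntry> lexicon = new LinkedHashMap<>(); // lexicon: term -> stats
    final List<Integer> documentLengths = new ArrayList<>();         // document index: docId -> length
    final List<Map<Integer, Integer>> direct = new ArrayList<>();    // direct index: docId -> (termId -> tf)
    final List<List<int[]>> inverted = new ArrayList<>();            // inverted index: termId -> [docId, tf]
    long numTokens = 0;

    /** Indexes one already-tokenised document; docIds are assigned in arrival order. */
    void addDocument(List<String> terms) {
        int docId = documentLengths.size();
        Map<Integer, Integer> tfs = new TreeMap<>();
        for (String term : terms) {
            LexiconEntry entry = lexicon.get(term);
            if (entry == null) {
                entry = new LexiconEntry(lexicon.size());
                lexicon.put(term, entry);
                inverted.add(new ArrayList<>());
            }
            entry.cf++;
            if (tfs.merge(entry.termId, 1, Integer::sum) == 1) {
                entry.df++; // first occurrence of this term in this document
            }
        }
        for (Map.Entry<Integer, Integer> e : tfs.entrySet()) {
            // Documents arrive in docId order, so every posting list stays sorted by docId.
            inverted.get(e.getKey()).add(new int[] {docId, e.getValue()});
        }
        direct.add(tfs);
        documentLengths.add(terms.size());
        numTokens += terms.size();
    }

    // Collection statistics derived from the structures above.
    int numDocuments() {
        return documentLengths.size();
    }

    int numTerms() {
        return lexicon.size();
    }

    double averageDocumentLength() {
        return numDocuments() == 0 ? 0.0 : (double) numTokens / numDocuments();
    }

    public static void main(String[] args) {
        ToyIndex index = new ToyIndex();
        index.addDocument(List.of("terrier", "index", "terrier"));
        index.addDocument(List.of("index", "java"));
        LexiconEntry e = index.lexicon.get("index");
        // prints df=2 cf=2 avgLen=2.5
        System.out.println("df=" + e.df + " cf=" + e.cf + " avgLen=" + index.averageDocumentLength());
    }
}
```

The inverted index answers "which documents contain t?" at query time. The direct index answers "which terms does d contain?", which is what query expansion needs when it reads the feedback documents.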