Family Works HB and Results Based Accountability

advertisement

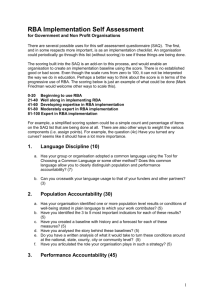

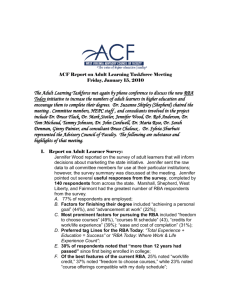

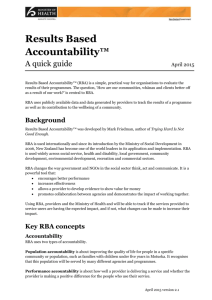

What did we do and how well did we do it?
A consistent approach to identifying and measuring outcomes

RBA: what it means to Family Works HB
• A systematic approach to planning and measuring outcomes
• A framework for monitoring and evaluating what we do
• A framework for reflection
• A framework for reporting
• A framework for planning
• A way to know that what we are doing is making a difference

How did we get started?

Evaluation cycle: monitoring, evaluation and learning
• Define and plan service delivery
• Do it: implement the service
• Analyse data and review service outcomes
• Reflect on outcomes and refine the service

Evaluation in action (action research model)
• Accountability: clients, governance, funders
• Communications, internal and external
• Fundraising

Process used to establish the evaluation framework
• Map the programme: "focus group" discussions with staff describing "what we do, how we do it and how we know when it's working"
• Build a programme logic derived from the programme map
• Identify the most useful performance measures based on an RBA approach

The journey
• In 2008 we developed our programme logic and embarked on RBA.
• In July 2009 we merged another organisation into Family Works HB, bringing 10 new staff, 2 new services and a number of new programmes.
• In 2010 we purchased a database and have since spent time ensuring a fit with our tools, processes, etc. designed around an RBA framework. This work is largely complete.
PROGRAMME LOGIC: a way of describing a programme for planning and evaluation
• INPUTS (resources) + ACTIVITIES (what you do: planned work, within your control) = OUTPUTS (results: direct products of the activities)
• OUTCOMES (intended results):
  Short-term: results we expect to see
  Medium term: results we want to see
  Longer term: results we hope to see
• Impacts
• Performance measures / indicators: how do you know this has happened?

How did we start: programme mapping (inputs, activities, outputs, outcomes)
Short-term outcomes (working with FW):
• Client participation rate
• Change achieved in issues in Goal Plan: client reviews
• Changes in Safety, Care & Stability: SW reviews
• Community participation reviewed
Medium-term outcomes (on closure with FW):
• Client participation rate
• Change achieved in issues in Goal Plan: intake vs closure
• Changes in Safety, Care & Stability: intake vs closure
• Client service evaluation, incl. PSNZ measure
• Community participation
Longer-term outcomes (6-12 months post?):
• Educational status of children
• Family PHO registration: going to GP as required
• Number of adverse contacts with agencies for client families (CYF, WINZ, Police: s.15 notifications; s.19 referrals)
• Families represent through PR

Performance measures / indicators
• Tracking inputs: costs, staff time, # clients, resources
• Quantity & quality of activities: level & quality of engagement with family; quality of the services; monitor & review intra/interagency processes
• Tracking outputs: assessment completed; contract agreed; goal plan in progress; # sessions; # reviews; case status on closure; safety assessment completed; community participation assessment completed

Performance measures (quantity and quality of effort and effect)
• Effort (input), quantity: How much service did we deliver?
• Effort (input), quality: How well did we deliver it?
• Effect (output), quantity: How much change / effect did we produce?
• Effect (output), quality: What quality of change / effect did we produce?

How much did we do?
• # clients; # sessions; # reviews; funding
• Case completions vs non-completions
• Referral sources; agencies involved
Inputs: effort
• Client demographics; presenting issues; match with priority populations
Background info
• Community profiles
• Events / issues in community

How well did we do it?
• Client service evaluation: satisfaction survey
• Social worker satisfaction survey [workloads, resources, support: supervision & direction etc.]
• Social work practice: progress with Goal Plan etc., % reviews at 6-8 sessions
• Closures / completion rates / reasons for non-completion
• Client participation rates / level and quality of engagement with clients

Outcomes (effect): Is anyone better off?
• Ratings show changes in issues listed in client contract (client assessed)
• Ratings show changes in child safety dimensions (SW assessed)
• Ratings show changes in community participation rates (SW assessed)
• Client service evaluation: satisfaction survey including PSNZ measure
• Client engagement & participation rate
After 6 & 12 months:
• Educational status of children: children enrolled and attending school
• Family PHO registration: going to GP as required
• Number of adverse contacts with agencies for client families (CYF, WINZ, Police: s.15 notifications; s.19 referrals)
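The three RBA performance questions above can be turned into simple calculations over case records. The sketch below is a hypothetical Python illustration, not Family Works HB's actual CMS reporting: the `Case` fields, the rating scale (assumed here to run so that a higher score is better) and the choice to count only completed, rated cases towards "better off" are all assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Case:
    """One client case record. All field names are hypothetical."""
    client_id: str
    sessions: int
    completed: bool                       # client plan completed vs. non-completion
    intake_rating: int                    # e.g. a Goal Plan issue rating at intake
    closure_rating: Optional[int] = None  # None if no rating was recorded at closure


def rba_measures(cases: list[Case]) -> dict[str, float]:
    """Group simple measures under the three RBA performance questions."""
    n = len(cases)
    completed = [c for c in cases if c.completed]
    # Only completed cases with a closure rating can show intake-vs-closure change.
    rated = [c for c in completed if c.closure_rating is not None]
    improved = [c for c in rated if c.closure_rating > c.intake_rating]
    return {
        # How much did we do?
        "clients": n,
        "sessions": sum(c.sessions for c in cases),
        # How well did we do it?
        "completion_rate": len(completed) / n if n else 0.0,
        # Is anyone better off? Assumes a higher rating means a better situation.
        "improved_rate": len(improved) / len(rated) if rated else 0.0,
    }


# Illustrative (made-up) records, not real Family Works HB data.
sample = [
    Case("A", sessions=8, completed=True, intake_rating=2, closure_rating=4),
    Case("B", sessions=6, completed=True, intake_rating=3, closure_rating=3),
    Case("C", sessions=2, completed=False, intake_rating=2),
    Case("D", sessions=10, completed=True, intake_rating=1, closure_rating=3),
]
measures = rba_measures(sample)
print(measures)
```

Keeping `completion_rate` separate from `improved_rate` mirrors the split between "How well did we do it?" and "Is anyone better off?": a service can complete most plans while few clients show measurable change between intake and closure.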
What have we achieved to date

                          2008/09   2009/10   2010/11   2011/12
Client plans completed      57%       45%       96%       95%
Client outcomes met         29%       55%       75%       85%

[Chart: Social Work results, 2008/09 to 2011/12, percentage of cases: client plans completed / not completed; child safety dimensions improved / same]

Our results: what happened
Review findings
• Tools were okay; some minor tweaks needed
• Staff were inconsistent in their use of tools and reporting
• Inadequate QA
Action plan
• Improve QA and monitor practice closely
• Policy changes and training around "forming a belief" and reporting accurately
• Infrastructure changes
Key enablers
• Outcomes reporting and RBA were supported by MSD
• My CEO and Executive wanted a tool to evaluate what we did
• Staff understand our need to report outcomes
• The CMS aided the process of implementation
Barriers / challenges
• New manager, new staff, new systems, new CMS
• Existing capability / capacity versus increasing demand and complexity
• Greater levels of quality assurance needed
• The CMS added an additional challenge
• Fear: a tool to measure individual performance?
• Fear of change
• Inconsistent practice
Lessons learnt
• Bring all staff on the journey; don't leave any behind
• Be clear with staff about our legal obligations for reporting to funders, our Board and clients
• One step at a time
• Don't be afraid of change
• This is about the service, not individuals
• Look for patterns: these tell a story on their own
• Be prepared to delve into the files for solutions and to get the story behind the data, e.g. with 25% disengagement, find out when in the process it happens and why, and take action
• Review data management tools and rating scales: are they doing what you want them to do?
What surprised me?
Our results. Not all our results are good, but we had a starting place from which to launch practice improvements.
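The lesson about 25% disengagement (find out when in the process and why) amounts to splitting non-completions by stage and by recorded reason. A minimal Python sketch, assuming hypothetical closure records that store the last session attended and a free-text reason; the stage cut-offs are illustrative only, loosely keyed to the review at 6-8 sessions listed under "How well did we do it?".

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Closure:
    """A closed case. Field names are hypothetical."""
    completed: bool
    last_session: int  # session number at which the case closed
    reason: str        # recorded reason for closure


def stage(session: int) -> str:
    """Bucket a session number into a stage of the process (illustrative cut-offs)."""
    if session <= 1:
        return "intake"
    if session < 6:
        return "before first review"
    if session <= 8:
        return "around review (6-8 sessions)"
    return "after review"


def disengagement_profile(closures: list[Closure]) -> tuple[float, Counter, Counter]:
    """Return the non-completion rate plus counts of non-completions by stage and by reason."""
    dropped = [c for c in closures if not c.completed]
    rate = len(dropped) / len(closures) if closures else 0.0
    by_stage = Counter(stage(c.last_session) for c in dropped)
    by_reason = Counter(c.reason for c in dropped)
    return rate, by_stage, by_reason


# Made-up example: 2 of 8 closures are non-completions (25%).
closures = [
    Closure(True, 10, "goals met"),
    Closure(True, 8, "goals met"),
    Closure(False, 1, "no contact after intake"),
    Closure(True, 12, "goals met"),
    Closure(False, 4, "moved out of area"),
    Closure(True, 9, "goals met"),
    Closure(True, 7, "goals met"),
    Closure(True, 11, "goals met"),
]
rate, by_stage, by_reason = disengagement_profile(closures)
print(f"non-completion rate: {rate:.0%}")
print(by_stage.most_common())
print(by_reason.most_common())
```

The counts only show where disengagement clusters; the story behind the data still has to come from the files.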
We need a framework on which to hang our results.
Client demographics remain stable: no surprises.

Issues and challenges
• Consistency, completeness, case reviews, quality assurance: protect against "garbage in, garbage out"
• All our work is on the CMS and reporting is easier
• A recent restructure and subsequent staff changes challenge our ability to meet demand
• Reviewing and updating our practice model: change raises levels of anxiety

Recap
• Programme mapping
• Vigilance around reporting
• A good QA system
• Integrity of data

Would I recommend RBA?
Most definitely. It provides structure; it asks, and allows us to answer, the key question: do we make a difference in the lives of those we work with? And if not, why not? It helps to justify our existence by providing an evidence-based framework.

Whole population
• RESULT or OUTCOME: a condition of well-being for children, adults, families or communities (e.g. children born healthy, children succeeding in school, safe communities, a clean environment, a prosperous economy)
• INDICATOR or BENCHMARK: a measure which helps quantify the achievement of a result (e.g. rate of low-birthweight babies, rate of high school graduation, crime rate, air quality index)
Client population
• PERFORMANCE MEASURE: a measure of how well a programme, agency or service system is working. Three types:
  1. How much did we do?
  2. How well did we do it?
  3. Is anyone better off? (= customer results)