ppt

advertisement

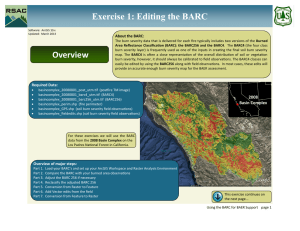

“National Knowledge Network and Grid: A DAE Perspective”
B.S. Jagadeesh
Computer Division, BARC
India and CERN: Visions for Future Collaboration
01 March 2011

Our approach to grids has been an evolutionary one

Anupam Supercomputers
• To achieve supercomputing speeds
  – At least 10 times faster than the sequential machines available in BARC
• To build a general-purpose parallel computer
  – Catering to a wide variety of problems
  – General-purpose compute nodes and interconnection network
• To keep the development cycle short
  – Use readily available, off-the-shelf components

[Figure: ANUPAM performance over the years, 1991 to 2012, in GFLOPS on a logarithmic scale, rising from 0.034 GFLOPS to 47,000 GFLOPS]

ANUPAM-ADHYA: 47 TeraFLOPS

A complete solution to scientific problems by exploiting parallelism for:
• Processing (parallelization of computation)
• I/O (parallel file system)
• Visualization (parallelized graphics pipeline / Tile Display Unit)

[Figure: Snapshots of the Tiled Image Viewer]

We now have:
• A large tiled display
• Rendering power through distributed rendering
• Scalability to very large numbers of pixels and polygons
• An attractive alternative to high-end graphics systems
• A deep and rich scientific visualization system

Post-tsunami: Nagapattinam, India (Lat: 10.7906° N, Lon: 79.8428° E)
This one-meter resolution image was taken by Space Imaging's IKONOS satellite on Dec. 29, 2004, just three days after the devastating tsunami hit.
1 m IKONOS image acquired: 29 December 2004. Credit: "Space Imaging"

So we need lots of resources, such as high-performance computers, visualization tools, data collection tools, sophisticated laboratory equipment, etc.
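The tiled display idea above, where one large image is split across many screens that are each driven by their own render node, comes down to mapping each tile to a pixel region of the full image. Below is a minimal Python sketch. It assumes a uniform grid of identical panels and ignores bezel compensation. The wall size and panel resolution are hypothetical, not the actual BARC Tile Display Unit configuration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Viewport:
    """Pixel region of the full image that one tile (render node) draws."""
    col: int
    row: int
    x0: int  # left edge, inclusive
    y0: int  # top edge, inclusive
    x1: int  # right edge, exclusive
    y1: int  # bottom edge, exclusive


def tile_viewports(image_w: int, image_h: int, cols: int, rows: int) -> list[Viewport]:
    """Split an image_w x image_h canvas into a cols x rows grid of viewports.

    Integer division spreads any remainder across tiles, so every pixel
    belongs to exactly one tile (assumes a uniform wall, no bezel correction).
    """
    tiles = []
    for row in range(rows):
        for col in range(cols):
            x0 = col * image_w // cols
            x1 = (col + 1) * image_w // cols
            y0 = row * image_h // rows
            y1 = (row + 1) * image_h // rows
            tiles.append(Viewport(col, row, x0, y0, x1, y1))
    return tiles


if __name__ == "__main__":
    # Hypothetical 4 x 3 wall of 1920 x 1080 panels.
    for vp in tile_viewports(4 * 1920, 3 * 1080, cols=4, rows=3):
        print(vp)
```

Each render node then draws only its own viewport. This is what lets the total pixel count grow with the number of tiles rather than being limited by a single graphics system.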
“Science has become mega science”
“A laboratory has to be a collaboratory”
The key concept is “sharing by respecting administrative policies”.

Grid Concept
[Figure: Grid concept, showing a user access point, a resource broker, grid resources and the returned result]

Grid concept (another perspective)
• Many jobs per system: early days of computation
• One job per system: RISC / workstation era
• Two systems per job: client-server model
• Many systems per job: parallel / distributed computing
• Viewing all of the above as a single unified resource: grid computing

LHC Computing
• The LHC (Large Hadron Collider) has become operational and is churning out data.
• Data rates per experiment of >100 Mbytes/sec.
• >1 Pbytes/year of storage for raw data per experiment.
• Computationally, the problem is so large that it cannot be solved by a single computer centre.
• World-wide collaborations and analysis.
  – It is desirable to share computing and analysis throughout the world.

[Figure: LEMON architecture]

QUATTOR
• Quattor is a tool suite providing automated installation, configuration and management of clusters and farms
• Highly suitable for installing, configuring and managing grid computing clusters correctly and automatically
• At CERN, it is currently used to automatically manage more than 2000 nodes with heterogeneous hardware and software applications
• Centrally configurable and reproducible installations, plus run-time management for functional and security updates to maximize availability

Please visit: http://gridview.cern.ch/GRIDVIEW/

National Knowledge Network
• Nationwide networks such as ANUNET, NICNET and SPACENET already existed
• The idea is to synergize, integrate and leverage them to form national information highways
• Take everyone on board (5000 institutes of learning)
• Provide quality of service
• Outlay of Rs 100 crores for the proof of concept
Envisaged applications: countrywide classrooms, telemedicine, e-governance, grid technology …
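The Grid Concept slide places a resource broker between the user access point and the grid resources. Its key concept is sharing while respecting each site's administrative policies. The matchmaking step is sketched below under stated assumptions: each job declares a core count and a virtual organization (VO), and each site publishes its free cores and the VOs its policy admits. `Site`, `Job` and `broker` are hypothetical names used for illustration. They are not the gLite WMS interface, and the "most free cores" rule is only one simple choice.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Site:
    name: str
    free_cores: int
    allowed_vos: set[str] = field(default_factory=set)  # site's admission policy


@dataclass
class Job:
    job_id: str
    vo: str
    cores: int


def broker(job: Job, sites: list[Site]) -> Optional[str]:
    """Pick a site for the job, or return None if no site can accept it.

    A site is a candidate only if its policy admits the job's VO and it has
    enough free cores. Among candidates, prefer the one with the most free
    cores (a simple load-spreading rule chosen for illustration).
    """
    candidates = [
        s for s in sites
        if job.vo in s.allowed_vos and s.free_cores >= job.cores
    ]
    if not candidates:
        return None
    chosen = max(candidates, key=lambda s: s.free_cores)
    chosen.free_cores -= job.cores  # reserve the cores on the chosen site
    return chosen.name


sites = [
    Site("site-a", 64, {"dae"}),
    Site("site-b", 128, {"alice"}),
    Site("site-c", 32, {"dae", "alice"}),
]
print(broker(Job("j1", "dae", 16), sites))  # site-a
```

The policy check runs before any load balancing. A site with plenty of idle cores (site-b above) is never offered a job from a VO it does not admit.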
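The two LHC figures are consistent with each other. A sustained 100 MB/s per experiment yields about 1 PB of raw data per year if we assume roughly 10^7 seconds of data taking per year. That live time is a common rule of thumb for accelerator operation, not a number from this slide. The short calculation below makes the arithmetic explicit in decimal units (1 PB = 10^15 bytes).

```python
MB = 10**6   # bytes (decimal units)
PB = 10**15  # bytes (decimal units)


def yearly_volume_pb(rate_mb_per_s: float, live_seconds: float) -> float:
    """Raw data volume in PB for a recording rate (MB/s) and live time (s)."""
    return rate_mb_per_s * MB * live_seconds / PB


# Assumed ~1e7 s of data taking per year (rule of thumb, not from the slide).
print(yearly_volume_pb(100, 1e7))  # 1.0
# Recording continuously for a full calendar year would give about 3x more.
print(round(yearly_volume_pb(100, 365 * 24 * 3600), 2))  # 3.15
```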
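Quattor's "centrally configurable and reproducible installations" rest on a declarative idea. Each node's intended state is described centrally, and the node is converged from its actual state towards that description. The Python sketch below illustrates only this reconciliation idea, using a hypothetical package-to-version profile. It does not reproduce Quattor's Pan templates or its real components, and the package names are placeholders.

```python
from __future__ import annotations


def plan_actions(desired: dict[str, str], installed: dict[str, str]) -> list[str]:
    """Return the actions that bring a node's packages in line with its profile.

    Both arguments map package name -> version. Missing packages are
    installed, mismatched versions are changed, and packages absent from
    the profile are removed. Once converged, re-running yields no actions.
    """
    actions = []
    for pkg in sorted(desired):
        want = desired[pkg]
        have = installed.get(pkg)
        if have is None:
            actions.append(f"install {pkg}-{want}")
        elif have != want:
            actions.append(f"change {pkg} {have} -> {want}")
    for pkg in sorted(set(installed) - set(desired)):
        actions.append(f"remove {pkg}")
    return actions


# Hypothetical central profile and the current state of one node.
profile = {"sshd": "5.3", "grid-client": "3.2"}
node = {"sshd": "5.1", "telnetd": "0.17"}
for action in plan_actions(profile, node):
    print(action)
```

Because every node is driven from the same central description, two nodes with the same profile end up identical. That is the property that makes installations reproducible.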
NKN Topology
All PoPs are covered by at least two NLDs.

[Figure: NKN topology with core, distribution and edge layers, designed to achieve higher availability. Current status: 15 PoPs; 78 institutes have been connected. Work in progress for 27 PoPs; 550+ institutes.]

[Figure: Entities connected through NKN: educational institutions, NTRO, CERT-In, research labs (CSIR/DAE/ISRO/ICAR), EDUSAT, National Internet Exchange Points (NIXI), NKN MPLS clouds, the Internet, broadband clouds, connections to global networks (e.g. GEANT), and national/state data centers]

DAE-wide Applications on NKN
• DAE-Grid: grid resources at BARC, IGCAR, RRCAT and VECC
• WLCG and GARUDA
• Videoconferencing with NIC, IITs and IISc
• Collab-CAD: collaborative design of a sub-assembly of the prototype 500 MW Fast Breeder Reactor by NIC, BARC and IGCAR
• Remote classrooms among different training schools

[Figure: ANUNET satellite network. Quarter transponder (9 MHz) on INSAT 3C with 8 carriers of 768 kbps each. Sites: BARC Trombay, CTCRS (BARC, Anushaktinagar, Mumbai), BARC Hospital, AERB, DAE Mumbai, ECIL Hyderabad, HWB, BRIT, NPCIL, TIFR, NFC, CCCM, IRE, TMC, IGCAR Kalpakkam, VECC Kolkata, BARC FACL, CAT Indore, BARC Mysore, BARC Gauribidanur, BARC Tarapur, BARC Mt. Abu, AMD Secunderabad, AMD Shillong, Saha Institute, IMS Chennai, IOP Bhubaneshwar, IPR Ahmedabad, HRI Allahabad, TMH Navi Mumbai, HWB Kota, HWB Manuguru, UCIL-I, UCIL-II and UCIL-III Jaduguda, and MRPU. Note: sites shown in yellow oblongs are connected over dedicated landlines.]
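NKN's design rule that every PoP is covered by at least two NLDs can be audited mechanically. The sketch below assumes a hypothetical inventory listing each PoP's uplinks and the NLD operator carrying each one. PoP and operator names are placeholders, not NKN data.

```python
from __future__ import annotations


def under_protected_pops(uplinks: dict[str, list[str]], min_nlds: int = 2) -> list[str]:
    """Return PoPs served by fewer than `min_nlds` distinct NLD operators.

    `uplinks` maps a PoP name to the NLD operator of each of its links.
    Two links over the same operator count only once, because a single
    carrier outage could take both down.
    """
    return sorted(
        pop for pop, operators in uplinks.items()
        if len(set(operators)) < min_nlds
    )


inventory = {
    "pop-1": ["nld-a", "nld-b"],
    "pop-2": ["nld-a", "nld-a"],  # two links, but only one carrier
    "pop-3": ["nld-c"],
}
print(under_protected_pops(inventory))  # ['pop-2', 'pop-3']
```

Counting distinct operators rather than links is the design choice that matters here. Carrier diversity, not link count, is what protects a PoP against a single operator's failure.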
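The ANUNET satellite figures can be checked in the same way. Eight carriers of 768 kbps each give 6144 kbps (about 6.1 Mbps) of aggregate capacity within the 9 MHz quarter transponder. The calculation below also derives the implied spectral efficiency, defined simply as aggregate bit rate divided by bandwidth. That ratio ignores guard bands and coding overhead.

```python
def aggregate_kbps(carriers: int, kbps_per_carrier: float) -> float:
    """Total capacity of identical carriers, in kbps."""
    return carriers * kbps_per_carrier


def spectral_efficiency(total_kbps: float, bandwidth_mhz: float) -> float:
    """Bits per second per hertz (kbps divided by kHz gives the same ratio)."""
    return total_kbps / (bandwidth_mhz * 1000)


total = aggregate_kbps(8, 768)
print(total)                                     # 6144
print(round(spectral_efficiency(total, 9), 3))  # 0.683
```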
ANUNET Wide Area Network
[Figure: ANUNET leased links plan for the 11th Plan, showing existing and proposed leased links all over India between Delhi, Shillong, Allahabad, Mount Abu, Kota, Jaduguda, Indore, Gandhinagar, Kolkata, Tarapur, Bhubaneshwar, Mumbai, Hyderabad, Vizag, Manuguru, Mysore, Gauribidanur, Chennai and Kalpakkam]

DAE & NKN
• BARC conducted the first NKN workshop to train network administrators in December 2008
• DAE-Grid
• Countrywide classrooms of HBNI
• Sharing of databases
• Disaster recovery systems (planned)
• DAE gets access to resources within the country
• DAE also stands to gain access to resources on GEANT (34 European countries), GLORIAD (Russia, China, USA, Netherlands, Canada, …) and TEIN3 (Australia, China, Japan, Singapore, …)

Applications …
[Figure: Logical communication domains through NKN: NKN-General (national collaborations), NKN-Internet (Grenoble, France), WLCG collaboration, intranet segment of BARC, Internet segment of BARC, NKN router, ANUNET (DAE units), BARC–IGCAR Common Users Group (CUG), and National Grid Computing (CDAC, Pune)]

[Figure: Layout of the virtual classroom, front and back elevations, showing 55" LED displays, a projection screen, the teacher position and HD cameras]

An Example of a High-Bandwidth Application: A Collaboratory (ESRF, Grenoble)
Collaboratory?
The image depicts a one-degree oscillation photograph of crystals of the HIV-1 PR M36I mutant, recorded by remotely operating the FIP beamline at ESRF, together with the OMIT density for the mutated residue I. (Credits: Dr. JeanLuc Ferrer, Dr. Michel Pirochi & Dr. Jacques Joley, IBS/ESRF, France; Dr. M.V. Hosur & colleagues, Solid State Physics Division & Computer Division, BARC)

E-Governance?
Collaborative Design
[Figure: Collaborative design of reactor components]
Credits: IGCAR, Kalpakkam; NIC, Delhi; Computer Division, BARC

DAEGrid
UTKARSH: 4 sites, 6 clusters
• Utkarsh: dual-processor quad-core, 80 nodes (BARC)
• Aksha-itanium: dual-processor, 10 nodes (BARC)
• Ramanujam: dual-core dual-processor, 14 nodes (RRCAT)
• Daksha: dual-processor, 8 nodes (RRCAT)
• Igcgrid-xeon: dual-processor, 8 nodes (IGCAR)
• Igcgrid2-xeon: quad-core, 16 nodes (IGCAR)
800 cores
DAEGrid connectivity is through NKN at 1 Gbps.

Categories of Grid Applications
Category (examples): characteristics
• High throughput (cryptography): harness idle cycles
• On demand (remote experiments): cost effectiveness
• Data intensive (CERN LHC): information from large data sets / provenance
• Distributed supercomputing (ab-initio molecular dynamics): large CPU / memory required
• Collaborative (data exploration): support communication

Moving Forward …
“From ‘Fire and Forget’ to ‘Assured Quality of Service’”
- Effect of process migration in distributed environments
- A novel distributed memory file system
- Implementation of network swap for performance enhancement
- Redundancy to address the failure of resource brokers
- Service center concept using gLite middleware
- Development of meta-brokers to direct jobs among middlewares
- All of the above lead towards ensuring better quality of service and imparting simplicity in grid usage…
Clouds?

Acknowledgements
All colleagues from Computer Division, BARC are gratefully acknowledged for their help in preparing the presentation material. We also acknowledge NIC Delhi, IGCAR Kalpakkam and IT Division, CERN, Geneva.

THANK YOU