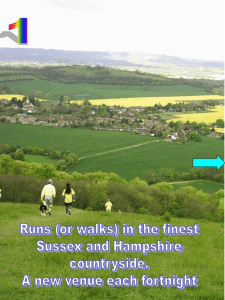

advertisement

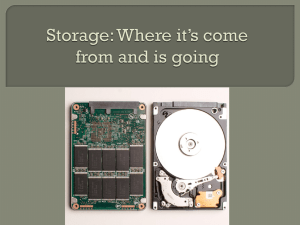

Design Patterns for Tunable and Efficient SSD-based Indexes
Ashok Anand, Aaron Gember-Jacobson, Collin Engstrom, Aditya Akella

Large hash-based indexes
• ≈20K lookups and inserts per second (1Gbps link)
• ≥ 32GB hash table
• WAN optimizers [Anand et al. SIGCOMM ’08]
• De-duplication systems [Quinlan et al. FAST ’02]
• Video proxy [Anand et al. HotNets ’12]

Use of large hash-based indexes
• WAN optimizers
• De-duplication systems
• Video proxy
• Where to store the indexes?

Where to store the indexes?
[Figure: storage options including SSD, with callouts "8x less" and "25x less"]

What’s the problem?
• Need domain/workload-specific optimizations for SSD-based index with ↑ performance and ↓ overhead
• Existing designs have…
  – Poor flexibility: target a specific point in the cost-performance spectrum
  – Poor generality: only apply to specific workloads or data structures

Our contributions
• Design patterns that ensure:
  – High performance
  – Flexibility
  – Generality
• Indexes based on these principles:
  – SliceHash
  – SliceBloom
  – SliceLSH

Outline
• Problem statement
• Limitations of state-of-the-art
• SSD architecture
• Parallelism-friendly design patterns
  – SliceHash (streaming hash table)
• Evaluation

State-of-the-art SSD-based index
• BufferHash [Anand et al. NSDI ’10]
  – Designed for high throughput
  – 4 bytes per K/V pair!
  – 16 page reads in worst case! (average: ≈1)
  [Figure: an in-memory incarnation, a Bloom filter, and multiple hash table incarnations holding K,V entries]
• SILT [Lim et al. SOSP ’11]
  – Designed for low memory + high throughput
  – ≈0.7 bytes per K/V pair
  – 33 page reads in worst case! (average: 1)
  – High CPU usage!
  [Figure: SILT index over log, hash table, and sorted stores]
• Target specific workloads and objectives → poor flexibility and generality
• Do not leverage internal parallelism

SSD architecture
[Figure: an SSD controller connects over 32 channels to 128 flash memory packages (packages 1 to 4 on channel 1, packages 125 to 128 on channel 32); each package holds dies 1…n, each die holds planes with data registers, and each plane holds blocks made of pages]
• How does the SSD architecture inform our design patterns?

Four design principles
I. Store related entries on the same page
II. Write to the SSD at block granularity
III. Issue large reads and large writes
IV. Spread small reads across channels
[Figure: SliceHash principles mapped onto the SSD hierarchy: page, block, flash memory package, channel]

I. Store related entries on the same page
• Many hash table incarnations, like BufferHash
  – Page: sequential slots from a specific incarnation
  – Multiple page reads per lookup!
• Slicing: store same hash slot from all incarnations on the same page
  – Slice: specific slot from all incarnations
  – Only 1 page read per lookup!
[Figure: slots 0 to 7 of each incarnation, laid out per incarnation (before slicing) and per slot (after slicing)]

II. Write to the SSD at block granularity
• Insert into a hash table incarnation in RAM
• Divide the hash table so all slices fit into one block (a SliceTable)
[Figure: in-memory incarnation with entries KA,VA through KF,VF above a block holding slots 0 to 7 of each on-SSD incarnation]

III. Issue large reads and large writes
[Figure: MB/second read vs. read size (1 to 128 KB); annotations: page size, channel parallelism, package parallelism. Packages 1 to 4, each with a register and page, share channels 1 and 2]
[Figure: MB/second written vs. # threads (2 to 30) for 128KB, 256KB, and 512KB writes; annotations: block size, channel parallelism]
• SSD assigns consecutive chunks (4 pages/8KB) to different channels
• Read entire SliceTable (block) into RAM
• Write entire SliceTable onto SSD

IV. Spread small reads across channels
• Recall: SSD writes consecutive chunks (4 pages) of a block to different channels
  – Use existing techniques to reverse engineer [Chen et al. HPCA ’11]
  – SSD uses write-order mapping: channel for chunk i = i modulo (# channels)
• Estimate channel using slot # and chunk size:
  (slot # * pages per slot) modulo (# channels * pages per chunk)
• Attempt to schedule 1 read per channel
[Figure: pending reads assigned to channels 0 to 3]

SliceHash summary
• In-memory incarnation: receives inserts
• Slice: specific slot from all incarnations, stored on one page
• SliceTable: stored in one block; read/write when updating
[Figure: in-memory incarnation with entries KA,VA through KF,VF above the on-SSD SliceTable, labeled slice, page, block, and incarnation]

Evaluation: throughput vs. overhead
• Setup: 8B key, 8B value; 50% insert, 50% lookup; 2.26GHz 4-core; 128GB Crucial M4
• See paper for theoretical analysis
[Figure: bar charts of throughput (K ops/sec), memory (bytes/entry), and CPU utilization (%); callouts: ↑15%, ↓12%, ↑6.6x, ↑2.8x]

Evaluation: flexibility
• Trade-off memory for throughput
• Use multiple SSDs for even ↓ memory use and ↑ throughput
[Figure: throughput (K ops/sec) and memory (bytes/entry) for SILT, BufferHash, and SliceHash (SH) with 16, 32, 48, and 64 incarnations; 50% insert, 50% lookup]

Evaluation: generality
• Workload may change
[Figure: throughput (K ops/sec) for lookup-only, mixed, and insert-only workloads; memory (bytes/entry) for SH, BH, and SILT, annotated "Constantly low!"; CPU utilization (%) for SH, BH, and SILT, annotated "Decreasing!"]

Summary
• Present design practices for low cost and high performance SSD-based indexes
• Introduce slicing to co-locate related entries and leverage multiple levels of SSD parallelism
• SliceHash achieves 69K lookups/sec (≈12% better than prior works), with consistently low memory (0.6B/entry) and CPU (12%) overhead

Evaluation: theoretical analysis
• Parameters
  – 16B key/value pairs
  – 80% table utilization
  – 32 incarnations
  – 4GB of memory
  – 128GB SSD
  – 0.31ms to read a block
  – 0.83ms to write a block
  – 0.15ms to read a page

             BufferHash                     SliceHash
overhead     4 B/entry                      0.6 B/entry
cost         avg: ≈0.2μs, worst: 0.83ms     avg: ≈5.7μs, worst: 1.14ms
cost         avg: ≈0.15ms, worst: 4.8ms     avg & worst: 0.15ms
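
The worst-case figures in the theoretical analysis appear to follow directly from the listed timings. The sketch below reproduces them under one reading of the slides: the row labels there are truncated, so treating the first cost row as insert cost and the second as lookup cost is an assumption, as are the function names. The average insert costs are not derived here because the slides do not give the amortization factor.

```python
# Timings and incarnation count as listed under "Parameters".
READ_BLOCK_MS = 0.31
WRITE_BLOCK_MS = 0.83
READ_PAGE_MS = 0.15
INCARNATIONS = 32


def slicehash_worst_insert_ms():
    # Assumption: a worst-case insert reads the whole SliceTable (one block)
    # into RAM and writes it back (principles II and III).
    return READ_BLOCK_MS + WRITE_BLOCK_MS


def slicehash_lookup_ms():
    # Slicing puts a slot from all incarnations on one page: one page read.
    return READ_PAGE_MS


def bufferhash_worst_insert_ms():
    # Assumption: a worst-case insert writes out one block.
    return WRITE_BLOCK_MS


def bufferhash_worst_lookup_ms():
    # Assumption: a worst-case lookup reads one page from every incarnation.
    return INCARNATIONS * READ_PAGE_MS


print(round(slicehash_worst_insert_ms(), 2), "ms SliceHash worst-case insert")
print(round(bufferhash_worst_lookup_ms(), 2), "ms BufferHash worst-case lookup")
```

Under these assumptions the results line up with the 1.14ms, 0.15ms, 0.83ms, and 4.8ms entries in the comparison.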
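
The channel estimate from principle IV can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: `estimate_channel` and `schedule_reads` are hypothetical names, the final division by pages per chunk is an inference from "channel for chunk i = i modulo (# channels)", and it assumes slices are laid out consecutively from the start of a block with chunk 0 on channel 0.

```python
def estimate_channel(slot, pages_per_slot=1, pages_per_chunk=4, num_channels=32):
    """Estimate which channel holds the page(s) for a hash slot."""
    page_offset = slot * pages_per_slot
    # Position within one full round of chunks across all channels,
    # as in the formula on the slide.
    offset_in_round = page_offset % (num_channels * pages_per_chunk)
    # Assumed final step: convert the page position to a chunk index,
    # which under write-order mapping is the channel number.
    return offset_in_round // pages_per_chunk


def schedule_reads(slots, **geometry):
    """Greedily pick at most one pending read per estimated channel."""
    batch, deferred, busy = [], [], set()
    for slot in slots:
        channel = estimate_channel(slot, **geometry)
        if channel in busy:
            deferred.append(slot)  # channel already has a read in this batch
        else:
            busy.add(channel)
            batch.append(slot)
    return batch, deferred


batch, deferred = schedule_reads([0, 1, 4, 16, 8], num_channels=4)
print(batch, deferred)
```

With 4 channels and 4-page chunks, slots 0, 1, and 16 all map to channel 0, so only one of them is issued in the first batch and the others wait for the next round.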