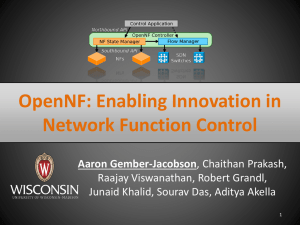

05_29_14 - Stanford University Networking Seminar


OpenNF: Enabling Innovation in Network Function Control

Aditya Akella

With: Aaron Gember, Raajay Vishwanathan, Chaithan Prakash, Sourav Das, Robert Grandl, and Junaid Khalid

University of Wisconsin—Madison

Network functions, or middleboxes

Introduce custom packet processing functions into the network

Firewall

Caching Proxy

Intrusion Prevention

Traffic scrubber

Load balancer

SSL Gateway

WAN optimizer

…

Stateful: detailed book-keeping for network flows

[Sherry et al., SIGCOMM 2012]

Common in enterprise, cellular, ISP networks

State-of-the-art

Network functions virtualization (NFV)

– Lower cost

– Easy prov., upgrades

[Figure label: Xen/KVM]

Software-defined networking (SDN)

– Decouple from physical

– Better performance, chaining

NFV + SDN: distributed processing

Dynamic reallocation to coordinate processing across instances

Load balancing

[Figure: SDN Controller and two MBox instances]

Extract maximal performance at a given $$


Key abstractions

Hand-off processing for a traffic subset

1. Elastic

2. Always updated

3. Dynamic enhancement

What’s missing?

The ability to simultaneously…

Meet SLAs

– E.g., ensure deployment throughput > 1Gbps

Ensure accuracy/efficacy

– E.g., IDS raises alerts for all HTTP flows containing known malware packages

Keep costs low, efficiency high

– E.g., shut down idle resources when not needed

… needs more control than NFV + SDN

Example

Scaling in/out: sustain thr’put at low $

[Figure: Home Users, Firewall, Caching Proxy, Intrusion Prevention, Web Server]

– Not moving flows: bottleneck persists (SLAs!)

– Naively moving flows: no associated state (Accuracy!)

– Scale down: wait for flows to drain out (Efficiency!)

Transfer live state while updating n/w forwarding

OpenNF

Quick and safe dynamic reallocation of processing across NF instances

Quick: Any reallocation decision invoked any time finishes predictably soon

Safe: Key semantics for live state transfers

No state updates missed, order preserved, etc.

Rich distributed processing based applications to flexibly meet cost, performance, security objectives

Outline

Overview and challenges

Design

– Requirements

– Key ideas

– Applications (dynamic enhancement, hot standby, elastic scaling)

Evaluation

Overview and challenges

OpenNF

NF APIs and control plane for joint control over internal NF state and network forwarding state

[Figure: reallocation operations; OpenNF Controller; coordination w/ network; SDN Controller; state import/export; MBox instances]

Overview and challenges

Challenges

1: Many NFs, minimal changes

– Avoid forcing NFs to use special state structures/allocation/access strategies

Simple NF-facing API; relegates actions to NFs

2: Reining in race conditions

– Packets may arrive while state is being moved; updates lost or re-ordered; state inconsistency

Lock-step NF state/forwarding update

3: Bounding overhead

– State transfers impose CPU, memory, n/w overhead

Applications control granularity, guarantees

NF state taxonomy

C1: Minimal NF Changes

State created or updated by an NF applies to either a single flow or a collection of flows

Classify state based on scope

[Figure: per-flow state (Connection, TcpAnalyzer, HttpAnalyzer); multi-flow state (ConnCount); all-flows state (Statistics)]

Flow provides a natural way for reasoning about which state to move, copy, or share

C1: Minimal NF Changes

API to export/import state

Three simple functions: get, put, delete(f)

– Version for each scope (per-, multi-, all-flows)

– Filter f defined over packet header fields

NFs responsible for

– Identifying and providing all state matching a filter

– Combining provided state with existing state

No need to expose internal state organization

No changes to conform to a specific allocation strategy
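A minimal sketch of what the NF side of this API could look like for per-flow state, assuming a toy NF that only counts packets per flow. The names `Flow`, `ToyNF`, `get_perflow`, `put_perflow`, and `del_perflow` are illustrative stand-ins, not the actual OpenNF library signatures.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Flow:
    """Hypothetical flow key; a real NF would use more header fields."""
    src_ip: str
    dst_port: int


@dataclass
class ToyNF:
    """Toy NF that keeps a per-flow packet counter in a private dict."""
    per_flow: dict = field(default_factory=dict)

    def process(self, flow, n_packets=1):
        self.per_flow[flow] = self.per_flow.get(flow, 0) + n_packets

    @staticmethod
    def _matches(flow, flt):
        # A filter is a dict over header fields; an empty filter matches all flows.
        return all(getattr(flow, k) == v for k, v in flt.items())

    def get_perflow(self, flt):
        # The NF identifies and returns all per-flow state matching the filter.
        return [(f, c) for f, c in self.per_flow.items() if self._matches(f, flt)]

    def put_perflow(self, flow, chunk):
        # The NF combines the provided state with whatever it already holds.
        self.per_flow[flow] = self.per_flow.get(flow, 0) + chunk

    def del_perflow(self, flt):
        for f in [f for f in self.per_flow if self._matches(f, flt)]:
            del self.per_flow[f]
```

The caller only ever sees opaque (flow, chunk) pairs, so the NF is free to organize its internal state however it likes.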


Operations

“Reallocate port 80 to NF2”

move flow-specific NF state at various granularities

copy and combine, or share, NF state pertaining to multiple flows

Semantics for move (loss-free, order-preserving), copy/share (various notions of consistency)

Move

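Control Application → OpenNF Controller: move (port=80,Inst1,Inst2,LF&OP)

OpenNF Controller → Inst1: getPerflow(port=80)

Inst1 → OpenNF Controller: [ID1,Chunk1], [ID2,Chunk2]

OpenNF Controller → Inst1: delPerflow(port=80)

OpenNF Controller → Inst2: putPerflow(ID1,Chunk1), putPerflow(ID2,Chunk2)

OpenNF Controller → SDN Controller: forward(port=80,Inst2)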
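The message sequence for a move can be sketched as a short controller-side routine. This is a simplified illustration with no loss-free or order-preserving guarantee; `Instance`, the `forwarding` dict, and the method names are hypothetical stand-ins for the NF instances and the SDN controller call.

```python
class Instance:
    """Stand-in NF instance holding per-flow state as flow_id -> (port, chunk)."""

    def __init__(self, name):
        self.name = name
        self.perflow = {}

    def get_perflow(self, port):
        return [(fid, chunk) for fid, (p, chunk) in self.perflow.items() if p == port]

    def del_perflow(self, port):
        self.perflow = {fid: v for fid, v in self.perflow.items() if v[0] != port}

    def put_perflow(self, fid, port, chunk):
        self.perflow[fid] = (port, chunk)


def move(port, src, dst, forwarding):
    """No-guarantee move: get and delete at src, put at dst, then reroute."""
    chunks = src.get_perflow(port)
    src.del_perflow(port)
    for fid, chunk in chunks:
        dst.put_perflow(fid, port, chunk)
    forwarding[port] = dst.name  # stands in for forward(port=80,Inst2)
    return len(chunks)
```

Packets that reach the source instance between the get and the forwarding update are not accounted for here, which is the gap the loss-free and order-preserving variants address.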

C2: Race conditions

Load-balanced network monitoring

Moving live state: some updates (packets) may be lost, or arrive out of order

[Figure: HTTP requests to instances running vulnerable.bro and weird.bro; state moved between instances]

vulnerable.bro: reconstructs MD5s for HTTP responses

– Not robust to losses

weird.bro: SYN and data packets seen in unexpected order

– Not robust to reordering

C2: Race conditions

Lost updates during move

• Packets may arrive during a move operation

[Figure: move(blue,Inst1,Inst2) with packets B1, B2, R1; Inst2 is missing updates]

• Fix: suspend traffic flow and buffer packets

– May last 100s of ms

– Packets in-transit when buffering starts are dropped

Loss-free: All state updates due to packet processing should be reflected in the transferred state, and all packets the switch receives should be processed

Key idea: Event abstraction to prevent, observe and sequence state updates

C2: Race conditions

Loss-free move using events

Stop processing; buffer at controller

1. enableEvents(blue,drop) on Inst1

2. get/delete on Inst1

3. Buffer events at controller

4. put on Inst2

5. Flush packets in events to Inst2

6. Update forwarding

[Figure: Controller, Inst1, Inst2; packets B1, B2, B3, R1]
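The six steps can be walked through in a small single-threaded simulation. This is a sketch under simplifying assumptions: per-flow state is just a packet counter, the switch is a dict from flow to instance, and packets that arrive mid-move are injected through the hypothetical `arrivals_during_move` argument rather than arriving concurrently.

```python
class Instance:
    def __init__(self):
        self.count = {}          # per-flow state: flow -> packets processed
        self.event_flow = None   # flow for which events are enabled
        self.event_sink = None   # controller-side buffer receiving events

    def enable_events(self, flow, sink):
        # action=drop: matching packets are not processed locally, only reported
        self.event_flow, self.event_sink = flow, sink

    def receive(self, flow, pkt):
        if self.event_sink is not None and flow == self.event_flow:
            self.event_sink.append((flow, pkt))
        else:
            self.count[flow] = self.count.get(flow, 0) + 1


def loss_free_move(flow, src, dst, switch, arrivals_during_move):
    buffered = []                                      # 3. events buffered at controller
    src.enable_events(flow, buffered)                  # 1. enableEvents(flow, drop) on src
    chunk = src.count.pop(flow, 0)                     # 2. get/delete on src
    for pkt in arrivals_during_move:                   # switch still forwards to src
        switch[flow].receive(flow, pkt)
    dst.count[flow] = dst.count.get(flow, 0) + chunk   # 4. put on dst
    for f, pkt in buffered:                            # 5. flush packets in events to dst
        dst.receive(f, pkt)
    switch[flow] = dst                                 # 6. update forwarding
```

Every packet the switch forwards is counted exactly once, either in the transferred chunk or via a flushed event, which is the loss-free property. Ordering between flushed events and packets arriving after step 6 is not handled in this sketch.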

C2: Race conditions

Re-ordering of updates

[Figure: timeline across Switch, Controller, Inst1, Inst2 for steps "5. Flush buffer" and "6. Issue fwd update", with packets B2, B3, B4]

Order-preserving: All packets should be processed in the order they were forwarded to the NF instances by the switch

Two-stage update to track last packet at NF1

C2: Race conditions

Order-preserving move

Track last packet; sequence updates

Flush packets in events to Inst2 w/ “do not buffer”

enableEvents(blue,buffer) on Inst2

Forwarding update: send to Inst1 & controller

Wait for packet from switch (remember last)

Forwarding update: send to Inst2

Wait for event from Inst2 for last Inst1 packet

Release buffer of packets on Inst2

[Figure: packets B1, B2, B3, B4, R1 across Inst1 and Inst2, with drop and buffer actions]

C3: Applications

Bounding overhead

Apps decide, based on NF type, objective:

– granularity of reallocation operations: move, copy or share; filter, scope

– guarantees desired

move: no-guarantee, loss-free, loss-free + order-preserving

copy: no or eventual consistency

share: strong or strict consistency
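One way to picture the choices an application makes is as a small validated request record. The sketch below is illustrative only: the `reallocation` helper, the dict layout, and the string labels (including "none" for copy without consistency) are assumptions, not part of the OpenNF API.

```python
# Guarantees offered per operation, as listed on the slide.
GUARANTEES = {
    "move": ("no-guarantee", "loss-free", "loss-free + order-preserving"),
    "copy": ("none", "eventual"),
    "share": ("strong", "strict"),
}
SCOPES = ("per", "multi", "all")


def reallocation(op, flt, scope, guarantee):
    """Validate and bundle one reallocation request (hypothetical shape)."""
    if op not in GUARANTEES:
        raise ValueError(f"unknown operation: {op}")
    if scope not in SCOPES:
        raise ValueError(f"unknown scope: {scope}")
    if guarantee not in GUARANTEES[op]:
        raise ValueError(f"{op} does not offer guarantee: {guarantee}")
    return {"op": op, "filter": dict(flt), "scope": scope, "guarantee": guarantee}
```

An application that tolerates reordering could ask for `reallocation("move", {"nw_src": "10.0.0.0/8"}, "per", "loss-free")` and avoid paying for the stronger guarantee.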

C3: Applications

Load-balanced network monitoring

[Figure: HTTP requests split across two instances, each running scan.bro, vulnerable.bro, weird.bro]

movePrefix(prefix,oldInst,newInst):
  copy(oldInst,newInst,{nw_src:prefix},multi)
  move(oldInst,newInst,{nw_src:prefix},per,LF+OP)
  while (true):
    sleep(60)
    copy(oldInst,newInst,{nw_src:prefix},multi)
    copy(newInst,oldInst,{nw_src:prefix},multi)

Implementation

Impl & Eval

OpenNF Controller (≈4.7K lines of Java)

– Written atop Floodlight

Shared NF library (≈2.6K lines of C)

Modified NFs (4-10% increase in code)

– Bro (intrusion detection)

– PRADS (service/asset detection)

– iptables (firewall and NAT)

– Squid (caching proxy)

Testbed: HP ProCurve connected to 4 servers

Impl & Eval

Microbenchmarks: NFs

Serialization/deserialization costs dominate

Cost grows with state complexity

Impl & Eval

Microbenchmarks: operations

• State: 500 flows in PRADS; Traffic: 5000 pkts/sec

• Move per-flow state for all flows

[Figure: Move Time (ms) for NG, NG PL, LF PL, LF PL+ER, OP PL+ER. Callouts: packets dropped!; 881 packets in events; 686; 462; 838 packets in events + 1120 packets buffered at Inst2; = f(load,state,speed)]

[Figure: Per-packet Latency Increase (ms), Average and Maximum]

Copy (MF state) – 111ms

Share (strong) – 13ms per pkt

Guarantees come at a cost!

Impl & Eval

Macrobenchmarks: end-to-end benefits

Load balanced monitoring with Bro IDS

– Load: replay cloud trace at 10K pkts/sec

– At 180 sec: move HTTP flows (489) to new Bro

– At 360 sec: move HTTP flows back to old Bro

OpenNF scaleup: 260ms to move (optimized, loss-free)

– Log entries equivalent to using a single instance

VM replication: 3889 incorrect log entries

– Cannot support scale-down

Forwarding control only: scale down delayed by more than 1500 seconds

Wrap up!

• OpenNF enables rich control of the packet processing happening across instances of an NF

• Quick, key safety guarantees

• Low overhead, minimal NF modifications

http://opennf.cs.wisc.edu

Backup

C2: Race conditions

Copy and share

Used when multiple instances need to access a particular piece of state

Copy – eventual consistency

– Issue once, periodically, based on events, etc.

Share – strong consistency

– All packets reaching NF instances trigger an event

– Packets in events are released one at a time

– State is copied between packets
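The strongly consistent share can be illustrated with a toy controller loop. This is a sketch under assumptions: the shared multi-flow state is a per-host connection counter, `events` is the order in which the controller received packet events, and copying is a plain dict copy. None of these names come from the OpenNF code.

```python
from collections import deque


class Instance:
    def __init__(self):
        self.conn_count = {}  # multi-flow state: host -> connections seen

    def process(self, host):
        self.conn_count[host] = self.conn_count.get(host, 0) + 1


def share_strong(instances, events):
    """events: (instance_index, host) pairs in controller arrival order."""
    queue = deque(events)
    while queue:
        idx, host = queue.popleft()      # release exactly one packet
        owner = instances[idx]
        owner.process(host)
        for other in instances:          # copy state before the next release
            if other is not owner:
                other.conn_count = dict(owner.conn_count)
```

Because only one packet is in flight at a time and state is synchronized after each one, every instance sees the same counters as a single instance would, at the price of serializing all packets through the controller.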

C3: Applications

Example app: Selectively invoking advanced remote processing

[Figure: HTTP requests; the instance in the Enterprise n/w runs scan.bro, vulnerable.bro, weird.bro; the instance across the Internet also runs detect-MHR.bro, which checks md5sum of HTTP reply]

enhanceProcessing(flowid,locInst):
  move(locInst,cloudInst,flowid,per,LF)

No need for:

(1) order-preservation

(2) copying multi-flow state

Existing approaches

• Control over routing (PLayer, SIMPLE, Stratos)

• Virtual machine replication

– Unneeded state => incorrect actions

– Cannot combine => limited rebalancing

• Split/Merge and Pico/Replication

– Address specific problems => limited suitability

– Require NFs to create/access state in specific ways => significant NF changes

Controller performance

Improve scalability with P2P state transfers


Impl & Eval

Macrobenchmarks: Benefits of Granular Control

Two clients make HTTP requests

– 40 unique URLs

Initially, both go to Squid1

20s later reassign client 1 to Squid2

Granularities of copy

| Metric | Ignore | Copy-client | Copy-all |
| --- | --- | --- | --- |
| Hits @ S1 | 117 | 117 | 117 |
| Hits @ S2 | crashed | 39 | 50 |
| State transferred | 0 | 4MB | 54MB |