cs188-lecture - UC Berkeley School of Information

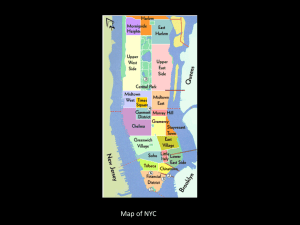

CS188 Guest Lecture: Statistical Natural Language Processing
Prof. Marti Hearst
School of Information Management & Systems
www.sims.berkeley.edu/~hearst

School of Information Management & Systems (SIMS)
- Information economics and policy
- Human-computer interaction
- Information design and architecture
- Information assurance
- Sociology of information

How do we Automatically Analyze Human Language?
- The answer is … forget all that logic and inference stuff you’ve been learning all semester!
- Instead, we do something entirely different.
- Gather HUGE collections of text, and compute statistics over them. This allows us to make predictions.
- Nearly always a VERY simple algorithm and a VERY large text collection do better than a smart algorithm using knowledge engineering.

Statistical Natural Language Processing
- Chapter 23 of the textbook
  - Prof. Russell said it won’t be on the final
- Today: 3 Applications
  - Author Identification
  - Speech Recognition (language models)
  - Spelling Correction

Author Identification: Problem Variations
1. Disputed authorship (choose among k known authors)
2. Document pair analysis: Were two documents written by the same author?
3. Odd-person-out: Were these documents written by one of this set of authors or by someone else?
4. Clustering of “putative” authors (e.g., internet handles: termin8r, heyr, KaMaKaZie)

Slide adapted from Fred S. Roberts

The Federalist Papers
- Written in 1787-1788 by Alexander Hamilton, John Jay and James Madison to persuade the citizens of New York to ratify the constitution.
- Papers consisted of short essays, 900 to 3500 words in length.
- Authorship of 12 of those papers has been in dispute (Madison or Hamilton). These papers are referred to as the disputed Federalist papers.

Slide adapted from Glenn Fung

Stylometry
The use of metrics of literary style to analyze texts.
- Sentence length
- Paragraph length
- Punctuation
- Density of parts of speech
- Vocabulary

Mosteller & Wallace, 1964
- Federalist papers problem
- Used Naïve Bayes and 30 “marker” words more typical of one or the other author
- Concluded that the disputed documents were written by Madison.

An Alternative Method (Fung)
- Find a hyperplane based on 3 words (each word name denotes that word's frequency in a paper):
  0.5368 to + 24.6634 upon + 2.9532 would = 66.6159
- All disputed papers end up on the Madison side of the plane.

Slide adapted from Glenn Fung

Idiosyncratic Features
- Idiosyncratic usage (misspellings, repeated neologisms, etc.) is apparently also useful.
- For example, Foster’s unmasking of Klein as the author of “Primary Colors”:
  - “Klein and Anonymous loved unusual adjectives ending in -y and -inous: cartoony, chunky, crackly, dorky, snarly,…, slimetudinous, vertiginous, …”
  - “Both Klein and Anonymous added letters to their interjections: ahh, aww, naww.”
  - “Both Klein and Anonymous loved to coin words beginning in hyper-, mega-, post-, quasi-, and semi- more than all others put together”
  - “Klein and Anonymous use “riffle” to mean rifle or rustle, a usage for which the OED provides no instance in the past thousand years”

Slide adapted from Fred S. Roberts

Language Modeling
- A fundamental concept in NLP
- Main idea:
  - For a given language, some words are more likely than others to follow each other, or
  - You can predict (with some degree of accuracy) the probability that, given a word, a particular other word will follow it.

Next Word Prediction
From a NY Times story...
- Stocks ...
- Stocks plunged this ….
- Stocks plunged this morning, despite a cut in interest rates
- Stocks plunged this morning, despite a cut in interest rates by the Federal Reserve, as Wall ...
- Stocks plunged this morning, despite a cut in interest rates by the Federal Reserve, as Wall Street began

Adapted from slide by Bonnie Dorr

- Stocks plunged this morning, despite a cut in interest rates by the Federal Reserve, as Wall Street began trading for the first time since last …
- Stocks plunged this morning, despite a cut in interest rates by the Federal Reserve, as Wall Street began trading for the first time since last Tuesday's terrorist attacks.

Adapted from slide by Bonnie Dorr

Next Word Prediction
- Clearly, we have the ability to predict future words in an utterance to some degree of accuracy.
- How?
  - Domain knowledge
  - Syntactic knowledge
  - Lexical knowledge
- Claim:
  - A useful part of the knowledge needed to allow word prediction can be captured using simple statistical techniques
  - In particular, we'll rely on the notion of the probability of a sequence (a phrase, a sentence)

Adapted from slide by Bonnie Dorr

Applications of Language Models
- Why do we want to predict a word, given some preceding words?
  - Rank the likelihood of sequences containing various alternative hypotheses, e.g. for spoken language recognition
    - Theatre owners say unicorn sales have doubled...
    - Theatre owners say popcorn sales have doubled...
  - Assess the likelihood/goodness of a sentence, e.g. for text generation or machine translation
    - The doctor recommended a cat scan.
    - El doctor recomendó una exploración del gato. (literally: “The doctor recommended an examination of the cat.”)

Adapted from slide by Bonnie Dorr

N-Gram Models of Language
- Use the previous N-1 words in a sequence to predict the next word
- Language Model (LM): unigrams, bigrams, trigrams, …
- How do we train these models? Very large corpora

Adapted from slide by Bonnie Dorr

Notation
- P(unicorn)
  - Read this as “The probability of seeing the token unicorn”
- P(unicorn|mythical)
  - Called the Conditional Probability.
Read this as “The probability of seeing the token unicorn given that you’ve seen the token mythical”

Speech Recognition Example
- From BeRP: The Berkeley Restaurant Project (Jurafsky et al.)
  - A testbed for a Speech Recognition project
  - System prompts user for information in order to fill in slots in a restaurant database.
    - Type of food, hours open, how expensive
  - After getting lots of input, can compute how likely it is that someone will say X given that they already said Y.
- P(I want to eat Chinese food) = P(I | <start>) P(want | I) P(to | want) P(eat | to) P(Chinese | eat) P(food | Chinese)

Adapted from slide by Bonnie Dorr

A Bigram Grammar Fragment from BeRP

| Bigram | Probability | Bigram | Probability |
|---|---|---|---|
| Eat on | .16 | Eat Thai | .03 |
| Eat some | .06 | Eat breakfast | .03 |
| Eat lunch | .06 | Eat in | .02 |
| Eat dinner | .05 | Eat Chinese | .02 |
| Eat at | .04 | Eat Mexican | .02 |
| Eat a | .04 | Eat tomorrow | .01 |
| Eat Indian | .04 | Eat dessert | .007 |
| Eat today | .03 | Eat British | .001 |

Adapted from slide by Bonnie Dorr

| Bigram | Probability | Bigram | Probability |
|---|---|---|---|
| <start> I | .25 | Want some | .04 |
| <start> I’d | .06 | Want Thai | .01 |
| <start> Tell | .04 | To eat | .26 |
| <start> I’m | .02 | To have | .14 |
| I want | .32 | To spend | .09 |
| I would | .29 | To be | .02 |
| I don’t | .08 | British food | .60 |
| I have | .04 | British restaurant | .15 |
| Want to | .65 | British cuisine | .01 |
| Want a | .05 | British lunch | .01 |

Adapted from slide by Bonnie Dorr

P(I want to eat British food)
= P(I|<start>) P(want|I) P(to|want) P(eat|to) P(British|eat) P(food|British)
= .25 × .32 × .65 × .26 × .001 × .60
≈ .0000081

vs. P(I want to eat Chinese food) = .00015

- Probabilities seem to capture “syntactic” facts and “world knowledge”
  - eat is often followed by an NP
  - British food is not too popular
- N-gram models can be trained by counting and normalization

Adapted from slide by Bonnie Dorr

Spelling Correction
How to do it?
- Standard approach
  - Rely on a dictionary for comparison
  - Assume a single “point change”
    - Insertion, deletion, transposition, substitution
    - Don’t handle word substitution
- Problems
  - Might guess the wrong correction
  - Dictionary not comprehensive
    - Shrek, Britney Spears, nsync, p53, ground zero
  - May spell the word right but use it in the wrong place
    - principal, principle
    - read, red

New Approach: Use Search Engine Query Logs!
Leverage the mistakes and corrections that millions of other people have already made!

Spelling Correction via Query Logs (Cucerzan and Brill ’04)
- Main idea: Iteratively transform the query into other strings that correspond to more likely queries.
- Use statistics from query logs to determine likelihood.
  - Despite the fact that many of these are misspelled
  - Assume that the less wrong a misspelling is, the more frequent it is, and that correct spellings are more frequent than incorrect ones
- Example: ditroitigers -> detroittigers -> detroit tigers

Spelling Correction Algorithm
- Algorithm:
  - Compute the set of all possible alternatives for each word in the query
    - Look at word unigrams and bigrams from the logs
    - This handles concatenation and splitting of words
  - Find the best possible alternative string to the input
    - Do this efficiently with a modified Viterbi algorithm
- Constraints:
  - No 2 adjacent in-vocabulary words can change simultaneously
  - Short queries have further (unstated) restrictions
  - In-vocabulary words can’t be changed in the first round of iteration

Spelling Correction Evaluation
- Emphasizing coverage
  - 1044 randomly chosen queries
  - Annotated by two people (91.3% agreement)
  - 180 misspelled; annotators provided corrections
  - 81.1% system agreement with annotators
    - 131 false positives
      - 2002 kawasaki ninja zx6e -> 2002 kawasaki ninja zx6r
    - 156 suggestions for the misspelled queries
  - 2 iterations were sufficient for most corrections
  - Problem: annotators were guessing user intent

Spell Checking: Summary
- Can use the
collective knowledge stored in query logs
- Works pretty well despite the noisiness of the data
- Exploits the errors made by people
- Might be further improved to incorporate text from other domains

Other Search Engine Applications
- Many other applications apply to search engines and related topics.
- One more example … automatic synonym and related word generation.

Synonym Generation

Speaking of Search Engines … Introducing a New Course!
- Search Engines: Technology, Society, and Business
- IS141 (2 units)
- Mondays 4-6pm + 1hr section
- CCN 42702
- No prerequisites
- http://www.sims.berkeley.edu/courses/is141/f05/

A Great Line-up of World-Class Experts!

Thank you!
Prof. Marti Hearst
School of Information Management & Systems
www.sims.berkeley.edu/~hearst
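
Worked example: the bigram calculation from the BeRP slides

The BeRP slides score a sentence by multiplying P(word | previous word) along the sentence. The sketch below, in Python, reproduces that product for "I want to eat British food" using only the six probabilities quoted on the slides. The names `BIGRAM_PROB` and `sentence_probability` are hypothetical, chosen for illustration, and treating an unseen bigram as probability 0 is a simplifying assumption of this sketch rather than something the lecture specifies.

```python
from math import prod

# Bigram probabilities copied from the BeRP slides. Only the entries
# needed for the example sentence are included here.
BIGRAM_PROB = {
    ("<start>", "I"): 0.25,
    ("I", "want"): 0.32,
    ("want", "to"): 0.65,
    ("to", "eat"): 0.26,
    ("eat", "British"): 0.001,
    ("British", "food"): 0.60,
}


def sentence_probability(sentence, bigram_prob, start="<start>"):
    """Multiply P(word | previous word) over every adjacent word pair.

    Assumption of this sketch: a bigram missing from the table gets
    probability 0.0, so any sentence containing it scores 0.
    """
    words = [start] + sentence.split()
    return prod(bigram_prob.get(pair, 0.0) for pair in zip(words, words[1:]))


p = sentence_probability("I want to eat British food", BIGRAM_PROB)
print(f"{p:.7f}")  # prints 0.0000081
```

Multiplying the six slide values gives about 8.1 × 10^-6. Because a single missing bigram zeroes the whole product in this sketch, a practical system would need some way to assign nonzero probability to word pairs it has not seen.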