TAMU Galveston Workshops, November 20-21, 2014

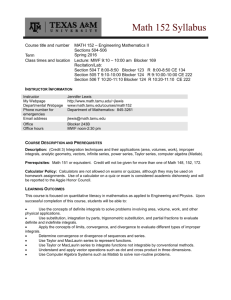

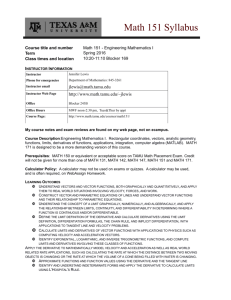

Support Program Assessment
November 18-19, 2014

Ryan J. McLawhon, Ed.D., Director, Institutional Assessment, Ryan.McLawhon@tamu.edu
Elizabeth C. Bledsoe, M.A., Program Coordinator, Institutional Assessment, ebledsoe@tamu.edu
Kimberlee Pottberg, Sr. Admin Coordinator, Institutional Assessment, K-pottberg@tamu.edu
assessment@tamu.edu | 979.862.2918 | assessment.tamu.edu

Agenda
• Components of the WEAVEonline Assessment Plan and the expectations for each
• Assessment Review Process
• Question and Answer Session

SACS Expectations
SACS Comprehensive Standard 3.3.1
3.3 Institutional Effectiveness
3.3.1 The institution identifies expected outcomes, assesses the extent to which it achieves these outcomes, and provides evidence of improvement based on analysis of the results in each of the following areas: (Institutional Effectiveness)
3.3.1.1 educational programs, to include student learning outcomes
3.3.1.2 administrative support services
3.3.1.3 educational support services
3.3.1.4 research within its educational mission, if appropriate
3.3.1.5 community/public service within its educational mission, if appropriate

The Assessment Circle (adapted from Trudy Banta, IUPUI)
• Develop Program Mission & Outcomes
• Design an Assessment Plan
• Implement the Plan & Gather Information
• Interpret/Evaluate Information
• Modify & Improve

Develop Program Mission & Outcomes

Mission Statement
• The mission statement links the functions of your unit to the overall mission of the institution.
• A few questions to consider in formulating the mission of your unit:
  – What is the primary function of your unit?
  – What should stakeholders interacting with your unit/program experience?

Characteristics of a Well-Defined Mission Statement
• Brief, concise, distinctive
• Clearly identifies the program’s purpose and larger impact
• Clearly aligns with the missions of the division and the University
• Clearly identifies the program’s primary stakeholders (e.g., students, faculty, parents)

Outcomes/Objectives should be…
• Limited in number (manageable)
• Specific, measurable, and/or observable
• Meaningful

Outcomes/Objectives
There are two categories of outcomes:
• Learning Outcomes
• Program Objectives

Examples of Learning Outcomes
• Students participating in service learning activities will articulate how the experience connects to their degree and understanding of their field.
• Students will identify and discuss various aspects of architectural diversity in their design projects.

Program Objectives
• Process statements
  – Relate to what the unit intends to accomplish
  – Level or volume of activity (participation rates, turnaround time, etc.)
  – Compliance with external standards of “good practice in the field” or regulations (government standards, etc.)
• Satisfaction statements
  – Describe how those you serve rate their satisfaction with your program, services, or activities

Examples of Program Objectives
• Process statement
  – The Office of Safety and Security will prevent and resolve unsafe conditions.
• Satisfaction statement
  – Students who participate in Honors and Undergraduate Research core programs will express satisfaction with the format and content of the programs by acknowledging that these activities contributed toward their achieving learning outcomes for undergraduate studies.

Design an Assessment Plan

Measures should be…
• Measurable and/or observable
  – You can observe it, count it, quantify it, etc.
  – Specifically defined, with enough context to understand how it is observable
• Meaningful
  – It captures enough of the essential components of the objective to represent it adequately
  – It will yield vital information about your unit/program
• Triangulated
  – Multiple measures for each outcome
  – Direct and indirect measures

Assessment Measures
• Define and identify the sources of evidence you will use to determine whether you are achieving your outcomes and, if necessary, how that evidence will be analyzed/evaluated.
• Identify or create measures that can inform decisions about your unit’s/program’s processes and services.

Types of Assessment Measures (Palomba and Banta, 1999)
There are two basic types of assessment measures:
• Direct Measures
• Indirect Measures

Direct Measures
• Direct measures are those designed to directly measure what a stakeholder knows or is able to do (i.e., they require a stakeholder to actually demonstrate the skill or knowledge)
OR
• Direct measures are physical representations of the fulfillment of an outcome.

Indirect Measures
Indirect measures focus on:
• stakeholders’ perception of the performance of the unit
• stakeholders’ perception of the benefit of programming or intervention
• completion of requirements or activities
• stakeholders’ satisfaction with some aspect of the program or service

Common Indirect Measures
• Surveys
• Exit interviews
• Retention/graduation data
• Demographics
• Focus groups

Choosing Assessment Measures
Some things to think about:
• How would you describe the end result of the outcome? Or, how will you know whether this outcome is being accomplished?
  – What is the end product?
• Will the resulting data provide information that could lead to improvements in your services or processes?

Achievement Targets
• An achievement target is the result, target, benchmark, or value that will represent success at achieving a given outcome.
• Achievement targets should be specific numbers or trends representing a reasonable level of success for the given measure/outcome relationship.
• What does quality mean and/or look like?

Examples of Achievement Targets
• 95% of all radiation safety inspections assigned will be performed monthly, to include providing recommendations for correcting deficiencies. This target was established with departmental leadership based on previous years' performance and professional judgment.
• A 5% increase in products and weights of EHS recycled materials (e.g., used oil, light bulbs) from the previous year will be realized.

Implement the Plan & Gather Information

Findings
• The results of applying the measure to the collected data
• The language of this statement should parallel the corresponding achievement target.
• Results should be described in enough detail to show whether you have met, partially met, or not met the achievement target.

Interpret/Evaluate Information

Analyzing Findings
• Three key questions at the heart of the analysis:
  – What did you find and learn?
  – So What does that mean for your unit or program?
  – Now What will you do as a result of the first two answers?

Analysis Question Responses should…
• Demonstrate thorough analysis of the given findings
• Provide additional context for the action plan (why this approach was selected, why it is expected to make a difference, etc.)
• Update previous action plans with the results of their implementation

Modify & Improve

Action Plans
• After reflecting on the findings, you and your colleagues should determine appropriate actions to improve the services provided.
• Actions outlined in the Action Plan should be specific and relate to the outcome and the results of assessment.
  – Action Plans should not be related to the assessment process itself

An Action Plan will…
• Clearly communicate how the collected evidence of efficiency, satisfaction, or other findings informs a change or improvement to processes and services.
• This DOES NOT include:
  – Changes to assessment processes
  – Continued monitoring of information
  – Changes to the program not informed by the data collected through the assessment process

Assessment Review
• Mission Statement
• Outcomes/Objectives
• Measures
• Targets
• Findings
• Action Plans
• Analysis Questions

Take-Home Messages
• Assess what is important
• Use your findings to inform actions
• You do not have to assess everything every year

OIA Consultations
• WEAVEonline support and training
• Assessment plan design, clean-up, and re-design
  – And we can come to you!
• New website: assessment.tamu.edu

Questions?
http://assessment.tamu.edu/conference

References
Southern Association of Colleges and Schools Commission on Colleges (SACS COC). (2008). The Principles of Accreditation: Foundations for Quality Enhancement (2008 ed.).
Palomba, C., & Banta, T. W. (1999). Assessment Essentials. San Francisco: Jossey-Bass.
Banta, T. W. (2004). Hallmarks of Effective Outcomes Assessment. San Francisco: John Wiley and Sons.
Walvoord, B. E. (2004). Assessment Clear and Simple: A Practical Guide for Institutions, Departments, and General Education. San Francisco: Jossey-Bass.

Assessment manuals from Western Carolina University, Texas Christian University, and the University of Central Florida were very helpful in developing this presentation. Putting It All Together examples were adapted from the assessment plans of Georgia State University, the University of North Texas, and the University of Central Florida.