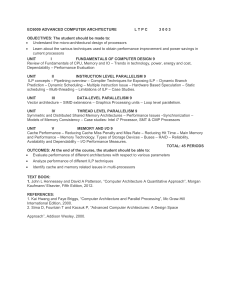

TRENDS IN PARALLEL ARCHITECTURES By: Saba Ahsan Assistant Professor Department of Computer Engineering, Sir Syed University of Engineering & Technology, Technology Trends • Moore’s law • • • is an empirical observation which states that the number of transistors of a typical processor chip doubles every 18–24 months and in 2020 a typical processor chip might consist upto dozens to hundreds of cores. This observation was first made by Gordon Moore in 1965 and is valid now for more than 40 years. Moore’s predictions proved accurate for several decades and has been used in the semiconductor industry to guide long-term planning and set targets for research and development. Advancement in digital electronics are strongly linked to Moore’s law. Quality adjusted memory price, memory capacity, sensor and even the no & size of pixels in digital camera’s. Technology Trends • Moore’s Law describes a driving force of technological • • • • and social change, productivity and economic growth. GPUs(Graphics Processing Units): Another trend in parallel computing is the use of GPUs for compute intensive applications. GPU architectures provide many hundreds of specialized processing cores that can perform computation in parallel. It is useful for Machine Learning, video editing and gaming applications. If large no of cores then single application program would be executed on multiple cores. Parallelism in Uniprocessors • Different phases of microprocessor design trends exist – all of them are mainly driven by the internal use of parallelism: o o o o o o Parallelism at bit-level Instruction level Parallelism Data Parallelism Parallelism by pipelining Parallelism by multiple functional units Parallelism at process or thread level 1 Parallelism at Bit-Level • This development has been driven by demands for • improved floating-point accuracy and a larger address space. The trend has stopped at a word size of 64 bits, since this gives sufficient accuracy for floating-point numbers and covers a sufficiently large address space of 264 bytes. 10 + 03 === 13 1 0 1 0 + + + + 0 0 1 1 = = = = 1 1 0 1 1-bit Full Adder 1-bit Full Adder 1-bit Full Adder 1-bit Full Adder Instruction level Parallelism • How many instructions in a computer program can be executed simultaneously. • Hardware level works----Dynamic parallelism • Software level works-----Static parallelism • Dynamic Parallelism: Processor decides at run time which instructions to execute in parallel. • Static Parallelism: Computer decides which instruction to execute in parallel e.g • e= a+b • d= g+h • x= e-d dependent on instruction 1 & 2 so can’t be executed in parallel Data Parallelism • Parallelism across multiple processors in parallel computing environment. • Data distributes in parallel across different nodes. Parallelism by Pipelining 2 • The idea of pipelining at instruction level is an overlapping of the execution of multiple instructions • The execution of each instruction is partitioned into • several steps which are performed by dedicated hardware units (pipeline stages) one after another. A typical partitioning could result in the following steps: o o o o Fetch: fetch the next instruction to be executed from memory Decode: decode the instruction fetched in step one Execute: load the operands specified and execute the instruction Write-back: write the result into the target register INSTRUCTION 2 INSTRUCTION 1 F1 T1 WRITE-BACK EXECUTE INSTRUCTION 3 DECODE INSTRUCTION 4 FETCH Instruction Pipeline Flow F4 D4 F3 D3 E3 W3 F2 D2 E2 W2 D1 E1 W1 T2 T3 E4 W4 Time T4 ILP Processors • Processors which use pipelining to execute instructions • • • • are called ILP processors (Instruction-Level Parallelism) In the absence of dependencies, all pipeline stages work in parallel Typical numbers of pipeline stages lie between 2 and 26 stages. Processors with a relatively large number of pipeline stages are sometimes called super-pipelined Although the available degree of parallelism increases with the number of pipeline stages, this number cannot be arbitrarily increased, since it is not possible to partition the execution of the instruction into a very large number of steps of equal size. Parallelism by Multiple 3 Functional Units • Many processors are multiple-issue processors. They use: o o o o multiple, independent functional units like ALUs FPUs (floating-point units) Load/store units Branch (prediction or handling) units • These units can work in parallel, (i.e., different • independent instructions can be executed in parallel by different functional units) thus increasing the average execution rate of instructions. Multiple-issue processors can either be: o o Superscalar processors VLIW (Very Long Instruction Word) processors Superscalar Processors • For superscalar processors, the dependencies are • • • determined at run-time dynamically by the hardware, and decoded instructions are dispatched to the instruction units using dynamic scheduling by the hardware. Superscalars exhibit increasingly complex hardware circuitry. Superscalar processors with up to four functional units yield a substantial benefit over a single functional unit. Using even more functional units provides little additional gain because of dependencies between instructions and branching of control flow. Drawbacks of previous three techniques • The three techniques described so far assume a single • • • sequential control flow which is provided by the sequential programming language compiler which determines the execution order if there are dependencies between instructions. However, the degree of parallelism obtained by pipelining and multiple functional units is limited and has already been reached for some time for typical processors. But more and more transistors are available per processor chip according to Moore’s law. This can be used to integrate larger caches on the chip But the cache sizes cannot be arbitrarily increased either, as larger caches lead to a larger access time! Parallelism at Process/ 4 Thread Level • An alternative approach to use the increasing number • • • of transistors on a chip is to put multiple, independent processor cores onto a single processor chip. This approach has been used for typical desktop processors since 2005 and is known as multi-core processors Each of the cores of a multi-core processor must obtain a separate flow of control, i.e., some parallel programming techniques must be used. The cores of a processor chip access the same memory and may even share caches requiring their coordinated memory accesses. Flynn’s Taxonomy • A parallel computer can be characterized as a collection of • • • processing elements that can communicate and cooperate to solve large problems fast. This taxonomy characterizes parallel computers according to the global control and the resulting data and control flows. Instruction stream(The sequence of instruction from memory to control unit), Data stream(one operation at a time), Single vs. multiple Four combinations o SISD (Single Instruction Single Data) o SIMD (Single Instruction Multiple Data) o MISD (Multiple Instruction Single Data) o MIMD (Multiple Inst. Multiple Data) SISD(Single Instruction S. Data) • There is one processing element which has access to a single program and data storage. • In each step, the processing element loads an • • instruction and the corresponding data and executes the instruction. Single-CPU systems Note: co-processors don’t count o Functional o I/O • Example: conventional PCs SISD(Single Instruction S. Data) Instruction Stream I/O Control Unit Processor Instruction Stream Data Stream Memory SIMD (Single Inst. Multiple Data) • There are multiple processing elements each of which • • • has a private access to a (shared or distributed) data memory. But there is only one program memory from which a special control processor fetches and dispatches instructions. In each step, each processing element obtains from the control processor the same instruction and loads a separate data element through its private data access on which the instruction is performed. E.g. are multimedia applications or computer graphics algorithms to generate realistic three-dimensional views of computer-generated environments. SIMD (Single Inst. Multiple Data) Processor #1 Memory #1 Instruction Stream Data Stream Control Unit Program Loaded From Front end Processor #2 Memory #2 Processor #n Memory #n SIMD Scheme 1 • Each processor has its own local memory. • SIMD Scheme 2 • Processors and memory modules communicate with each other via interconnection network. MISD (Multiple Inst. Single Data) • There are multiple processing elements each of which • • has a private program memory, but there is only one common access to a single global data memory. In each step, each processing element obtains the same data element from the data memory and loads an instruction from its private program memory. This execution model is very restrictive and no commercial parallel computer of this type has ever been built. MIMD (Multiple Inst. Multi. Data) • There are multiple processing elements each of which • • has a separate instruction and data access to a (shared or distributed) program and data memory. In each step, each processing element loads a separate instruction and a separate data element, applies the instruction to the data element, and stores a possible result back into the data storage. Multiple-CPU computers o Multiprocessors o Multicomputers Measuring Performance FLOPS • Flops (floating point ops / second) is a measure of • • • computer performance, useful in fields of scientific calculations that make heavy use of floating point calculations. A floating point operation requires more processing than a fixed point operation. Categorize as: Mega-flops (106) o Giga-flops (109) o Tera-flops (1012) o Peta-flops(1015) o Exa-flops(1018) Memory Organization of Parallel Computers • Nearly all general-purpose parallel computers are based on the MIMD model. • From the programmer’s point of view, it can be • distinguished between computers with a distributed address space and computers with a shared address space. For example, a parallel computer with a physically distributed memory may appear to the programmer as a computer with a shared address space when a corresponding programming environment is used. Memory Organization of Parallel Computers Parallel and Distributed MIMD Computer Systems Multicomputer Systems Hybrid Systems Multiprocessor Systems Computers with Distributed memory Shared computers With virtually memory Computers with Memory shared Distributed Computing • Distributed Computing is a field of computer science that studies distributed systems. A distributed System is a model in which components located on network. Computer communicate & Co-ordinate their actions by passing messages. The components interact with each other in order to achieve a common goal. Computers with Distributed Memory Network Network DMA P P P P M M M M M DMA P M P DMM – Architectural Description • They consist of: • • • • o a number of processing elements (called nodes) and o an interconnection network which connects nodes and supports the transfer of data between nodes A node is an independent unit, consisting of processor, local memory, and, sometimes peripherals Program data is stored in the local memory of one or several nodes. All local memory is private and only the local processor can access the local memory directly When a processor needs data from the local memory of other nodes to perform local computations, message-passing has to be performed via the interconnection network to provide communication between cooperating sequential processes. Decoupling DMM's – DMM's & routers • A DMA controller at each node can decouple the • • execution of communication operations from the processor’s operations to control the data transfer between the local memory and the I/O controller. A further decoupling can be obtained by connecting nodes to routers. The routers form the actual network over which communication can be performed DMM - considerations • Technically, DMM's are quite easy to assemble since standard desktop computers can be used as nodes • The programming of DMM's requires a careful data • layout, since each processor can directly access only its local data Non-local data must be accessed via message-passing, and the execution of the corresponding send and receive operations takes significantly longer than a local memory access DMM, NOW and Clusters • The structure of DMM's has many similarities with • • • • networks of workstations (NOW's) in which standard workstations are connected by a fast LAN An important difference is that interconnection networks of DMM's are typically more specialized and provide larger bandwidths and lower latencies, thus leading to a faster message exchange Collections of complete computers with a dedicated interconnection network are often called Clusters Clusters are usually based on standard computers and even standard network topologies. The entire cluster is addressed and programmed as a single unit. From Clusters to Grids • A natural programming model of DMMs is the message- • • • passing model that is supported by communication libraries like MPI or PVM. These libraries are often based on standard protocols like TCP/IP The difference between cluster systems and distributed systems lies in the fact that the nodes in cluster systems use the same operating system and can usually not be addressed individually; instead a special job scheduler must be used Several clusters can be connected to grid systems by using middle-ware software (which controls execution of its application programs – e.g. the Globus Toolkit) to allow a coordinated collaboration among them.