Mathematical Finance: Stochastic Calculus Problems & Solutions

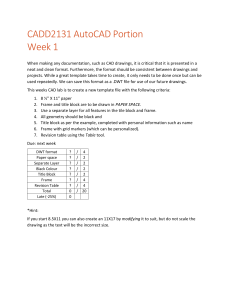

Chin

ffirs.tex

V3 - 08/23/2014

4:39 P.M.

Page vi

Problems and Solutions

in Mathematical Finance

For other titles in the Wiley Finance Series

please see www.wiley.com/finance

Problems and Solutions

in Mathematical Finance

Volume 1: Stochastic Calculus

Eric Chin, Dian Nel and Sverrir Ólafsson

This edition first published 2014

© 2014 John Wiley & Sons, Ltd

Registered office

John Wiley & Sons Ltd, The Atrium, Southern Gate, Chichester, West Sussex, PO19 8SQ, United Kingdom

For details of our global editorial offices, for customer services and for information about how to apply for

permission to reuse the copyright material in this book please see our website at www.wiley.com.

All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any

form or by any means, electronic, mechanical, photocopying, recording or otherwise, except as permitted by the UK

Copyright, Designs and Patents Act 1988, without the prior permission of the publisher.

Wiley publishes in a variety of print and electronic formats and by print-on-demand. Some material included with

standard print versions of this book may not be included in e-books or in print-on-demand. If this book refers to

media such as a CD or DVD that is not included in the version you purchased, you may download this material at

http://booksupport.wiley.com. For more information about Wiley products, visit www.wiley.com.

Designations used by companies to distinguish their products are often claimed as trademarks. All brand names and

product names used in this book are trade names, service marks, trademarks or registered trademarks of their

respective owners. The publisher is not associated with any product or vendor mentioned in this book.

Limit of Liability/Disclaimer of Warranty: While the publisher and author have used their best efforts in preparing

this book, they make no representations or warranties with respect to the accuracy or completeness of the

contents of this book and specifically disclaim any implied warranties of merchantability or fitness for a particular

purpose. It is sold on the understanding that the publisher is not engaged in rendering professional services and

neither the publisher nor the author shall be liable for damages arising herefrom. If professional advice or other

expert assistance is required, the services of a competent professional should be sought.

Library of Congress Cataloging-in-Publication Data

Chin, Eric,

Problems and solutions in mathematical finance : stochastic calculus / Eric Chin, Dian Nel and Sverrir Ólafsson.

pages cm

Includes bibliographical references and index.

ISBN 978-1-119-96583-1 (cloth)

1. Finance – Mathematical models. 2. Stochastic analysis. I. Nel, Dian, II. Ólafsson, Sverrir, III. Title.

HG106.C495 2014

332.01′ 51922 – dc23

2013043864

A catalogue record for this book is available from the British Library.

ISBN 978-1-119-96583-1 (hardback) ISBN 978-1-119-96607-4 (ebk)

ISBN 978-1-119-96608-1 (ebk)

ISBN 978-1-118-84514-1 (ebk)

Cover design: Cylinder

Typeset in 10/12pt TimesLTStd by Laserwords Private Limited, Chennai, India

Printed in Great Britain by CPI Group (UK) Ltd, Croydon, CR0 4YY

“the beginning of a task is the biggest step”

Plato, The Republic

Contents

Preface
Prologue
About the Authors

1 General Probability Theory
  1.1 Introduction
  1.2 Problems and Solutions
    1.2.1 Probability Spaces
    1.2.2 Discrete and Continuous Random Variables
    1.2.3 Properties of Expectations

2 Wiener Process
  2.1 Introduction
  2.2 Problems and Solutions
    2.2.1 Basic Properties
    2.2.2 Markov Property
    2.2.3 Martingale Property
    2.2.4 First Passage Time
    2.2.5 Reflection Principle
    2.2.6 Quadratic Variation

3 Stochastic Differential Equations
  3.1 Introduction
  3.2 Problems and Solutions
    3.2.1 Itō Calculus
    3.2.2 One-Dimensional Diffusion Process
    3.2.3 Multi-Dimensional Diffusion Process

4 Change of Measure
  4.1 Introduction
  4.2 Problems and Solutions
    4.2.1 Martingale Representation Theorem
    4.2.2 Girsanov’s Theorem
    4.2.3 Risk-Neutral Measure

5 Poisson Process
  5.1 Introduction
  5.2 Problems and Solutions
    5.2.1 Properties of Poisson Process
    5.2.2 Jump Diffusion Process
    5.2.3 Girsanov’s Theorem for Jump Processes
    5.2.4 Risk-Neutral Measure for Jump Processes

Appendix A Mathematics Formulae
Appendix B Probability Theory Formulae
Appendix C Differential Equations Formulae
Bibliography
Notation
Index

Preface

Mathematical finance is based on highly rigorous and, on occasions, abstract mathematical

structures that need to be mastered by anyone who wants to be successful in this field, be it

working as a quant in a trading environment or as an academic researcher in the field. It may

appear strange, but it is true, that mathematical finance has turned into one of the most advanced

and sophisticated fields in applied mathematics. This development has had considerable impact

on financial engineering with its extensive applications to the pricing of contingent claims

and synthetic cash flows as analysed both within financial institutions (investment banks) and

corporations. Successful understanding and application of financial engineering techniques to

highly relevant and practical situations requires the mastering of basic financial mathematics.

It is precisely for this purpose that this book series has been written.

In Volume I, the first of a four-volume work, we briefly develop all the major mathematical

concepts and theorems required for modern mathematical finance. The text starts with probability theory and works across stochastic processes, with the main focus on Wiener and Poisson

processes. It then moves to stochastic differential equations including change of measure and

martingale representation theorems. However, the main focus of the book remains practical.

After being introduced to the fundamental concepts the reader is invited to test his/her knowledge on a whole range of different practical problems. Whereas most texts on mathematical

finance focus on an extensive development of the theoretical foundations with only occasional

concrete problems, our focus is a compact and self-contained presentation of the theoretical

foundations followed by extensive applications of the theory. We advocate a more balanced

approach enabling the reader to develop his/her understanding through a step-by-step collection of questions and answers. The necessary foundation to solve these problems is provided

in a compact form at the beginning of each chapter. In our view that is the most successful way

to master this very technical field.

No one can write a book on mathematical finance today, not to mention four volumes, without

being influenced, both in approach and presentation, by some excellent textbooks in the field.

The texts we have mostly drawn upon in our research and teaching are (in no particular order

of preference) Tomas Björk, Arbitrage Theory in Continuous Time; Steven Shreve, Stochastic

Calculus for Finance; Marek Musiela and Marek Rutkowski, Martingale Methods in Financial

Modelling; and, for the more practical aspects of derivatives, John Hull, Options, Futures and

Other Derivatives. For the more mathematical treatment of stochastic calculus a very influential text is that of Bernt Øksendal, Stochastic Differential Equations. Other important texts are

listed in the bibliography.

Note to the student/reader. Please try hard to solve the problems on your own before you

look at the solutions!

Prologue

IN THE BEGINNING WAS THE MOTION. . .

The development of modern mathematical techniques for financial applications can be traced

back to Bachelier’s work, Theory of Speculation, first published as his PhD Thesis in 1900.

At that time Bachelier was studying the highly irregular movements in stock prices on the

French stock market. He was aware of the earlier work of the Scottish botanist Robert Brown,

in the year 1827, on the irregular movements of plant pollen when suspended in a fluid. Bachelier worked out the first mathematical model for the irregular pollen movements reported by

Brown, with the intention to apply it to the analysis of irregular asset prices. This was a highly

original and revolutionary approach to phenomena in finance. Since the publication of Bachelier’s PhD thesis, there has been steady progress in the modelling of financial asset prices.

A few years later, in 1905, Albert Einstein formulated a more extensive theory of irregular molecular processes, already then called Brownian motion. That work was continued and extended

in the 1920s by the mathematical physicist Norbert Wiener who developed a fully rigorous

framework for Brownian motion processes, now generally called Wiener processes.

Other major steps that paved the way for further development of mathematical

finance included the works by Kolmogorov on stochastic differential equations, Fama

on the efficient-market hypothesis and Samuelson on randomly fluctuating forward prices.

Further important developments in mathematical finance were fuelled by the realisation of the

importance of Itō’s lemma in stochastic calculus and the Feynman-Kac formula, originally

drawn from particle physics, in linking stochastic processes to partial differential equations

of parabolic type. The Feynman-Kac formula provides an immensely important tool for

the solution of partial differential equations “extracted” from stochastic processes via Itō’s

lemma. The real relevance of Itō’s lemma and the Feynman-Kac formula in finance was only

realised after some further substantial developments had taken place.

The year 1973 saw the most important breakthrough in financial theory when Black and

Scholes and subsequently Merton derived a model that enabled the pricing of European call and

put options. Their work had immense practical implications and led to an explosive increase

in the trading of derivative securities on some major stock and commodity exchanges. Moreover, the philosophical foundation of that approach, which is based on the construction of

risk-neutral portfolios and enables an elegant and practical way of pricing derivative contracts,

has had a lasting and revolutionary impact on the whole of mathematical finance. The development initiated by Black, Scholes and Merton was continued by various researchers, notably

Harrison, Kreps and Pliska in the 1980s. These authors established the hugely important role of

martingales and arbitrage theory for the pricing of a large class of derivative securities or,

as they are generally called, contingent claims. Already in the Black, Scholes and Merton

model the risk-neutral measure had been informally introduced as a consequence of the construction of risk-neutral portfolios. Harrison, Kreps and Pliska took this development further

and turned it into the most powerful and general tool presently available for the pricing of

contingent claims.

Within the Harrison, Kreps and Pliska framework the change of numéraire technique plays

a fundamental role. Essentially the price of any asset, including contingent claims, can be

expressed in terms of units of any other asset. The unit asset plays the role of a numéraire. For

a given asset and a selected numéraire we can construct a probability measure that turns the

asset price, in units of the numéraire, into a martingale whose existence is equivalent to the

absence of an arbitrage opportunity. These results are among the deepest and most fundamental

in modern financial theory and are therefore a core construct in mathematical finance.

In the wake of the recent financial crisis, which started in the second half of 2007, some

commentators and academics have voiced their opinion that financial mathematicians and their

techniques are to be blamed for what happened. The authors do not subscribe to this view. On

the contrary, they believe that to improve the robustness and the soundness of financial contracts, an even better mathematical training for quants is required. This encompasses a better

comprehension of all tools in the quant’s technical toolbox such as optimisation, probability,

statistics, stochastic calculus and partial differential equations, just to name a few.

Financial market innovation is here to stay. Tighter regulation and validation, together with a deeper understanding of models, will be the only way forward.

Therefore, new developments and market instruments require more scrutiny, testing and validation. Any inadequacies and weaknesses of model assumptions identified during the validation process should be addressed with appropriate reserve methodologies to offset sudden

changes in the market direction. The reserve methodologies can be subdivided into model

(e.g., Black-Scholes or Dupire model), implementation (e.g., tree-based or Monte Carlo simulation technique to price the contingent claim), calibration (e.g., types of algorithms to solve

optimisation problems, interpolation and extrapolation methods when constructing volatility

surface), market parameters (e.g., confidence interval of correlation marking between underlyings) and market risk (e.g., when market price of a stock is close to the option’s strike price

at expiry time). These are the empirical aspects of mathematical finance that need to be a core

part in the further development of financial engineering.

One should keep in mind that mathematical finance is not, and must never become, an esoteric subject to be left to ivory tower academics alone, but a powerful tool for the analysis of

real financial scenarios, as faced by corporations and financial institutions alike. Mathematical

finance needs to be practiced in the real world for it to have sustainable benefits. Practitioners

must realise that mathematical analysis needs to be built on a clear formulation of financial

realities, followed by solid quantitative modelling, and then stress testing the model. It is our

view that the recent turmoil in financial markets calls for more careful application of quantitative techniques but not their abolition. Intuition alone or behavioural models have their

role to play but do not suffice when dealing with concrete financial realities such as risk quantification and risk management, asset and liability management, pricing insurance contracts

or complex financial instruments. These tasks require better and more relevant education for

quants and risk managers.

Financial mathematics is still a young and fast developing discipline. On the other hand, markets present an extremely complex and distributed system where a huge number of interrelated

financial instruments are priced and traded. Financial mathematics is very powerful in pricing

and managing a limited number of instruments bundled into a portfolio. However, modern

financial mathematics is still rather poor at capturing the extremely intricate contractual interrelationships that exist between large numbers of traded securities. In other words, it is only

to a very limited extent able to capture the complex dynamics of the whole market, which is

driven by a large number of unpredictable processes which possess varying degrees of correlation. The emergent behaviour of the market is to an extent driven by these varying degrees of

correlations. It is perhaps one of the major present day challenges for financial mathematics to

join forces with the modern theory of complexity with the aim of being able to capture the macroscopic properties of the market that emerge from the microscopic interrelations between a

large number of individual securities. That this goal has not been reached yet is no criticism

of financial mathematics. It only bears witness to its juvenile nature and the huge complexity

of its subject.

Solid training of financial mathematicians in a whole range of quantitative disciplines,

including probability theory and stochastic calculus, is an important milestone in the further

development of the field. In the process, it is important to realise that financial engineering

needs more than just mathematics. It also needs judgement, and the quant should

constantly be reminded that no two market situations or two market instruments are exactly

the same. Applying the same mathematical tools to different situations reminds us of the

fact that we are always dealing with an approximation, which reflects the fact that we are

modelling stochastic processes, i.e., uncertainties. Students and practitioners should always

bear this in mind.

About the Authors

Eric Chin is a quantitative analyst at an investment bank in the City of London where he

is involved in providing guidance on price testing methodologies and their implementation,

formulating model calibration and model appropriateness on commodity and credit products.

Prior to joining the banking industry he worked as a senior researcher at British Telecom investigating radio spectrum trading and risk management within the telecommunications sector. He

holds an MSc in Applied Statistics and an MSc in Mathematical Finance, both from the University

of Oxford. He also holds a PhD in Mathematics from the University of Dundee.

Dian Nel has more than 10 years of experience in the commodities sector. He currently works

in the City of London where he specialises in oil and gas markets. He holds a BEng in Electrical and Electronic Engineering from Stellenbosch University and an MSc in Mathematical

Finance from Christ Church, Oxford University. He is a Chartered Engineer registered with

the Engineering Council UK.

Sverrir Ólafsson is Professor of Financial Mathematics at Reykjavik University; a Visiting

Professor at Queen Mary University, London and a director of Riskcon Ltd, a UK-based risk

management consultancy. Previously he was a Chief Researcher at BT Research and held

academic positions at The Mathematical Departments of King’s College, London; UMIST

Manchester and The University of Southampton. Dr Ólafsson is the author of over 95 refereed academic papers and has been a keynote speaker at numerous international conferences

and seminars. He is on the editorial board of three international journals. He has provided

extensive consultancy on financial risk management and given numerous specialist seminars to

finance specialists. In the last five years his main teaching has been MSc courses on Risk Management, Fixed Income, and Mathematical Finance. He has an MSc and PhD in mathematical

physics from the Universities of Tübingen and Karlsruhe respectively.

1

General Probability Theory

Probability theory is a branch of mathematics that deals with mathematical models of trials

whose outcomes depend on chance. Within the context of mathematical finance, we will review

some basic concepts of probability theory that are needed to begin solving stochastic calculus

problems. The topics covered in this chapter are by no means exhaustive but are sufficient to

be utilised in the following chapters and in later volumes. However, in order to fully grasp the

concepts, an undergraduate level of mathematics and probability theory is generally required

from the reader (see Appendices A and B for a quick review of some basic mathematics and

probability theory). In addition, the reader is also advised to refer to the notation section (pages

369–372) on set theory, mathematical and probability symbols used in this book.

1.1 INTRODUCTION

We consider an experiment or a trial whose result (outcome) is not predictable with certainty.

The set of all possible outcomes of an experiment is called the sample space and we denote it

by Ω. Any subset A of the sample space is known as an event, where an event is a set consisting

of possible outcomes of the experiment.

The collection of events can be defined as a subcollection ℱ of the set of all subsets of Ω

and we define any collection ℱ of subsets of Ω as a field if it satisfies the following.

Definition 1.1 The sample space Ω is the set of all possible outcomes of an experiment

or random trial. A field is a collection (or family) ℱ of subsets of Ω with the following

conditions:

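(a) $\emptyset \in \mathcal{F}$ where $\emptyset$ is the empty set;

(b) if $A \in \mathcal{F}$ then $A^c \in \mathcal{F}$ where $A^c$ is the complement of $A$ in $\Omega$;

(c) if $A_1, A_2, \ldots, A_n \in \mathcal{F}$, $n \geq 2$ then $\bigcup_{i=1}^{n} A_i \in \mathcal{F}$, that is to say, $\mathcal{F}$ is closed under finite unions.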

It should be noted in the definition of a field that ℱ is closed under finite unions (as well

as under finite intersections). As for the case of a collection of events closed under countable

unions (as well as under countable intersections), any collection of subsets of Ω with such

properties is called a 𝜎-algebra.

Definition 1.2 If Ω is a given sample space, then a 𝜎-algebra (or 𝜎-field) ℱ on Ω is a family

(or collection) ℱ of subsets of Ω with the following properties:

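(a) $\emptyset \in \mathcal{F}$;

(b) if $A \in \mathcal{F}$ then $A^c \in \mathcal{F}$ where $A^c$ is the complement of $A$ in $\Omega$;

(c) if $A_1, A_2, \ldots \in \mathcal{F}$ then $\bigcup_{i=1}^{\infty} A_i \in \mathcal{F}$, that is to say, $\mathcal{F}$ is closed under countable unions.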
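On a finite sample space the three conditions of a 𝜎-algebra can be checked mechanically, since closure under countable unions reduces to closure under pairwise unions. The following is a minimal sketch under that finiteness assumption. The helper `is_sigma_algebra` and the example families are hypothetical illustrations and are not taken from the text.

```python
from itertools import combinations

def is_sigma_algebra(omega, family):
    """Check conditions (a)-(c) of a sigma-algebra on a FINITE sample space.

    With finitely many sets, closure under countable unions is equivalent
    to closure under pairwise unions, so only pairs are tested.
    """
    omega = frozenset(omega)
    family = {frozenset(s) for s in family}
    if frozenset() not in family:                       # (a) contains the empty set
        return False
    if any(omega - a not in family for a in family):    # (b) closed under complements
        return False
    return all(a | b in family for a, b in combinations(family, 2))  # (c) unions

omega = {1, 2, 3, 4}
trivial = [set(), omega]
generated_by_A = [set(), {1, 2}, {3, 4}, omega]   # {emptyset, A, A^c, Omega}
not_closed = [set(), {1}, {2}, omega]             # complement of {1} is missing

print(is_sigma_algebra(omega, trivial))         # True
print(is_sigma_algebra(omega, generated_by_A))  # True
print(is_sigma_algebra(omega, not_closed))      # False
```

The check is exhaustive only because Ω is finite. For infinite sample spaces the definition has to be verified by argument, as in the problems of Section 1.2.1.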

We next outline an approach to probability which is a branch of measure theory. The reason

for taking a measure-theoretic path is that it leads to a unified treatment of both discrete and

continuous random variables, as well as a general definition of conditional expectation.

Definition 1.3 The pair (Ω, ℱ) is called a measurable space. A probability measure ℙ on a

measurable space (Ω, ℱ) is a function ℙ ∶ ℱ → [0, 1] such that:

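(a) $\mathbb{P}(\emptyset) = 0$;

(b) $\mathbb{P}(\Omega) = 1$;

(c) if $A_1, A_2, \ldots \in \mathcal{F}$ and $\{A_i\}_{i=1}^{\infty}$ is disjoint such that $A_i \cap A_j = \emptyset$, $i \neq j$ then
\[ \mathbb{P}\left(\bigcup_{i=1}^{\infty} A_i\right) = \sum_{i=1}^{\infty} \mathbb{P}(A_i). \]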

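The triple $(\Omega, \mathcal{F}, \mathbb{P})$ is called a probability space. It is called a complete probability space

if $\mathcal{F}$ also contains subsets $B$ of $\Omega$ with $\mathbb{P}$-outer measure zero, that is $\mathbb{P}^{*}(B) = \inf\{\mathbb{P}(A) : A \in \mathcal{F}, B \subset A\} = 0$.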

By treating 𝜎-algebras as a record of information, we have the following definition of a

filtration.

Definition 1.4 Let $\Omega$ be a non-empty sample space and let $T$ be a fixed positive number, and

assume for each $t \in [0, T]$ there is a $\sigma$-algebra $\mathcal{F}_t$. In addition, we assume that if $s \leq t$, then

every set in $\mathcal{F}_s$ is also in $\mathcal{F}_t$. We call the collection of $\sigma$-algebras $\mathcal{F}_t$, $0 \leq t \leq T$, a filtration.

Below we look into the definition of a real-valued random variable, which is a function that

maps a probability space (Ω, ℱ, ℙ) to a measurable space ℝ.

Definition 1.5 Let Ω be a non-empty sample space and let ℱ be a 𝜎-algebra of subsets of Ω.

A real-valued random variable X is a function X ∶ Ω → ℝ such that {𝜔 ∈ Ω ∶ X(𝜔) ≤ x}

∈ ℱ for each x ∈ ℝ and we say X is ℱ measurable.

In the study of stochastic processes, an adapted stochastic process is one that cannot “see

into the future” and in mathematical finance we assume that asset prices and portfolio positions

taken at time $t$ are all adapted to a filtration $\mathcal{F}_t$, which we regard as the flow of information

up to time $t$. Therefore, these values must be $\mathcal{F}_t$ measurable (i.e., depend only on information

available to investors at time t). The following is the precise definition of an adapted stochastic

process.

Definition 1.6 Let $\Omega$ be a non-empty sample space with a filtration $\mathcal{F}_t$, $t \in [0, T]$ and let $X_t$

be a collection of random variables indexed by $t \in [0, T]$. We therefore say that this collection

of random variables is an adapted stochastic process if, for each $t$, the random variable $X_t$ is

$\mathcal{F}_t$ measurable.

Finally, we consider the concept of conditional expectation, which is extremely important

in probability theory and also for its wide application in mathematical finance such as pricing

options and other derivative products. Conceptually, we consider a random variable X defined

on the probability space (Ω, ℱ, ℙ) and a sub-𝜎-algebra 𝒢 of ℱ (i.e., sets in 𝒢 are also in ℱ).

Here X can represent a quantity we want to estimate, say the price of a stock in the future, while

𝒢 contains limited information about X such as the stock price up to and including the current

time. Thus, 𝔼(X|𝒢) constitutes the best estimation we can make about X given the limited

knowledge 𝒢. The following is a formal definition of a conditional expectation.

Definition 1.7 (Conditional Expectation) Let (Ω, ℱ, ℙ) be a probability space and let 𝒢 be

a sub-𝜎-algebra of ℱ (i.e., sets in 𝒢 are also in ℱ). Let X be an integrable (i.e., 𝔼(|X|) < ∞)

and non-negative random variable. Then the conditional expectation of X given 𝒢, denoted

𝔼(X|𝒢), is any random variable that satisfies:

(a) 𝔼(X|𝒢) is 𝒢 measurable;

(b) for every set $A \in \mathcal{G}$, we have the partial averaging property
\[ \int_A \mathbb{E}(X|\mathcal{G})\, d\mathbb{P} = \int_A X\, d\mathbb{P}. \]

From the above definition, we can list the following properties of conditional expectation.

Here (Ω, ℱ, ℙ) is a probability space, 𝒢 is a sub-𝜎-algebra of ℱ and X is an integrable random

variable.

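• Conditional probability. If $\mathbb{1}_A$ is an indicator random variable for an event $A$ then

$\mathbb{E}(\mathbb{1}_A|\mathcal{G}) = \mathbb{P}(A|\mathcal{G})$.

• Linearity. If $X_1, X_2, \ldots, X_n$ are integrable random variables and $c_1, c_2, \ldots, c_n$ are constants then

$\mathbb{E}(c_1 X_1 + c_2 X_2 + \ldots + c_n X_n|\mathcal{G}) = c_1 \mathbb{E}(X_1|\mathcal{G}) + c_2 \mathbb{E}(X_2|\mathcal{G}) + \ldots + c_n \mathbb{E}(X_n|\mathcal{G})$.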

• Positivity. If X ≥ 0 almost surely then 𝔼(X|𝒢) ≥ 0 almost surely.

• Monotonicity. If X and Y are integrable random variables and X ≤ Y almost surely then

𝔼(X|𝒢) ≤ 𝔼(Y|𝒢).

• Computing expectations by conditioning. 𝔼[𝔼(X|𝒢)] = 𝔼(X).

• Taking out what is known. If X and Y are integrable random variables and X is 𝒢 measurable then

𝔼(XY|𝒢) = X ⋅ 𝔼(Y|𝒢).

• Tower property. If ℋ is a sub-𝜎-algebra of 𝒢 then

𝔼[𝔼(X|𝒢)|ℋ] = 𝔼(X|ℋ).

• Measurability. If X is 𝒢 measurable then 𝔼(X|𝒢) = X.

• Independence. If X is independent of 𝒢 then 𝔼(X|𝒢) = 𝔼(X).

• Conditional Jensen’s inequality. If 𝜑 ∶ ℝ → ℝ is a convex function then

𝔼[𝜑(X)|𝒢] ≥ 𝜑[𝔼(X|𝒢)].
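Several of the properties listed above can be verified by hand on a finite probability space, where a sub-𝜎-algebra corresponds to a partition of Ω and the conditional expectation is the probability-weighted average over each block. The sketch below assumes a hypothetical two-coin-toss example. The names `cond_exp`, `G`, `H` and `Y` are illustrative choices, not notation from the text.

```python
from fractions import Fraction

# Hypothetical example: two fair coin tosses; X counts the heads.
omega = ["HH", "HT", "TH", "TT"]
prob = {w: Fraction(1, 4) for w in omega}
X = {w: w.count("H") for w in omega}

# On a finite space a sigma-algebra is generated by a partition of omega.
G = [{"HH", "HT"}, {"TH", "TT"}]   # information: result of the first toss only
H = [set(omega)]                   # trivial sigma-algebra, contained in G

def expectation(Z):
    return sum(Z[w] * prob[w] for w in omega)

def cond_exp(Z, partition):
    """E(Z | partition): probability-weighted average of Z on each block."""
    result = {}
    for block in partition:
        p_block = sum(prob[w] for w in block)
        average = sum(Z[w] * prob[w] for w in block) / p_block
        for w in block:
            result[w] = average
    return result

X_given_G = cond_exp(X, G)

# Partial averaging: the integrals of E(X|G) and X agree on every block of G.
for block in G:
    assert sum(X_given_G[w] * prob[w] for w in block) == sum(X[w] * prob[w] for w in block)

# Computing expectations by conditioning, and the tower property.
assert expectation(X_given_G) == expectation(X)
assert cond_exp(X_given_G, H) == cond_exp(X, H)

# Taking out what is known: Y depends on the first toss only, so it is G measurable.
Y = {w: 1 if w[0] == "H" else 0 for w in omega}
XY = {w: X[w] * Y[w] for w in omega}
assert cond_exp(XY, G) == {w: Y[w] * X_given_G[w] for w in omega}

print(X_given_G["HH"], X_given_G["TT"])  # 3/2 1/2
```

Exact rational arithmetic is used so that the equalities hold exactly and no floating-point tolerance is needed.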

1.2 PROBLEMS AND SOLUTIONS

1.2.1 Probability Spaces

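1. De Morgan’s Law. Let $A_i$, $i \in I$ where $I$ is some, possibly uncountable, indexing set.

Show that

(a) $\left(\bigcup_{i \in I} A_i\right)^c = \bigcap_{i \in I} A_i^c$.

(b) $\left(\bigcap_{i \in I} A_i\right)^c = \bigcup_{i \in I} A_i^c$.

Solution:

(a) Let $a \in \left(\bigcup_{i \in I} A_i\right)^c$ which implies $a \notin \bigcup_{i \in I} A_i$, so that $a \in A_i^c$ for all $i \in I$. Therefore,
\[ \left(\bigcup_{i \in I} A_i\right)^c \subseteq \bigcap_{i \in I} A_i^c. \]

Conversely, if we let $a \in \bigcap_{i \in I} A_i^c$ then $a \notin A_i$ for all $i \in I$ or $a \in \left(\bigcup_{i \in I} A_i\right)^c$ and hence
\[ \bigcap_{i \in I} A_i^c \subseteq \left(\bigcup_{i \in I} A_i\right)^c. \]

Therefore, $\left(\bigcup_{i \in I} A_i\right)^c = \bigcap_{i \in I} A_i^c$.

(b) From (a), we can write
\[ \left(\bigcup_{i \in I} A_i^c\right)^c = \bigcap_{i \in I} \left(A_i^c\right)^c = \bigcap_{i \in I} A_i. \]

Taking complements on both sides gives
\[ \left(\bigcap_{i \in I} A_i\right)^c = \bigcup_{i \in I} A_i^c. \]

◽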
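Both identities of De Morgan’s law can be sanity-checked on a small finite example. The sketch below uses hypothetical sets inside Ω = {0, ..., 9}. It illustrates the statement and does not replace the proof, which covers arbitrary (possibly uncountable) index sets.

```python
# Hypothetical finite example: Omega = {0, ..., 9} and three events A_i.
omega = set(range(10))
family = {"a": {0, 1, 2}, "b": {2, 3, 4}, "c": {4, 5, 6}}

def complement(s):
    """Complement of s in omega."""
    return omega - s

union = set().union(*family.values())
intersection = set(omega).intersection(*family.values())

# (a) complement of the union equals the intersection of the complements
lhs_a = complement(union)
rhs_a = set(omega).intersection(*(complement(s) for s in family.values()))

# (b) complement of the intersection equals the union of the complements
lhs_b = complement(intersection)
rhs_b = set().union(*(complement(s) for s in family.values()))

print(lhs_a == rhs_a, sorted(lhs_a))   # True [7, 8, 9]
print(lhs_b == rhs_b, lhs_b == omega)  # True True
```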

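2. Let $\mathcal{F}$ be a $\sigma$-algebra of subsets of the sample space $\Omega$. Show that if $A_1, A_2, \ldots \in \mathcal{F}$ then $\bigcap_{i=1}^{\infty} A_i \in \mathcal{F}$.

Solution: Given that $\mathcal{F}$ is a $\sigma$-algebra then $A_1^c, A_2^c, \ldots \in \mathcal{F}$ and $\bigcup_{i=1}^{\infty} A_i^c \in \mathcal{F}$. Furthermore, the complement of $\bigcup_{i=1}^{\infty} A_i^c$ is $\left(\bigcup_{i=1}^{\infty} A_i^c\right)^c \in \mathcal{F}$.

Thus, from De Morgan’s law (see Problem 1.2.1.1, page 4) we have
\[ \left(\bigcup_{i=1}^{\infty} A_i^c\right)^c = \bigcap_{i=1}^{\infty} \left(A_i^c\right)^c = \bigcap_{i=1}^{\infty} A_i \in \mathcal{F}. \]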

◽

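3. Show that if $\mathcal{F}$ is a $\sigma$-algebra of subsets of $\Omega$ then $\emptyset \in \mathcal{F}$ and $\Omega \in \mathcal{F}$, that is $\{\emptyset, \Omega\} \subseteq \mathcal{F}$.

Solution: $\mathcal{F}$ is a $\sigma$-algebra of subsets of $\Omega$, hence if $A \in \mathcal{F}$ then $A^c \in \mathcal{F}$.

Since $\emptyset \in \mathcal{F}$ then $\emptyset^c = \Omega \in \mathcal{F}$. Thus, $\{\emptyset, \Omega\} \subseteq \mathcal{F}$.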

◽

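4. Show that if $A \subseteq \Omega$ then $\mathcal{F} = \{\emptyset, \Omega, A, A^c\}$ is a $\sigma$-algebra of subsets of $\Omega$.

Solution: $\mathcal{F} = \{\emptyset, \Omega, A, A^c\}$ is a $\sigma$-algebra of subsets of $\Omega$ since

(i) $\emptyset \in \mathcal{F}$.

(ii) For $\emptyset \in \mathcal{F}$ then $\emptyset^c = \Omega \in \mathcal{F}$. For $\Omega \in \mathcal{F}$ then $\Omega^c = \emptyset \in \mathcal{F}$. In addition, for $A \in \mathcal{F}$ then $A^c \in \mathcal{F}$. Finally, for $A^c \in \mathcal{F}$ then $(A^c)^c = A \in \mathcal{F}$.

(iii) $\emptyset \cup \Omega = \Omega \in \mathcal{F}$, $\emptyset \cup A = A \in \mathcal{F}$, $\emptyset \cup A^c = A^c \in \mathcal{F}$, $\Omega \cup A = \Omega \in \mathcal{F}$, $\Omega \cup A^c = \Omega \in \mathcal{F}$, $A \cup A^c = \Omega \in \mathcal{F}$, $\emptyset \cup \Omega \cup A = \Omega \in \mathcal{F}$, $\emptyset \cup \Omega \cup A^c = \Omega \in \mathcal{F}$ and $\Omega \cup A \cup A^c = \Omega \in \mathcal{F}$.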

◽

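5. Let $\{\mathcal{F}_i\}_{i \in I}$, $I \neq \emptyset$ be a family of $\sigma$-algebras of subsets of the sample space $\Omega$. Show that $\mathcal{F} = \bigcap_{i \in I} \mathcal{F}_i$ is also a $\sigma$-algebra of subsets of $\Omega$.

Solution: $\mathcal{F} = \bigcap_{i \in I} \mathcal{F}_i$ is a $\sigma$-algebra by taking note that

(a) Since $\emptyset \in \mathcal{F}_i$, $i \in I$ therefore $\emptyset \in \mathcal{F}$ as well.

(b) If $A \in \mathcal{F}_i$ for all $i \in I$ then $A^c \in \mathcal{F}_i$, $i \in I$. Therefore, $A \in \mathcal{F}$ and hence $A^c \in \mathcal{F}$.

(c) If $A_1, A_2, \ldots \in \mathcal{F}_i$ for all $i \in I$ then $\bigcup_{k=1}^{\infty} A_k \in \mathcal{F}_i$, $i \in I$ and hence $A_1, A_2, \ldots \in \mathcal{F}$ and $\bigcup_{k=1}^{\infty} A_k \in \mathcal{F}$.

From the results of (a)–(c) we have shown $\mathcal{F} = \bigcap_{i \in I} \mathcal{F}_i$ is a $\sigma$-algebra of $\Omega$.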

◽

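6. Let $\Omega = \{\alpha, \beta, \gamma\}$ and let

$\mathcal{F}_1 = \{\emptyset, \Omega, \{\alpha\}, \{\beta, \gamma\}\}$

and

$\mathcal{F}_2 = \{\emptyset, \Omega, \{\alpha, \beta\}, \{\gamma\}\}$.

Show that $\mathcal{F}_1$ and $\mathcal{F}_2$ are $\sigma$-algebras of subsets of $\Omega$.

Is $\mathcal{F} = \mathcal{F}_1 \cup \mathcal{F}_2$ also a $\sigma$-algebra of subsets of $\Omega$?

Solution: Following the steps given in Problem 1.2.1.4 (page 5) we can easily show $\mathcal{F}_1$ and $\mathcal{F}_2$ are $\sigma$-algebras of subsets of $\Omega$.

By setting $\mathcal{F} = \mathcal{F}_1 \cup \mathcal{F}_2 = \{\emptyset, \Omega, \{\alpha\}, \{\gamma\}, \{\alpha, \beta\}, \{\beta, \gamma\}\}$, and since $\{\alpha\} \in \mathcal{F}$ and $\{\gamma\} \in \mathcal{F}$, but $\{\alpha\} \cup \{\gamma\} = \{\alpha, \gamma\} \notin \mathcal{F}$, then $\mathcal{F} = \mathcal{F}_1 \cup \mathcal{F}_2$ is not a $\sigma$-algebra of subsets of $\Omega$.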

◽
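The counterexample in Problem 6, together with the contrast with Problem 5 (intersections of 𝜎-algebras), can be reproduced by brute force because Ω has only three points. This is a sketch only: the function names are hypothetical and the strings "alpha", "beta", "gamma" stand in for 𝛼, 𝛽, 𝛾.

```python
from itertools import combinations

omega = frozenset({"alpha", "beta", "gamma"})
F1 = {frozenset(), omega, frozenset({"alpha"}), frozenset({"beta", "gamma"})}
F2 = {frozenset(), omega, frozenset({"alpha", "beta"}), frozenset({"gamma"})}

def is_sigma_algebra(family):
    """Finite-space check: empty set, complements and pairwise unions."""
    has_empty = frozenset() in family
    complements = all(omega - a in family for a in family)
    unions = all(a | b in family for a, b in combinations(family, 2))
    return has_empty and complements and unions

def union_counterexamples(family):
    """Pairs of members whose union falls outside the family."""
    return [(a, b) for a, b in combinations(family, 2) if a | b not in family]

print(is_sigma_algebra(F1), is_sigma_algebra(F2))  # True True
print(is_sigma_algebra(F1 | F2))                   # False
print(is_sigma_algebra(F1 & F2))                   # True

# The only failing pair is {alpha} and {gamma}, as in the solution above.
missing = {a | b for a, b in union_counterexamples(F1 | F2)}
print(missing == {frozenset({"alpha", "gamma"})})  # True
```

Here `F1 | F2` and `F1 & F2` are Python set union and intersection of the two families, mirroring ℱ1 ∪ ℱ2 and ℱ1 ∩ ℱ2.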

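7. Let $\mathcal{F}$ be a $\sigma$-algebra of subsets of $\Omega$ and suppose $\mathbb{P} : \mathcal{F} \to [0, 1]$ is additive over disjoint events with $\mathbb{P}(\Omega) = 1$. Show that $\mathbb{P}(\emptyset) = 0$.

Solution: Given that $\emptyset$ and $\Omega$ are mutually exclusive we therefore have

$\emptyset \cap \Omega = \emptyset$ and $\emptyset \cup \Omega = \Omega$.

Thus, by additivity over disjoint events, we can express

$\mathbb{P}(\Omega) = \mathbb{P}(\emptyset \cup \Omega) = \mathbb{P}(\emptyset) + \mathbb{P}(\Omega)$.

Since $\mathbb{P}(\Omega) = 1$ we have $1 = \mathbb{P}(\emptyset) + 1$ and therefore $\mathbb{P}(\emptyset) = 0$.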

◽

8. Let (Ω, ℱ, ℙ) be a probability space and let ℚ ∶ ℱ → [0, 1] be defined by ℚ(A) = ℙ(A|B)

where B ∈ ℱ such that ℙ(B) > 0. Show that (Ω, ℱ, ℚ) is also a probability space.

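Solution: To show that $(\Omega, \mathcal{F}, \mathbb{Q})$ is a probability space we note that

(a) $\mathbb{Q}(\emptyset) = \mathbb{P}(\emptyset|B) = \dfrac{\mathbb{P}(\emptyset \cap B)}{\mathbb{P}(B)} = \dfrac{\mathbb{P}(\emptyset)}{\mathbb{P}(B)} = 0$.

(b) $\mathbb{Q}(\Omega) = \mathbb{P}(\Omega|B) = \dfrac{\mathbb{P}(\Omega \cap B)}{\mathbb{P}(B)} = \dfrac{\mathbb{P}(B)}{\mathbb{P}(B)} = 1$.

(c) Let $A_1, A_2, \ldots$ be disjoint members of $\mathcal{F}$, so that $A_1 \cap B, A_2 \cap B, \ldots$ are also disjoint members of $\mathcal{F}$. Therefore,
\[ \mathbb{Q}\left(\bigcup_{i=1}^{\infty} A_i\right) = \mathbb{P}\left(\left.\bigcup_{i=1}^{\infty} A_i \,\right|\, B\right) = \frac{\mathbb{P}\left(\bigcup_{i=1}^{\infty} (A_i \cap B)\right)}{\mathbb{P}(B)} = \sum_{i=1}^{\infty} \frac{\mathbb{P}(A_i \cap B)}{\mathbb{P}(B)} = \sum_{i=1}^{\infty} \mathbb{Q}(A_i). \]

Based on the results of (a)–(c), we have shown that $(\Omega, \mathcal{F}, \mathbb{Q})$ is also a probability space.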

◽
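The three properties verified in Problem 8 can be checked exhaustively on a small example. The sketch below assumes a fair six-sided die with B the event "even outcome". The die, the event B and the helper names are assumptions made for illustration. On a finite space countable additivity reduces to additivity over disjoint pairs, which is what the loop tests.

```python
from fractions import Fraction
from itertools import combinations

omega = frozenset(range(1, 7))   # one roll of a fair die (assumed example)
B = frozenset({2, 4, 6})         # conditioning event with P(B) > 0

def P(A):
    """Uniform probability measure on omega."""
    return Fraction(len(A), len(omega))

def Q(A):
    """Q(A) = P(A | B) = P(A and B) / P(B)."""
    return P(A & B) / P(B)

def all_events(space):
    """Every subset of a finite space (its power set)."""
    items = sorted(space)
    return [frozenset(c) for r in range(len(items) + 1) for c in combinations(items, r)]

events = all_events(omega)

assert Q(frozenset()) == 0            # property (a)
assert Q(omega) == 1                  # property (b)
for A1, A2 in combinations(events, 2):
    if not (A1 & A2):                 # disjoint pair
        assert Q(A1 | A2) == Q(A1) + Q(A2)   # property (c)

print(Q(frozenset({2})), Q(frozenset({1, 2, 3})))  # 1/3 1/3
```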

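9. Boole’s Inequality. Suppose $\{A_i\}_{i \in I}$ is a countable collection of events. Show that
\[ \mathbb{P}\left(\bigcup_{i \in I} A_i\right) \leq \sum_{i \in I} \mathbb{P}(A_i). \]

Solution: Without loss of generality we assume that $I = \{1, 2, \ldots\}$ and define $B_1 = A_1$, $B_i = A_i \setminus (A_1 \cup A_2 \cup \ldots \cup A_{i-1})$, $i \in \{2, 3, \ldots\}$ such that $\{B_1, B_2, \ldots\}$ are pairwise disjoint and
\[ \bigcup_{i \in I} A_i = \bigcup_{i \in I} B_i. \]

Because $B_i \cap B_j = \emptyset$, $i \neq j$, $i, j \in I$ we have
\[
\begin{aligned}
\mathbb{P}\left(\bigcup_{i \in I} A_i\right) &= \mathbb{P}\left(\bigcup_{i \in I} B_i\right) \\
&= \sum_{i \in I} \mathbb{P}(B_i) \\
&= \sum_{i \in I} \mathbb{P}\left(A_i \setminus (A_1 \cup A_2 \cup \ldots \cup A_{i-1})\right) \\
&= \sum_{i \in I} \left\{ \mathbb{P}(A_i) - \mathbb{P}\left(A_i \cap (A_1 \cup A_2 \cup \ldots \cup A_{i-1})\right) \right\} \\
&\leq \sum_{i \in I} \mathbb{P}(A_i).
\end{aligned}
\]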

◽


10. Bonferroni’s Inequality. Suppose {Ai}i∈I is a countable collection of events. Show that

$$
\mathbb{P}\left(\bigcap_{i \in I} A_i\right) \ge 1 - \sum_{i \in I} \mathbb{P}\left(A_i^c\right).
$$

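Solution: From De Morgan’s law (see Problem 1.2.1.1, page 4) we can write

$$
\mathbb{P}\left(\bigcap_{i \in I} A_i\right)
= \mathbb{P}\left(\left(\bigcup_{i \in I} A_i^c\right)^c\right)
= 1 - \mathbb{P}\left(\bigcup_{i \in I} A_i^c\right).
$$

By applying Boole’s inequality (see Problem 1.2.1.9, page 6) we will have

$$
\mathbb{P}\left(\bigcap_{i \in I} A_i\right) \ge 1 - \sum_{i \in I} \mathbb{P}\left(A_i^c\right)
$$

since $\mathbb{P}\left(\bigcup_{i \in I} A_i^c\right) \le \sum_{i \in I} \mathbb{P}\left(A_i^c\right)$.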

◽
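Both Boole’s and Bonferroni’s inequalities can be checked exactly on a small finite example. The following is a minimal sketch, assuming a hypothetical sample space of 12 equally likely outcomes; the names `OMEGA`, `EVENTS`, `boole_gap` and `bonferroni_gap` are illustrative and not from the text.

```python
from fractions import Fraction

# Hypothetical sample space: 12 equally likely outcomes.
OMEGA = frozenset(range(1, 13))


def prob(event):
    """Probability of an event under the uniform measure on OMEGA."""
    return Fraction(len(set(event) & OMEGA), len(OMEGA))


def boole_gap(events):
    """sum_i P(A_i) - P(union A_i); Boole's inequality says this is >= 0."""
    union = set().union(*events)
    return sum(prob(a) for a in events) - prob(union)


def bonferroni_gap(events):
    """P(intersection A_i) - (1 - sum_i P(A_i^c)); Bonferroni says this is >= 0."""
    intersection = set(OMEGA).intersection(*events)
    complements = [OMEGA - set(a) for a in events]
    return prob(intersection) - (1 - sum(prob(c) for c in complements))


# Illustrative events; their complements happen to be pairwise disjoint,
# which is the case where Bonferroni's inequality holds with equality.
EVENTS = [set(range(1, 10)), set(range(3, 13)), set(OMEGA) - {5}]
```

Using `Fraction` keeps the arithmetic exact, so the equality case of Bonferroni’s inequality shows up as a gap of exactly zero rather than a rounding artefact.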

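11. Bayes’ Formula. Let A1, A2, . . . , An be a partition of Ω, where

$$
\bigcup_{i=1}^{n} A_i = \Omega, \qquad A_i \cap A_j = \emptyset, \quad i \ne j
$$

and each Ai, i, j = 1, 2, . . . , n has positive probability. Show that

$$
\mathbb{P}(A_i | B) = \frac{\mathbb{P}(B | A_i)\,\mathbb{P}(A_i)}{\sum_{j=1}^{n} \mathbb{P}(B | A_j)\,\mathbb{P}(A_j)}.
$$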

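Solution: From the definition of conditional probability, for i = 1, 2, . . . , n

$$
\mathbb{P}(A_i | B)
= \frac{\mathbb{P}(A_i \cap B)}{\mathbb{P}(B)}
= \frac{\mathbb{P}(B | A_i)\,\mathbb{P}(A_i)}{\mathbb{P}\left(\bigcup_{j=1}^{n} (B \cap A_j)\right)}
= \frac{\mathbb{P}(B | A_i)\,\mathbb{P}(A_i)}{\sum_{j=1}^{n} \mathbb{P}(B \cap A_j)}
= \frac{\mathbb{P}(B | A_i)\,\mathbb{P}(A_i)}{\sum_{j=1}^{n} \mathbb{P}(B | A_j)\,\mathbb{P}(A_j)}.
$$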

◽
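As a small worked instance of Bayes’ formula, the sketch below computes the posterior probabilities from priors ℙ(Ai) and likelihoods ℙ(B|Ai). The function name `bayes_posterior` and the numbers in `PRIORS` and `LIKELIHOODS` are made up for illustration.

```python
from fractions import Fraction


def bayes_posterior(priors, likelihoods):
    """Return [P(A_i | B)] given priors P(A_i) and likelihoods P(B | A_i)."""
    if sum(priors) != 1:
        raise ValueError("priors must sum to 1 because the A_i partition Omega")
    joint = [lik * pri for lik, pri in zip(likelihoods, priors)]  # P(B and A_i)
    total = sum(joint)  # P(B), by the law of total probability
    if total == 0:
        raise ValueError("P(B) = 0, so conditioning on B is undefined")
    return [j / total for j in joint]


# Illustrative numbers only.
PRIORS = [Fraction(1, 2), Fraction(3, 10), Fraction(1, 5)]
LIKELIHOODS = [Fraction(1, 10), Fraction(1, 5), Fraction(1, 2)]
POSTERIOR = bayes_posterior(PRIORS, LIKELIHOODS)
```

Here ℙ(B) = 21/100, and the least likely prior event becomes the most likely one after observing B.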

12. Principle of Inclusion and Exclusion for Probability. Let A1 , A2 , . . . , An , n ≥ 2 be a collection of events. Show that

ℙ(A1 ∪ A2 ) = ℙ(A1 ) + ℙ(A2 ) − ℙ(A1 ∩ A2 ).

From the above result show that

ℙ(A1 ∪ A2 ∪ A3 ) = ℙ(A1 ) + ℙ(A2 ) + ℙ(A3 ) − ℙ(A1 ∩ A2 ) − ℙ(A1 ∩ A3 )

− ℙ(A2 ∩ A3 ) + ℙ(A1 ∩ A2 ∩ A3 ).

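Hence, using mathematical induction show that

$$
\begin{aligned}
\mathbb{P}\left(\bigcup_{i=1}^{n} A_i\right)
={}& \sum_{i=1}^{n} \mathbb{P}(A_i)
- \sum_{i=1}^{n-1}\sum_{j=i+1}^{n} \mathbb{P}(A_i \cap A_j)
+ \sum_{i=1}^{n-2}\sum_{j=i+1}^{n-1}\sum_{k=j+1}^{n} \mathbb{P}(A_i \cap A_j \cap A_k) \\
& - \ldots + (-1)^{n+1}\,\mathbb{P}(A_1 \cap A_2 \cap \ldots \cap A_n).
\end{aligned}
$$

Finally, deduce that

$$
\begin{aligned}
\mathbb{P}\left(\bigcap_{i=1}^{n} A_i\right)
={}& \sum_{i=1}^{n} \mathbb{P}(A_i)
- \sum_{i=1}^{n-1}\sum_{j=i+1}^{n} \mathbb{P}(A_i \cup A_j)
+ \sum_{i=1}^{n-2}\sum_{j=i+1}^{n-1}\sum_{k=j+1}^{n} \mathbb{P}(A_i \cup A_j \cup A_k) \\
& - \ldots + (-1)^{n+1}\,\mathbb{P}(A_1 \cup A_2 \cup \ldots \cup A_n).
\end{aligned}
$$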

Solution: For n = 2, A1 ∪ A2 can be written as a union of two disjoint sets

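$$A_1 \cup A_2 = A_1 \cup (A_2 \setminus A_1) = A_1 \cup (A_2 \cap A_1^c).$$

Therefore,

$$\mathbb{P}(A_1 \cup A_2) = \mathbb{P}(A_1) + \mathbb{P}(A_2 \cap A_1^c)$$

and since $\mathbb{P}(A_2) = \mathbb{P}(A_2 \cap A_1) + \mathbb{P}(A_2 \cap A_1^c)$ we will have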

ℙ(A1 ∪ A2 ) = ℙ(A1 ) + ℙ(A2 ) − ℙ(A1 ∩ A2 ).

For n = 3, and using the above results, we can write

ℙ(A1 ∪ A2 ∪ A3 ) = ℙ(A1 ∪ A2 ) + ℙ(A3 ) − ℙ[(A1 ∪ A2 ) ∩ A3 ]

= ℙ(A1 ) + ℙ(A2 ) + ℙ(A3 ) − ℙ(A1 ∩ A2 ) − ℙ[(A1 ∪ A2 ) ∩ A3 ].

Since (A1 ∪ A2 ) ∩ A3 = (A1 ∩ A3 ) ∪ (A2 ∩ A3 ) therefore

ℙ[(A1 ∪ A2 ) ∩ A3 ] = ℙ[(A1 ∩ A3 ) ∪ (A2 ∩ A3 )]

= ℙ(A1 ∩ A3 ) + ℙ(A2 ∩ A3 ) − ℙ[(A1 ∩ A3 ) ∩ (A2 ∩ A3 )]

= ℙ(A1 ∩ A3 ) + ℙ(A2 ∩ A3 ) − ℙ(A1 ∩ A2 ∩ A3 ).

Thus,

ℙ(A1 ∪ A2 ∪ A3 ) = ℙ(A1 ) + ℙ(A2 ) + ℙ(A3 ) − ℙ(A1 ∩ A2 ) − ℙ(A1 ∩ A3 )

−ℙ(A2 ∩ A3 ) + ℙ(A1 ∩ A2 ∩ A3 ).

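Suppose the result is true for n = m, where m ≥ 2. For n = m + 1, we have

$$
\begin{aligned}
\mathbb{P}\left(\left(\bigcup_{i=1}^{m} A_i\right) \cup A_{m+1}\right)
&= \mathbb{P}\left(\bigcup_{i=1}^{m} A_i\right) + \mathbb{P}\left(A_{m+1}\right) - \mathbb{P}\left(\left(\bigcup_{i=1}^{m} A_i\right) \cap A_{m+1}\right) \\
&= \mathbb{P}\left(\bigcup_{i=1}^{m} A_i\right) + \mathbb{P}\left(A_{m+1}\right) - \mathbb{P}\left(\bigcup_{i=1}^{m} \left(A_i \cap A_{m+1}\right)\right).
\end{aligned}
$$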

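By expanding the terms we have

$$
\begin{aligned}
\mathbb{P}\left(\bigcup_{i=1}^{m+1} A_i\right)
={}& \sum_{i=1}^{m} \mathbb{P}(A_i) - \sum_{i=1}^{m-1}\sum_{j=i+1}^{m} \mathbb{P}(A_i \cap A_j) + \sum_{i=1}^{m-2}\sum_{j=i+1}^{m-1}\sum_{k=j+1}^{m} \mathbb{P}(A_i \cap A_j \cap A_k) \\
& - \ldots + (-1)^{m+1}\,\mathbb{P}(A_1 \cap A_2 \cap \ldots \cap A_m) \\
& + \mathbb{P}\left(A_{m+1}\right) - \mathbb{P}\left((A_1 \cap A_{m+1}) \cup (A_2 \cap A_{m+1}) \cup \ldots \cup (A_m \cap A_{m+1})\right) \\
={}& \sum_{i=1}^{m+1} \mathbb{P}(A_i) - \sum_{i=1}^{m-1}\sum_{j=i+1}^{m} \mathbb{P}(A_i \cap A_j) + \sum_{i=1}^{m-2}\sum_{j=i+1}^{m-1}\sum_{k=j+1}^{m} \mathbb{P}(A_i \cap A_j \cap A_k) \\
& - \ldots + (-1)^{m+1}\,\mathbb{P}(A_1 \cap A_2 \cap \ldots \cap A_m) \\
& - \sum_{i=1}^{m} \mathbb{P}(A_i \cap A_{m+1}) + \sum_{i=1}^{m-1}\sum_{j=i+1}^{m} \mathbb{P}(A_i \cap A_j \cap A_{m+1}) \\
& - \ldots - (-1)^{m+1}\,\mathbb{P}(A_1 \cap A_2 \cap \ldots \cap A_{m+1}) \\
={}& \sum_{i=1}^{m+1} \mathbb{P}(A_i) - \sum_{i=1}^{m}\sum_{j=i+1}^{m+1} \mathbb{P}(A_i \cap A_j) + \sum_{i=1}^{m-1}\sum_{j=i+1}^{m}\sum_{k=j+1}^{m+1} \mathbb{P}(A_i \cap A_j \cap A_k) \\
& - \ldots + (-1)^{m+2}\,\mathbb{P}(A_1 \cap A_2 \cap \ldots \cap A_{m+1}).
\end{aligned}
$$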

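Therefore, the result is also true for n = m + 1. Thus, from mathematical induction we have shown for n ≥ 2,

$$
\begin{aligned}
\mathbb{P}\left(\bigcup_{i=1}^{n} A_i\right)
={}& \sum_{i=1}^{n} \mathbb{P}(A_i)
- \sum_{i=1}^{n-1}\sum_{j=i+1}^{n} \mathbb{P}(A_i \cap A_j)
+ \sum_{i=1}^{n-2}\sum_{j=i+1}^{n-1}\sum_{k=j+1}^{n} \mathbb{P}(A_i \cap A_j \cap A_k) \\
& - \ldots + (-1)^{n+1}\,\mathbb{P}(A_1 \cap A_2 \cap \ldots \cap A_n).
\end{aligned}
$$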

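From Problem 1.2.1.1 (page 4) we can write

$$
\mathbb{P}\left(\bigcap_{i=1}^{n} A_i\right)
= \mathbb{P}\left(\left(\bigcup_{i=1}^{n} A_i^c\right)^c\right)
= 1 - \mathbb{P}\left(\bigcup_{i=1}^{n} A_i^c\right).
$$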

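Thus, we can expand

$$
\begin{aligned}
\mathbb{P}\left(\bigcap_{i=1}^{n} A_i\right)
={}& 1 - \sum_{i=1}^{n} \mathbb{P}(A_i^c) + \sum_{i=1}^{n-1}\sum_{j=i+1}^{n} \mathbb{P}(A_i^c \cap A_j^c) - \sum_{i=1}^{n-2}\sum_{j=i+1}^{n-1}\sum_{k=j+1}^{n} \mathbb{P}(A_i^c \cap A_j^c \cap A_k^c) \\
& + \ldots - (-1)^{n+1}\,\mathbb{P}(A_1^c \cap A_2^c \cap \ldots \cap A_n^c) \\
={}& 1 - \sum_{i=1}^{n} \left(1 - \mathbb{P}(A_i)\right) + \sum_{i=1}^{n-1}\sum_{j=i+1}^{n} \left(1 - \mathbb{P}(A_i \cup A_j)\right) - \sum_{i=1}^{n-2}\sum_{j=i+1}^{n-1}\sum_{k=j+1}^{n} \left(1 - \mathbb{P}(A_i \cup A_j \cup A_k)\right) \\
& + \ldots - (-1)^{n+1}\left(1 - \mathbb{P}(A_1 \cup A_2 \cup \ldots \cup A_n)\right) \\
={}& \sum_{i=1}^{n} \mathbb{P}(A_i) - \sum_{i=1}^{n-1}\sum_{j=i+1}^{n} \mathbb{P}(A_i \cup A_j) + \sum_{i=1}^{n-2}\sum_{j=i+1}^{n-1}\sum_{k=j+1}^{n} \mathbb{P}(A_i \cup A_j \cup A_k) \\
& - \ldots + (-1)^{n+1}\,\mathbb{P}(A_1 \cup A_2 \cup \ldots \cup A_n).
\end{aligned}
$$

In the last step the constant terms cancel, because the r-fold sum contains $\binom{n}{r}$ terms and $\sum_{r=0}^{n} (-1)^r \binom{n}{r} = (1 - 1)^n = 0$.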

◽
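The inclusion and exclusion formula and its dual for intersections can be verified by brute force on a finite sample space. This is a minimal sketch under the assumption of equally likely outcomes; the function names are illustrative.

```python
from fractions import Fraction
from itertools import combinations


def prob(event, n_outcomes):
    """Probability of an event when all n_outcomes outcomes are equally likely."""
    return Fraction(len(event), n_outcomes)


def union_by_inclusion_exclusion(events, n_outcomes):
    """P(A_1 u ... u A_n) as an alternating sum over r-fold intersections."""
    total = Fraction(0)
    for r in range(1, len(events) + 1):
        sign = (-1) ** (r + 1)
        for subset in combinations(events, r):
            total += sign * prob(set.intersection(*subset), n_outcomes)
    return total


def intersection_by_dual_formula(events, n_outcomes):
    """P(A_1 n ... n A_n) as an alternating sum over r-fold unions."""
    total = Fraction(0)
    for r in range(1, len(events) + 1):
        sign = (-1) ** (r + 1)
        for subset in combinations(events, r):
            total += sign * prob(set.union(*subset), n_outcomes)
    return total


# Illustrative events on the hypothetical sample space {0, 1, ..., 11}.
N_OUTCOMES = 12
EVENTS = [{0, 2, 4, 6, 8, 10}, {0, 3, 6, 9}, {0, 1, 2, 3}, {6, 7, 8, 9, 10, 11}]
```

Both functions enumerate all 2ⁿ − 1 non-empty sub-collections, so they are only practical for small n; the point is to mirror the formula term by term rather than to be efficient.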

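13. Borel–Cantelli Lemma. Let (Ω, ℱ, ℙ) be a probability space and let A1, A2, . . . be sets in ℱ. Show that

$$
\bigcap_{m=1}^{\infty} \bigcup_{k=m}^{\infty} A_k \subseteq \bigcup_{k=m}^{\infty} A_k
$$

and hence prove that

$$
\mathbb{P}\left(\bigcap_{m=1}^{\infty} \bigcup_{k=m}^{\infty} A_k\right) =
\begin{cases}
1 & \text{if } A_1, A_2, \ldots \text{ are independent and } \displaystyle\sum_{k=1}^{\infty} \mathbb{P}(A_k) = \infty \\[2ex]
0 & \text{if } \displaystyle\sum_{k=1}^{\infty} \mathbb{P}(A_k) < \infty.
\end{cases}
$$

Solution: Let $B_m = \bigcup_{k=m}^{\infty} A_k$. Since $\bigcap_{m=1}^{\infty} B_m \subseteq B_m$ for every m, we have

$$
\bigcap_{m=1}^{\infty} \bigcup_{k=m}^{\infty} A_k \subseteq \bigcup_{k=m}^{\infty} A_k.
$$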

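From Problem 1.2.1.9 (page 6) we can deduce that

$$
\mathbb{P}\left(\bigcap_{m=1}^{\infty} \bigcup_{k=m}^{\infty} A_k\right)
\le \mathbb{P}\left(\bigcup_{k=m}^{\infty} A_k\right)
\le \sum_{k=m}^{\infty} \mathbb{P}(A_k).
$$

For the case $\sum_{k=1}^{\infty} \mathbb{P}(A_k) < \infty$, and given it is a convergent series, $\lim_{m \to \infty} \sum_{k=m}^{\infty} \mathbb{P}(A_k) = 0$ and hence

$$
\mathbb{P}\left(\bigcap_{m=1}^{\infty} \bigcup_{k=m}^{\infty} A_k\right) = 0
\quad \text{if} \quad \sum_{k=1}^{\infty} \mathbb{P}(A_k) < \infty.
$$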

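For the case where A1, A2, . . . are independent and $\sum_{k=1}^{\infty} \mathbb{P}(A_k) = \infty$, since $\mathbb{P}\left(\bigcup_{k=m}^{\infty} A_k\right) + \mathbb{P}\left(\left(\bigcup_{k=m}^{\infty} A_k\right)^c\right) = 1$, from Problem 1.2.1.1 (page 4)

$$
\mathbb{P}\left(\bigcup_{k=m}^{\infty} A_k\right)
= 1 - \mathbb{P}\left(\left(\bigcup_{k=m}^{\infty} A_k\right)^c\right)
= 1 - \mathbb{P}\left(\bigcap_{k=m}^{\infty} A_k^c\right).
$$

From independence, the inequality $1 - x \le e^{-x}$, and because $\sum_{k=1}^{\infty} \mathbb{P}(A_k) = \infty$ we can express

$$
\mathbb{P}\left(\bigcap_{k=m}^{\infty} A_k^c\right)
= \prod_{k=m}^{\infty} \mathbb{P}\left(A_k^c\right)
= \prod_{k=m}^{\infty} \left(1 - \mathbb{P}(A_k)\right)
\le \prod_{k=m}^{\infty} e^{-\mathbb{P}(A_k)}
= e^{-\sum_{k=m}^{\infty} \mathbb{P}(A_k)} = 0
$$

for all m and hence

$$
\mathbb{P}\left(\bigcup_{k=m}^{\infty} A_k\right) = 1 - \mathbb{P}\left(\bigcap_{k=m}^{\infty} A_k^c\right) = 1
$$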

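for all m. Taking the limit m → ∞,

$$
\mathbb{P}\left(\bigcap_{m=1}^{\infty} \bigcup_{k=m}^{\infty} A_k\right)
= \lim_{m \to \infty} \mathbb{P}\left(\bigcup_{k=m}^{\infty} A_k\right) = 1
$$

for the case where A1, A2, . . . are independent and $\sum_{k=1}^{\infty} \mathbb{P}(A_k) = \infty$.

◽

1.2.2 Discrete and Continuous Random Variables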

1. Bernoulli Distribution. Let X be a Bernoulli random variable, X ∼ Bernoulli(p), p ∈ [0, 1]

with probability mass function

ℙ(X = 1) = p,

ℙ(X = 0) = 1 − p.

Show that 𝔼(X) = p and Var(X) = p(1 − p).

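Solution: If X ∼ Bernoulli(p) then we can write

$$\mathbb{P}(X = x) = p^x (1 - p)^{1 - x}, \quad x \in \{0, 1\}$$

and by definition

$$
\begin{aligned}
\mathbb{E}(X) &= \sum_{x=0}^{1} x\,\mathbb{P}(X = x) = 0 \cdot (1 - p) + 1 \cdot p = p \\
\mathbb{E}(X^2) &= \sum_{x=0}^{1} x^2\,\mathbb{P}(X = x) = 0 \cdot (1 - p) + 1 \cdot p = p
\end{aligned}
$$

and hence

$$\mathbb{E}(X) = p, \qquad \mathrm{Var}(X) = \mathbb{E}(X^2) - \mathbb{E}(X)^2 = p(1 - p).$$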

◽


2. Binomial Distribution. Let $\{X_i\}_{i=1}^{n}$ be a sequence of independent Bernoulli random variables each with probability mass function

$$\mathbb{P}(X_i = 1) = p, \qquad \mathbb{P}(X_i = 0) = 1 - p, \qquad p \in [0, 1]$$

and let

$$X = \sum_{i=1}^{n} X_i.$$

Show that X follows a binomial distribution, X ∼ Binomial(n, p) with probability mass function

$$\mathbb{P}(X = k) = \binom{n}{k} p^k (1 - p)^{n - k}, \quad k = 0, 1, 2, \ldots, n$$

such that 𝔼(X) = np and Var(X) = np(1 − p).

Using the central limit theorem show that X is approximately normally distributed, X ∻ 𝒩(np, np(1 − p)) as n → ∞.

Solution: The random variable X counts the number of Bernoulli variables X1, . . . , Xn that are equal to 1, i.e., the number of successes in the n independent trials. Clearly X takes values in the set {0, 1, 2, . . . , n}. To calculate the probability that X = k, where k is the number of successes, let N = {1, 2, . . . , n} be the set of trial indices and let E be the event such that $X_{i_1} = X_{i_2} = \ldots = X_{i_k} = 1$ and $X_j = 0$ for all j ∈ N \ S where $S = \{i_1, i_2, \ldots, i_k\} \subseteq N$. Then, because the Bernoulli variables are independent and identically distributed,

$$
\mathbb{P}(E) = \prod_{j \in S} \mathbb{P}(X_j = 1) \prod_{j \in N \setminus S} \mathbb{P}(X_j = 0) = p^k (1 - p)^{n - k}.
$$

However, as there are $\binom{n}{k}$ combinations to select sets of indices $i_1, \ldots, i_k$ from N, which are mutually exclusive events,

$$\mathbb{P}(X = k) = \binom{n}{k} p^k (1 - p)^{n - k}, \quad k = 0, 1, 2, \ldots, n.$$

From the definition of the moment generating function of discrete random variables (see Appendix B),

$$M_X(t) = \mathbb{E}\left(e^{tX}\right) = \sum_{x} e^{tx}\,\mathbb{P}(X = x)$$

where t ∈ ℝ and substituting $\mathbb{P}(X = x) = \binom{n}{x} p^x (1 - p)^{n - x}$ we have

$$
M_X(t) = \sum_{x=0}^{n} e^{tx} \binom{n}{x} p^x (1 - p)^{n - x}
= \sum_{x=0}^{n} \binom{n}{x} (pe^t)^x (1 - p)^{n - x}
= (1 - p + pe^t)^n.
$$


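By differentiating $M_X(t)$ with respect to t twice we have

$$
\begin{aligned}
M_X'(t) &= npe^t (1 - p + pe^t)^{n-1} \\
M_X''(t) &= npe^t (1 - p + pe^t)^{n-1} + n(n - 1)p^2 e^{2t} (1 - p + pe^t)^{n-2}
\end{aligned}
$$

and hence

$$
\begin{aligned}
\mathbb{E}(X) &= M_X'(0) = np \\
\mathrm{Var}(X) &= \mathbb{E}(X^2) - \mathbb{E}(X)^2 = M_X''(0) - M_X'(0)^2 = np(1 - p).
\end{aligned}
$$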

Given the sequence Xi ∼ Bernoulli(p), i = 1, 2, . . . , n are independent and identically distributed, each having expectation 𝜇 = p and variance 𝜎² = p(1 − p), then as n → ∞, from the central limit theorem

$$\frac{\sum_{i=1}^{n} X_i - n\mu}{\sigma\sqrt{n}} \xrightarrow{D} \mathcal{N}(0, 1)$$

or

$$\frac{X - np}{\sqrt{np(1 - p)}} \xrightarrow{D} \mathcal{N}(0, 1).$$

Thus, as n → ∞, X ∻ 𝒩(np, np(1 − p)).

◽
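The mean and variance derived above can be confirmed by summing directly over the probability mass function. A minimal sketch, using only the standard library; the function names and the test values n = 20, p = 0.3 are illustrative.

```python
from math import comb, isclose


def binomial_pmf(k, n, p):
    """P(X = k) for X ~ Binomial(n, p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def binomial_moments(n, p):
    """Return (total probability, mean, variance) by direct summation over k."""
    pmf = [binomial_pmf(k, n, p) for k in range(n + 1)]
    total = sum(pmf)
    mean = sum(k * q for k, q in enumerate(pmf))
    second = sum(k * k * q for k, q in enumerate(pmf))
    return total, mean, second - mean**2


TOTAL, MEAN, VAR = binomial_moments(20, 0.3)
```

For these values the sums agree with np = 6 and np(1 − p) = 4.2 up to floating-point rounding.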

3. Poisson Distribution. A discrete Poisson distribution, Poisson(𝜆) with parameter 𝜆 > 0 has the following probability mass function:

$$\mathbb{P}(X = k) = \frac{\lambda^k}{k!} e^{-\lambda}, \quad k = 0, 1, 2, \ldots$$

Show that 𝔼(X) = 𝜆 and Var(X) = 𝜆.

For a random variable following a binomial distribution, Binomial(n, p), 0 ≤ p ≤ 1 show that as n → ∞ and with p = 𝜆∕n, the binomial distribution tends to the Poisson distribution with parameter 𝜆.

Solution: From the definition of the moment generating function

$$M_X(t) = \mathbb{E}\left(e^{tX}\right) = \sum_{x} e^{tx}\,\mathbb{P}(X = x)$$

where t ∈ ℝ and substituting $\mathbb{P}(X = x) = \dfrac{\lambda^x}{x!} e^{-\lambda}$ we have

$$
M_X(t) = \sum_{x=0}^{\infty} e^{tx} \frac{\lambda^x}{x!} e^{-\lambda}
= e^{-\lambda} \sum_{x=0}^{\infty} \frac{(\lambda e^t)^x}{x!}
= e^{\lambda(e^t - 1)}.
$$

By differentiating $M_X(t)$ with respect to t twice

$$M_X'(t) = \lambda e^t e^{\lambda(e^t - 1)}, \qquad M_X''(t) = (\lambda e^t + 1)\lambda e^t e^{\lambda(e^t - 1)}$$

we have

$$
\begin{aligned}
\mathbb{E}(X) &= M_X'(0) = \lambda \\
\mathrm{Var}(X) &= \mathbb{E}(X^2) - \mathbb{E}(X)^2 = M_X''(0) - M_X'(0)^2 = \lambda.
\end{aligned}
$$

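If X ∼ Binomial(n, p) then we can write

$$
\begin{aligned}
\mathbb{P}(X = k) &= \frac{n!}{k!(n - k)!} p^k (1 - p)^{n - k} \\
&= \frac{n(n - 1) \ldots (n - k + 1)(n - k)!}{k!(n - k)!} \times p^k \left(1 - (n - k)p + \frac{(n - k)(n - k - 1)}{2!} p^2 - \ldots \right) \\
&= \frac{n(n - 1) \ldots (n - k + 1)}{k!} p^k \left(1 - (n - k)p + \frac{(n - k)(n - k - 1)}{2!} p^2 - \ldots \right).
\end{aligned}
$$

For the case when n → ∞ so that n ≫ k we have

$$
\mathbb{P}(X = k) \approx \frac{n^k}{k!} p^k \left(1 - np + \frac{(np)^2}{2!} - \ldots \right) = \frac{n^k}{k!} p^k e^{-np}.
$$

By setting p = 𝜆∕n, we have $\mathbb{P}(X = k) \approx \dfrac{\lambda^k}{k!} e^{-\lambda}$.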

◽
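The convergence of Binomial(n, 𝜆∕n) to Poisson(𝜆) can be observed numerically by comparing the two probability mass functions as n grows. A minimal sketch; the helper `max_pmf_gap` and the cut-off `k_max` are illustrative choices, not part of the text.

```python
from math import comb, exp, factorial


def binomial_pmf(k, n, p):
    """P(X = k) for X ~ Binomial(n, p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def poisson_pmf(k, lam):
    """P(X = k) for X ~ Poisson(lam)."""
    return lam**k / factorial(k) * exp(-lam)


def max_pmf_gap(n, lam, k_max=20):
    """Largest |Binomial(n, lam/n) pmf - Poisson(lam) pmf| over k = 0..min(n, k_max)."""
    p = lam / n
    return max(
        abs(binomial_pmf(k, n, p) - poisson_pmf(k, lam))
        for k in range(min(n, k_max) + 1)
    )
```

With 𝜆 = 3, for example, the gap shrinks as n goes from 10 to 100 to 10 000, in line with the limit argument above.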

4. Exponential Distribution. Consider a continuous random variable X following an exponential distribution, X ∼ Exp(𝜆) with probability density function

$$f_X(x) = \lambda e^{-\lambda x}, \quad x \ge 0$$

where the parameter 𝜆 > 0. Show that $\mathbb{E}(X) = \dfrac{1}{\lambda}$ and $\mathrm{Var}(X) = \dfrac{1}{\lambda^2}$.

Prove that X ∼ Exp(𝜆) has a memoryless property given as

$$\mathbb{P}(X > s + x | X > s) = \mathbb{P}(X > x) = e^{-\lambda x}, \quad x, s \ge 0.$$

For a sequence of Bernoulli trials drawn from a Bernoulli distribution, Bernoulli(p), 0 ≤ p ≤ 1 performed at time Δt, 2Δt, . . . where Δt > 0 and if Y is the waiting time for the first success, show that as Δt → 0 and p → 0 such that p∕Δt approaches a constant 𝜆 > 0, then Y ∼ Exp(𝜆).

Solution: For t < 𝜆, the moment generating function for a random variable X ∼ Exp(𝜆) is

$$
M_X(t) = \mathbb{E}\left(e^{tX}\right) = \int_0^{\infty} e^{tu} \lambda e^{-\lambda u}\,du = \lambda \int_0^{\infty} e^{-(\lambda - t)u}\,du = \frac{\lambda}{\lambda - t}.
$$

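Differentiation of $M_X(t)$ with respect to t twice yields

$$M_X'(t) = \frac{\lambda}{(\lambda - t)^2}, \qquad M_X''(t) = \frac{2\lambda}{(\lambda - t)^3}.$$

Therefore,

$$\mathbb{E}(X) = M_X'(0) = \frac{1}{\lambda}, \qquad \mathbb{E}(X^2) = M_X''(0) = \frac{2}{\lambda^2}$$

and the variance of X is

$$\mathrm{Var}(X) = \mathbb{E}(X^2) - [\mathbb{E}(X)]^2 = \frac{1}{\lambda^2}.$$

By definition

$$\mathbb{P}(X > x) = \int_x^{\infty} \lambda e^{-\lambda u}\,du = e^{-\lambda x}$$

and

$$
\mathbb{P}(X > s + x | X > s)
= \frac{\mathbb{P}(X > s + x, X > s)}{\mathbb{P}(X > s)}
= \frac{\mathbb{P}(X > s + x)}{\mathbb{P}(X > s)}
= \frac{\int_{s+x}^{\infty} \lambda e^{-\lambda u}\,du}{\int_{s}^{\infty} \lambda e^{-\lambda v}\,dv}
= e^{-\lambda x}.
$$

Thus,

$$\mathbb{P}(X > s + x | X > s) = \mathbb{P}(X > x).$$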

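If Y is the waiting time for the first success then for k = 1, 2, . . .

$$\mathbb{P}(Y > k\Delta t) = (1 - p)^k.$$

By setting y = kΔt, and in the limit Δt → 0 and assuming that p → 0 so that p∕Δt → 𝜆, for some positive constant 𝜆,

$$
\begin{aligned}
\mathbb{P}(Y > y) &= \mathbb{P}\left(Y > \left(\frac{y}{\Delta t}\right)\Delta t\right) \\
&\approx (1 - \lambda \Delta t)^{y/\Delta t} \\
&= 1 - \lambda y + \frac{\left(\frac{y}{\Delta t}\right)\left(\frac{y}{\Delta t} - 1\right)}{2!} (\lambda \Delta t)^2 - \ldots \\
&\approx 1 - \lambda y + \frac{(\lambda y)^2}{2!} - \ldots \\
&= e^{-\lambda y}.
\end{aligned}
$$

In the limit Δt → 0 and p → 0,

$$\mathbb{P}(Y \le y) = 1 - \mathbb{P}(Y > y) \approx 1 - e^{-\lambda y}$$

and the probability density function is therefore

$$f_Y(y) = \frac{d}{dy} \mathbb{P}(Y \le y) \approx \lambda e^{-\lambda y}, \quad y \ge 0.$$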

◽
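The discrete-to-continuous limit and the memoryless property can both be illustrated numerically. The sketch below assumes p = 𝜆Δt exactly (the text only requires p∕Δt → 𝜆) and that y is a multiple of Δt; the function names are illustrative.

```python
from math import exp, isclose


def geometric_survival(y, dt, lam):
    """P(Y > y) when trials occur every dt and each succeeds with p = lam * dt.

    Assumes y is (numerically) a multiple of dt, so that k = y / dt trials
    have taken place by time y.
    """
    p = lam * dt
    k = round(y / dt)
    return (1 - p) ** k


def exponential_survival(x, lam):
    """P(X > x) = exp(-lam * x) for X ~ Exp(lam)."""
    return exp(-lam * x)


def conditional_survival(s, x, lam):
    """P(X > s + x | X > s), computed directly from the survival function."""
    return exponential_survival(s + x, lam) / exponential_survival(s, lam)
```

For instance, with 𝜆 = 1.5 and Δt = 10⁻⁵ the geometric survival probability at y = 2 is already very close to e⁻³, and `conditional_survival(s, x, lam)` does not depend on `s`.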


5. Gamma Distribution. Let U and V be continuous independent random variables and let W = U + V. Show that the probability density function of W can be written as

$$
f_W(w) = \int_{-\infty}^{\infty} f_V(w - u) f_U(u)\,du = \int_{-\infty}^{\infty} f_U(w - v) f_V(v)\,dv
$$

where $f_U(u)$ and $f_V(v)$ are the density functions of U and V, respectively.

Let X1, X2, . . . , Xn ∼ Exp(𝜆) be a sequence of independent and identically distributed random variables, each following an exponential distribution with common parameter 𝜆 > 0. Prove that if

$$Y = \sum_{i=1}^{n} X_i$$

then Y follows a gamma distribution, Y ∼ Gamma(n, 𝜆) with the following probability density function:

$$f_Y(y) = \frac{(\lambda y)^{n-1}}{(n - 1)!} \lambda e^{-\lambda y}, \quad y \ge 0.$$

Show also that $\mathbb{E}(Y) = \dfrac{n}{\lambda}$ and $\mathrm{Var}(Y) = \dfrac{n}{\lambda^2}$.

Solution: From the definition of the cumulative distribution function of W = U + V we obtain

$$
F_W(w) = \mathbb{P}(W \le w) = \mathbb{P}(U + V \le w) = \iint_{u + v \le w} f_{UV}(u, v)\,du\,dv
$$

where $f_{UV}(u, v)$ is the joint probability density function of (U, V). Since U ⟂⟂ V therefore $f_{UV}(u, v) = f_U(u) f_V(v)$ and hence

$$
\begin{aligned}
F_W(w) &= \iint_{u + v \le w} f_{UV}(u, v)\,du\,dv \\
&= \iint_{u + v \le w} f_U(u) f_V(v)\,du\,dv \\
&= \int_{-\infty}^{\infty} \left\{ \int_{-\infty}^{w - u} f_V(v)\,dv \right\} f_U(u)\,du \\
&= \int_{-\infty}^{\infty} F_V(w - u) f_U(u)\,du.
\end{aligned}
$$

By differentiating $F_W(w)$ with respect to w, we have the probability density function $f_W(w)$ given as

$$
f_W(w) = \frac{d}{dw} \int_{-\infty}^{\infty} F_V(w - u) f_U(u)\,du = \int_{-\infty}^{\infty} f_V(w - u) f_U(u)\,du.
$$

Using the same steps we can also obtain

$$f_W(w) = \int_{-\infty}^{\infty} f_U(w - v) f_V(v)\,dv.$$

To show that $Y = \sum_{i=1}^{n} X_i \sim \text{Gamma}(n, \lambda)$ where X1, X2, . . . , Xn ∼ Exp(𝜆), we will prove the result via mathematical induction.

For n = 1, we have Y = X1 ∼ Exp(𝜆) and the gamma density $f_Y(y)$ becomes

$$f_Y(y) = \lambda e^{-\lambda y}, \quad y \ge 0.$$

Therefore, the result is true for n = 1.

Let us assume that the result holds for n = k and we now wish to compute the density for the case n = k + 1. Since X1, X2, . . . , Xk+1 are all mutually independent and identically distributed, by setting $U = \sum_{i=1}^{k} X_i$ and $V = X_{k+1}$, and since U ≥ 0, V ≥ 0, the density of $Y = \sum_{i=1}^{k} X_i + X_{k+1}$ can be expressed as

$$
\begin{aligned}
f_Y(y) &= \int_0^y f_V(y - u) f_U(u)\,du \\
&= \int_0^y \lambda e^{-\lambda(y - u)} \cdot \frac{(\lambda u)^{k-1}}{(k - 1)!} \lambda e^{-\lambda u}\,du \\
&= \frac{\lambda^{k+1} e^{-\lambda y}}{(k - 1)!} \int_0^y u^{k-1}\,du \\
&= \frac{(\lambda y)^k}{k!} \lambda e^{-\lambda y}
\end{aligned}
$$

which shows the result is also true for n = k + 1. Thus, $Y = \sum_{i=1}^{n} X_i \sim \text{Gamma}(n, \lambda)$.

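Given that X1, X2, . . . , Xn ∼ Exp(𝜆) are independent and identically distributed with common mean $\dfrac{1}{\lambda}$ and variance $\dfrac{1}{\lambda^2}$, therefore

$$\mathbb{E}(Y) = \mathbb{E}\left(\sum_{i=1}^{n} X_i\right) = \sum_{i=1}^{n} \mathbb{E}(X_i) = \frac{n}{\lambda}$$

and

$$\mathrm{Var}(Y) = \mathrm{Var}\left(\sum_{i=1}^{n} X_i\right) = \sum_{i=1}^{n} \mathrm{Var}(X_i) = \frac{n}{\lambda^2}.$$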

◽
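The induction step can be checked numerically: convolving the Gamma(k, 𝜆) density with the Exp(𝜆) density should reproduce the Gamma(k + 1, 𝜆) density. The sketch below uses a hand-written composite Simpson rule (a choice made here for self-containment, not something prescribed by the text); all function names are illustrative.

```python
from math import exp, factorial


def gamma_pdf(y, n, lam):
    """Density of Gamma(n, lam) for integer n >= 1; Gamma(1, lam) is Exp(lam)."""
    if y < 0:
        return 0.0
    return (lam * y) ** (n - 1) / factorial(n - 1) * lam * exp(-lam * y)


def simpson(f, a, b, steps=2000):
    """Composite Simpson rule on [a, b]; steps must be even."""
    h = (b - a) / steps
    total = f(a) + f(b)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


def convolved_pdf(y, k, lam):
    """Density at y of U + V with U ~ Gamma(k, lam), V ~ Exp(lam) independent."""
    return simpson(
        lambda u: gamma_pdf(y - u, 1, lam) * gamma_pdf(u, k, lam), 0.0, y
    )
```

Because the exponentials combine to a constant factor, the integrand is a polynomial in u of degree k − 1, so Simpson's rule is essentially exact for small k.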

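6. Normal Distribution Property I. Show that for constants a, t, L and U such that t > 0 and L < U,

$$
\frac{1}{\sqrt{2\pi t}} \int_L^U e^{aw - \frac{1}{2}\left(\frac{w}{\sqrt{t}}\right)^2}\,dw
= e^{\frac{1}{2}a^2 t} \left[ \Phi\left(\frac{U - at}{\sqrt{t}}\right) - \Phi\left(\frac{L - at}{\sqrt{t}}\right) \right]
$$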

where Φ(⋅) is the cumulative distribution function of a standard normal.


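Solution: Simplifying the integrand we have

$$
\begin{aligned}
\frac{1}{\sqrt{2\pi t}} \int_L^U e^{aw - \frac{1}{2}\left(\frac{w}{\sqrt{t}}\right)^2}\,dw
&= \frac{1}{\sqrt{2\pi t}} \int_L^U e^{-\frac{1}{2}\left(\frac{w^2 - 2awt}{t}\right)}\,dw \\
&= \frac{1}{\sqrt{2\pi t}} \int_L^U e^{-\frac{1}{2}\left[\left(\frac{w - at}{\sqrt{t}}\right)^2 - a^2 t\right]}\,dw \\
&= \frac{e^{\frac{1}{2}a^2 t}}{\sqrt{2\pi t}} \int_L^U e^{-\frac{1}{2}\left(\frac{w - at}{\sqrt{t}}\right)^2}\,dw.
\end{aligned}
$$

By setting $x = \dfrac{w - at}{\sqrt{t}}$ we can write

$$
\frac{1}{\sqrt{2\pi t}} \int_L^U e^{-\frac{1}{2}\left(\frac{w - at}{\sqrt{t}}\right)^2}\,dw
= \int_{\frac{L - at}{\sqrt{t}}}^{\frac{U - at}{\sqrt{t}}} \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}x^2}\,dx
= \Phi\left(\frac{U - at}{\sqrt{t}}\right) - \Phi\left(\frac{L - at}{\sqrt{t}}\right).
$$

Thus,

$$
\frac{1}{\sqrt{2\pi t}} \int_L^U e^{aw - \frac{1}{2}\left(\frac{w}{\sqrt{t}}\right)^2}\,dw
= e^{\frac{1}{2}a^2 t} \left[ \Phi\left(\frac{U - at}{\sqrt{t}}\right) - \Phi\left(\frac{L - at}{\sqrt{t}}\right) \right].
$$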

◽
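The identity can be sanity-checked by comparing the closed form with direct numerical integration of the left-hand side. A minimal sketch using only the standard library; Φ is built from `math.erf`, and the Simpson rule and function names are illustrative choices.

```python
from math import erf, exp, pi, sqrt


def norm_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + erf(x / sqrt(2.0)))


def closed_form(a, t, lower, upper):
    """Right-hand side: exp(a^2 t / 2) [Phi((U - at)/sqrt t) - Phi((L - at)/sqrt t)]."""
    root_t = sqrt(t)
    return exp(0.5 * a * a * t) * (
        norm_cdf((upper - a * t) / root_t) - norm_cdf((lower - a * t) / root_t)
    )


def numeric(a, t, lower, upper, steps=4000):
    """Left-hand side via the composite Simpson rule (steps must be even)."""
    def integrand(w):
        return exp(a * w - 0.5 * w * w / t) / sqrt(2.0 * pi * t)

    h = (upper - lower) / steps
    total = integrand(lower) + integrand(upper)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * integrand(lower + i * h)
    return total * h / 3
```

For example, with a = 0.4, t = 2, L = −1 and U = 3 the two sides agree to many decimal places.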

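7. Normal Distribution Property II. Show that if X ∼ 𝒩(𝜇, 𝜎²) then for 𝛿 ∈ {−1, 1},

$$
\mathbb{E}\left[\max\{\delta(e^X - K), 0\}\right]
= \delta e^{\mu + \frac{1}{2}\sigma^2}\, \Phi\left(\frac{\delta(\mu + \sigma^2 - \log K)}{\sigma}\right)
- \delta K\, \Phi\left(\frac{\delta(\mu - \log K)}{\sigma}\right)
$$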

where K > 0 and Φ(⋅) denotes the cumulative standard normal distribution function.

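Solution: We first let 𝛿 = 1,

$$
\begin{aligned}
\mathbb{E}\left[\max\{e^X - K, 0\}\right]
&= \int_{\log K}^{\infty} (e^x - K) f_X(x)\,dx \\
&= \int_{\log K}^{\infty} (e^x - K) \frac{1}{\sigma\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{x - \mu}{\sigma}\right)^2}\,dx \\
&= \int_{\log K}^{\infty} \frac{1}{\sigma\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{x - \mu}{\sigma}\right)^2 + x}\,dx
- K \int_{\log K}^{\infty} \frac{1}{\sigma\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{x - \mu}{\sigma}\right)^2}\,dx.
\end{aligned}
$$

By setting $w = \dfrac{x - \mu}{\sigma}$ and $z = w - \sigma$ we have

$$
\begin{aligned}
\mathbb{E}\left[\max\{e^X - K, 0\}\right]
&= \int_{\frac{\log K - \mu}{\sigma}}^{\infty} \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}w^2 + \sigma w + \mu}\,dw
- K \int_{\frac{\log K - \mu}{\sigma}}^{\infty} \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}w^2}\,dw \\
&= e^{\mu + \frac{1}{2}\sigma^2} \int_{\frac{\log K - \mu}{\sigma}}^{\infty} \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}(w - \sigma)^2}\,dw
- K \int_{\frac{\log K - \mu}{\sigma}}^{\infty} \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}w^2}\,dw \\
&= e^{\mu + \frac{1}{2}\sigma^2} \int_{\frac{\log K - \mu - \sigma^2}{\sigma}}^{\infty} \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}z^2}\,dz
- K \int_{\frac{\log K - \mu}{\sigma}}^{\infty} \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}w^2}\,dw \\
&= e^{\mu + \frac{1}{2}\sigma^2}\, \Phi\left(\frac{\mu + \sigma^2 - \log K}{\sigma}\right) - K\, \Phi\left(\frac{\mu - \log K}{\sigma}\right).
\end{aligned}
$$

Using similar steps for the case 𝛿 = −1 we can also show that

$$
\mathbb{E}\left[\max\{K - e^X, 0\}\right]
= K\, \Phi\left(\frac{\log K - \mu}{\sigma}\right) - e^{\mu + \frac{1}{2}\sigma^2}\, \Phi\left(\frac{\log K - (\mu + \sigma^2)}{\sigma}\right).
$$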

◽
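The closed form for both signs of 𝛿 can be compared against direct numerical integration of the payoff against the normal density. The sketch below is illustrative: the function names, the truncation of the integration range at 12 standard deviations, and the Simpson rule are choices made here, not part of the text.

```python
from math import erf, exp, log, pi, sqrt


def norm_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + erf(x / sqrt(2.0)))


def closed_form(mu, sigma, strike, delta):
    """E[max(delta (e^X - K), 0)] for X ~ N(mu, sigma^2), delta in {-1, 1}."""
    forward = exp(mu + 0.5 * sigma * sigma)
    d1 = delta * (mu + sigma * sigma - log(strike)) / sigma
    d2 = delta * (mu - log(strike)) / sigma
    return delta * forward * norm_cdf(d1) - delta * strike * norm_cdf(d2)


def numeric(mu, sigma, strike, delta, steps=4000):
    """Same expectation by Simpson integration over the region where the payoff is positive."""
    log_k = log(strike)
    if delta == 1:
        lo, hi = log_k, max(log_k, mu + 12.0 * sigma)
    else:
        lo, hi = min(log_k, mu - 12.0 * sigma), log_k
    if hi <= lo:
        return 0.0

    def integrand(x):
        density = exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * sqrt(2.0 * pi))
        return delta * (exp(x) - strike) * density

    h = (hi - lo) / steps
    total = integrand(lo) + integrand(hi)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * integrand(lo + i * h)
    return total * h / 3
```

A useful consistency check is that the 𝛿 = 1 value minus the 𝛿 = −1 value equals 𝔼(e^X) − K, since max{a, 0} − max{−a, 0} = a.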

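8. For x > 0 show that

$$
\left(\frac{1}{x} - \frac{1}{x^3}\right) \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}x^2}
\le \int_x^{\infty} \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}z^2}\,dz
\le \frac{1}{x\sqrt{2\pi}} e^{-\frac{1}{2}x^2}.
$$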

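Solution: Solving $\displaystyle\int_x^{\infty} \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}z^2}\,dz$ using integration by parts, we let $u = \dfrac{1}{z}$, $\dfrac{dv}{dz} = \dfrac{z}{\sqrt{2\pi}} e^{-\frac{1}{2}z^2}$ so that $\dfrac{du}{dz} = -\dfrac{1}{z^2}$ and $v = -\dfrac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}z^2}$. Therefore,

$$
\begin{aligned}
\int_x^{\infty} \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}z^2}\,dz
&= \left. -\frac{1}{z\sqrt{2\pi}} e^{-\frac{1}{2}z^2} \right|_x^{\infty} - \int_x^{\infty} \frac{1}{z^2\sqrt{2\pi}} e^{-\frac{1}{2}z^2}\,dz \\
&= \frac{1}{x\sqrt{2\pi}} e^{-\frac{1}{2}x^2} - \int_x^{\infty} \frac{1}{z^2\sqrt{2\pi}} e^{-\frac{1}{2}z^2}\,dz \\
&\le \frac{1}{x\sqrt{2\pi}} e^{-\frac{1}{2}x^2}
\end{aligned}
$$

since $\displaystyle\int_x^{\infty} \frac{1}{z^2\sqrt{2\pi}} e^{-\frac{1}{2}z^2}\,dz > 0$.

To obtain the lower bound, we integrate $\displaystyle\int_x^{\infty} \frac{1}{z^2\sqrt{2\pi}} e^{-\frac{1}{2}z^2}\,dz$ by parts where we let $u = \dfrac{1}{z^3}$, $\dfrac{dv}{dz} = \dfrac{z}{\sqrt{2\pi}} e^{-\frac{1}{2}z^2}$ so that $\dfrac{du}{dz} = -\dfrac{3}{z^4}$ and $v = -\dfrac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}z^2}$ and hence

$$
\begin{aligned}
\int_x^{\infty} \frac{1}{z^2\sqrt{2\pi}} e^{-\frac{1}{2}z^2}\,dz
&= \left. -\frac{1}{z^3\sqrt{2\pi}} e^{-\frac{1}{2}z^2} \right|_x^{\infty} - \int_x^{\infty} \frac{3}{z^4\sqrt{2\pi}} e^{-\frac{1}{2}z^2}\,dz \\
&= \frac{1}{x^3\sqrt{2\pi}} e^{-\frac{1}{2}x^2} - \int_x^{\infty} \frac{3}{z^4\sqrt{2\pi}} e^{-\frac{1}{2}z^2}\,dz.
\end{aligned}
$$

Therefore,

$$
\begin{aligned}
\int_x^{\infty} \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}z^2}\,dz
&= \frac{1}{x\sqrt{2\pi}} e^{-\frac{1}{2}x^2} - \frac{1}{x^3\sqrt{2\pi}} e^{-\frac{1}{2}x^2} + \int_x^{\infty} \frac{3}{z^4\sqrt{2\pi}} e^{-\frac{1}{2}z^2}\,dz \\
&\ge \left(\frac{1}{x} - \frac{1}{x^3}\right) \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}x^2}
\end{aligned}
$$

since $\displaystyle\int_x^{\infty} \frac{3}{z^4\sqrt{2\pi}} e^{-\frac{1}{2}z^2}\,dz > 0$. By taking into account both the lower and upper bounds we have

$$
\left(\frac{1}{x} - \frac{1}{x^3}\right) \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}x^2}
\le \int_x^{\infty} \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}z^2}\,dz
\le \frac{1}{x\sqrt{2\pi}} e^{-\frac{1}{2}x^2}.
$$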

◽
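The two-sided bound on the normal tail can be checked numerically using the complementary error function, since the upper tail equals ½ erfc(x∕√2). A minimal sketch; the function names are illustrative.

```python
from math import erfc, exp, pi, sqrt


def upper_tail(x):
    """Integral from x to infinity of the standard normal density."""
    return 0.5 * erfc(x / sqrt(2.0))


def tail_bounds(x):
    """Return (lower, upper) bounds on the upper tail for x > 0."""
    phi = exp(-0.5 * x * x) / sqrt(2.0 * pi)
    return (1.0 / x - 1.0 / x**3) * phi, phi / x
```

The bounds tighten quickly as x grows (their ratio is 1 − 1∕x²), while for x ≤ 1 the lower bound is non-positive and therefore uninformative.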

9. Lognormal Distribution I. Let Z ∼ 𝒩(0, 1), show that the moment generating function of a standard normal distribution is

$$\mathbb{E}\left(e^{\theta Z}\right) = e^{\frac{1}{2}\theta^2}$$

for a constant 𝜃.

Show that if X ∼ 𝒩(𝜇, 𝜎²) then $Y = e^X$ follows a lognormal distribution, $Y = e^X \sim \text{log-}\mathcal{N}(\mu, \sigma^2)$ with probability density function

$$f_Y(y) = \frac{1}{y\sigma\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{\log y - \mu}{\sigma}\right)^2}$$

with mean $\mathbb{E}(Y) = e^{\mu + \frac{1}{2}\sigma^2}$ and variance $\mathrm{Var}(Y) = \left(e^{\sigma^2} - 1\right) e^{2\mu + \sigma^2}$.

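Solution: By definition

$$
\begin{aligned}
\mathbb{E}\left(e^{\theta Z}\right)
&= \int_{-\infty}^{\infty} e^{\theta z} \cdot \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}z^2}\,dz \\
&= \int_{-\infty}^{\infty} \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}(z - \theta)^2 + \frac{1}{2}\theta^2}\,dz \\
&= e^{\frac{1}{2}\theta^2} \int_{-\infty}^{\infty} \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}(z - \theta)^2}\,dz \\
&= e^{\frac{1}{2}\theta^2}.
\end{aligned}
$$

For y > 0, by definition

$$
\mathbb{P}\left(e^X < y\right) = \mathbb{P}(X < \log y) = \int_{-\infty}^{\log y} \frac{1}{\sigma\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{x - \mu}{\sigma}\right)^2}\,dx
$$

and hence

$$
f_Y(y) = \frac{d}{dy} \mathbb{P}\left(e^X < y\right) = \frac{1}{y\sigma\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{\log y - \mu}{\sigma}\right)^2}.
$$

Given that log Y ∼ 𝒩(𝜇, 𝜎²) we can write log Y = 𝜇 + 𝜎Z, Z ∼ 𝒩(0, 1) and hence

$$
\mathbb{E}(Y) = \mathbb{E}\left(e^{\mu + \sigma Z}\right) = e^{\mu}\,\mathbb{E}\left(e^{\sigma Z}\right) = e^{\mu + \frac{1}{2}\sigma^2}
$$

since $\mathbb{E}\left(e^{\theta Z}\right) = e^{\frac{1}{2}\theta^2}$ is the moment generating function of a standard normal distribution.

Taking second moments,

$$
\mathbb{E}\left(Y^2\right) = \mathbb{E}\left(e^{2\mu + 2\sigma Z}\right) = e^{2\mu}\,\mathbb{E}\left(e^{2\sigma Z}\right) = e^{2\mu + 2\sigma^2}.
$$

Therefore,

$$
\mathrm{Var}(Y) = \mathbb{E}(Y^2) - [\mathbb{E}(Y)]^2 = e^{2\mu + 2\sigma^2} - \left(e^{\mu + \frac{1}{2}\sigma^2}\right)^2 = \left(e^{\sigma^2} - 1\right) e^{2\mu + \sigma^2}.
$$

◽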

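10. Lognormal Distribution II. Let X ∼ log-𝒩(𝜇, 𝜎²), show that for n ∈ ℕ

$$\mathbb{E}(X^n) = e^{n\mu + \frac{1}{2}n^2\sigma^2}.$$

Solution: Given X ∼ log-𝒩(𝜇, 𝜎²) the density function is

$$f_X(x) = \frac{1}{x\sigma\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{\log x - \mu}{\sigma}\right)^2}, \quad x > 0$$

and for n ∈ ℕ,

$$
\mathbb{E}(X^n) = \int_0^{\infty} x^n f_X(x)\,dx = \int_0^{\infty} \frac{1}{x\sigma\sqrt{2\pi}} x^n e^{-\frac{1}{2}\left(\frac{\log x - \mu}{\sigma}\right)^2}\,dx.
$$

By substituting $z = \dfrac{\log x - \mu}{\sigma}$ so that $x = e^{\sigma z + \mu}$ and $\dfrac{dz}{dx} = \dfrac{1}{x\sigma}$,

$$
\begin{aligned}
\mathbb{E}(X^n)
&= \int_{-\infty}^{\infty} \frac{1}{x\sigma\sqrt{2\pi}} e^{n(\sigma z + \mu)} e^{-\frac{1}{2}z^2} x\sigma\,dz \\
&= \int_{-\infty}^{\infty} \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}z^2 + n(\sigma z + \mu)}\,dz \\
&= e^{n\mu + \frac{1}{2}n^2\sigma^2} \int_{-\infty}^{\infty} \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}(z - n\sigma)^2}\,dz \\
&= e^{n\mu + \frac{1}{2}n^2\sigma^2}
\end{aligned}
$$

since $\displaystyle\int_{-\infty}^{\infty} \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}(z - n\sigma)^2}\,dz = 1$.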

◽
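The moment formula can be confirmed by integrating in the z variable used in the substitution above. A minimal sketch; the truncation of the integral to 12 standard deviations around n𝜎, the Simpson rule and the function names are illustrative choices.

```python
from math import exp, isclose, pi, sqrt


def lognormal_moment_closed(n, mu, sigma):
    """E(X^n) = exp(n mu + n^2 sigma^2 / 2) for X ~ log-N(mu, sigma^2)."""
    return exp(n * mu + 0.5 * n * n * sigma * sigma)


def lognormal_moment_numeric(n, mu, sigma, steps=4000):
    """E(X^n) as the integral of exp(n (sigma z + mu)) against the N(0, 1) density."""
    def integrand(z):
        return exp(n * (sigma * z + mu) - 0.5 * z * z) / sqrt(2.0 * pi)

    # The integrand is a Gaussian bump centred at z = n * sigma.
    lo, hi = n * sigma - 12.0, n * sigma + 12.0
    h = (hi - lo) / steps
    total = integrand(lo) + integrand(hi)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * integrand(lo + i * h)
    return total * h / 3
```

Taking n = 1 and n = 2 also recovers the lognormal mean and variance quoted in Problem 9.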

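11. Folded Normal Distribution. Show that if X ∼ 𝒩(𝜇, 𝜎²) then Y = |X| follows a folded normal distribution, $Y = |X| \sim \mathcal{N}_f(\mu, \sigma^2)$ with probability density function

$$
f_Y(y) = \sqrt{\frac{2}{\pi\sigma^2}}\, e^{-\frac{1}{2}\left(\frac{y^2 + \mu^2}{\sigma^2}\right)} \cosh\left(\frac{\mu y}{\sigma^2}\right)
$$

with mean

$$
\mathbb{E}(Y) = \sigma\sqrt{\frac{2}{\pi}}\, e^{-\frac{1}{2}\left(\frac{\mu}{\sigma}\right)^2} + \mu\left[1 - 2\Phi\left(-\frac{\mu}{\sigma}\right)\right]
$$

and variance

$$
\mathrm{Var}(Y) = \mu^2 + \sigma^2 - \left\{ \sigma\sqrt{\frac{2}{\pi}}\, e^{-\frac{1}{2}\left(\frac{\mu}{\sigma}\right)^2} + \mu\left[1 - 2\Phi\left(-\frac{\mu}{\sigma}\right)\right] \right\}^2
$$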

where Φ(⋅) is the cumulative distribution function of a standard normal.

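Solution: For y > 0, by definition

$$
\mathbb{P}(|X| < y) = \mathbb{P}(-y < X < y) = \int_{-y}^{y} \frac{1}{\sigma\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{x - \mu}{\sigma}\right)^2}\,dx
$$

and hence

$$
\begin{aligned}
f_Y(y) = \frac{d}{dy} \mathbb{P}(|X| < y)
&= \frac{1}{\sigma\sqrt{2\pi}} \left[ e^{-\frac{1}{2}\left(\frac{y + \mu}{\sigma}\right)^2} + e^{-\frac{1}{2}\left(\frac{y - \mu}{\sigma}\right)^2} \right] \\
&= \sqrt{\frac{2}{\pi\sigma^2}}\, e^{-\frac{1}{2}\left(\frac{y^2 + \mu^2}{\sigma^2}\right)} \cosh\left(\frac{\mu y}{\sigma^2}\right).
\end{aligned}
$$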

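By definition

$$
\begin{aligned}
\mathbb{E}(Y) &= \int_{-\infty}^{\infty} y f_Y(y)\,dy \\
&= \int_0^{\infty} \frac{y}{\sigma\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{y + \mu}{\sigma}\right)^2}\,dy + \int_0^{\infty} \frac{y}{\sigma\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{y - \mu}{\sigma}\right)^2}\,dy.
\end{aligned}
$$

By setting z = (y + 𝜇)∕𝜎 and w = (y − 𝜇)∕𝜎 we have

$$
\begin{aligned}
\mathbb{E}(Y)
&= \int_{\mu/\sigma}^{\infty} \frac{1}{\sqrt{2\pi}} (\sigma z - \mu) e^{-\frac{1}{2}z^2}\,dz + \int_{-\mu/\sigma}^{\infty} \frac{1}{\sqrt{2\pi}} (\sigma w + \mu) e^{-\frac{1}{2}w^2}\,dw \\
&= \frac{\sigma}{\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{\mu}{\sigma}\right)^2} - \mu\left[1 - \Phi\left(\frac{\mu}{\sigma}\right)\right] + \frac{\sigma}{\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{\mu}{\sigma}\right)^2} + \mu\left[1 - \Phi\left(-\frac{\mu}{\sigma}\right)\right] \\
&= \frac{2\sigma}{\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{\mu}{\sigma}\right)^2} + \mu\left[\Phi\left(\frac{\mu}{\sigma}\right) - \Phi\left(-\frac{\mu}{\sigma}\right)\right] \\
&= \sigma\sqrt{\frac{2}{\pi}}\, e^{-\frac{1}{2}\left(\frac{\mu}{\sigma}\right)^2} + \mu\left[1 - 2\Phi\left(-\frac{\mu}{\sigma}\right)\right].
\end{aligned}
$$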

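To evaluate $\mathbb{E}(Y^2)$ by definition,

$$
\begin{aligned}
\mathbb{E}\left(Y^2\right) &= \int_{-\infty}^{\infty} y^2 f_Y(y)\,dy \\
&= \int_0^{\infty} \frac{y^2}{\sigma\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{y + \mu}{\sigma}\right)^2}\,dy + \int_0^{\infty} \frac{y^2}{\sigma\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{y - \mu}{\sigma}\right)^2}\,dy.
\end{aligned}
$$

By setting z = (y + 𝜇)∕𝜎 and w = (y − 𝜇)∕𝜎 we have

$$
\begin{aligned}
\mathbb{E}\left(Y^2\right)
={}& \int_{\mu/\sigma}^{\infty} \frac{1}{\sqrt{2\pi}} (\sigma z - \mu)^2 e^{-\frac{1}{2}z^2}\,dz + \int_{-\mu/\sigma}^{\infty} \frac{1}{\sqrt{2\pi}} (\sigma w + \mu)^2 e^{-\frac{1}{2}w^2}\,dw \\
={}& \frac{\sigma^2}{\sqrt{2\pi}} \int_{\mu/\sigma}^{\infty} z^2 e^{-\frac{1}{2}z^2}\,dz - \frac{2\mu\sigma}{\sqrt{2\pi}} \int_{\mu/\sigma}^{\infty} z e^{-\frac{1}{2}z^2}\,dz + \mu^2 \int_{\mu/\sigma}^{\infty} \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}z^2}\,dz \\
& + \frac{\sigma^2}{\sqrt{2\pi}} \int_{-\mu/\sigma}^{\infty} w^2 e^{-\frac{1}{2}w^2}\,dw + \frac{2\mu\sigma}{\sqrt{2\pi}} \int_{-\mu/\sigma}^{\infty} w e^{-\frac{1}{2}w^2}\,dw + \mu^2 \int_{-\mu/\sigma}^{\infty} \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}w^2}\,dw \\
={}& \frac{\sigma^2}{\sqrt{2\pi}} \left[ \left(\frac{\mu}{\sigma}\right) e^{-\frac{1}{2}\left(\frac{\mu}{\sigma}\right)^2} + \sqrt{2\pi}\left(1 - \Phi\left(\frac{\mu}{\sigma}\right)\right) \right] - \frac{2\mu\sigma}{\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{\mu}{\sigma}\right)^2} + \mu^2 \left[1 - \Phi\left(\frac{\mu}{\sigma}\right)\right] \\
& + \frac{\sigma^2}{\sqrt{2\pi}} \left[ -\left(\frac{\mu}{\sigma}\right) e^{-\frac{1}{2}\left(\frac{\mu}{\sigma}\right)^2} + \sqrt{2\pi}\left(1 - \Phi\left(-\frac{\mu}{\sigma}\right)\right) \right] + \frac{2\mu\sigma}{\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{\mu}{\sigma}\right)^2} + \mu^2 \left[1 - \Phi\left(-\frac{\mu}{\sigma}\right)\right] \\
={}& \left(\mu^2 + \sigma^2\right) \left[ 2 - \Phi\left(\frac{\mu}{\sigma}\right) - \Phi\left(-\frac{\mu}{\sigma}\right) \right] \\
={}& \mu^2 + \sigma^2.
\end{aligned}
$$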


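Therefore,

$$
\mathrm{Var}(Y) = \mathbb{E}(Y^2) - [\mathbb{E}(Y)]^2 = \mu^2 + \sigma^2 - \left\{ \sigma\sqrt{\frac{2}{\pi}}\, e^{-\frac{1}{2}\left(\frac{\mu}{\sigma}\right)^2} + \mu\left[1 - 2\Phi\left(-\frac{\mu}{\sigma}\right)\right] \right\}^2.
$$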

◽
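The folded normal mean and second moment can be checked by integrating y f_Y(y) and y² f_Y(y) numerically. A minimal sketch with illustrative function names; the upper integration limit |𝜇| + 12𝜎 and the Simpson rule are choices made here.

```python
from math import cosh, erf, exp, pi, sqrt


def norm_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + erf(x / sqrt(2.0)))


def folded_pdf(y, mu, sigma):
    """Density of |X| for X ~ N(mu, sigma^2), evaluated at y >= 0."""
    s2 = sigma * sigma
    return sqrt(2.0 / (pi * s2)) * exp(-0.5 * (y * y + mu * mu) / s2) * cosh(mu * y / s2)


def folded_mean_closed(mu, sigma):
    """Closed-form mean of the folded normal distribution."""
    return sigma * sqrt(2.0 / pi) * exp(-0.5 * (mu / sigma) ** 2) + mu * (
        1.0 - 2.0 * norm_cdf(-mu / sigma)
    )


def folded_moment_numeric(power, mu, sigma, steps=4000):
    """E(Y^power) by Simpson integration over [0, |mu| + 12 sigma]."""
    hi = abs(mu) + 12.0 * sigma
    h = hi / steps
    f = lambda y: y**power * folded_pdf(y, mu, sigma)
    total = f(0.0) + f(hi)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * f(i * h)
    return total * h / 3
```

The second moment should come out as 𝜇² + 𝜎² regardless of the sign of 𝜇, which is what makes the variance formula reduce to 𝜇² + 𝜎² − [𝔼(Y)]².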

12. Chi-Square Distribution. Show that if X ∼ 𝒩(𝜇, 𝜎²) then $Y = \dfrac{X - \mu}{\sigma} \sim \mathcal{N}(0, 1)$.

Let Z1, Z2, . . . , Zn ∼ 𝒩(0, 1) be a sequence of independent and identically distributed random variables each following a standard normal distribution. Using mathematical induction show that

$$Z = Z_1^2 + Z_2^2 + \ldots + Z_n^2 \sim \chi^2(n)$$

where Z has a probability density function

$$f_Z(z) = \frac{1}{2^{\frac{n}{2}}\, \Gamma\left(\frac{n}{2}\right)} z^{\frac{n}{2} - 1} e^{-\frac{z}{2}}, \quad z \ge 0$$

such that

$$\Gamma\left(\frac{n}{2}\right) = \int_0^{\infty} e^{-x} x^{\frac{n}{2} - 1}\,dx.$$

Finally, show that 𝔼(Z) = n and Var(Z) = 2n.

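Solution: By setting $Y = \dfrac{X - \mu}{\sigma}$ then

$$
\mathbb{P}(Y \le y) = \mathbb{P}\left(\frac{X - \mu}{\sigma} \le y\right) = \mathbb{P}(X \le \mu + \sigma y).
$$

Differentiating with respect to y,

$$
\begin{aligned}
f_Y(y) &= \frac{d}{dy} \mathbb{P}(Y \le y) \\
&= \frac{d}{dy} \int_{-\infty}^{\mu + \sigma y} \frac{1}{\sigma\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{x - \mu}{\sigma}\right)^2}\,dx \\
&= \frac{1}{\sigma\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{\mu + \sigma y - \mu}{\sigma}\right)^2} \sigma \\
&= \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}y^2}
\end{aligned}
$$

which is a probability density function of 𝒩(0, 1).

For $Z = Z_1^2$ and given Z ≥ 0, by definition

$$
\mathbb{P}(Z \le z) = \mathbb{P}\left(Z_1^2 \le z\right) = \mathbb{P}\left(-\sqrt{z} < Z_1 < \sqrt{z}\right) = 2 \int_0^{\sqrt{z}} \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}z_1^2}\,dz_1
$$

and hence

$$
f_Z(z) = 2 \frac{d}{dz} \left[ \int_0^{\sqrt{z}} \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}z_1^2}\,dz_1 \right] = \frac{1}{\sqrt{2\pi}} z^{-\frac{1}{2}} e^{-\frac{z}{2}}, \quad z \ge 0
$$

which is the probability density function of 𝜒²(1).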

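For $Z = Z_1^2 + Z_2^2$ such that Z ≥ 0 we have

$$
\mathbb{P}(Z \le z) = \mathbb{P}\left(Z_1^2 + Z_2^2 \le z\right) = \iint_{z_1^2 + z_2^2 \le z} \frac{1}{2\pi} e^{-\frac{1}{2}(z_1^2 + z_2^2)}\,dz_1\,dz_2.
$$

Changing to polar coordinates (r, 𝜃) such that $z_1 = r\cos\theta$ and $z_2 = r\sin\theta$ with the Jacobian determinant

$$
|J| = \left| \frac{\partial(z_1, z_2)}{\partial(r, \theta)} \right|
= \begin{vmatrix} \dfrac{\partial z_1}{\partial r} & \dfrac{\partial z_1}{\partial \theta} \\[2ex] \dfrac{\partial z_2}{\partial r} & \dfrac{\partial z_2}{\partial \theta} \end{vmatrix}
= \begin{vmatrix} \cos\theta & -r\sin\theta \\ \sin\theta & r\cos\theta \end{vmatrix} = r
$$

then

$$
\iint_{z_1^2 + z_2^2 \le z} \frac{1}{2\pi} e^{-\frac{1}{2}(z_1^2 + z_2^2)}\,dz_1\,dz_2
= \int_{\theta=0}^{2\pi} \int_{r=0}^{\sqrt{z}} \frac{1}{2\pi} e^{-\frac{1}{2}r^2} r\,dr\,d\theta
= 1 - e^{-\frac{1}{2}z}.
$$

Thus,

$$
f_Z(z) = \frac{d}{dz} \left[ \iint_{z_1^2 + z_2^2 \le z} \frac{1}{2\pi} e^{-\frac{1}{2}(z_1^2 + z_2^2)}\,dz_1\,dz_2 \right] = \frac{1}{2} e^{-\frac{1}{2}z}, \quad z \ge 0
$$

which is the probability density function of 𝜒²(2).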

Assume the result is true for n = k such that

$$U = Z_1^2 + Z_2^2 + \ldots + Z_k^2 \sim \chi^2(k)$$

and knowing that

$$V = Z_{k+1}^2 \sim \chi^2(1)$$

then, because U ≥ 0 and V ≥ 0 are independent, using the convolution formula the density of $Z = U + V = \sum_{i=1}^{k+1} Z_i^2$ can be written as

$$
\begin{aligned}
f_Z(z) &= \int_0^z f_V(z - u) f_U(u)\,du \\
&= \int_0^z \left\{ \frac{1}{\sqrt{2\pi}} (z - u)^{-\frac{1}{2}} e^{-\frac{1}{2}(z - u)} \right\} \cdot \left\{ \frac{1}{2^{\frac{k}{2}}\, \Gamma\left(\frac{k}{2}\right)} u^{\frac{k}{2} - 1} e^{-\frac{1}{2}u} \right\} du \\
&= \frac{e^{-\frac{1}{2}z}}{\sqrt{2\pi}\, 2^{\frac{k}{2}}\, \Gamma\left(\frac{k}{2}\right)} \int_0^z (z - u)^{-\frac{1}{2}} u^{\frac{k}{2} - 1}\,du.
\end{aligned}
$$

By setting $v = \dfrac{u}{z}$ we have

$$
\int_0^z (z - u)^{-\frac{1}{2}} u^{\frac{k}{2} - 1}\,du
= \int_0^1 (z - vz)^{-\frac{1}{2}} (vz)^{\frac{k}{2} - 1} z\,dv
= z^{\frac{k+1}{2} - 1} \int_0^1 (1 - v)^{-\frac{1}{2}} v^{\frac{k}{2} - 1}\,dv
$$

and because $\displaystyle\int_0^1 (1 - v)^{-\frac{1}{2}} v^{\frac{k}{2} - 1}\,dv = B\left(\frac{1}{2}, \frac{k}{2}\right) = \frac{\sqrt{\pi}\, \Gamma\left(\frac{k}{2}\right)}{\Gamma\left(\frac{k+1}{2}\right)}$ (see Appendix A) therefore

$$
f_Z(z) = \frac{1}{2^{\frac{k+1}{2}}\, \Gamma\left(\frac{k+1}{2}\right)} z^{\frac{k+1}{2} - 1} e^{-\frac{1}{2}z}, \quad z \ge 0
$$

which is the probability density function of 𝜒²(k + 1) and hence the result is also true for n = k + 1. By mathematical induction we have shown

$$Z = Z_1^2 + Z_2^2 + \ldots + Z_n^2 \sim \chi^2(n).$$

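By computing the moment generating function of Z,

$$
M_Z(t) = \mathbb{E}\left(e^{tZ}\right) = \int_0^{\infty} e^{tz} f_Z(z)\,dz = \frac{1}{2^{\frac{n}{2}}\, \Gamma\left(\frac{n}{2}\right)} \int_0^{\infty} z^{\frac{n}{2} - 1} e^{-\frac{1}{2}(1 - 2t)z}\,dz
$$

and by setting $w = \frac{1}{2}(1 - 2t)z$ we have

$$
M_Z(t) = \frac{1}{2^{\frac{n}{2}}\, \Gamma\left(\frac{n}{2}\right)} \left(\frac{2}{1 - 2t}\right)^{\frac{n}{2}} \int_0^{\infty} w^{\frac{n}{2} - 1} e^{-w}\,dw = (1 - 2t)^{-\frac{n}{2}}, \quad t \in \left(-\frac{1}{2}, \frac{1}{2}\right).
$$

Thus,

$$
M_Z'(t) = n(1 - 2t)^{-\left(\frac{n}{2} + 1\right)}, \qquad M_Z''(t) = 2n\left(\frac{n}{2} + 1\right)(1 - 2t)^{-\left(\frac{n}{2} + 2\right)}
$$

such that

$$
\mathbb{E}(Z) = M_Z'(0) = n, \qquad \mathbb{E}\left(Z^2\right) = M_Z''(0) = 2n\left(\frac{n}{2} + 1\right)
$$

and

$$\mathrm{Var}(Z) = \mathbb{E}\left(Z^2\right) - [\mathbb{E}(Z)]^2 = 2n.$$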

◽
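The normalisation, mean and variance of the 𝜒²(n) density can be checked by numerical integration. To avoid the singularity of the density at z = 0 when n = 1, the sketch below substitutes z = s², which turns the integrand into a smooth function of s; this substitution, the Simpson rule and the function names are choices made here for illustration.

```python
from math import exp, gamma


def chi2_pdf(z, n):
    """Density of chi-square(n) at z > 0."""
    return z ** (n / 2 - 1) * exp(-z / 2) / (2 ** (n / 2) * gamma(n / 2))


def root_density(s, n):
    """Density of S = sqrt(Z), i.e. chi2_pdf(s^2, n) * 2s written without a singularity."""
    return 2.0 * s ** (n - 1) * exp(-0.5 * s * s) / (2 ** (n / 2) * gamma(n / 2))


def chi2_moment(power, n, upper=30.0, steps=6000):
    """E(Z^power) = integral over s of s^(2 power) * root_density(s, n), by Simpson's rule."""
    f = lambda s: s ** (2 * power) * root_density(s, n)
    h = upper / steps
    total = f(0.0) + f(upper)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * f(i * h)
    return total * h / 3
```

With this helper, `chi2_moment(1, n)` should be close to n and `chi2_moment(2, n) - chi2_moment(1, n) ** 2` close to 2n, matching the values obtained from the moment generating function.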


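13. Marginal Distributions of Bivariate Normal Distribution. Let X and Y be jointly normally distributed with means $\mu_x$, $\mu_y$, variances $\sigma_x^2$, $\sigma_y^2$ and correlation coefficient $\rho_{xy} \in (-1, 1)$ such that the joint density function is

$$
f_{XY}(x, y) = \frac{1}{2\pi\sigma_x\sigma_y\sqrt{1 - \rho_{xy}^2}}\,
e^{-\frac{1}{2(1 - \rho_{xy}^2)}\left[\left(\frac{x - \mu_x}{\sigma_x}\right)^2 - 2\rho_{xy}\left(\frac{x - \mu_x}{\sigma_x}\right)\left(\frac{y - \mu_y}{\sigma_y}\right) + \left(\frac{y - \mu_y}{\sigma_y}\right)^2\right]}.
$$

Show that $X \sim \mathcal{N}(\mu_x, \sigma_x^2)$ and $Y \sim \mathcal{N}(\mu_y, \sigma_y^2)$.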

Solution: By definition,

∞

fX (x) =

∫−∞

∞

=

∫−∞

∞

=

∫−∞

fXY (x, y) dy

−

1

e

√

2𝜋𝜎x 𝜎y 1 − 𝜌2xy

×e

=

[

(

x−𝜇x

𝜎x

)2

(

−2𝜌xy

x−𝜇x

𝜎x

]

)( y−𝜇 ) ( y−𝜇 )2

y

y

+

𝜎

𝜎

y

y

dy

1

√

2𝜋𝜎x 𝜎y 1 − 𝜌2xy

[

−

1