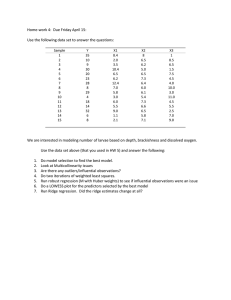

Applied Machine Learning

Annalisa Marsico

OWL RNA Bioinformatics group

Max Planck Institute for Molecular Genetics

Free University of Berlin

SoSe 2015

What is Machine Learning?

The field of Machine Learning seeks to answer the question:

“How can we build computer systems that automatically improve with experience, and what are the fundamental laws that govern all learning processes?”

Arthur Samuel (1959): field of study that gives computers the ability to learn

without being explicitly programmed

– ex: playing checkers against Samuel,

the computer eventually became much better than Samuel

– this was the first solid refutation

to the claim that computers cannot learn

What is Machine Learning?

Tom Mitchell (1998): a computer learns from experience E

with respect to some task T and some performance

measure P, if its performance on T as measured by P

improves with E

What is Machine Learning?

Machine Learning sits between Computer Science and Statistics:

– Computer Science: How can we build machines that solve problems, and which problems are tractable/intractable?

– Statistics: What can be inferred from the data plus some modeling assumptions, with what reliability?

ML's applications

– Army, security
  – imaging: object/face detection and recognition, object tracking
  – mobility: robotics, action learning, automatic driving
– Computers, internet
  – interfaces: brainwaves (for the disabled), handwriting / speech recognition
  – security: spam / virus filtering, virus troubleshooting

ML's applications

– Finance
  – banking: identify good, dissatisfied or prospective customers
  – optimize / minimize credit risk
  – market analysis
– Gaming
  – intelligent: adaptability to the player, agents
  – object tracking, 3D modeling, etc.

ML's applications

– Biomedicine, biometrics
  – medicine: screening, diagnosis and prognosis, drug discovery, etc.
  – security: face recognition, signature, fingerprint, iris verification, etc.
– Bioinformatics
  – motif finder, gene detectors, interaction networks, gene expression predictors, cancer/disease classification, protein folding prediction, etc.

Examples of Learning problems

• Predict whether a patient, hospitalized due to a heart attack, will have a second heart attack, based on diet, blood tests, disease history..

• Identify the risk factors for colon cancer, based on gene expression and clinical measurements.

• Predict if an e-mail is spam or not, based on the most commonly occurring words (email/spam -> classification problem)

• Predict the price of a stock 6 months from now, based on company performance and economic data

You already use it! Some more

examples from daily life..

• Based on past choices, which movies will interest this viewer? (Netflix)

• Based on past choices and metadata, which music will this user probably like? (Lastfm, Spotify)

• Based on past choices and profile features, should we match these people in an online dating service? (Tinder)

• Based on previous purchases, which shoes is the user likely to like? (Zalando)

However, predictive models regularly generate wrong predictions: in 2010 an erroneous algorithm caused a financial crash..

Learning process

• Predictive modeling: process of developing a

mathematical tool or model that generates

accurate predictions

Prediction vs Interpretation

• It is always a trade-off

• If the goal is high accuracy (e.g. a spam filter) then we do not care 'why' and 'how' the model reaches it

• If the goal is interpretability (e.g. in biology, SNPs which predict a certain disease risk) then we care 'why' and 'how'

Key ingredients for a successful

predictive model

• Deep knowledge of the context and the problem

– If a signal is present in the data you are gonna find it

– Choose your features carefully (e.g. collect relevant

data)

• Versatile computational toolbox for model

building, but also data pre-processing,

visualization, statistics

– Weka, Knime, R (check out caret package)

• Critical evaluation

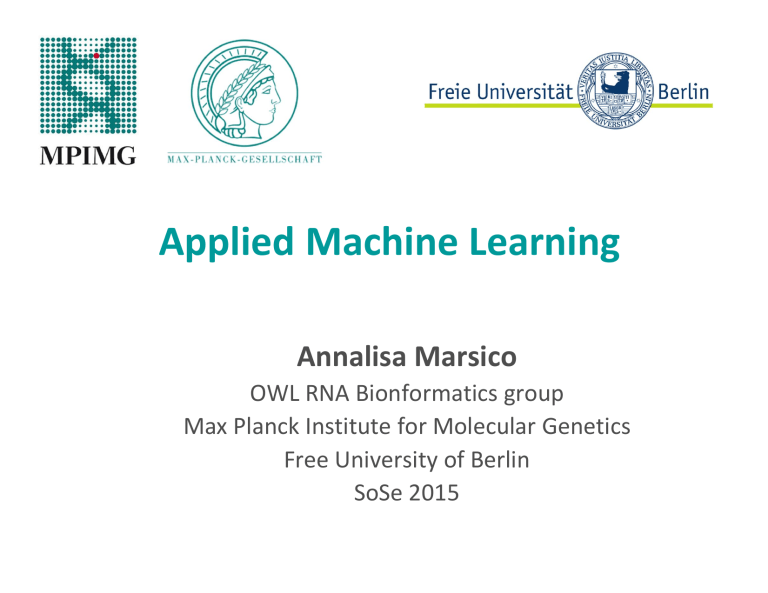

Supervised vs Unsupervised Learning

Typical Scenario

We have an outcome, either quantitative (price of a stock, risk factor..) or categorical (heart attack yes or no), that we want to predict based on some features. We have a training set of data and build a prediction model, a learner, able to predict the outcome of new unseen objects

- A good learner accurately predicts such an outcome

- Supervised learning: the presence of the outcome variable guides the learning process

- Unsupervised learning: we have only features, no outcome

- The task is rather to describe the data

Unsupervised learning

• Find a structure in the data

• Given $X = \{x_n\}$ measurements / observations / features, find a model M such that $p(M|X)$ is maximized, i.e. find the process that is most likely to have generated the data

Supervised learning

Find the connection between two sets of observations: the input set and the output set

– given $\{x_n, y_n\}$, find a hypothesis f (function, classification boundary) such that $\forall n \in [1..N]$, with N the number of observations, $f(x_n) = y_n$

$X = \{x_n\}$: also called predictors, independent variables or covariates

$Y = \{y_n\}$: also called response, dependent variable

Example1: Colorectal Cancer

There is a correlation between CSA (colon specific antigen) and a number of clinical measurements in 200 patients.

Goal: predict CSA from clinical measurements

Supervised learning

Regression problem (outcome measure is quantitative)

Example2: Gene expression

microarrays

Measure the expression of all genes in a cell simultaneously,

by measuring the amount of RNA present in the cell for

that gene. We do this for several experiments (samples).

Goal: understand how genes and samples are organized

- Which genes are predictive for certain samples?

Unsupervised learning: p (# of samples) << N (# of genes)

Supervised learning: yes, possible, with some tricks

Variable Types

• Y quantitative -> regression model

• Y qualitative (categorical) -> classification model (two or more classes)

• Inputs X can also be quantitative or qualitative

• There can be missing values

• Dummy variables are sometimes a convenient way to code qualitative inputs

• Both problems can be viewed as a task in function approximation f(X)

Let's re-formulate the training task

• Given X (features), make a good prediction of Y, denoted by Ŷ (i.e. identify an appropriate function f(X) to model Y). If Y takes values in R, then so should Ŷ (quantitative response). For categorical output, Ĝ should take a class value, as G does (categorical response).

Supervised Linear Models

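Linear Models and Least Squares

Given a vector of inputs $X = (X_1, X_2, \dots, X_p)$, with p = # of features and N = # of points, we want to predict the output Y via the model:

$$\hat{Y} = f(X) = \hat{\beta}_0 + \sum_{j=1}^{p} X_j \hat{\beta}_j$$

where the $\hat{\beta}_j$ are the unknown coefficients (the parameters of the model), or, in matrix notation,

$$\hat{Y} = X^T \hat{\beta}$$

N.B. we have included $\hat{\beta}_0$ in the coefficient vector (and, correspondingly, a constant 1 in X)

For each point i, i = 1, ..., N:

$$y_i = \beta_0 + \beta_1 x_{i1} + \beta_2 x_{i2} + \dots + \beta_p x_{ip}$$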

Linear Models and Least Squares

We want to fit a linear model to a set

of training data {(xi1...xip), yi}. There might be several choices of β.

How do we choose them?

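Linear Models and Least Squares

• Least squares method: we pick the coefficients β to minimize the residual sum of squares

$$RSS(\beta) = \sum_{i=1}^{N} \big(y_i - f(x_i)\big)^2 = \sum_{i=1}^{N} \Big(y_i - \beta_0 - \sum_{j=1}^{p} x_{ij}\beta_j\Big)^2 = \sum_{i=1}^{N} \big(y_i - x_i^T\beta\big)^2$$

The solution is easy to characterize if we write it in matrix notation:

$$RSS(\beta) = (Y - X\beta)^T (Y - X\beta)$$

Differentiating with respect to β and setting the derivative to zero gives

$$X^T (Y - X\beta) = 0$$

$$\hat{\beta} = (X^T X)^{-1} X^T Y$$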
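As a minimal sketch of the closed-form least squares solution, assuming NumPy and a small synthetic data set (the names `X_raw`, `beta_true` and the noise level are illustrative choices, not part of the lecture), the coefficients can be obtained by solving the normal equations and cross-checked against `np.linalg.lstsq`:

```python
import numpy as np

rng = np.random.default_rng(0)
N, p = 100, 3
X_raw = rng.normal(size=(N, p))
beta_true = np.array([1.0, 2.0, -1.0, 0.5])  # [beta_0, beta_1, beta_2, beta_3]

# Prepend a column of ones so that beta_0 is part of the coefficient vector
X = np.column_stack([np.ones(N), X_raw])
y = X @ beta_true + 0.1 * rng.normal(size=N)

# Normal equations: solve (X^T X) beta = X^T y instead of inverting X^T X
beta_hat = np.linalg.solve(X.T @ X, X.T @ y)

# Reference solution from NumPy's least squares routine
beta_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)

# Residual sum of squares at the fitted coefficients
rss = float(np.sum((y - X @ beta_hat) ** 2))
print(beta_hat, rss)
```

Solving the linear system rather than forming the explicit inverse is a common numerical choice; both give the same estimate when $X^T X$ is well conditioned.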

What happens if p > N, i.e. if $X^T X$ is singular?

[Figure: least squares fits with one feature and with two features]
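The question of what happens when p > N can be explored numerically. The following is a sketch under stated assumptions: random synthetic data, NumPy, and the pseudo-inverse used only as one convenient way to obtain an exact fit (it is not a method introduced in the lecture). It shows that $X^T X$ loses rank and that different coefficient vectors give identical predictions on the training data:

```python
import numpy as np

rng = np.random.default_rng(0)
N, p = 5, 10                      # fewer points than features
X = rng.normal(size=(N, p))
y = rng.normal(size=N)

gram = X.T @ X                    # p x p matrix, but its rank is at most N
rank = int(np.linalg.matrix_rank(gram))

# One exact fit: the minimum-norm solution from the pseudo-inverse
beta_a = np.linalg.pinv(X) @ y

# A direction that X maps to zero (last right singular vector of X)
_, _, Vt = np.linalg.svd(X)
null_dir = Vt[-1]

# A different coefficient vector with the same training predictions
beta_b = beta_a + 3.0 * null_dir
print(rank, np.allclose(X @ beta_a, X @ beta_b))
```

Because the solution is not unique, the coefficients cannot be interpreted individually, which is one motivation for reducing the number of predictors.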

Another geometrical interpretation of

linear regression

Least squares regression with two predictors. The outcome vector y is orthogonally projected onto the hyperplane spanned by the input vectors x1 and x2. The projection $\hat{y}$ represents the vector of the least squares predictions.

We minimize $RSS(\beta) = \lVert y - X\beta \rVert^2$ by choosing β so that the residual vector is orthogonal to this subspace.
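The orthogonality of the residual vector can be checked directly. This is a small sketch on synthetic data with two predictors (the data and variable names are assumptions made for illustration):

```python
import numpy as np

rng = np.random.default_rng(1)
N = 50
x1 = rng.normal(size=N)
x2 = rng.normal(size=N)
X = np.column_stack([x1, x2])
y = 2.0 * x1 - 1.0 * x2 + rng.normal(size=N)

beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
y_hat = X @ beta_hat              # projection of y onto span(x1, x2)
residual = y - y_hat

# Inner products of the residual with each input vector (should be ~0)
dots = X.T @ residual

# Pythagoras: ||y||^2 = ||y_hat||^2 + ||residual||^2
lhs = float(y @ y)
rhs = float(y_hat @ y_hat + residual @ residual)
print(dots, lhs, rhs)
```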

Example: Quantitative Structure-Activity Relationship

We want to study the relationship between chemical structure and activity (solubility)

Screen several compounds against a target in a biological assay

Measure quantitative features $x_j$ (molecular weight, electrical charge, surface area, # of atoms..)

The response y is the activity (inhibition, solubility..)

$$y_i = \beta_0 + \beta_1 x_{i1} + \beta_2 x_{i2} + \dots + \beta_p x_{ip}$$

Quantitative structure-activity relationship (QSAR modeling)

[Figure: Aspirin]

Measuring Performance in Regression

Models

If the outcome is a number -> RMSE (function of the model residuals)

$$RMSE = \sqrt{\frac{1}{N}\sum_{i=1}^{N} (y_i - \hat{y}_i)^2}$$

where $y_i$ is the real value and $\hat{y}_i$ is the predicted value.

Another measure is $R^2$ -> the proportion of information in the data which is explained by the model. It is more a measure of correlation than of accuracy.
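A short sketch of both measures follows. It assumes that $R^2$ is computed as the squared Pearson correlation between observed and predicted values, which is one common convention and not necessarily the one intended in the lecture. The toy numbers are made up to show why $R^2$ reflects correlation: predictions that are shifted by a constant are perfectly correlated with the truth but have a large RMSE.

```python
import numpy as np

def rmse(y_true, y_pred):
    """Root mean squared error of the residuals."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

def r_squared(y_true, y_pred):
    """Squared Pearson correlation between observed and predicted values."""
    return float(np.corrcoef(y_true, y_pred)[0, 1] ** 2)

y_obs = np.array([1.0, 2.0, 3.0, 4.0])
y_biased = y_obs + 10.0           # perfectly correlated, but far off

print(rmse(y_obs, y_biased), r_squared(y_obs, y_biased))
```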

A short de-tour of the Predictive

Modeling Process

Always do a scatter plot of the response vs each feature to see if a linear relationship exists!

Introduce some non-linearity into the model, e.g.

$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_1^2$$

or fit a local linear regression
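Adding a squared term keeps the model linear in the coefficients, so ordinary least squares still applies. The sketch below uses synthetic data generated from a quadratic curve (an assumption made for illustration) and compares the RSS of a purely linear fit with that of a fit including $x_1^2$:

```python
import numpy as np

rng = np.random.default_rng(2)
x = np.linspace(-3.0, 3.0, 200)
y = 1.0 + 0.5 * x + 2.0 * x**2 + 0.1 * rng.normal(size=x.size)

def fit_rss(X, y):
    """Least squares fit; returns the coefficients and the RSS."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta, float(np.sum((y - X @ beta) ** 2))

X_lin = np.column_stack([np.ones_like(x), x])          # beta_0 + beta_1 x
X_quad = np.column_stack([np.ones_like(x), x, x**2])   # ... + beta_2 x^2

beta_lin, rss_lin = fit_rss(X_lin, y)
beta_quad, rss_quad = fit_rss(X_quad, y)
print(rss_lin, rss_quad, beta_quad)
```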

A short de-tour of the Predictive

Modeling Process

"How" the predictors enter the model is very important:

1. Data transformation
   1. Centering / scaling
   2. Skewed data
   3. Outliers
2. Feature engineering / feature extraction
   1. What are actually the informative features?

A short de-tour of the Predictive

Modeling Process

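Data transformation

Necessary to avoid biases

$$Z = \frac{x - \bar{x}}{\sigma}$$

where $\bar{x}$ is the mean of the data (centering) and $\sigma$ is the standard deviation (scaling)

Skewness

$$s = \frac{\sum_i (x_i - \bar{x})^3}{(n-1)\, v^{3/2}}, \qquad v = \frac{\sum_i (x_i - \bar{x})^2}{n-1}$$

A value s of 20 indicates high skewness. Log transformation helps reduce the skewness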
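The transformations above can be sketched in a few lines. The example assumes NumPy and a synthetic right-skewed sample drawn from a log-normal distribution (an illustrative choice), and uses the sample standard deviation with n − 1 in the denominator:

```python
import numpy as np

def skewness(x):
    """Sample skewness s = sum((x - mean)^3) / ((n - 1) * v^(3/2))."""
    x = np.asarray(x, dtype=float)
    n = x.size
    d = x - x.mean()
    v = np.sum(d**2) / (n - 1)
    return float(np.sum(d**3) / ((n - 1) * v**1.5))

rng = np.random.default_rng(3)
x = rng.lognormal(mean=0.0, sigma=1.0, size=1000)   # right-skewed data

# Centering and scaling
z = (x - x.mean()) / x.std(ddof=1)

s_raw = skewness(x)
s_log = skewness(np.log(x))       # log transform pulls in the long right tail
print(s_raw, s_log)
```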

Between-Predictor Correlations

Predictors can be correlated. If the correlation among predictors is high, then the ordinary least squares solution for linear regression will have high variability and will be unstable -> poor interpretation

[Figure: correlation heatmap for the structure-solubility data]

Collinearity: high correlation between pairs of variables
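The instability caused by collinearity can be made visible with a small simulation. This is a sketch under assumptions (two Gaussian predictors with correlation `rho`, a hypothetical helper `coef_spread`, 200 repeated synthetic data sets): it measures how much the fitted coefficients vary from one data set to the next.

```python
import numpy as np

def coef_spread(rho, n_rep=200, N=50, seed=0):
    """Std. dev. of the fitted coefficients over repeated synthetic data sets."""
    rng = np.random.default_rng(seed)
    cov = np.array([[1.0, rho], [rho, 1.0]])
    betas = []
    for _ in range(n_rep):
        X = rng.multivariate_normal(np.zeros(2), cov, size=N)
        y = X[:, 0] + X[:, 1] + rng.normal(size=N)
        beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
        betas.append(beta_hat)
    return np.std(np.array(betas), axis=0)

spread_uncorrelated = coef_spread(rho=0.0)
spread_collinear = coef_spread(rho=0.999)
print(spread_uncorrelated, spread_collinear)
```

The true coefficients are the same in both settings; only the correlation between the predictors changes, and with it the variability of the estimates.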

Data reduction and feature extraction

We want to have a smaller set of predictors which captures most of the information in the data -> maybe predictors which are combinations of the original predictors?

Principal Component Analysis (PCA) is a commonly used data reduction technique

A short de-tour of the Predictive

Modeling Process

Data reduction and feature extraction

What about removing correlated predictors?

Yes, possible, but there are cases where a predictor is correlated with a linear combination of other predictors. This is not detectable with pairwise correlation analysis

Other reasons to remove predictors:

1. Zero variance predictors (variables with few unique values)

2. The frequency of unique values is severely disproportionate
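To illustrate why pairwise correlations can miss a linear dependency, the sketch below builds a synthetic predictor `x4` as the sum of three independent predictors (an assumed toy setup). No single pairwise correlation is extreme, yet the design matrix is rank deficient:

```python
import numpy as np

rng = np.random.default_rng(9)
N = 500
x1, x2, x3 = rng.normal(size=(3, N))
x4 = x1 + x2 + x3                 # exact linear combination of the others
X = np.column_stack([x1, x2, x3, x4])

# Largest absolute off-diagonal entry of the correlation matrix
corr = np.corrcoef(X, rowvar=False)
max_pairwise = float(np.max(np.abs(corr - np.eye(4))))

# The rank reveals the dependency that the correlations hide
rank = int(np.linalg.matrix_rank(X))
print(max_pairwise, rank)
```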

Goal: We want a technique (regression)

which takes into account (solves)

correlated variables..

Regression + feature reduction

Principal Component Analysis (PCA)

Idea:

• Given data points (predictors) in d-dimensional space,

project into lower dimensional space while preserving as

much information as possible

– E.g. Find best planar approximation to 3D data

• Learns lower dimensional representation of inputs

• Highlights structure in the data

• It generates a smaller set of predictors which captures the

majority of the information in the original variables

• New predictors are functions of the original predictors

Example 1: study the motion of a spring

• The important dimension to describe the dynamics of the system is x – but we do not know that!

• Every time sample recorded by the cameras is a point (vector) in a D-dimensional space, D=6

• From linear algebra: every vector in a D-dimensional space can be written as a linear combination of some basis

• Is there another basis (a linear combination of the original basis) which better re-expresses the data?

Principal Component Analysis (PCA)

The hope is that the new basis will filter out the noise and reveal the hidden structure of the data -> in our case it will identify x as the important direction.

You may have noticed the use of the word linear: PCA makes the stringent but powerful assumption of linearity -> this restricts the set of potential bases

PCA – formal definition

• PCA: orthogonal projection of the data onto a lower dimensional space, such that the variance of the projected data is maximum

Variance and the goal

Quantitatively, we assume that the directions with the largest variances in our data space contain the dynamics of interest and so have the highest signal-to-noise ratio (SNR)

Principal Component analysis

Geometrical interpretation: find the rotation of the basis (axes) in a way that the first axis lies

in the direction of greatest variation. In the new system the predictors (PCs) are orthogonal

PCA - Redundancy

When two predictors x1 and x2 are correlated (measure redundant information),

this will complicate the effect of x1 and x2 on the response. It seems that either one

predictor or a linear combination of predictors can be used here

PCA in words

– Find the linear combination of X (in the new basis) which has the maximum variation

– How do we formally find these new directions (basis) $u_i$?

– Project the data on the new directions: $X^T u$

– Find $u_1$ such that $\mathrm{var}(X^T u_1)$ is maximized subject to the condition $u_1^T u_1 = 1$

– Find $u_2$ such that $\mathrm{var}(X^T u_2)$ is maximized subject to the conditions $u_2^T u_2 = 1$ and $u_1^T u_2 = 0$

– Keep finding directions of greatest variation orthogonal to those already found

– Ideally, if N is the dimensionality of the original data, we need only a few D < N directions to sufficiently explain the variability in the data
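The constrained maximization described above is solved by the eigenvectors of the covariance matrix. The following is a minimal sketch, assuming NumPy, synthetic data, and a data matrix with one observation per row (so the projection is written `Xc @ U` rather than $X^T u$):

```python
import numpy as np

rng = np.random.default_rng(4)
N = 500
latent = rng.normal(size=N)
X = np.column_stack([
    latent + 0.1 * rng.normal(size=N),
    2.0 * latent + 0.1 * rng.normal(size=N),
    0.5 * rng.normal(size=N),
])

Xc = X - X.mean(axis=0)                  # center each predictor
C = np.cov(Xc, rowvar=False)             # sample covariance matrix

eigvals, eigvecs = np.linalg.eigh(C)     # eigh returns ascending eigenvalues
order = np.argsort(eigvals)[::-1]        # sort by decreasing variance
eigvals = eigvals[order]
U = eigvecs[:, order]                    # columns are the directions u_1, u_2, ...

scores = Xc @ U                          # data expressed in the new basis
print(eigvals, scores.var(axis=0, ddof=1))
```

The variance of each column of `scores` equals the corresponding eigenvalue, and the columns of `U` are orthonormal, matching the conditions $u_i^T u_i = 1$ and $u_i^T u_j = 0$.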

How many Principal

Components?

• Use the eigenvalues, which represent the variance explained by each component

• Choose the number of eigenvalues that amount to the desired percentage of the

variance

Scree plot
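Choosing the number of components from the eigenvalues can be sketched as follows. The helper name `n_components_for`, the example eigenvalues, and the 90% threshold are illustrative assumptions:

```python
import numpy as np

def n_components_for(eigvals, target=0.9):
    """Smallest number of components whose cumulative explained variance
    reaches the target fraction; also returns the cumulative fractions."""
    eigvals = np.sort(np.asarray(eigvals, dtype=float))[::-1]
    cumulative = np.cumsum(eigvals) / eigvals.sum()
    k = int(np.searchsorted(cumulative, target)) + 1
    return min(k, eigvals.size), cumulative

# Example eigenvalues: the components explain 50%, 30%, 15% and 5%
k, cumulative = n_components_for([5.0, 3.0, 1.5, 0.5], target=0.9)
print(k, cumulative)
```

Plotting the sorted eigenvalues (or `cumulative`) against the component index gives the scree plot.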

PCA example: image compression

Principal Component Analysis (PCA)

PCs are surrogate features / variables and therefore (linear) functions of the original variables which better re-express the data

Then we can express the PCs as linear combinations of the original predictors. The first PC is the best linear combination – the one capturing most of the variance

$$PC_j = a_{j1}\,\mathrm{feature}_1 + a_{j2}\,\mathrm{feature}_2 + \dots + a_{jp}\,\mathrm{feature}_p$$

p = # of predictors

$a_{j1}, a_{j2}, \dots, a_{jp}$: component weights / loadings

Summarizing..

The cool thing is that we have created components (PCs) which are uncorrelated

Some predictive models prefer predictors which are uncorrelated in order to find a good solution. PCA creates new predictors with such characteristics!

To get an intuition of the data: if the PCA captured most of the information in the data, then plotting e.g. PC1 vs PC2 can reveal clusters/structures in the data
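That the principal components are uncorrelated, even when the original predictors are not, can be verified numerically. The sketch below assumes synthetic data with two strongly correlated predictors and computes the components from a singular value decomposition of the centered data (an equivalent route to the eigen-decomposition of the covariance matrix):

```python
import numpy as np

rng = np.random.default_rng(5)
N = 300
base = rng.normal(size=N)
X = np.column_stack([
    base + 0.3 * rng.normal(size=N),
    base + 0.3 * rng.normal(size=N),
    rng.normal(size=N),
])
Xc = X - X.mean(axis=0)

# Row j of Vt holds the loadings a_j1, ..., a_jp of component j
_, _, Vt = np.linalg.svd(Xc, full_matrices=False)
scores = Xc @ Vt.T

corr_X = np.corrcoef(X, rowvar=False)        # original predictors
corr_pc = np.corrcoef(scores, rowvar=False)  # principal components

# PC_1 written out explicitly as a weighted sum of the (centered) features
pc1_manual = sum(Vt[0, j] * Xc[:, j] for j in range(X.shape[1]))
print(np.round(corr_X, 2))
print(np.round(corr_pc, 2))
```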

PCA – practical hints

1. PCA seeks directions of maximum variance, so it is sensitive to the scale of the data: it might give higher weights to variables on 'large' scales. Good practice is to re-scale the data before doing PCA

2. Skewness can also cause problems
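The effect of scale on PCA can be demonstrated with two correlated variables, one of which is expressed in much larger units. This is a sketch on synthetic data; the factor 1000 and the helper `first_pc` are assumptions for illustration:

```python
import numpy as np

def first_pc(X):
    """Unit-length direction of greatest variance of the columns of X."""
    Xc = X - X.mean(axis=0)
    eigvals, eigvecs = np.linalg.eigh(np.cov(Xc, rowvar=False))
    return eigvecs[:, np.argmax(eigvals)]

rng = np.random.default_rng(6)
N = 400
a = rng.normal(size=N)
b = 0.8 * a + 0.6 * rng.normal(size=N)
X = np.column_stack([1000.0 * a, b])     # first variable on a 'large' scale

pc1_raw = first_pc(X)                    # dominated by the large-scale variable

X_scaled = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
pc1_scaled = first_pc(X_scaled)          # both variables weighted equally
print(pc1_raw, pc1_scaled)
```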

Goal: We want a technique (regression)

which takes into account (solves)

correlated variables..

Regression + feature reduction

But PCA is an unsupervised technique, so it is blind to the response

Principal Component Regression (PCR)

Dimension reduction method: it works in two steps

1. Find transformed predictors $Z_1, Z_2, \dots, Z_M$ with M < p (# of original features)

$$Z_m = \sum_{j=1}^{p} a_{jm} X_j$$

2. Fit a least squares model to these new predictors (fitting a regression model to $Z_m$)

$$y_i = \theta_0 + \sum_{m=1}^{M} \theta_m Z_{im}$$

The choice of $Z_1, \dots, Z_M$ and the selection of the $a_{jm}$ can be achieved in different ways

One way is Principal Component Regression (PCR); PLS is a closely related alternative

E.g. the first principal component in the case of two variables:

$$Z_1 = a_{11} x_1 + a_{21} x_2$$

where $a_{11}$ and $a_{21}$ are the loadings (the values of $Z_1$ are the scores)
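The two steps of PCR can be sketched as below. The function name `pcr_fit`, the synthetic data, and the use of a singular value decomposition to obtain the loadings are assumptions made for illustration, not the lecture's implementation. As a sanity check, keeping all M = p components reproduces the ordinary least squares coefficients:

```python
import numpy as np

def pcr_fit(X, y, M):
    """Principal component regression with M components.
    Returns the intercept and the coefficients on the original predictors."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc = X - x_mean
    # Step 1: loadings a_jm from the PCA of the centered predictors
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    A = Vt[:M].T                          # p x M matrix of loadings
    Z = Xc @ A                            # N x M transformed predictors
    # Step 2: least squares regression of the centered response on Z
    theta, *_ = np.linalg.lstsq(Z, y - y_mean, rcond=None)
    beta = A @ theta                      # map back to the original predictors
    intercept = y_mean - x_mean @ beta
    return intercept, beta

rng = np.random.default_rng(8)
N, p = 80, 4
X = rng.normal(size=(N, p)) @ rng.normal(size=(p, p))   # correlated predictors
y = X @ np.array([1.0, -2.0, 0.5, 0.0]) + 0.2 * rng.normal(size=N)

b0_full, b_full = pcr_fit(X, y, M=p)      # all components: same as OLS
b0_two, b_two = pcr_fit(X, y, M=2)        # reduced model

ols, *_ = np.linalg.lstsq(np.column_stack([np.ones(N), X]), y, rcond=None)
print(np.r_[b0_full, b_full], ols)
```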

Drawback of PCR

We assume that the directions in which the $x_i$ show the most variation are the directions associated with the response y..

If this assumption holds, then an appropriate choice of M = # of components will give better results.

But this assumption is not always fulfilled, and when $Z_1, \dots, Z_M$ are produced in an unsupervised way there is no guarantee that these directions (which best explain the input) are also the best to explain the output.

When will PCR perform worse than normal least squares regression?
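One situation in which PCR does worse than ordinary least squares can be constructed by hand: the response depends only on a low-variance direction of the predictors. The sketch below uses a synthetic two-predictor data set built for that purpose (all names and constants are assumptions) and compares the RSS of PCR with one component against OLS on both predictors:

```python
import numpy as np

rng = np.random.default_rng(7)
N = 200
z_big = 3.0 * rng.normal(size=N)      # high-variance direction, unrelated to y
z_small = 0.3 * rng.normal(size=N)    # low-variance direction that drives y
X = np.column_stack([z_big + z_small, z_big - z_small])
y = 5.0 * z_small + 0.1 * rng.normal(size=N)

Xc = X - X.mean(axis=0)
yc = y - y.mean()

# PCR with M = 1: regress y on the first principal component only
_, _, Vt = np.linalg.svd(Xc, full_matrices=False)
Z1 = Xc @ Vt[0]
theta1 = (Z1 @ yc) / (Z1 @ Z1)
rss_pcr1 = float(np.sum((yc - theta1 * Z1) ** 2))

# Ordinary least squares on both original predictors
beta, *_ = np.linalg.lstsq(Xc, yc, rcond=None)
rss_ols = float(np.sum((yc - Xc @ beta) ** 2))
print(rss_pcr1, rss_ols)
```

Here the first principal component follows `z_big`, which carries almost no information about the response, so discarding the second component throws away the signal.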

Partial Least Squares Regression (PLSR)

• Supervised alternative to PCR. It makes use of the response Y to identify the new features

• Attempts to find directions that help explain both the response and the predictors

PLS Algorithm

1. Compute the first partial least squares direction $Z_1$ by setting $a_{j1}$ in the formula $Z_m = \sum_{j=1}^{p} a_{jm} X_j$ to the coefficients from the simple linear regression of Y onto $X_j$:

$$Z_1 = \sum_{j=1}^{p} a_{j1} X_j$$

2. Different interpretation of the loading $a_{jm}$: here, how important the predictor is for the response!

3. Then Y is regressed on $Z_1$, giving $\theta_1$

4. To find $Z_2$ we 'adjust' all variables for $Z_1$, meaning we project or regress them onto $Z_1$:

$$\hat{X}_j = \hat{\gamma}_j Z_1$$

where $\hat{\gamma}_j$ is the coefficient from regressing $X_j$ on $Z_1$

5. Compute the residuals (the remaining information which has not been explained by the first PLS direction):

$$X_j - \hat{\gamma}_j Z_1$$

6. Compute $Z_2$ ($Z_m$) in the same way, using the adjusted data (the residuals)

7. The iterative approach can be repeated M times to identify multiple PLS components
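The steps above can be sketched as a short loop. This is an illustrative implementation under assumptions: the predictors are standardized first, the response is centered, and `pls_fit` together with the synthetic data are hypothetical names and values chosen for the example. With M = p components the fitted values coincide with those of ordinary least squares, which serves as a check:

```python
import numpy as np

def pls_fit(X, y, M):
    """Fitted values and PLS directions Z_1..Z_M for M components."""
    Xm = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)   # standardized predictors
    yc = y - y.mean()
    y_hat = np.full(y.shape, y.mean())
    directions = []
    for _ in range(M):
        # Steps 1-2: a_jm = coefficient of the simple regression of y on x_j
        a = (Xm.T @ yc) / np.sum(Xm**2, axis=0)
        z = Xm @ a
        # Step 3: regress y on z, giving theta_m
        theta = (z @ yc) / (z @ z)
        y_hat = y_hat + theta * z
        # Steps 4-5: regress each x_j on z and keep the residuals
        gamma = (Xm.T @ z) / (z @ z)
        Xm = Xm - np.outer(z, gamma)
        directions.append(z)
    return y_hat, np.column_stack(directions)

rng = np.random.default_rng(10)
N, p = 100, 3
X = rng.normal(size=(N, p)) @ rng.normal(size=(p, p))   # correlated predictors
y = X @ np.array([1.0, -2.0, 0.5]) + 0.3 * rng.normal(size=N)

y_hat_one, Z_one = pls_fit(X, y, M=1)
y_hat_all, Z_all = pls_fit(X, y, M=p)

ols, *_ = np.linalg.lstsq(np.column_stack([np.ones(N), X]), y, rcond=None)
y_hat_ols = np.column_stack([np.ones(N), X]) @ ols
print(np.sum((y - y_hat_one) ** 2), np.sum((y - y_hat_all) ** 2))
```

Because each new direction is built from residuals, the directions are mutually orthogonal, so regressing Y on them one at a time is equivalent to a joint regression.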

Example from the QSAR modeling

problem - PCR

Scatter plot of two predictors

Direction of the first PC

The first PC direction contains no

predictive information of the response

Example from the QSAR modeling

problem - PLS

PLS direction on two predictors

PLS direction contains highly

predictive information of the response

Example from the QSAR modeling

problem – PCR & PLS

Comparison of PLS and PCR

Summary

• Dimension reduction (PCA)

• Regression problem

– Linear regression (least squares)

– PCR and PLS are methods for feature reduction and de-correlation of the features. They reduce over-fitting and can improve accuracy, but can be hard to interpret