Encyclopedia of Parallel Computing

David Padua (Ed.)

With Figures and Tables

Editor-in-Chief

David Padua

University of Illinois at Urbana-Champaign

Urbana, IL

USA

ISBN ----

e-ISBN ----

DOI ./----

Print and electronic bundle ISBN: ----

Springer New York Dordrecht Heidelberg London

Library of Congress Control Number:

© Springer Science+Business Media, LLC

All rights reserved. This work may not be translated or copied in whole or in part without the written permission of the publisher (Springer Science+Business Media, LLC, Spring Street, New York, NY , USA), except for brief excerpts in connection with reviews or scholarly analysis. Use in connection with any form of information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now known or hereafter developed is forbidden.

The use in this publication of trade names, trademarks, service marks, and similar terms, even if they are not identified as such, is not to be taken as an expression of opinion as to whether or not they are subject to proprietary rights.

Printed on acid-free paper

Springer is part of Springer Science+Business Media (www.springer.com)

Preface

Parallelism, the capability of a computer to execute operations concurrently, has been a constant throughout the history of computing. It impacts hardware, software, theory, and applications. The fastest machines of the past few decades, the supercomputers, owe their performance advantage to parallelism. Today, physical limitations have forced the adoption of parallelism as the preeminent strategy of computer manufacturers for continued performance gains of all classes of machines, from embedded and mobile systems to the most powerful servers. Parallelism has been used to simplify the programming of certain applications which react to or simulate the parallelism of the natural world. At the same time, parallelism complicates programming when the objective is to take advantage of the existence of multiple hardware components to improve performance. Formal methods are necessary to study the correctness of parallel algorithms and implementations and to analyze their performance on different classes of real and theoretical systems. Finally, parallelism is crucial for many applications in the sciences, engineering, and interactive services such as search engines.

Because of its importance and the challenging problems it has engendered, there have been numerous research and development projects during the past half century. This Encyclopedia is our attempt to collect accurate and clear descriptions of the most important of those projects. Although not exhaustive, with over entries the Encyclopedia covers most of the topics that we identified at the outset as important for a work of this nature. Entries include many of the best known projects and span all the important dimensions of parallel computing including machine design, software, programming languages, algorithms, theoretical issues, and applications.

This Encyclopedia is the result of the work of many, whose dedication made it possible. The Editorial Board Members created the list of entries, did most of the reviewing, and suggested authors for the entries. Colin Robertson, the Managing Editor, Jennifer Carlson, Springer’s Reference Development editor, and Editorial Assistants Julia Koerting and Simone Tavenrath, worked for long years coordinating the recruiting of authors and the review and submission process. Melissa Fearon, Springer’s Senior Editor, helped immensely with the coordination of authors and Editorial Board Members, especially during the difficult last few months. The nearly authors wrote crisp, informative entries. They include experts in all major areas, come from many different nations, and span several generations. In many cases, the author is the lead designer or researcher responsible for the contribution reported in the entry. It was a great pleasure for me to be part of this project. The enthusiasm of everybody involved made this a joyful enterprise. I believe we have put together a meaningful snapshot of parallel computing in the last years and presented a believable glimpse of the future. I hope the reader agrees with me on this and finds the entries, as I did, valuable contributions to the literature.

David Padua

Editor-in-Chief

University of Illinois at Urbana-Champaign

Urbana, IL

USA

Editors

David Padua

Editor-in-Chief

University of Illinois at Urbana-Champaign

Urbana, IL

USA

Sarita Adve

Editorial Board Member

University of Illinois at Urbana-Champaign

Urbana, IL

USA

Colin Robertson

Managing Editor

University of Illinois at Urbana-Champaign

Urbana, IL

USA

Gheorghe S. Almasi

Editorial Board Member

IBM T. J. Watson Research Center

Yorktown Heights, NY

USA

Srinivas Aluru

Editorial Board Member

Iowa State University

Ames, IA

USA

Gianfranco Bilardi

Editorial Board Member

University of Padova

Padova

Italy

David Bader

Editorial Board Member

Georgia Tech

Atlanta, GA

USA

Siddhartha Chatterjee

Editorial Board Member

IBM Systems & Technology Group

Austin, TX

USA

Luiz DeRose

Editorial Board Member

Cray Inc.

St. Paul, MN

USA

José Duato

Editorial Board Member

Universitat Politècnica de València

València

Spain

Jack Dongarra

Editorial Board Member

University of Tennessee

Knoxville, TN

USA

Oak Ridge National Laboratory

Oak Ridge, TN

USA

University of Manchester

Manchester

UK

Paul Feautrier

Editorial Board Member

Ecole Normale Supérieure de Lyon

Lyon

France

María J. Garzarán

Editorial Board Member

University of Illinois at Urbana-Champaign

Urbana, IL

USA

William Gropp

Editorial Board Member

University of Illinois at Urbana-Champaign

Urbana, IL

USA

Michael Gerndt

Editorial Board Member

Technische Universitaet Muenchen

Garching

Germany

Thomas Gross

Editorial Board Member

ETH Zurich

Zurich

Switzerland

James C. Hoe

Editorial Board Member

Carnegie Mellon University

Pittsburgh, PA

USA

Hironori Kasahara

Editorial Board Member

Waseda University

Tokyo

Japan

Laxmikant Kale

Editorial Board Member

University of Illinois at Urbana-Champaign

Urbana, IL

USA

Christian Lengauer

Editorial Board Member

University of Passau

Passau

Germany

José E. Moreira

Editorial Board Member

IBM Thomas J. Watson Research Center

Yorktown Heights, NY

USA

Keshav Pingali

Editorial Board Member

The University of Texas at Austin

Austin, TX

USA

Yale N. Patt

Editorial Board Member

The University of Texas at Austin

Austin, TX

USA

Markus Püschel

Editorial Board Member

ETH Zurich

Zurich

Switzerland

Ahmed H. Sameh

Editorial Board Member

Purdue University

West Lafayette, IN

USA

Vivek Sarkar

Editorial Board Member

Rice University

Houston, TX

USA

Pen-Chung Yew

Editorial Board Member

University of Minnesota at Twin Cities

Minneapolis, MN

USA

List of Contributors

DENNIS ABTS

Google Inc.

Madison, WI

USA

SRINIVAS ALURU

Iowa State University

Ames, IA

USA

and

Indian Institute of Technology Bombay

Mumbai

India

SARITA V. ADVE

University of Illinois at Urbana-Champaign

Urbana, IL

USA

GUL AGHA

University of Illinois at Urbana-Champaign

Urbana, IL

USA

JASMIN AJANOVIC

Intel Corporation

Portland, OR

USA

SELIM G. AKL

Queen’s University

Kingston, ON

Canada

PATRICK AMESTOY

Université de Toulouse ENSEEIHT-IRIT

Toulouse cedex

France

BABA ARIMILLI

IBM Systems and Technology Group

Austin, TX

USA

ROGER S. ARMEN

Thomas Jefferson University

Philadelphia, PA

USA

HASAN AKTULGA

Purdue University

West Lafayette, IN

USA

DOUGLAS ARMSTRONG

Intel Corporation

Champaign, IL

USA

JOSÉ I. ALIAGA

Universitat Jaume I

Castellón

Spain

DAVID I. AUGUST

Princeton University

Princeton, NJ

USA

ERIC ALLEN

Oracle Labs

Austin, TX

USA

CEVDET AYKANAT

Bilkent University

Ankara

Turkey

GEORGE ALMASI

IBM

Yorktown Heights, NY

USA

DAVID A. BADER

Georgia Institute of Technology

Atlanta, GA

USA

MICHAEL BADER

Universität Stuttgart

Stuttgart

Germany

SCOTT BIERSDORFF

University of Oregon

Eugene, OR

USA

DAVID H. BAILEY

Lawrence Berkeley National Laboratory

Berkeley, CA

USA

GIANFRANCO BILARDI

University of Padova

Padova

Italy

RAJEEV BALASUBRAMONIAN

University of Utah

Salt Lake City, UT

USA

ROBERT BJORNSON

Yale University

New Haven, CT

USA

UTPAL BANERJEE

University of California at Irvine

Irvine, CA

USA

GUY BLELLOCH

Carnegie Mellon University

Pittsburgh, PA

USA

ALESSANDRO BARDINE

Università di Pisa

Pisa

Italy

ROBERT BOCCHINO

Carnegie Mellon University

Pittsburgh, PA

USA

MUTHU MANIKANDAN BASKARAN

Reservoir Labs, Inc.

New York, NY

USA

HANS J. BOEHM

HP Labs

Palo Alto, CA

USA

CÉDRIC BASTOUL

University Paris-Sud - INRIA Saclay Île-de-France

Orsay

France

ERIC J. BOHM

University of Illinois at Urbana-Champaign

Urbana, IL

USA

AARON BECKER

University of Illinois at Urbana-Champaign

Urbana, IL

USA

MATTHIAS BOLLHÖFER

TU Braunschweig Institute of Computational Mathematics

Braunschweig

Germany

MICHAEL W. BERRY

The University of Tennessee

Knoxville, TN

USA

DAN BONACHEA

Lawrence Berkeley National Laboratory

Berkeley, CA

USA

ABHINAV BHATELE

University of Illinois at Urbana-Champaign

Urbana, IL

USA

PRADIP BOSE

IBM Corp. T.J. Watson Research Center

Yorktown Heights, NY

USA

MARIAN BREZINA

University of Colorado at Boulder

Boulder, CO

USA

ÜMIT V. ÇATALYÜREK

The Ohio State University

Columbus, OH

USA

JEFF BROOKS

Cray Inc.

St. Paul, MN

USA

LUIS H. CEZE

University of Washington

Seattle, WA

USA

HOLGER BRUNST

Technische Universität Dresden

Dresden

Germany

BRADFORD L. CHAMBERLAIN

Cray Inc.

Seattle, WA

USA

HANS-JOACHIM BUNGARTZ

Technische Universität München

Garching

Germany

ERNIE CHAN

NVIDIA Corporation

Santa Clara, CA

USA

MICHAEL G. BURKE

Rice University

Houston, TX

USA

RONG-GUEY CHANG

National Chung Cheng University

Chia-Yi

Taiwan

ALFREDO BUTTARI

Université de Toulouse ENSEEIHT-IRIT

Toulouse cedex

France

BARBARA CHAPMAN

University of Houston

Houston, TX

USA

ERIC J. BYLASKA

Pacific Northwest National Laboratory

Richland, WA

USA

DAVID CHASE

Oracle Labs

Burlington, MA

USA

ROY H. CAMPBELL

University of Illinois at Urbana-Champaign

Urbana, IL

USA

DANIEL CHAVARRÍA-MIRANDA

Pacific Northwest National Laboratory

Richland, WA

USA

WILLIAM CARLSON

Institute for Defense Analyses

Bowie, MD

USA

NORMAN H. CHRIST

Columbia University

New York, NY

USA

MANUEL CARRO

Universidad Politécnica de Madrid

Madrid

Spain

MURRAY COLE

University of Edinburgh

Edinburgh

UK

PHILLIP COLELLA

University of California

Berkeley, CA

USA

KAUSHIK DATTA

University of California

Berkeley, CA

USA

SALVADOR COLL

Universidad Politécnica de Valencia

Valencia

Spain

JIM DAVIES

Oxford University

UK

GUOJING CONG

IBM

Yorktown Heights, NY

USA

JAMES H. COWNIE

Intel Corporation (UK) Ltd.

Swindon

UK

ANTHONY P. CRAIG

National Center for Atmospheric Research

Boulder, CO

USA

ANTHONY CURTIS

University of Houston

Houston, TX

USA

JAMES DEMMEL

University of California at Berkeley

Berkeley, CA

USA

MONTY DENNEAU

IBM Corp., T.J. Watson Research Center

Yorktown Heights, NY

USA

JACK B. DENNIS

Massachusetts Institute of Technology

Cambridge, MA

USA

MARK DEWING

Intel Corporation

Champaign, IL

USA

H. J. J. VAN DAM

Pacific Northwest National Laboratory

Richland, WA

USA

VOLKER DIEKERT

Universität Stuttgart FMI

Stuttgart

Germany

FREDERICA DAREMA

National Science Foundation

Arlington, VA

USA

JACK DONGARRA

University of Tennessee

Knoxville, TN

USA

ALAIN DARTE

École Normale Supérieure de Lyon

Lyon

France

DAVID DONOFRIO

Lawrence Berkeley National Laboratory

Berkeley, CA

USA

RAJA DAS

IBM Corporation

Armonk, NY

USA

RON O. DROR

D. E. Shaw Research

New York, NY

USA

IAIN DUFF

Science & Technology Facilities Council

Didcot, Oxfordshire

UK

MICHAEL DUNGWORTH

SANDHYA DWARKADAS

University of Rochester

Rochester, NY

USA

RUDOLF EIGENMANN

Purdue University

West Lafayette, IN

USA

WU-CHUN FENG

Virginia Tech

Blacksburg, VA

USA

and

Wake Forest University

Winston-Salem, NC

USA

JOHN FEO

Pacific Northwest National Laboratory

Richland, WA

USA

JEREMY T. FINEMAN

Carnegie Mellon University

Pittsburgh, PA

USA

E. N. (MOOTAZ) ELNOZAHY

IBM Research

Austin, TX

USA

JOSEPH A. FISHER

Miami Beach, FL

USA

JOEL EMER

Intel Corporation

Hudson, MA

USA

CORMAC FLANAGAN

University of California at Santa Cruz

Santa Cruz, CA

USA

BABAK FALSAFI

Ecole Polytechnique Fédérale de Lausanne

Lausanne

Switzerland

JOSÉ FLICH

Technical University of Valencia

Valencia

Spain

PAOLO FARABOSCHI

Hewlett Packard

Sant Cugat del Valles

Spain

CHRISTINE FLOOD

Oracle Labs

Burlington, MA

USA

PAUL FEAUTRIER

Ecole Normale Supérieure de Lyon

Lyon

France

MICHAEL FLYNN

Stanford University

Stanford, CA

USA

KARL FEIND

SGI

Eagan, MN

USA

JOSEPH FOGARTY

University of South Florida

Tampa, FL

USA

PIERFRANCESCO FOGLIA

Università di Pisa

Pisa

Italy

PEDRO J. GARCIA

Universidad de Castilla-La Mancha

Albacete

Spain

TRYGGVE FOSSUM

Intel Corporation

Hudson, MA

USA

MICHAEL GARLAND

NVIDIA Corporation

Santa Clara, CA

USA

GEOFFREY FOX

Indiana University

Bloomington, IN

USA

KLAUS GÄRTNER

Weierstrass Institute for Applied Analysis and Stochastics

Berlin

Germany

MARTIN FRÄNZLE

Carl von Ossietzky Universität

Oldenburg

Germany

ED GEHRINGER

North Carolina State University

Raleigh, NC

USA

FRANZ FRANCHETTI

Carnegie Mellon University

Pittsburgh, PA

USA

ROBERT A. VAN DE GEIJN

The University of Texas at Austin

Austin, TX

USA

STEFAN M. FREUDENBERGER

Freudenberger Consulting

Zürich

Switzerland

AL GEIST

Oak Ridge National Laboratory

Oak Ridge, TN

USA

HOLGER FRÖNING

University of Heidelberg

Heidelberg

Germany

THOMAS GEORGE

IBM Research

Delhi

India

KARL FÜRLINGER

Ludwig-Maximilians-Universität München

Munich

Germany

MICHAEL GERNDT

Technische Universität München

München

Germany

EFSTRATIOS GALLOPOULOS

University of Patras

Patras

Greece

AMOL GHOTING

IBM Thomas J. Watson Research Center

Yorktown Heights, NY

USA

ALAN GARA

IBM T.J. Watson Research Center

Yorktown Heights, NY

USA

JOHN GILBERT

University of California

Santa Barbara, CA

USA

ROBERT J. VAN GLABBEEK

NICTA

Sydney

Australia

and

The University of New South Wales

Sydney

Australia

and

Stanford University

Stanford, CA

USA

SERGEI GORLATCH

Westfälische Wilhelms-Universität Münster

Münster

Germany

KAZUSHIGE GOTO

The University of Texas at Austin

Austin, TX

USA

ALLAN GOTTLIEB

New York University

New York, NY

USA

STEVEN GOTTLIEB

Indiana University

Bloomington, IN

USA

N. GOVIND

Pacific Northwest National Laboratory

Richland, WA

USA

SUSAN L. GRAHAM

University of California

Berkeley, CA

USA

ANANTH Y. GRAMA

Purdue University

West Lafayette, IN

USA

DON GRICE

IBM Corporation

Poughkeepsie, NY

USA

LAURA GRIGORI

Laboratoire de Recherche en Informatique, Université Paris-Sud

Paris

France

WILLIAM GROPP

University of Illinois at Urbana-Champaign

Urbana, IL

USA

ABDOU GUERMOUCHE

Université de Bordeaux

Talence

France

JOHN A. GUNNELS

IBM Corp

Yorktown Heights, NY

USA

ANSHUL GUPTA

IBM T.J. Watson Research Center

Yorktown Heights, NY

USA

JOHN L. GUSTAFSON

Intel Corporation

Santa Clara, CA

USA

ROBERT H. HALSTEAD

Curl Inc.

Cambridge, MA

USA

KEVIN HAMMOND

University of St. Andrews

St. Andrews

UK

JAMES HARRELL

ROBERT HARRISON

Oak Ridge National Laboratory

Oak Ridge, TN

USA

JOHN C. HART

University of Illinois at Urbana-Champaign

Urbana, IL

USA

MICHAEL HEATH

University of Illinois at Urbana-Champaign

Urbana, IL

USA

HERMANN HELLWAGNER

Klagenfurt University

Klagenfurt

Austria

DANNY HENDLER

Ben-Gurion University of the Negev

Beer-Sheva

Israel

BRUCE HENDRICKSON

Sandia National Laboratories

Albuquerque, NM

USA

ROBERT HENSCHEL

Indiana University

Bloomington, IN

USA

MANUEL HERMENEGILDO

Universidad Politécnica de Madrid

Madrid

Spain

IMDEA Software Institute

Madrid

Spain

OSCAR HERNANDEZ

Oak Ridge National Laboratory

Oak Ridge, TN

USA

PAUL HILFINGER

University of California

Berkeley, CA

USA

KEI HIRAKI

The University of Tokyo

Tokyo

Japan

H. PETER HOFSTEE

IBM Austin Research Laboratory

Austin, TX

USA

CHRIS HSIUNG

Hewlett Packard

Palo Alto, CA

USA

JONATHAN HU

Sandia National Laboratories

Livermore, CA

USA

KIERAN T. HERLEY

University College Cork

Cork

Ireland

THOMAS HUCKLE

Technische Universität München

Garching

Germany

MAURICE HERLIHY

Brown University

Providence, RI

USA

WEN-MEI HWU

University of Illinois at Urbana-Champaign

Urbana, IL

USA

FRANÇOIS IRIGOIN

MINES ParisTech/CRI

Fontainebleau

France

KRISHNA KANDALLA

The Ohio State University

Columbus, OH

USA

KEN’ICHI ITAKURA

Japan Agency for Marine-Earth Science and Technology (JAMSTEC)

Yokohama

Japan

LARRY KAPLAN

Cray Inc.

Seattle, WA

USA

JOSEPH F. JAJA

University of Maryland

College Park, MD

USA

JOEFON JANN

T. J. Watson Research Center, IBM Corp.

Yorktown Heights, NY

USA

KARL JANSEN

NIC, DESY Zeuthen

Zeuthen

Germany

PRITISH JETLEY

University of Illinois at Urbana-Champaign

Urbana, IL

USA

WIBE A. DE JONG

Pacific Northwest National Laboratory

Richland, WA

USA

TEJAS S. KARKHANIS

IBM T.J. Watson Research Center

Yorktown Heights, NY

USA

RAJESH K. KARMANI

University of Illinois at Urbana-Champaign

Urbana, IL

USA

GEORGE KARYPIS

University of Minnesota

Minneapolis, MN

USA

ARUN KEJARIWAL

Yahoo! Inc.

Sunnyvale, CA

USA

MALEQ KHAN

Virginia Tech

Blacksburg, VA

USA

LAXMIKANT V. KALÉ

University of Illinois at Urbana-Champaign

Urbana, IL

USA

THILO KIELMANN

Vrije Universiteit

Amsterdam

The Netherlands

ANANTH KALYANARAMAN

Washington State University

Pullman, WA

USA

GERRY KIRSCHNER

Cray Incorporated

St. Paul, MN

USA

AMIR KAMIL

University of California

Berkeley, CA

USA

CHRISTOF KLAUSECKER

Ludwig-Maximilians-Universität München

Munich

Germany

KATHLEEN KNOBE

Intel Corporation

Cambridge, MA

USA

V. S. ANIL KUMAR

Virginia Tech

Blacksburg, VA

USA

ANDREAS KNÜPFER

Technische Universität Dresden

Dresden

Germany

KALYAN KUMARAN

Argonne National Laboratory

Argonne, IL

USA

GIORGOS KOLLIAS

Purdue University

West Lafayette, IN

USA

JAMES LA GRONE

University of Houston

Houston, TX

USA

K. KOWALSKI

Pacific Northwest National Laboratory

Richland, WA

USA

ROBERT LATHAM

Argonne National Laboratory

Argonne, IL

USA

QUINCEY KOZIOL

The HDF Group

Champaign, IL

USA

BRUCE LEASURE

Saint Paul, MN

USA

DIETER KRANZLMÜLLER

Ludwig-Maximilians-Universität München

Munich

Germany

MANOJKUMAR KRISHNAN

Pacific Northwest National Laboratory

Richland, WA

USA

JENQ-KUEN LEE

National Tsing-Hua University

Hsin-Chu

Taiwan

CHARLES E. LEISERSON

Massachusetts Institute of Technology

Cambridge, MA

USA

CHI-BANG KUAN

National Tsing-Hua University

Hsin-Chu

Taiwan

CHRISTIAN LENGAUER

University of Passau

Passau

Germany

DAVID J. KUCK

Intel Corporation

Champaign, IL

USA

RICHARD LETHIN

Reservoir Labs, Inc.

New York, NY

USA

JEFFERY A. KUEHN

Oak Ridge National Laboratory

Oak Ridge, TN

USA

ALLEN LEUNG

Reservoir Labs, Inc.

New York, NY

USA

JOHN M. LEVESQUE

Cray Inc.

Knoxville, TN

USA

PEDRO LÓPEZ

Universidad Politécnica de Valencia

Valencia

Spain

MICHAEL LEVINE

GEOFF LOWNEY

Intel Corporation

Hudson, MA

USA

JEAN-YVES L’EXCELLENT

ENS Lyon

Lyon

France

JIAN LI

IBM Research

Austin, TX

USA

XIAOYE SHERRY LI

Lawrence Berkeley National Laboratory

Berkeley, CA

USA

ZHIYUAN LI

Purdue University

West Lafayette, IN

USA

VICTOR LUCHANGCO

Oracle Labs

Burlington, MA

USA

PIOTR LUSZCZEK

University of Tennessee

Knoxville, TN

USA

OLAV LYSNE

The University of Oslo

Oslo

Norway

CALVIN LIN

University of Texas at Austin

Austin, TX

USA

XIAOSONG MA

North Carolina State University

Raleigh, NC

USA

and

Oak Ridge National Laboratory

Raleigh, NC

USA

HESHAN LIN

Virginia Tech

Blacksburg, VA

USA

ARTHUR B. MACCABE

Oak Ridge National Laboratory

Oak Ridge, TN

USA

HANS-WOLFGANG LOIDL

Heriot-Watt University

Edinburgh

UK

KAMESH MADDURI

Lawrence Berkeley National Laboratory

Berkeley, CA

USA

RITA LOOGEN

Philipps-Universität Marburg

Marburg

Germany

JAN-WILLEM MAESSEN

Google

Cambridge, MA

USA

KONSTANTIN MAKARYCHEV

IBM T.J. Watson Research Center

Yorktown Heights, NY

USA

PHILLIP MERKEY

Michigan Technological University

Houghton, MI

USA

JUNICHIRO MAKINO

National Astronomical Observatory of Japan

Tokyo

Japan

JOSÉ MESEGUER

University of Illinois at Urbana-Champaign

Urbana, IL

USA

ALLEN D. MALONY

University of Oregon

Eugene, OR

USA

MICHAEL METCALF

Berlin

Germany

MADHAV V. MARATHE

Virginia Tech

Blacksburg, VA

USA

ALBERTO F. MARTÍN

Universitat Jaume I

Castellón

Spain

GLENN MARTYNA

IBM Thomas J. Watson Research Center

Yorktown Heights, NY

USA

ERIC R. MAY

University of Michigan

Ann Arbor, MI

USA

SALLY A. MCKEE

Chalmers University of Technology

Goteborg

Sweden

SAMUEL MIDKIFF

Purdue University

West Lafayette, IN

USA

KENICHI MIURA

National Institute of Informatics

Tokyo

Japan

BERND MOHR

Forschungszentrum Jülich GmbH

Jülich

Germany

JOSÉ E. MOREIRA

IBM T.J. Watson Research Center

Yorktown Heights, NY

USA

ALAN MORRIS

University of Oregon

Eugene, OR

USA

MIRIAM MEHL

Technische Universität München

Garching

Germany

J. ELIOT B. MOSS

University of Massachusetts

Amherst, MA

USA

BENOIT MEISTER

Reservoir Labs, Inc.

New York, NY

USA

MATTHIAS MÜLLER

Technische Universität Dresden

Dresden

Germany

PETER MÜLLER

ETH Zurich

Zurich

Switzerland

ALLEN NIKORA

California Institute of Technology

Pasadena, CA

USA

YOICHI MURAOKA

Waseda University

Tokyo

Japan

ROBERT W. NUMRICH

City University of New York

New York, NY

USA

ANCA MUSCHOLL

Université Bordeaux

Talence

France

RAVI NAIR

IBM Thomas J. Watson Research Center

Yorktown Heights, NY

USA

STEPHEN NELSON

MARIO NEMIROVSKY

Barcelona Supercomputer Center

Barcelona

Spain

RYAN NEWTON

Intel Corporation

Hudson, MA

USA

ROCCO DE NICOLA

Università di Firenze

Firenze

Italy

ALEXANDRU NICOLAU

University of California Irvine

Irvine, CA

USA

JAREK NIEPLOCHA†

Pacific Northwest National Laboratory

Richland, WA

USA

† deceased

STEVEN OBERLIN

LEONID OLIKER

Lawrence Berkeley National Laboratory

Berkeley, CA

USA

DAVID PADUA

University of Illinois at Urbana-Champaign

Urbana, IL

USA

SCOTT PAKIN

Los Alamos National Laboratory

Los Alamos, NM

USA

BRUCE PALMER

Pacific Northwest National Laboratory

Richland, WA

USA

DHABALESWAR K. PANDA

The Ohio State University

Columbus, OH

USA

SAGAR PANDIT

University of South Florida

Tampa, FL

USA

YALE N. PATT

The University of Texas at Austin

Austin, TX

USA

OLIVIER PÈNE

Université de Paris-Sud-XI

Orsay Cedex

France

WILFRED POST

Oak Ridge National Laboratory

Oak Ridge, TN

USA

PAUL PETERSEN

Intel Corporation

Champaign, IL

USA

CHRISTOPH VON PRAUN

Georg-Simon-Ohm University of Applied Sciences

Nuremberg

Germany

BERNARD PHILIPPE

Campus de Beaulieu

Rennes

France

FRANCO P. PREPARATA

Brown University

Providence, RI

USA

MICHAEL PHILIPPSEN

University of Erlangen-Nuremberg

Erlangen

Germany

COSIMO ANTONIO PRETE

Università di Pisa

Pisa

Italy

JAMES C. PHILLIPS

University of Illinois at Urbana-Champaign

Urbana, IL

USA

ANDREA PIETRACAPRINA

Università di Padova

Padova

Italy

KESHAV PINGALI

The University of Texas at Austin

Austin, TX

USA

TIMOTHY PRINCE

Intel Corporation

Santa Clara, CA

USA

JEAN-PIERRE PROST

Morteau

France

GEPPINO PUCCI

Università di Padova

Padova

Italy

TIMOTHY M. PINKSTON

University of Southern California

Los Angeles, CA

USA

MARKUS PÜSCHEL

ETH Zurich

Zurich

Switzerland

ERIC POLIZZI

University of Massachusetts

Amherst, MA

USA

ENRIQUE S. QUINTANA-ORTÍ

Universitat Jaume I

Castellón

Spain

STEPHEN W. POOLE

Oak Ridge National Laboratory

Oak Ridge, TN

USA

PATRICE QUINTON

ENS Cachan Bretagne

Bruz

France

RAM RAJAMONY

IBM Research

Austin, TX

USA

ARUN RAMAN

Princeton University

Princeton, NJ

USA

LAWRENCE RAUCHWERGER

Texas A&M University

College Station, TX

USA

JAMES R. REINDERS

Intel Corporation

Hillsboro, OR

USA

STEVEN P. REINHARDT

JOHN REPPY

University of Chicago

Chicago, IL

USA

MARÍA ENGRACIA GÓMEZ REQUENA

Universidad Politécnica de Valencia

Valencia

Spain

DANIEL RICCIUTO

Oak Ridge National Laboratory

Oak Ridge, TN

USA

YVES ROBERT

Ecole Normale Supérieure de Lyon

France

ARCH D. ROBISON

Intel Corporation

Champaign, IL

USA

A. W. ROSCOE

Oxford University

Oxford

UK

ROBERT B. ROSS

Argonne National Laboratory

Argonne, IL

USA

CHRIS ROWEN

CEO, Tensilica

Santa Clara, CA, USA

DUNCAN ROWETH

Cray (UK) Ltd.

UK

SUKYOUNG RYU

Korea Advanced Institute of Science and Technology

Daejeon

Korea

VALENTINA SALAPURA

IBM Research

Yorktown Heights, NY

USA

JOEL H. SALTZ

Emory University

Atlanta, GA

USA

ROLF RIESEN

IBM Research

Dublin

Ireland

AHMED SAMEH

Purdue University

West Lafayette, IN

USA

TANGUY RISSET

INSA Lyon

Villeurbanne

France

MIGUEL SANCHEZ

Universidad Politécnica de Valencia

Valencia

Spain

BENJAMIN SANDER

Advanced Micro Devices Inc.

Austin, TX

USA

MICHAEL L. SCOTT

University of Rochester

Rochester, NY

USA

PETER SANDERS

Universitaet Karlsruhe

Karlsruhe

Germany

MATOUS SEDLACEK

Technische Universität München

Garching

Germany

DAVIDE SANGIORGI

Università di Bologna

Bologna

Italy

JOEL SEIFERAS

University of Rochester

Rochester, NY

USA

VIVEK SARIN

Texas A&M University

College Station, TX

USA

FRANK OLAF SEM-JACOBSEN

The University of Oslo

Oslo

Norway

VIVEK SARKAR

Rice University

Houston, TX

USA

OLAF SCHENK

University of Basel

Basel

Switzerland

MICHAEL SCHLANSKER

Hewlett-Packard Inc.

Palo Alto, CA

USA

STEFAN SCHMID

Telekom Laboratories/TU Berlin

Berlin

Germany

ANDRÉ SEZNEC

IRISA/INRIA, Rennes

Rennes

France

JOHN SHALF

Lawrence Berkeley National Laboratory

Berkeley, CA

USA

MEIYUE SHAO

Umeå University

Umeå

Sweden

MARTIN SCHULZ

Lawrence Livermore National Laboratory

Livermore, CA

USA

DAVID E. SHAW

D. E. Shaw Research

New York, NY

USA

and

Columbia University

New York, NY

USA

JAMES L. SCHWARZMEIER

Cray Inc.

Chippewa Falls, WI

USA

XIAOWEI SHEN

IBM Research

Armonk, NY

USA

SAMEER SHENDE

University of Oregon

Eugene, OR

USA

EDGAR SOLOMONIK

University of California at Berkeley

Berkeley, CA

USA

GALEN M. SHIPMAN

Oak Ridge National Laboratory

Oak Ridge, TN

USA

MATTHEW SOTTILE

Galois, Inc.

Portland, OR

USA

HOWARD JAY SIEGEL

Colorado State University

Fort Collins, CO

USA

M’HAMED SOULI

Université des Sciences et Technologies de Lille

Villeneuve d’Ascq cédex

France

DANIEL P. SIEWIOREK

Carnegie Mellon University

Pittsburgh, PA

USA

WYATT SPEAR

University of Oregon

Eugene, OR

USA

FEDERICO SILLA

Universidad Politécnica de Valencia

Valencia

Spain

EVAN W. SPEIGHT

IBM Research

Austin, TX

USA

BARRY SMITH

Argonne National Laboratory

Argonne, IL

USA

MARK S. SQUILLANTE

IBM

Yorktown Heights, NY

USA

BURTON SMITH

Microsoft Corporation

Redmond, WA

USA

ALEXANDROS STAMATAKIS

Heidelberg Institute for Theoretical Studies

Heidelberg

Germany

MARC SNIR

University of Illinois at Urbana-Champaign

Urbana, IL

USA

GUY L. STEELE, JR.

Oracle Labs

Burlington, MA

USA

LAWRENCE SNYDER

University of Washington

Seattle, WA

USA

THOMAS L. STERLING

Louisiana State University

Baton Rouge, LA

USA

MARCO SOLINAS

Università di Pisa

Pisa

Italy

TJERK P. STRAATSMA

Pacific Northwest National Laboratory

Richland, WA

USA

PAULA E. STRETZ

Virginia Tech

Blacksburg, VA

USA

JOSEP TORRELLAS

University of Illinois at Urbana-Champaign

Urbana, IL

USA

THOMAS M. STRICKER

Zürich, CH

Switzerland

JESPER LARSSON TRÄFF

University of Vienna

Vienna

Austria

JIMMY SU

University of California

Berkeley, CA

USA

HARI SUBRAMONI

The Ohio State University

Columbus, OH

USA

SAYANTAN SUR

The Ohio State University

Columbus, OH

USA

JOHN SWENSEN

CPU Technology

Pleasanton, CA

USA

PHILIP TRINDER

Heriot-Watt University

Edinburgh

UK

RAFFAELE TRIPICCIONE

Università di Ferrara and INFN Sezione di Ferrara

Ferrara

Italy

MARK TUCKERMAN

New York University

New York, NY

USA

RAY TUMINARO

Sandia National Laboratories

Livermore, CA

USA

HIROSHI TAKAHARA

NEC Corporation

Tokyo

Japan

BORA UÇAR

ENS Lyon

Lyon

France

MICHELA TAUFER

University of Delaware

Newark, DE

USA

MARAT VALIEV

Pacific Northwest National Laboratory

Richland, WA

USA

VINOD TIPPARAJU

Oak Ridge National Laboratory

Oak Ridge, TN

USA

NICOLAS VASILACHE

Reservoir Labs, Inc.

New York, NY

USA

ALEXANDER TISKIN

University of Warwick

Coventry

UK

MARIANA VERTENSTEIN

National Center for Atmospheric Research

Boulder, CO

USA

JENS VOLKERT

Johannes Kepler University Linz

Linz

Austria

TONG WEN

University of California

Berkeley, CA

USA

YEVGEN VORONENKO

Carnegie Mellon University

Pittsburgh, PA

USA

R. CLINT WHALEY

University of Texas at San Antonio

San Antonio, TX

USA

RICHARD W. VUDUC

Georgia Institute of Technology

Atlanta, GA

USA

ANDREW B. WHITE

Los Alamos National Laboratory

Los Alamos, NM

USA

GENE WAGENBRETH

University of Southern California

Topanga, CA

USA

BRIAN WHITNEY

Oracle Corporation

Hillsboro, OR

USA

DALI WANG

Oak Ridge National Laboratory

Oak Ridge, TN

USA

ROLAND WISMÜLLER

University of Siegen

Siegen

Germany

JASON WANG

LSTC

Livermore, CA

USA

ROBERT W. WISNIEWSKI

IBM

Yorktown Heights, NY

USA

GREGORY R. WATSON

IBM

Yorktown Heights, NY

USA

DAVID WOHLFORD

Reservoir Labs, Inc.

New York, NY

USA

ROGER WATTENHOFER

ETH Zürich

Zurich

Switzerland

FELIX WOLF

Aachen University

Aachen

Germany

MICHAEL WEHNER

Lawrence Berkeley National Laboratory

Berkeley, CA

USA

DAVID WONNACOTT

Haverford College

Haverford, PA

USA

JOSEF WEIDENDORFER

Technische Universität München

München

Germany

PATRICK H. WORLEY

Oak Ridge National Laboratory

Oak Ridge, TN

USA

SUDHAKAR YALAMANCHILI

Georgia Institute of Technology

Atlanta, GA

USA

FIELD G. VAN ZEE

The University of Texas at Austin

Austin, TX

USA

KATHERINE YELICK

University of California at Berkeley and Lawrence Berkeley National Laboratory

Berkeley, CA

USA

LIXIN ZHANG

IBM Research

Austin, TX

USA

PEN-CHUNG YEW

University of Minnesota at Twin Cities

Minneapolis, MN

USA

BOBBY DALTON YOUNG

Colorado State University

Fort Collins, CO

USA

CLIFF YOUNG

D. E. Shaw Research

New York, NY

USA

GABRIEL ZACHMANN

Clausthal University

Clausthal-Zellerfeld

Germany

GENGBIN ZHENG

University of Illinois at Urbana-Champaign

Urbana, IL

USA

HANS P. ZIMA

California Institute of Technology

Pasadena, CA

USA

JAROSLAW ZOLA

Iowa State University

Ames, IA

USA

A

Ab Initio Molecular Dynamics

Car-Parrinello Method

Access Anomaly

Race Conditions

Actors

Rajesh K. Karmani, Gul Agha

University of Illinois at Urbana-Champaign, Urbana, IL, USA

Definition

Actors is a model of concurrent computation for developing parallel, distributed, and mobile systems. Each actor is an autonomous object that operates concurrently and asynchronously, receiving messages from and sending messages to other actors, creating new actors, and updating its own local state. An actor system consists of a collection of actors, some of which may send messages to, or receive messages from, actors outside the system.

Preliminaries

An actor has a name that is globally unique and a behavior which determines its actions. In order to send an actor a message, the actor’s name must be used; a name cannot be guessed but it may be communicated in a message. When an actor is idle and has a pending message, the actor accepts the message and does the computation defined by its behavior. As a result, the actor may take three types of actions: send messages, create new actors, and update its local state. An actor’s behavior may change as it modifies its local state.

Actors do not share state: an actor must explicitly send a message to another actor in order to affect the latter’s behavior. Each actor carries out its actions concurrently (and asynchronously) with other actors. Moreover, the path a message takes as well as network delays it may encounter are not specified. Thus, the arrival order of messages is indeterminate. The key semantic properties of the standard Actor model are encapsulation of state and atomic execution of a method in response to a message, fairness in scheduling actors and in the delivery of messages, and location transparency enabling distributed execution and mobility.

Advantages of the Actor Model

In the object-oriented programming paradigm, an

object encapsulates data and behavior. This separates

the interface of an object (what an object does) from its

representation (how it does it). Such separation enables

modular reasoning about object-based programs and

facilitates their evolution. Actors extend the advantages of objects to concurrent computations by separating control (where and when) from the logic of a

computation.

The Actor model of programming [] allows

programs to be decomposed into self-contained, autonomous, interactive, asynchronously operating components. Due to their asynchronous operation, actors

provide a model for the nondeterminism inherent in

distributed systems, reactive systems, mobile systems,

and any form of interactive computing.

History

The concept of actors has developed over decades. The earliest use of the term “actors” was in Carl Hewitt’s Planner [] where the term referred to rule-based active entities which search a knowledge base for patterns to match, and in response, trigger actions. For the next decades, Hewitt’s group worked on actors as agents of computation, and it evolved as a model of concurrent computing. A brief history of actor research can be found in []. The commonly used definition of actors today follows the work of Agha () which defines actors using a simple operational semantics [].

Actors. Fig. Actors are concurrent objects that communicate through messages and may create new actors. An actor may be viewed as an object augmented with its own control, a mailbox, and a globally unique, immutable name. [Diagram: three actors, each with a thread, state, methods, and a mailbox; arrows labeled "create" and "msg".]

In recent years, the Actor model has gained in

popularity with the growth of parallel and distributed

computing platforms such as multicore architectures,

cloud computers, and sensor networks. A number

of actor languages and frameworks have been developed. Some early actor languages include ABCL,

POOL, ConcurrentSmalltalk, ACT++, CEiffel (see []

for a review of these), and HAL []. Actor languages and frameworks in current use include Erlang

(from Ericsson) [], E (Erights.org), Scala Actors

library (EPFL) [], Ptolemy (UC Berkeley) [], ASP

(INRIA) [], JCoBox (University of Kaiserslautern)

[], SALSA (UIUC and RPI) [], Charm++ []

and ActorFoundry [] (both from UIUC), the Asynchronous Agents Library [] and Axum [] (both from

Microsoft), and the Orleans framework for cloud computing from Microsoft Research []. Some well-known

open source applications built using actors include

Twitter’s message queuing system and the Lift Web Framework; commercial applications include the Facebook Chat system and Vendetta’s game engine.

Illustrative Actor Language

In order to show how actor programs work, consider a simple imperative actor language ActorFoundry

that extends Java. A class defining an actor behavior

extends osl.manager.Actor. Messages are handled by

methods; such methods are annotated with @message.

The create(class, args) method creates an actor

instance of the specified actor class, where args correspond to the arguments of a constructor in the class.

Each newly created actor has a unique name that is initially known only to the creator at the point where the

creation occurs.

Execution Semantics

The semantics of ActorFoundry can be informally

described as follows. Consider an ActorFoundry program P that consists of a set of actor definitions.

An actor communicates with another actor in P by

sending asynchronous (non-blocking) messages using

the send statement: send(a,msg) has the effect of eventually appending the contents of msg to the mailbox of the actor a. However, the call to send returns

immediately, that is, the sending actor does not wait

for the message to arrive at its destination. Because

actors operate asynchronously, and the network has

indeterminate delays, the arrival order of messages is

nondeterministic. However, we assume that messages

are eventually delivered (a form of fairness).

At the beginning of execution of P, the mailbox of

each actor is empty and some actor in the program must

receive a message from the environment. The ActorFoundry runtime first creates an instance of a specified

actor and then sends a specified message to it, which

serves as P’s entry point.

Each actor can be viewed as executing a loop with

the following steps: remove a message from its mailbox

(often implemented as a queue), decode the message,

and execute the corresponding method. If an actor’s

mailbox is empty, the actor blocks – waiting for the next

message to arrive in the mailbox (such blocked actors

are referred to as idle actors). The processing of a message may cause the actor’s local state to be updated,

new actors to be created, and messages to be sent.

Because of the encapsulation property of actors, there is

no interference between messages that are concurrently

processed by different actors.
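The loop described above can be sketched in plain Java. This is a minimal illustration and not ActorFoundry code: the class names MiniActor and MiniActorDemo, the STOP sentinel, and the choice of one thread per actor are assumptions made for the example.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.function.Consumer;

// Hypothetical minimal actor: one thread, one mailbox, one message at a time.
final class MiniActor<M> {
    // Sentinel used only to end the demo; it is not part of the Actor model.
    private static final Object STOP = new Object();

    private final BlockingQueue<Object> mailbox = new LinkedBlockingQueue<>();
    private final Thread loop;

    MiniActor(Consumer<M> behavior) {
        loop = new Thread(() -> {
            try {
                while (true) {
                    Object next = mailbox.take();   // an idle actor blocks here
                    if (next == STOP) {
                        return;
                    }
                    @SuppressWarnings("unchecked")
                    M message = (M) next;
                    behavior.accept(message);       // handler runs to completion
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        loop.start();
    }

    // Asynchronous send: enqueue and return without waiting for processing.
    void send(M message) {
        mailbox.add(message);
    }

    // Let the actor drain earlier messages, then wait for its thread to end.
    void stop() {
        mailbox.add(STOP);
        try {
            loop.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}

final class MiniActorDemo {
    static List<String> run() {
        List<String> log = new ArrayList<>();       // touched only by the actor thread
        MiniActor<String> recorder = new MiniActor<String>(log::add);
        recorder.send("Hello");
        recorder.send("World");
        recorder.stop();                            // join makes the log safe to read
        return log;
    }
}
```

In this sketch MiniActorDemo.run() returns the two strings in the order they were sent, but only because a single sender feeds a local FIFO queue. The Actor model itself does not promise that order.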

An actor program “terminates” when every actor

created by the program is idle and the actors are

not open to the environment (otherwise the environment could send new messages to their mailboxes in the future). Note that an actor program need

not terminate – in particular, certain interactive programs and operating systems may continue to execute

indefinitely.

Listing shows the HelloWorld program in ActorFoundry. The program comprises two actor definitions: the HelloActor and the WorldActor. An instance of the HelloActor can receive one type of message, the greet message, which triggers the execution of the greet method. The greet method serves as P’s entry point, in lieu of the traditional main method.

On receiving a greet message, the HelloActor

sends a print message to the stdout actor (a builtin actor representing the standard output stream)

along with the string “Hello.” As a result, “Hello”

will eventually be printed on the standard output

stream. Next, it creates an instance of the WorldActor.

The HelloActor sends an audience message to the

WorldActor, thus delegating the printing of “World”

to it. Note that due to asynchrony in communication, it is possible for “World” to be printed before

“Hello.”

Listing Hello World! program in ActorFoundry

public class HelloActor extends Actor {
    // Entry point: runs when the runtime delivers the initial greet message.
    @message
    public void greet() throws RemoteCodeException {
        ActorName other = null;
        send(stdout, "print", "Hello");      // asynchronous; returns immediately
        other = create(WorldActor.class);
        send(other, "audience");             // delegate printing of "World"
    }
}

public class WorldActor extends Actor {
    @message
    public void audience() throws RemoteCodeException {
        send(stdout, "print", "World");
    }
}

Synchronization

Synchronization in actors is achieved through communication. Two types of commonly used communication

patterns are Remote Procedure Call (RPC)-like messaging and local synchronization constraints. Language

constructs can enable actor programmers to specify

such patterns. Such language constructs are definable

in terms of primitive actor constructs, but providing

them as first-class linguistic objects simplifies the task

of writing parallel code.

RPC-Like Messaging

RPC-like communication is a common pattern of

message-passing in actor programs. In RPC-like communication, the sender of a message waits for the

reply to arrive before the sender proceeds with processing other messages. For example, consider the pattern

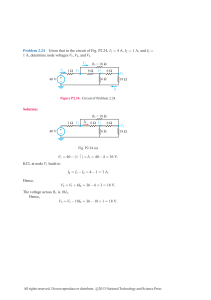

shown in Fig. for a client actor which requests a quote

from a travel service. The client wishes to wait for the

quote to arrive before it decides whether to buy the trip,

or to request a quote from another service.
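A hand-written version of this waiting client might look as follows. The sketch is plain Java under stated assumptions: the Msg, QuoteClient, and QuoteDemo classes, the "quote?" and "reply" message kinds, and the integer correlation id are all hypothetical and do not come from any actor library.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical message: a kind, a correlation id, and a payload.
final class Msg {
    final String kind;
    final int requestId;
    final String body;

    Msg(String kind, int requestId, String body) {
        this.kind = kind;
        this.requestId = requestId;
        this.body = body;
    }
}

final class QuoteClient {
    final BlockingQueue<Msg> mailbox = new LinkedBlockingQueue<>();
    final Deque<Msg> deferred = new ArrayDeque<>();   // unrelated messages, kept for later

    // Send a request, then block until the matching reply arrives.
    String requestQuote(BlockingQueue<Msg> service, int requestId, String trip) {
        service.add(new Msg("quote?", requestId, trip));      // send the request
        try {
            while (true) {
                Msg m = mailbox.take();                        // check incoming messages
                if (m.kind.equals("reply") && m.requestId == requestId) {
                    return m.body;                             // the awaited reply
                }
                deferred.add(m);                               // buffer anything else
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for a reply", e);
        }
    }
}

final class QuoteDemo {
    static String run(QuoteClient client) {
        BlockingQueue<Msg> service = new LinkedBlockingQueue<>();
        // Stand-in travel service: answers exactly one request.
        Thread travelService = new Thread(() -> {
            try {
                Msg request = service.take();
                client.mailbox.add(new Msg("reply", request.requestId, "quote for " + request.body));
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        travelService.start();
        client.mailbox.add(new Msg("news", 0, "unrelated"));  // arrives before the reply
        return client.requestQuote(service, 1, "Paris");
    }
}
```

The deferred queue and the explicit loop are bookkeeping that the client carries by hand in this sketch; they exist only because the client must keep receiving while it waits.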

Without a high-level language abstraction to express the RPC-like message pattern, a programmer has to explicitly implement the following steps in their program:

1. The client actor sends a request.
2. The client then checks incoming messages.
3. If the incoming message corresponds to the reply to its request, the client takes the appropriate action (accept the offer or keep searching).
4. If an incoming message does not correspond to the reply to its request, the message must be handled (e.g., by being buffered for later processing), and the client continues to check messages for the reply.

Actors. Fig. A client actor requesting quotes from multiple competing services using RPC-like communication. The dashed slanted arrows denote messages and the dashed vertical arrows denote that the actor is waiting or is blocked during that period in its life. [Diagram: the client sends a request to Service #1 and waits for its reply, then does the same with Service #2.]

RPC-like messaging is almost universally supported in actor languages and libraries. RPC-like messages are particularly useful in two kinds of common scenarios. One scenario occurs when an actor wants to send an ordered sequence of messages to a particular recipient; in this case, it wants to ensure that a message has been received before it sends another. A variant of this scenario is where the sender wants to ensure that the target actor has received a message before it communicates this information to another actor. A second scenario is when the state of the requesting actor is dependent on the reply it receives. In this case, the requesting actor cannot meaningfully process unrelated messages until it receives a response.

Because RPC-like messages are similar to procedure calls in sequential languages, programmers have a tendency to overuse them. Unfortunately, inappropriate usage of RPC-like messages introduces unnecessary dependencies in the code. This may not only make the program’s execution less efficient than it needs to be, but it can also lead to deadlocks and livelocks (where an actor ignores or postpones processing messages, waiting for an acknowledgment that never arrives).

Local Synchronization Constraints

Asynchrony is inherent in distributed systems and

mobile systems. One implication of asynchrony is that

the number of possible orderings in which messages

may arrive is exponential in the number of messages

that are “pending” at any time (i.e., messages that have

been sent but have not been received). Because a sender

may be unaware of what the state of the actor it is sending a message to will be when the latter receives the

message, it is possible that the recipient may not be in

a state where it can process the message it is receiving. For example, a spooler may not have a job when

some printer requests one. As another example, messages to actors representing individual matrix elements

(or groups of elements) asking them to process different iterations in a parallel Cholesky decomposition

algorithm need to be monotonically ordered. The need

for such orderings leads to considerable complexity in

concurrent programs, often introducing bugs or inefficiencies due to suboptimal implementation strategies.

For example, in the case of Cholesky decomposition,

imposing a global ordering on the iterations leads to

highly inefficient execution on multicomputers [].

Consider the example of a print spooler. A “get” message from an idle printer to its spooler may arrive when the spooler has no jobs to return to the printer. One way to address this problem is for the spooler to refuse the request. Now the printer needs to repeatedly poll the spooler until the latter has a job. This technique is called busy waiting. Busy waiting can be expensive: it may prevent the waiting actor from doing other work while it “waits,” and it results in unnecessary message traffic. An alternative is for the spooler to buffer the “get” message for deferred processing. The effect of such buffering is to change the order in which the messages are processed in a way that guarantees that the number of put messages processed by the spooler is never smaller than the number of get messages processed by the spooler.
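The buffering alternative can be sketched as follows. This is an illustration only: the Spooler class, the string-valued printer and job names, and the dispatched list (which stands in for replies sent to printers) are hypothetical, and the two handlers are assumed to be invoked one message at a time, as an actor runtime would do.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical spooler behavior with explicit buffering of "get" requests.
final class Spooler {
    private final Deque<String> jobs = new ArrayDeque<>();
    private final Deque<String> waitingPrinters = new ArrayDeque<>();  // deferred "get" messages
    final List<String> dispatched = new ArrayList<>();                 // stands in for replies

    // "put": a new job arrives.
    void put(String job) {
        String printer = waitingPrinters.poll();
        if (printer != null) {
            dispatch(printer, job);        // serve the oldest deferred "get"
        } else {
            jobs.add(job);
        }
    }

    // "get": an idle printer asks for work.
    void get(String printer) {
        String job = jobs.poll();
        if (job != null) {
            dispatch(printer, job);
        } else {
            waitingPrinters.add(printer);  // defer instead of refusing; no polling needed
        }
    }

    private void dispatch(String printer, String job) {
        dispatched.add(printer + "<-" + job);
    }
}
```

Note that the buffering logic is interleaved with the spooler's actual work in both handlers, which is the kind of mixing that declarative constraints aim to avoid.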

If pending messages are buffered explicitly inside the body of an actor, the code specifying the functionality (the how or representation) of the actor is mixed with the logic determining the order in which the actor processes the messages (the when). Such mixing violates the software principle of separation of concerns. Researchers have proposed various constructs to enable programmers to specify the correct orderings in a modular and abstract way, specifically, as logical formulae (predicates) over the state of an actor and the type of messages. Many actor languages and frameworks provide such constructs; examples include local synchronization constraints in ActorFoundry, and pattern matching on sets of messages in Erlang and Scala Actors library.

Actors. Fig. A file actor communication with a client is constrained using local synchronization constraints. The vertical arrows depict the timeline of the life of an actor and the slanted arrows denote messages. The labels inside a circle denote the messages that the file actor can accept in that particular state. [Diagram: the file actor initially accepts only “open”; after an Open message it accepts “read” | “close”; the client sends Open, Read, Read, Read, Close.]
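The file actor from the figure can be used to illustrate the idea. The following plain-Java sketch keeps the constraint (a predicate over the actor's state and the message kind) apart from the handlers. It does not use ActorFoundry's constraint syntax; the FileActor class, the enabled and deliver methods, and the string message kinds are assumptions made for the example.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;

// Hypothetical file actor with a declaratively separated constraint.
final class FileActor {
    private boolean open = false;
    private final List<String> pending = new ArrayList<>();   // mailbox, in arrival order
    final List<String> processed = new ArrayList<>();

    // The "when": which message kinds are acceptable in the current state.
    private boolean enabled(String kind) {
        return open ? (kind.equals("read") || kind.equals("close")) : kind.equals("open");
    }

    // The "how": what each message does once it is dispatched.
    private void handle(String kind) {
        if (kind.equals("open")) {
            open = true;
        } else if (kind.equals("close")) {
            open = false;
        }
        processed.add(kind);
    }

    // Accept a message, then dispatch every pending message the constraint allows.
    void deliver(String kind) {
        pending.add(kind);
        boolean progress = true;
        while (progress) {
            progress = false;
            for (Iterator<String> it = pending.iterator(); it.hasNext();) {
                String next = it.next();
                if (enabled(next)) {
                    it.remove();
                    handle(next);
                    progress = true;
                    break;     // state may have changed; rescan from the oldest message
                }
            }
        }
    }
}
```

A "read" that arrives before "open" simply stays pending until the constraint enables it; neither handler contains any buffering code.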

Patterns of Actor Programming

Two common patterns of parallel programming are pipeline and divide-and-conquer []. These patterns are illustrated in Fig. a and b, respectively.

An example of the pipeline pattern is an image processing network (see Fig. a) in which a stream of images is passed through a series of filtering and transforming stages. The output of the last stage is a stream of processed images. This pattern has been demonstrated by an image processing example, written using the Asynchronous Agents Library [], which is part of Microsoft Visual Studio.

A map-reduce graph is an example of the divide-and-conquer pattern (see Fig. b). A master actor maps the computation onto a set of workers and the output from each of these workers is reduced in the “join continuation” behavior of the master actor (possibly modeled as a separate actor) (e.g., see []). Other examples of the divide-and-conquer pattern are naïve parallel quicksort [] and parallel mergesort. The synchronization idioms discussed above may be used in succinct encoding of these patterns in actor programs since these patterns essentially require ordering the processing of some messages.

Actors. Fig. Patterns of actor programming (from top): (a) pipeline pattern (b) divide-and-conquer pattern. [Diagram: (a) raw images flow through Stage #1, Stage #2, and Stage #3 to become processed images; (b) a master (map) sends requests to Worker #1 and Worker #2, whose replies go to the master (reduce).]

Semantic Properties

As mentioned earlier, some important semantic properties of the pure Actor model are encapsulation and atomic execution of methods (where a method represents computation in response to a message), fairness, and location transparency []. We discuss the implications of these properties.

Note that not all actor languages enforce all these properties. Often, the implementations compromise some actor properties, typically because it is simpler to achieve efficient implementations by doing so. However, it is possible by sophisticated program transformations, compilation, and runtime optimizations to regain almost all the efficiency that is lost in a naïve language implementation, although doing so is more challenging for library-like actor frameworks []. By failing to enforce some actor properties in an actor language or framework implementation, actor languages add to the burden of the programmers, who have to then ensure that they write programs in a way that does not violate the property.

Encapsulation and Atomicity

Encapsulation implies that no two actors share state. This is useful for enforcing an object-style decomposition in the code. In sequential object-based languages, this has led to the natural model of atomic change in objects: an object invokes (sends a message to) another object, which finishes processing the message before accepting another message from a different object. This allows us to reason about the behavior of the object in response to a message, given the state of the target object when it received the message. In a concurrent computation, it is possible for a message to arrive while an actor is busy processing another message. Now if the second message is allowed to interrupt the target actor and modify the target’s state while the target is still processing the first message, it is no longer feasible to reason about the behavior of the target actor based on what the target’s state was when it received the first message. This makes it difficult to reason about the behavior of the system as such interleaving of messages may lead to erroneous and inconsistent states.

Instead, the target actor processes messages one

at a time, in a single atomic step consisting of all

actions taken in response to a given message [].

By dramatically reducing the nondeterminism that

must be considered, such atomicity provides a macrostep semantics which simplifies reasoning about actor

programs. Macro-step semantics is commonly used

by correctness-checking tools; it significantly reduces

the state-space exploration required to check a property against an actor program’s potential executions

(e.g., see []).

Fairness

The Actor model assumes a notion of fairness which

states that every actor makes progress if it has some

computation to do, and that every message is eventually

delivered to the destination actor, unless the destination

actor is permanently disabled. Fairness enables modular reasoning about the liveness properties of actor

programs []. For example, if an actor system A is composed with an actor system B where B includes actors

that are permanently busy, the composition does not

affect the progress of the actors in A. A familiar example

where fairness would be useful is in browsers. Problems are often caused by the composition of browser

components with third-party plug-ins: in the absence

of fairness, such plug-ins sometimes result in browser

crashes and hang-ups.
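One simple way a runtime might approximate fair scheduling is round-robin dispatch, sketched below. This is an illustration under stated assumptions, not a description of how any particular actor runtime is implemented: the FairScheduler, SimpleActor, and Behavior names are hypothetical, and the scheduler is single-threaded and runs for a bounded number of rounds.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical single-threaded scheduler illustrating fair actor scheduling.
final class FairScheduler {
    interface Behavior {
        void receive(String message, SimpleActor self);
    }

    static final class SimpleActor {
        final String name;
        final Deque<String> mailbox = new ArrayDeque<>();
        final Behavior behavior;

        SimpleActor(String name, Behavior behavior) {
            this.name = name;
            this.behavior = behavior;
        }

        void send(String message) {
            mailbox.add(message);
        }
    }

    private final List<SimpleActor> actors = new ArrayList<>();

    void register(SimpleActor actor) {
        actors.add(actor);
    }

    // In each round, every actor with a pending message processes exactly one,
    // so an actor that is always busy cannot starve the others.
    void run(int rounds) {
        for (int r = 0; r < rounds; r++) {
            for (SimpleActor actor : actors) {
                String message = actor.mailbox.poll();
                if (message != null) {
                    actor.behavior.receive(message, actor);
                }
            }
        }
    }
}
```

If one registered actor keeps sending itself a message on every step, the other actors still process their own messages in the same rounds.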

Location Transparency

In the Actor model, the actual location of an actor does

not affect its name. Actors communicate by exchanging messages with other actors, which could be on the

same core, on the same CPU, or on another node in

the network. Location transparent naming provides an

abstraction for programmers, enabling them to program without worrying about the actual physical location of actors. Location transparent naming facilitates

automatic migration in the runtime, much as indirection in addressing facilitates compaction following

garbage collection in sequential programming.
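The indirection can be illustrated with a small table-based sketch. Everything here is hypothetical: the LocationTransparentRuntime class, the string node identifiers, and the in-memory delivery logs that stand in for real nodes. The generated names are also guessable, which a real runtime would avoid.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical runtime tables; "nodes" are simulated by in-memory lists.
final class LocationTransparentRuntime {
    private final Map<String, String> locationOf = new HashMap<>();       // actor name -> node
    private final Map<String, List<String>> delivered = new HashMap<>();  // node -> delivery log
    private int counter = 0;

    // Create an actor on a node; the returned name carries no location information.
    String create(String node) {
        String name = "actor-" + (counter++);
        locationOf.put(name, node);
        return name;
    }

    // Senders address the name only; the runtime resolves the current node.
    void send(String name, String message) {
        String node = locationOf.get(name);
        if (node == null) {
            throw new IllegalArgumentException("unknown actor name: " + name);
        }
        delivered.computeIfAbsent(node, n -> new ArrayList<>()).add(name + ":" + message);
    }

    // Migration changes the table entry, never the name.
    void migrate(String name, String newNode) {
        if (locationOf.replace(name, newNode) == null) {
            throw new IllegalArgumentException("unknown actor name: " + name);
        }
    }

    List<String> deliveredAt(String node) {
        return delivered.getOrDefault(node, new ArrayList<>());
    }
}
```

A sender that holds the name keeps using it unchanged before and after migrate; only the runtime's table differs.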

Mobility is defined as the ability of a computation

to migrate across different nodes. Mobility is important for load-balancing, fault-tolerance, and reconfiguration. In particular, mobility is useful in achieving

scalable performance, particularly for dynamic, irregular applications []. Moreover, employing different

distributions in different stages of a computation may

improve performance. In other cases, the optimal or

correct performance depends on runtime conditions

such as data and workload, or security characteristics

of different platforms. For example, web applications

may be migrated to servers or to mobile clients depending on the network conditions and capabilities of the

client [].

Mobility may also be useful in reducing the energy

consumed by the execution of parallel applications.

Different parts of an application often involve different parallel algorithms and the energy consumption of

an algorithm depends on how many cores the algorithm is executed on and at what frequency these cores

operate []. Mobility facilitates dynamic redistribution

of a parallel computation to the appropriate number

of cores (i.e., to the number of cores that minimize

energy consumption for a given performance requirement and parallel algorithm) by migrating actors. Thus,

mobility could be an important feature for energyaware programming of multicore (manycore) architectures. Similarly, energy savings may be facilitated by

being able to migrate actors in sensor networks and

clouds.

Implementations

Erlang is arguably the best-known implementation of

the Actor model. It was developed to program telecom switches at Ericsson about years ago. Some

recent actor implementations have been listed earlier.

Many of these implementations have focused on a

particular domain such as the Internet (SALSA), distributed applications (Erlang and E), sensor networks

(ActorNet), and, more recently multicore processors

(Scala Actors library, ActorFoundry, and many others in

development).

It has been noted that a faithful but naïve implementation of the Actor model can be highly inefficient [] (at least on the current generation of architectures). Consider three examples:

1. An implementation that maps each actor to a separate process may have a high cost for actor creation.
2. If the number of cores is less than the number of actors in the program (sometimes termed CPU oversubscription), an implementation mapping actors to separate processes may suffer from high context switching cost.
3. If two actors are located on the same sequential node, or on a shared-memory processor, it may be an order of magnitude more efficient to pass a reference to the message contents rather than to make a copy of the actual message contents.
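The trade-off in the last example can be made concrete with a small sketch. The LocalDelivery class and its two methods are hypothetical and only model what the receiver ends up holding; they are not taken from any actor framework.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical helpers contrasting the two delivery strategies on a shared node.
final class LocalDelivery {
    // Copying: the receiver gets its own list, at a cost proportional to its size.
    static List<Integer> byCopy(List<Integer> payload) {
        return new ArrayList<>(payload);
    }

    // By reference: no copy is made; the receiver sees the sender's list
    // through a read-only view, so later changes by the sender remain visible.
    static List<Integer> byReference(List<Integer> payload) {
        return Collections.unmodifiableList(payload);
    }
}
```

If the sender keeps modifying the list after sending, the copy is unaffected while the reference reflects the change. Passing references is therefore safe only when the sender gives up the contents or the contents are immutable.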

These inefficiencies may be addressed by compilation and runtime techniques, or through a combination of the two. The implementation of the ABCL

language [] demonstrates some early ideas for optimizing both intra-node and internode execution and

communication between actors. The Thal language

project [] shows that encapsulation, fairness, and universal naming in an actor language can be implemented

efficiently on commodity hardware by using a combination of compiler and runtime. The Thal implementation also demonstrates that various communication

abstractions such as RPC-like communication, local

synchronization constraints, and join expressions can

also be supported efficiently using various compile-time

program transformations.

The Kilim framework develops a clever postcompilation continuation-passing style (CPS) transform (“weaving”) on Java-based actor programs for

supporting lightweight actors that can pause and

resume []. Kilim and Scala also add type systems to

support safe but efficient messages among actors on a

shared node [, ]. Recent work suggests that ownership transfer between actors, which enables safe and

efficient messaging, can be statically inferred in most

cases [].

On distributed platforms such as cloud computers or grids, because of latency in sending messages

to remote actors, an important technique for achieving good performance is communication–computation

overlap. Decomposition into actors and the placement

of actors can significantly determine the extent of this

overlap. Some of these issues have been effectively

addressed in the Charm++ runtime []. Decomposition and placement issues are also expected to show up

on scalable manycore architectures since these architectures cannot be expected to support constant-time access

to shared memory.

Finally, note that the notion of garbage in actors

is somewhat complex. Because an actor name may

be communicated in a message, it is not sufficient to

mark the forward acquaintances (references) of reachable actors as reachable. The inverse acquaintances of

reachable actors that may be potentially active need to

be considered as well (these actors may send a message to a reachable actor). Efficient garbage collection

of distributed actors remains an open research problem

because of the problem of taking efficient distributed

snapshots of the reachability graph in a running

system [].
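The marking rule can be sketched on a static snapshot of the acquaintance graph. This is a simplified illustration and not a distributed collector: the ActorSnapshot class is hypothetical, and the sketch assumes that an actor counts as potentially active if it is unblocked or is known to a potentially active actor (which could send it a message).

```java
import java.util.ArrayDeque;
import java.util.Arrays;
import java.util.Collections;
import java.util.Deque;
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical snapshot of an actor system, used only to illustrate the marking rule.
final class ActorSnapshot {
    private final Map<String, Set<String>> knows = new HashMap<>();  // actor -> acquaintances
    private final Set<String> unblocked = new HashSet<>();           // busy or with pending messages
    private final Set<String> roots = new HashSet<>();

    void actor(String name, boolean isRoot, boolean isUnblocked, String... acquaintances) {
        knows.put(name, new HashSet<>(Arrays.asList(acquaintances)));
        if (isRoot) roots.add(name);
        if (isUnblocked) unblocked.add(name);
    }

    // Assumption: unblocked actors, plus everything they can transitively message.
    Set<String> potentiallyActive() {
        Set<String> active = new HashSet<>(unblocked);
        Deque<String> work = new ArrayDeque<>(unblocked);
        while (!work.isEmpty()) {
            String sender = work.poll();
            for (String target : knows.getOrDefault(sender, Collections.<String>emptySet())) {
                if (active.add(target)) work.add(target);
            }
        }
        return active;
    }

    // Fixed point over two rules:
    //  forward: acquaintances of a live actor are live;
    //  inverse: a potentially active actor that knows a live actor is live.
    Set<String> live() {
        Set<String> active = potentiallyActive();
        Set<String> live = new HashSet<>(roots);
        boolean changed = true;
        while (changed) {
            changed = false;
            for (Map.Entry<String, Set<String>> entry : knows.entrySet()) {
                String actor = entry.getKey();
                if (live.contains(actor)) {
                    if (live.addAll(entry.getValue())) changed = true;
                } else if (active.contains(actor)
                        && !Collections.disjoint(entry.getValue(), live)) {
                    live.add(actor);
                    changed = true;
                }
            }
        }
        return live;
    }

    Set<String> garbage() {
        Set<String> result = new HashSet<>(knows.keySet());
        result.removeAll(live());
        return result;
    }
}
```

Marking only forward from the roots would miss an unblocked actor that knows a live actor but is known by none; the inverse rule keeps it, because it may still send a message.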

Tools

Several tools are available to aid in writing, maintaining, debugging, model checking, and testing actor

programs. Both Erlang and Scala have a plug-in for

the popular, open source IDE (Integrated Development

Environment) called Eclipse (http://www.eclipse.org).

A commercial testing tool for Erlang programs called

QuickCheck [] is available. The tool enables programmers to specify program properties and input generators which are used to generate test inputs.

JCute [] is a tool for automatic unit testing of programs written in a Java actor framework. Basset []

works directly on executable (Java bytecode) actor

programs and is easily retargetable to any actor language that compiles to bytecode. Basset understands

the semantic structure of actor programs (such as the

macro-step semantics), enabling efficient path exploration through the Java Pathfinder (JPF) – a popular tool for model checking programs []. The term

rewriting system Maude provides an Actor module to

specify program behavior; it has been used to model

check actor programs []. There has also been work on

runtime monitoring of actor programs [].

Extensions and Abstractions

A programming language should facilitate the process of writing programs by being close to the conceptual level at which a programmer thinks about a

problem rather than at the level at which it may be

implemented. Higher level abstractions for concurrent

programming may be defined in interaction languages

which allow patterns to be captured as first-class

objects []. Such abstractions can be implemented

through an adaptive, reflective middleware []. Besides

programming abstractions for concurrency in the pure

(asynchronous) Actor model, there are variants of the

Actor model, such as for real-time, which extend the

model [, ]. Two interaction patterns are discussed

below to illustrate these ideas.

Pattern-Directed Communication

Recall that a sending actor must know the name of

a target actor before the sending actor can communicate with the target actor. This property, called locality,

is useful for compositional reasoning about actor programs – if it is known that only some actors can send

a message to an actor A, then it may be possible to

figure out what types of messages A may get and perhaps specify some constraints on the order in which

it may get them. However, real-world programs generally create an open system which interacts with their

external environment. This means that having ways of

discovering actors which provide certain services can be

helpful. For example, if an actor migrates to some environment, discovering a printer in that environment may

be useful.

Pattern-directed communication allows programmers to declare properties of a group of actors, so that the actual recipients of a message can be discovered at runtime using these properties. In the ActorSpace model, an actor specifies recipients in terms of patterns over properties that must be satisfied by the recipients. The sender may send the message to all actors (in some group) that satisfy the property, or to a single representative actor []. There are other models for pattern-based communication. In Linda, potential recipients specify a pattern for messages they are interested in []. The sending actor simply inserts a message (called a tuple in Linda) into a tuple-space, from where the receiving actors may read or remove the tuple if it matches the pattern of messages the receiving actor is interested in.
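The sender-side style of pattern-directed communication can be sketched with a small directory. This is written in the spirit of the ActorSpace model but is not its API: the PatternDirectory class, the use of regular expressions as patterns, the slash-separated attribute strings, and the rule that a single-recipient send picks the first registered match are all assumptions made for the example.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Pattern;

// Hypothetical directory of actors that advertise an attribute string.
final class PatternDirectory {
    private final Map<String, String> attributeOf = new LinkedHashMap<>();      // name -> attribute
    private final Map<String, List<String>> mailboxes = new LinkedHashMap<>();  // name -> messages

    // An actor makes itself visible under an attribute such as "printer/color".
    void advertise(String actorName, String attribute) {
        attributeOf.put(actorName, attribute);
        mailboxes.put(actorName, new ArrayList<>());
    }

    // Deliver to every actor whose attribute matches; returns the number of recipients.
    int broadcast(String regex, String message) {
        Pattern pattern = Pattern.compile(regex);
        int count = 0;
        for (Map.Entry<String, String> e : attributeOf.entrySet()) {
            if (pattern.matcher(e.getValue()).matches()) {
                mailboxes.get(e.getKey()).add(message);
                count++;
            }
        }
        return count;
    }

    // Deliver to one matching actor chosen by the directory (here: the first registered match).
    boolean send(String regex, String message) {
        Pattern pattern = Pattern.compile(regex);
        for (Map.Entry<String, String> e : attributeOf.entrySet()) {
            if (pattern.matcher(e.getValue()).matches()) {
                mailboxes.get(e.getKey()).add(message);
                return true;
            }
        }
        return false;
    }

    List<String> received(String actorName) {
        return mailboxes.get(actorName);
    }
}
```

The sender never names a recipient: a migrating actor could, for instance, reach whatever printer is advertised in its new environment by sending to a pattern such as "printer/.*".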

Coordination

Actors help simplify programming by increasing the

granularity at which programmers need to reason about

concurrency, namely, they may reason in terms of the

potential interleavings of messages to actors, instead

of in terms of the interleavings of accesses to shared

variables within actors. However, developing actor programs is still complicated and prone to errors. A key

cause of complexity in actor programs is the large number of possible interleavings of messages to groups of

actors: if these message orderings are not suitably constrained, some possible execution orders may fail to

meet the desired specification.

Recall that local synchronization constraints postpone the dispatch of a message based on the contents of

the messages and the local state of the receiving actor

(see section “Local Synchronization Constraints”). Synchronizers, on the other hand, change the order in which

messages are processed by a group of actors by defining constraints on ordering of messages processed at

different actors in a group of actors. For example, if

a withdrawal message and a deposit message must be processed

atomically by two different actors, a Synchronizer can

specify that they must be scheduled together. Synchronizers are described in [].

In the standard actor semantics, an actor that knows

the name of a target actor may send the latter a message. An alternate semantics introduces the notion of a

channel; a channel is used to establish communication

between a given sender and a given recipient. Recent

work on actor languages has introduced stateful channel contracts to constrain the order of messages between

two actors. Channels are a central concept for communication between actors in both Microsoft’s Singularity platform [] and Microsoft’s Axum language [],

while they can be optionally introduced between two

actors in Erlang. Channel contracts specify a protocol that governs the communication between the two

end points (actors) of the channel. The contracts are

stated in terms of state transitions based on observing

messages on the channel.

From the perspective of each end point (actor), the channel contract specifies the interface of the other end point (actor) in terms of not only the type of messages but also the ordering on messages. In Erlang, contracts are enforced at runtime, while in Singularity a more restrictive notion of typed contracts makes it feasible to check the constraints at compile time.
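A runtime-checked contract can be sketched as a small state machine that observes each message on a channel. The sketch is generic Java and does not reproduce the contract notation of Singularity, Axum, or Erlang: the ChannelContract class, its allow and observe methods, and the string state and message names are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical runtime checker for a channel contract expressed as state transitions.
final class ChannelContract {
    // state -> (message type -> next state)
    private final Map<String, Map<String, String>> transitions = new HashMap<>();
    private String state;

    ChannelContract(String initialState) {
        this.state = initialState;
    }

    // Declare that messageType is permitted in state "from" and leads to state "to".
    ChannelContract allow(String from, String messageType, String to) {
        transitions.computeIfAbsent(from, s -> new HashMap<>()).put(messageType, to);
        return this;
    }

    // Observe a message on the channel; reject it if the protocol does not permit it now.
    void observe(String messageType) {
        Map<String, String> allowed = transitions.get(state);
        String next = (allowed == null) ? null : allowed.get(messageType);
        if (next == null) {
            throw new IllegalStateException(
                "contract violation: '" + messageType + "' in state " + state);
        }
        state = next;
    }

    String state() {
        return state;
    }
}
```

For a file protocol one might declare allow("closed", "open", "opened"), allow("opened", "read", "opened"), and allow("opened", "close", "closed"); a "read" observed after "close" is then rejected when it occurs, whereas a static checker would reject the offending program before it runs.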

Current Status and Perspective

Actor languages have been used for parallel and distributed computing in the real world for some time

(e.g., Charm++ for scientific applications on supercomputers [], Erlang for distributed applications []). In

recent years, interest in actor-based languages has been

growing, both among researchers and among practitioners. This interest is triggered by emerging programming platforms such as multicore computers and cloud

computers. In some cases, such as cloud computing,

web services, and sensor networks, the Actor model

is a natural programming model because of the distributed nature of these platforms. Moreover, as multicore architectures are scaled, multicore computers will

also look more and more like traditional multicomputer

platforms. This is illustrated by the -core Single-Chip

Cloud Computer (SCC) developed by Intel [] and the

-core TILE-Gx by Tilera []. However, the argument for using actor-based programming languages is

not simply that they are a good match for distributed

computing platforms; it is that Actors is a good model in

which to think about concurrency. Actors simplify the

task of programming by extending object-based design

to concurrent (parallel, distributed, mobile) systems.

Bibliography

. Agha G, Callsen CJ () Actorspace: an open distributed

programming paradigm. In: Proceedings of the fourth ACM

SIGPLAN symposium on principles and practice of parallel programming (PPoPP), San Diego, CA. ACM, New York,

pp –

. Agha G, Frolund S, Kim WY, Panwar R, Patterson A, Sturman D

() Abstraction and modularity mechanisms for concurrent

computing. IEEE Trans Parallel Distr Syst ():–

. Agha G () Actors: a model of concurrent computation in

distributed systems. MIT Press, Cambridge, MA

. Agha G () Concurrent object-oriented programming. Commun ACM (): –

. Arts T, Hughes J, Johansson J, Wiger U () Testing telecoms

software with Quviq QuickCheck. In: ERLANG ’: proceedings of

the ACM SIGPLAN workshop on Erlang. ACM, New York,

pp –

. Agha G, Kim WY () Parallel programming and complexity

analysis using actors. In: Proceedings: third working conference

on massively parallel programming models, , London. IEEE

Computer Society Press, Los Alamitos, CA, pp –

. Agha G, Mason IA, Smith S, Talcott C () A foundation for

actor computation. J Funct Program ():–

. Armstrong J () Programming Erlang: software for a concurrent world. Pragmatic Bookshelf, Raleigh, NC

. Astley M, Sturman D, Agha G () Customizable middleware

for modular distributed software. Commun ACM ():–

. Astley M (–) The actor foundry: a Java-based actor programming environment. Open Systems Laboratory, University of

Illinois at Urbana-Champaign, Champaign, IL

. Briot JP, Guerraoui R, Lohr KP () Concurrency and distribution in object-oriented programming. ACM Comput Surv ():

–

. Chang PH, Agha G () Towards context-aware web applications. In: th IFIP international conference on distributed applications and interoperable systems (DAIS), , Paphos, Cyprus.

LNCS , Springer, Berlin