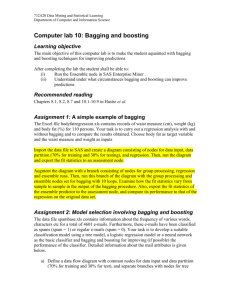

CHAPTER 3 ENSEMBLE LEARNING

UNDERSTANDING ENSEMBLE:
• Ensemble learning is a powerful machine learning technique that combines the output of two or more models (often weak learners) to solve a particular computational intelligence problem. For example, a Random Forest is an ensemble of many decision trees.
• Ensemble learning is primarily used to improve model performance on tasks such as classification, prediction, and function approximation.
• In simple words, ensemble learning can be summarized as follows: "An ensemble model is a machine learning model that combines the predictions from two or more models."
• The three most common ensemble learning methods in machine learning are:
  • Bagging
  • Boosting
  • Stacking

CROSS-VALIDATION
• Cross-validation is a technique for validating model performance by training the model on a subset of the input data and testing it on a previously unseen subset of that data.
• For this purpose, we reserve a sample of the dataset that is not part of the training dataset.
• We then test the model on that sample before deployment. This complete process is called cross-validation.
• It differs from a single train-test split in that most cross-validation methods repeat the split several times and combine the results.

THE BASIC STEPS OF CROSS-VALIDATION ARE:
• Reserve a subset of the dataset as a validation set.
• Train the model using the training dataset.
• Evaluate model performance using the validation set.
• If the model performs well on the validation set, proceed to the next step; otherwise, investigate the issues.

VIDEO:
• https://www.youtube.com/watch?v=G8jMETlIq4o&t=22s

COMMON METHODS FOR CROSS VALIDATION:
1. Validation Set Approach
2. Leave-P-out cross-validation
3. Leave one out cross-validation
4. K-fold cross-validation
5. Stratified k-fold cross-validation

K-FOLD CROSS-VALIDATION:
• The K-fold cross-validation approach divides the input dataset into K groups of samples of (approximately) equal size.
• These groups are called folds.
• In each round, the model is trained on K-1 folds and the remaining fold is used as the test set.
• This is a very popular CV approach because it is easy to understand, and its performance estimate is generally less biased than that of a single validation split.

THE STEPS FOR K-FOLD CROSS-VALIDATION ARE:
• Split the input dataset into K groups.
• For each group:
  • Take one group as the reserve or test dataset.
  • Use the remaining groups as the training dataset.
  • Fit the model on the training set and evaluate its performance using the test set.

EXAMPLE FOR K-FOLD CROSS-VALIDATION:
• Let's take an example of 5-fold cross-validation.
• The dataset is grouped into 5 folds.
• On the 1st iteration, the first fold is reserved for testing the model, and the rest are used to train it.
• On the 2nd iteration, the second fold is used to test the model, and the rest are used to train it.
• This process continues until every fold has been used once as the test fold.

VIDEO
• https://www.youtube.com/watch?v=TIgfjmp-4BA

BOOSTING:
• Boosting is an ensemble modeling technique that attempts to build a strong classifier from a number of weak classifiers.
• It does this by building weak models in series.
• First, a model is built from the training data. Then a second model is built that tries to correct the errors of the first model.
• This procedure continues, and models are added until either the complete training dataset is predicted correctly or the maximum number of models has been added.

TRAINING BOOSTING MODEL
1. Initialise the dataset and assign equal weight to each data point.
2. Provide this as input to the model and identify the wrongly classified data points.
3. Increase the weight of the wrongly classified data points.
4. If the required results are obtained, go to step 5; otherwise, go to step 2.
5. End.

TYPES:
• Gradient Boosting
• AdaBoost
• XGBoost
• CatBoost
• LightGBM

XGBOOST
• XGBoost is an optimized distributed gradient boosting library designed for efficient and scalable training of machine learning models.
• It is an ensemble learning method that combines the predictions of multiple weak models to produce a stronger prediction.
• XGBoost stands for "Extreme Gradient Boosting". It has become one of the most popular and widely used machine learning algorithms because it handles large datasets and can achieve state-of-the-art performance in many machine learning tasks such as classification and regression.
• One of the key features of XGBoost is its efficient handling of missing values, which lets it work with real-world data without requiring significant pre-processing.
• Additionally, XGBoost has built-in support for parallel processing, making it possible to train models on large datasets in a reasonable amount of time.

XGBOOST APPLICATION:
• XGBoost can be used in a variety of applications, including Kaggle competitions and recommendation systems, among others.

KAGGLE:
• Kaggle is a very large online data science community that offers tools and resources to help you achieve your data science goals.
• A Kaggle competition is an event where competitors submit machine learning models, and the winners can earn prize money.

VIDEO:
• https://www.youtube.com/watch?v=8yZMXCaFshs&t=143s

BAGGING
• Bagging (short for bootstrap aggregating) is an ensemble method that attempts to reduce overfitting in classification or regression problems.
• Bagging aims to improve the accuracy and performance of machine learning algorithms.
• It does this by taking random subsets of the original dataset, with replacement, and fitting either a classifier (for classification) or a regressor (for regression) to each subset.
• The predictions for each subset are then aggregated, through majority vote for classification or averaging for regression, which increases prediction accuracy.

| Bagging | Boosting |
| --- | --- |
| Combines predictions that belong to the same type. | Combines predictions that belong to different types. |
| Its main aim is to decrease variance, not bias. | Its main aim is to decrease bias, not variance. |
| Each model receives equal weight. | Models are weighted according to their performance. |
| Each model is built independently. | Each new model depends on the models built before it. |
| Training subsets are drawn from the whole training dataset by random row sampling with replacement. | Each new subset emphasizes the elements that were misclassified by previous models. |
| It tries to solve the overfitting problem. | It tries to reduce bias. |
| If the classifier is unstable (high variance), apply bagging. | If the classifier is stable and simple (high bias), apply boosting. |
| The base classifiers are trained in parallel. | The base classifiers are trained sequentially. |
| Example: the Random Forest model uses bagging. | Example: AdaBoost uses boosting. |

STEPS OF BAGGING:
• Step 1: Multiple subsets with the same number of tuples are created from the original dataset, selecting observations with replacement.
• Step 2: A base model is created on each subset.
• Step 3: Each model is trained in parallel on its own training set, independently of the others.
• Step 4: The final predictions are determined by combining the predictions from all models.

APPLICATION OF THE BAGGING:
1. IT:
• Bagging can improve the precision and accuracy of IT systems, such as network intrusion detection systems.
• Research in this area looks at how Bagging can improve the accuracy of network intrusion detection and reduce the rate of false positives.
2. Environment:
• Ensemble methods, including Bagging, have been applied in the field of remote sensing. One study shows how it has been used to map the types of wetlands within a coastal landscape.
3. Finance:
• Bagging has also been used with deep learning models in the finance industry to automate critical tasks, including fraud detection, credit risk evaluation, and option pricing problems. One study demonstrates how Bagging, among other machine learning techniques, has been used to assess loan default risk. Another highlights how Bagging helps limit risk by preventing credit card fraud within banking and financial institutions.
4. Healthcare:
• Bagging has been used to form medical data predictions. Research shows that ensemble methods have been used for various bioinformatics problems, including gene and protein selection, to identify a specific trait of interest. More specifically, one study examines its use to predict the onset of diabetes based on various risk predictors.

RANDOM FOREST
• Random Forest is a popular machine learning algorithm that belongs to the family of supervised learning techniques.
• It can be used for both Classification and Regression problems in ML.
• It is based on the concept of ensemble learning, which is the process of combining multiple classifiers to solve a complex problem and to improve the performance of the model.
• "Random Forest is a classifier that contains a number of decision trees on various subsets of the given dataset and takes the average to improve the predictive accuracy of that dataset."
• Instead of relying on one decision tree, the random forest takes the prediction from each tree and predicts the final output based on the majority vote of those predictions.
• A larger number of trees in the forest generally leads to more stable accuracy and helps reduce the risk of overfitting, although the gains level off beyond a certain point.

ASSUMPTIONS FOR RANDOM FOREST
• Since the random forest combines multiple trees to predict the class of the dataset, some decision trees may predict the correct output while others may not. Taken together, however, the trees are more likely to predict the correct output. Two assumptions therefore make for a better Random Forest classifier:
  • The feature variables of the dataset should contain actual values so that the classifier can predict accurate results rather than guessed results.
  • The predictions from each tree must have very low correlations.

HOW DOES RANDOM FOREST ALGORITHM WORK?
• Step-1: Select K random data points from the training set.
• Step-2: Build the decision tree associated with the selected data points (subset).
• Step-3: Choose the number N of decision trees that you want to build.
• Step-4: Repeat Steps 1 and 2 until N trees have been built.
• Step-5: For new data points, find the prediction of each decision tree, and assign the new data points to the category that wins the majority vote.

APPLICATION:
1. Banking: The banking sector mostly uses this algorithm to identify loan risk.
2. Medicine: With the help of this algorithm, disease trends and disease risks can be identified.
3. Land Use: Areas of similar land use can be identified with this algorithm.
4. Marketing: Marketing trends can be identified using this algorithm.

ADVANTAGES OF RANDOM FOREST
• Random Forest is capable of performing both Classification and Regression tasks.
• It is capable of handling large datasets with high dimensionality.
• It enhances the accuracy of the model and helps reduce overfitting.
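
The random forest steps above (random sampling, one tree per sample, majority vote) can be sketched in plain Python. This is only an illustrative sketch under stated assumptions: each "tree" is simplified to a single-split decision stump, the per-tree random feature selection that real random forests also use is omitted, and the names `bootstrap_sample`, `fit_stump`, `fit_forest`, and `predict` are hypothetical helpers, not part of any library.

```python
import random
from collections import Counter


def bootstrap_sample(X, y, rng):
    """Step 1: draw len(X) rows at random, with replacement."""
    idx = [rng.randrange(len(X)) for _ in range(len(X))]
    return [X[i] for i in idx], [y[i] for i in idx]


def fit_stump(X, y):
    """Step 2 (simplified): a one-split 'tree' with the fewest training errors."""
    majority = Counter(y).most_common(1)[0][0]
    best = None  # (errors, feature, threshold, left_label, right_label)
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            if not left or not right:
                continue  # this threshold does not split the sample
            l_lab = Counter(left).most_common(1)[0][0]
            r_lab = Counter(right).most_common(1)[0][0]
            errors = sum(lab != l_lab for lab in left)
            errors += sum(lab != r_lab for lab in right)
            if best is None or errors < best[0]:
                best = (errors, f, t, l_lab, r_lab)
    if best is None:  # no valid split: always predict the majority class
        return lambda row: majority
    _, f, t, l_lab, r_lab = best
    return lambda row: l_lab if row[f] <= t else r_lab


def fit_forest(X, y, n_trees, seed=0):
    """Steps 3 and 4: repeat sampling and fitting until n_trees models exist."""
    rng = random.Random(seed)
    return [fit_stump(*bootstrap_sample(X, y, rng)) for _ in range(n_trees)]


def predict(forest, row):
    """Step 5: assign the category that wins the majority vote."""
    votes = Counter(model(row) for model in forest)
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    # Toy, made-up data: one feature, two well-separated classes.
    X = [[1.0], [2.0], [3.0], [8.0], [9.0], [10.0]]
    y = [0, 0, 0, 1, 1, 1]
    forest = fit_forest(X, y, n_trees=25)
    print(predict(forest, [0.5]), predict(forest, [12.0]))
```

An odd number of trees is used here so that a two-class vote cannot tie. For regression, the vote in `predict` would be replaced by an average of the individual predictions.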
DISADVANTAGES OF RANDOM FOREST
• Although random forest can be used for both classification and regression tasks, it is less well suited to regression tasks.

VIDEO:
• https://www.youtube.com/watch?v=D_2LkhMJcfY
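
Returning to the K-fold cross-validation procedure described earlier in this chapter, the rotation of test folds can be sketched as follows. This is a minimal sketch, assuming the samples are addressed by index and are not shuffled (in practice the data is usually shuffled first); `k_fold_indices` is a hypothetical helper name, not a library function.

```python
from typing import Iterator, List, Tuple


def k_fold_indices(n_samples: int, k: int) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, test_indices) once for each of the k folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    # Spread any remainder so that fold sizes differ by at most one sample.
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        test = indices[start:start + size]                 # the reserved fold
        train = indices[:start] + indices[start + size:]   # the other k-1 folds
        yield train, test
        start += size


if __name__ == "__main__":
    # 5-fold example from the text, on a made-up dataset of 10 samples.
    for i, (train, test) in enumerate(k_fold_indices(10, 5), start=1):
        print(f"iteration {i}: test fold = {test}, training folds = {train}")
```

In each iteration a model would be fitted on the `train` indices and scored on the `test` indices; averaging the K scores gives the cross-validated estimate.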