GAN-Boosted Neural Networks for Drilling Bit Penetration Modeling


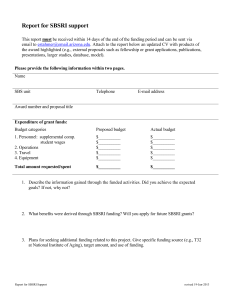

Applied Soft Computing 136 (2023) 110067
Contents lists available at ScienceDirect
Applied Soft Computing
journal homepage: www.elsevier.com/locate/asoc

Developing GAN-boosted Artificial Neural Networks to model the rate of drilling bit penetration

Mohammad Hassan Sharifinasab 1, Mohammad Emami Niri 2,∗, Milad Masroor 3
Institute of Petroleum Engineering, School of Chemical Engineering, College of Engineering, University of Tehran, Tehran, Iran

∗ Corresponding author.
E-mail addresses: hassan.sharifi75@ut.ac.ir (M.H. Sharifinasab), emami.m@ut.ac.ir (M. Emami Niri), milad.masroor7275@ut.ac.ir (M. Masroor).
1 M.Sc. holder of petroleum drilling engineering.
2 Assistant Professor.
3 M.Sc. holder of petroleum production engineering.

https://doi.org/10.1016/j.asoc.2023.110067
1568-4946/© 2023 Elsevier B.V. All rights reserved.

Article history: Received 14 July 2022; received in revised form 16 January 2023; accepted 22 January 2023; available online 2 February 2023.

Keywords: GAN-Boosted Neural Networks; Convolutional Neural Network; Residual structure; Principal component analysis; ROP modeling; Sensitivity analysis

Abstract

The goal of achieving a single model for estimating the rate of drilling bit penetration (ROP) with high accuracy has been the subject of many efforts. Analytical methods and, later, data-based techniques were used for this purpose. However, despite their partial effectiveness, these methods were inadequate for establishing models with sufficient generality. 

Based on deep learning (DL) concepts, this study develops an innovative approach that produces general, boosted models. These models estimate ROP more accurately than other techniques. A vital component of this approach is a deep Artificial Neural Network (ANN) structure known as the Generative Adversarial Network (GAN). The GAN structure is combined with regressor (predictive) ANNs to boost their performance when estimating the target parameter. The predictive ANNs of this study include MultiLayer Perceptron Neural Network (MLP-NN) and 1-Dimensional Convolutional Neural Network (1D-CNN) structures. 

More specifically, the key idea of our approach is to exploit the GAN's ability to produce fake samples comparable to true samples within predictive ANNs. The proposed approach therefore introduces a two-step procedure for developing predictive models. In the first step, the GAN structure is trained with the target of the problem as the input feature. The GAN's generator, which after training can produce fake ROP samples similar to the true ones, is frozen. It then replaces the output-layer neuron of the predictive ANNs. In the second step, the final predictive ANNs that carry the frozen trained generator, called GAN-Boosted Neural Networks (GB-NNs), are trained to make predictions. This approach reduces the computational load of the predictive model training process and increases its quality, so the performance of the predictive ANN models is improved. 

An additional innovation of this research is the use of a residual structure during 1D-CNN training. It improved the performance of the 1D-CNN by combining the input data with the features extracted from the inputs. This study revealed that the GB-Res 1D-CNN model, a GAN-Boosted 1-Dimensional Convolutional Neural Network with a Residual structure, gives the most accurate predictions. The validity of the GB-Res 1D-CNN model is confirmed by its successful application to a blind well. As the final step of this study, we conducted a sensitivity analysis to identify the effect of different parameters on the predicted ROP. As expected, the DS and DT parameters significantly affect the model-estimated ROP.
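The second step of the two-step procedure summarized above can be made concrete with a minimal NumPy sketch. It is not the authors' implementation, and it rests on several assumptions:

- The data are synthetic.
- The layer sizes and learning rate are arbitrary.
- The first step (GAN training) is replaced by a hypothetical placeholder generator `G(z) = mu + sigma * z`, whose two constants are simply set from the target's mean and standard deviation.
- The names `GEN`, `generator`, `forward` and `mse` are illustrative.

What the sketch does show is the mechanism. The generator takes the place of the output neuron and its parameters stay frozen. Gradients pass through it, but only the hidden part that produces the latent value is updated.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in data (not the study's dataset): 200 samples, 3 inputs.
X = rng.normal(size=(200, 3))
y = 10.0 + 3.0 * np.tanh(X @ np.array([0.8, -0.5, 0.3]))

# Step 1 placeholder (hypothetical): an affine "generator" mapping a standard-normal
# latent value onto the target's scale. In the paper this is a GAN-trained generator.
GEN = {"mu": float(y.mean()), "sigma": float(y.std())}

def generator(z):
    """Frozen generator: the parameters in GEN are never updated below."""
    return GEN["mu"] + GEN["sigma"] * z

# Step 2: the trainable hidden part produces the latent value fed to the generator.
W1 = rng.normal(scale=0.5, size=(3, 8))
b1 = np.zeros(8)
W2 = rng.normal(scale=0.5, size=(8, 1))
b2 = np.zeros(1)

def forward(X):
    h = np.tanh(X @ W1 + b1)        # hidden part
    z = (h @ W2 + b2).ravel()       # latent value handed to the generator
    return h, z, generator(z)       # the frozen generator estimates the target

def mse(pred):
    return float(np.mean((pred - y) ** 2))

gen_before = dict(GEN)
loss_start = mse(forward(X)[2])
lr = 0.05
for step in range(500):
    h, _, pred = forward(X)
    d_pred = 2.0 * (pred - y) / len(y)              # dMSE/dpred
    d_z = d_pred * GEN["sigma"]                     # chain rule through the frozen generator
    dW2 = h.T @ d_z[:, None]
    db2 = d_z.sum(keepdims=True)
    d_h = (d_z[:, None] @ W2.T) * (1.0 - h ** 2)    # back through tanh
    dW1 = X.T @ d_h
    db1 = d_h.sum(axis=0)
    # Only the hidden-part parameters are updated; GEN stays frozen.
    W1 -= lr * dW1
    b1 -= lr * db1
    W2 -= lr * dW2
    b2 -= lr * db2
loss_end = mse(forward(X)[2])
print(f"training MSE: {loss_start:.3f} -> {loss_end:.3f}")
```

Replacing the placeholder with a generator actually trained inside a GAN would leave this structure unchanged. Only the frozen mapping from latent value to ROP would become a learned network.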
1. Introduction

The rate of drilling bit penetration (ROP) is the speed at which a drilling bit penetrates a formation to deepen the borehole. It is usually measured at each depth level in units of speed, typically meters per hour. ROP is an essential parameter of drilling operations, as it can greatly affect drilling performance and economic efficiency [1]. 

ROP depends on drilling parameters and formation characteristics. Drilling parameters can be categorized into controllable and uncontrollable factors [2]. The controllable parameters can be modified without adversely affecting drilling operations. They include drilling fluid flow rate (Q), drill string rotation speed (DS), standpipe pressure (SPP), bit torque (T), and weight on bit (WOB). On the other hand, uncontrollable factors cannot easily be altered because of geological and economic concerns [3]. They include drilling fluid density, rheological properties, and drilling bit diameter.

Depending on the borehole condition and formation properties, the drilling engineer can adjust the controllable parameters to achieve the maximum drilling speed. These adjustments rely on experience or on equations and models. It is therefore necessary to derive mathematical equations/models that quantify the relationship between the controllable drilling parameters and ROP. Such models help determine the optimal values of the controllable parameters under any drilling operational conditions [2,4].

ROP estimation models can be classified into physics-based (or traditional) and data-driven (i.e., statistical or machine learning) models [5]. Traditional ROP prediction models are developed from physical laws and incorporate the effects of drilling parameters and formation characteristics into the prediction process [6–8]. However, they often give weak predictions because they rely on empirical coefficients [9]. These coefficients require supporting data (such as bit parameters and mud properties) and apply to only one facies type, because lithology plays a vital role in determining them. The coefficients of traditional models must also be fitted to offset wells as accurately as possible, which is often challenging in practice [10–12].

In this context, intelligent techniques, particularly machine learning (ML) methods, have been used to address these problems of traditional models. ML gives computers the ability to analyze and uncover relationships among the features of a dataset using mathematical algorithms. In fact, machines can learn from data samples and help solve nonlinear or complex problems that are difficult to solve analytically [13–15].

Over the years, scientists and engineers have widely used ML techniques to address complex modeling, design, and optimization problems [16–20]. In particular, subsurface drilling specialists have employed ML-based approaches to monitor, identify, predict, and describe trends and patterns among various geological and drilling parameters in real time [e.g., 21–24]. ANNs have been the most popular choice for ROP prediction, as reported by various researchers [e.g., 25–33]. Other shallow ML algorithms have also been used in ROP modeling. For example, Random Forest (RF) [27,34] and Support Vector Machine (SVM) [9,25] models have been built for ROP estimation.

Shallow ANNs (e.g., ANNs with a single hidden layer) have obtained acceptable results in ROP estimation. However, the complexity of the relationships between ROP and its related parameters limits the generalizability of this ML method. As these relationships become more complex, the capability of shallow ANNs decreases for two reasons:
(1) shallow ANNs cannot model interactions among their input parameters;
(2) the performance of shallow ANNs does not improve considerably as the number of training samples increases, especially beyond 7000 samples.

Recent progress in DL and image-based artificial intelligence (AI) methods has shifted research toward them. DL techniques usually have more layers and are therefore better able to deal with complex problems. They can also model interactions between parameters in the input layer. This enables the extraction of complex nonlinear patterns and accounts for the effects of parameters on each other [35]. 

Convolutional Neural Networks (CNNs) are a widely used subset of DL techniques. Convolutional units called kernels operate in the hidden layers of CNNs and apply the convolution operation to the input layer. This commonly involves a layer that computes a dot product between the kernel and the input-layer matrix. Unlike in shallow ANNs, this operation lets the CNN parameters adapt to the relationships between the elements of the input matrix. In addition, kernels enable the network to handle data of various dimensions, such as numerical data [36] and image data [37]. CNNs with various structures have been used in many classification and regression problems. This is thanks to the ability of convolution kernels to extract features effectively while preventing information loss and overfitting.

For example, Matinkia et al. [38] applied a CNN to construct a regression model for estimating ROP. Masroor et al. [39,40] showed that a 1D-CNN (compatible with 1D samples) handles a regression problem better than ML methods do. They also showed two further ways to improve the predictive performance of convolutional neural networks: transferring the samples into a higher-dimensional space to use 2D convolution units, and implementing a particular structure called the residual structure. Skip connections in residual structures place the patterns of the input layer next to the patterns extracted by the feature extraction section. As a result, linear relationships in the input layer flow directly into the learning section along with the extracted features.

In this study, we propose a boosting technique based on the Generative Adversarial Network (GAN) to reinforce shallow and deep ANNs in the ROP estimation problem; the resulting models are called GB-NNs. Specifically, a GAN structure is incorporated into the model before the learning process starts. The whole process follows a two-step training procedure:
(i) a GAN-training step, after which the predictive ANNs are combined with the GAN's generator;
(ii) a GB-NN-training step.
This procedure reduces overfitting and yields more accurate ROP predictions. We demonstrate the following:
(1) boosting shallow and deep models with a GAN structure can enhance accuracy in ROP estimation;
(2) the residual form of the 1D-CNN improves its performance;
(3) the deep ANNs outperform the shallow ones.
Finally, we introduce the most accurate model, GB-Res 1D-CNN, a deep NN model with a residual structure reinforced by the GAN network.

An essential preprocessing step is choosing the optimal features as network inputs. The number of features must therefore be reduced while maintaining the accuracy and performance of the model. Among the techniques available for extracting the most relevant features from the input dataset, we employed Principal Component Analysis (PCA) [41]. PCA reduces the complexity of the models and improves their performance.

2. Theory

This study aims to enhance the performance of predictive ANNs in the ROP modeling problem, and it uses the GAN structure for this purpose. A part of the GAN structure called the generator is incorporated into the predictive ANNs, which gives boosted structures known as GB-NNs. This section therefore introduces ANNs and describes the networks used, namely the MLP, the 1D-CNN, and the GAN structure. Finally, it explains the development process of GB-NNs.

2.1. Artificial Neural Networks (ANNs)

The ANN is a widely used supervised ML technique for solving regression and classification problems. It is based on a network of interconnected artificial neurons and represents an information-processing procedure that behaves somewhat like an actual brain. Training an ANN means fitting a set of parameters called weights and biases on a dataset to determine a function

$$f(\cdot): \mathbb{R}^{m} \rightarrow \mathbb{R}^{o} \qquad (1)$$

where m is the number of input dimensions and o is the number of output dimensions. A non-linear approximator for classification or regression can thus be learned from a set of features and a target. 

Typically, ANNs are composed of three main components built from non-linear neurons: an input layer, an output layer, and a hidden part that may contain one or more layers. Every neuron except the input nodes therefore has a non-linear activation function. Several activation functions exist, but sigmoid and ReLU are the most common. The structure, architecture, and processing units of an ANN change according to the type of problem. The following sections examine the types of ANNs, and their underlying architectures, used in this study.

Fig. 1. The structure of MLP-NN with a single hidden layer.

2.1.1. Multilayer Perceptron Neural Network (MLP-NN)

One of the simplest types of ANN is a fully connected MLP-NN with a single hidden layer. This network has an input layer, a hidden layer with a variable number of neurons, and an output layer, all linked by weights. The output layer may consist of a single neuron (for regression tasks) or multiple neurons (for classification tasks). Fig. 1 shows the structure of an MLP-NN with a single hidden layer for a regression task. The output of this network can be expressed as Eq. (2):

$$F(X) = f\left(\left(\sigma\left(X^{T}\cdot\theta_{\mathrm{hidden-input}}\right)\right)^{T}\cdot W_{\mathrm{output-hidden}}\right) \qquad (2)$$

where:
- $X^{T}$ is the transposed feature matrix;
- $\theta_{\mathrm{hidden-input}}$ is the matrix of weights connecting the input layer to the hidden layer;
- $W_{\mathrm{output-hidden}}$ is the matrix of weights connecting the hidden layer to the output layer;
- $\sigma$ is a non-linear activation function;
- $f$ is a linear activation function.

2.1.2. One-Dimensional Convolutional Neural Network (1D-CNN)

A 1D-CNN consists of two major parts: the feature extraction part (convolutional and pooling layers) and the learning part (fully connected layers). Numerous combinations of layers are possible within these two sections. Additional layers are typically used. One example is the flatten layer, which converts the output of the feature extraction section (the feature map) into a one-dimensional matrix for use in the learning section. It is also common to incorporate a dropout layer to prevent overfitting. Fig. 2 illustrates the architecture of a deep 1D-CNN; this network includes two convolutional layers, two pooling layers, and a fully connected layer.

Fig. 2. A general structure of 1D-CNN.

Convolutional layer: In 1D-CNNs, the convolutional layer is the core component of the network, and it consists of convolution kernel units. A kernel is a spatial numerical matrix that acts as a processing unit (the counterpart of a neuron in fully connected neural networks) and performs convolution on the input data. Each kernel unit moves along the input matrix and produces a feature matrix, called a feature map, which enters the next layer. Eq. (3) gives the size of the feature map. As shown in Fig. 2, the kernel units are stacked in the convolutional layer. 

The structure of the convolutional layer depends on the size, number, stride, and padding of its kernels. The kernel size is a spatial area representing a local (or restricted) receptive field over the input feature matrix. The kernel is called one-dimensional when the input matrix is a vector. The stride defines how the kernel moves through the inputs. Padding adds values to (or removes values from) the input dimension so that the kernel unit can traverse the entire dimension smoothly.

Fig. 3 illustrates the operation carried out by a one-dimensional kernel unit. As the kernel moves over different regions of the input matrix, it computes a dot product at each position. The stride parameter sets the step size of the kernel. With a stride of 1, the kernel shifts by one pixel at each iteration; with a stride of 2, it moves two pixels per iteration. The padding parameter determines how the kernel covers the input matrix. For example, with padding 0 (Fig. 4A), no border is added to the input matrix, so the kernel center is never placed on the border pixels of the input matrix. With padding 1 (Fig. 4B), an extra margin of zeros is added around the input matrix. For the kernel center to span all pixels of the input matrix, the padding value must satisfy Eq. (4). With a stride of 1, the feature map then has the same size as the input matrix.

$$\text{Feature map size} = \frac{\text{Input volume size} - \text{Kernel size} + 2\,(\text{Padding})}{\text{Stride}} + 1 \qquad (3)$$

$$\text{Padding value} = \frac{\text{Kernel size} - 1}{2} \qquad (4)$$

Pooling layer: Pooling reduces the size of the feature map (Fig. 5).
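Eqs. (3) and (4) and the sliding dot product described above can be checked with a small NumPy sketch. It slides a one-dimensional kernel over a short input with different strides and paddings, and it also applies the pooling step just introduced. The function names (`feature_map_size`, `same_padding`, `conv1d`, `pool1d`) are illustrative and not taken from the study, and the input and kernel values are arbitrary. As in most CNN libraries, the "convolution" is implemented as cross-correlation (the kernel is not flipped).

```python
import numpy as np

def feature_map_size(input_size: int, kernel_size: int, padding: int = 0, stride: int = 1) -> int:
    """Eq. (3), using floor division when the stride does not divide evenly."""
    return (input_size - kernel_size + 2 * padding) // stride + 1

def same_padding(kernel_size: int) -> int:
    """Eq. (4): padding that keeps the feature map as long as the input (stride 1, odd kernel)."""
    return (kernel_size - 1) // 2

def conv1d(x: np.ndarray, kernel: np.ndarray, stride: int = 1, padding: int = 0) -> np.ndarray:
    """Slide a 1D kernel along x and take a dot product at each position."""
    x_pad = np.pad(x, padding, mode="constant")   # zero margin of `padding` values on each side
    k = len(kernel)
    n_out = feature_map_size(len(x), k, padding, stride)
    return np.array([x_pad[i * stride:i * stride + k] @ kernel for i in range(n_out)])

def pool1d(fmap: np.ndarray, size: int = 2, mode: str = "max") -> np.ndarray:
    """Non-overlapping pooling: keep the max (or mean) of each block of `size` values."""
    n = len(fmap) // size * size                  # drop an incomplete trailing block
    blocks = fmap[:n].reshape(-1, size)
    return blocks.max(axis=1) if mode == "max" else blocks.mean(axis=1)

x = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0])
kernel = np.array([1.0, 0.0, -1.0])

for stride, padding in [(1, 0), (2, 0), (1, same_padding(len(kernel)))]:
    fmap = conv1d(x, kernel, stride=stride, padding=padding)
    print(f"stride={stride}, padding={padding}: length {len(fmap)}, values {fmap}")

print(pool1d(np.array([1.0, 4.0, 2.0, 3.0, 5.0, 0.0]), size=2, mode="max"))
```

With a 7-value input and a 3-value kernel, the three settings give feature maps of length 5, 3 and 7, in agreement with Eq. (3). The third case uses the padding of Eq. (4) and therefore preserves the input length.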
A maximum pooling layer selects the highest value in every region, while an average pooling layer takes the average value. A pooling layer is placed after each convolutional layer.

Flatten layer: The flatten layer is commonly used in the transition from the convolutional layers to the fully connected layers. It makes the produced feature maps suitable for entering the fully connected layer.

Fully connected layer(s): This section is responsible for learning. It consists of fully connected neural layers, in which each node is connected to every node in the previous layer.

Fig. 3. Convolution operation through a kernel unit.

Fig. 4. Moving kernel on input matrix. (A) Padding = 0. (B) Padding = 1.

Fig. 5. Pooling operation.

2.1.3. Residual 1D-Convolutional Neural Network (Res 1D-CNN)

In this study, a residual structure [42] is added to the general 1D-CNN architecture to improve the model's performance. The deeper and more complex an ANN is, the more likely it is to suffer from vanishing/exploding gradients, which degrade performance. He et al. [42] developed a deep residual architecture called ResNet to overcome this problem. The ResNet architecture is a stack of residual blocks. In a residual block, the output of one layer is taken and added to the output of a deeper layer. This crossing of layers in a residual architecture is called a skip connection or shortcut. With a residual form, the depth of the model can be increased without adding extra parameters to the training process or further computational complexity. 

Fig. 6 illustrates the configuration of a single residual block and its skip connection. X, the output of the preceding layer, is used as the input of another layer (e.g., a convolutional block), where the function F converts it to F(X). After this transformation, the original data X is added to the transformed result, so F(X) + X is the final output of the residual block.

Fig. 7 gives a schematic overview of the Res 1D-CNN architecture developed from these modifications. Throughout this study, the input layer is placed next to the output of the 1D-CNN feature extraction section. The patterns in the input layer therefore remain intact alongside the extracted features. Thus, the learning section of the 1D-CNN can use more information.

Fig. 6. Residual block; each rectangle represents a function layer (F).

Fig. 7. The architecture of the Res 1D-CNN model. The input layer is followed by a sequence of convolutional layers in a residual block that extract a feature map. The input layer is also concatenated with the flattened feature map through a skip connection.

2.1.4. Generative Adversarial Network (GAN)

A GAN is a two-part deep neural network. It receives actual data (true samples) and generates synthetic data (fake samples) that are as similar as possible to the real ones. It contains two distinct neural components: a generator and a discriminator. 

During training, the generator learns to convert a random matrix drawn from a normal distribution, called the latent matrix, into synthetic data similar to the real data. The discriminator, in turn, is trained to distinguish the generated synthetic data from true data, even when they differ only slightly. Training is therefore an adversarial interaction between the generator and the discriminator. It ultimately yields a network that can produce data similar to the actual data. 

Fig. 8 shows a schematic view of the GAN structure. The generator uses the latent matrix to obtain a fake target value. This fake target value and the true target value enter the discriminator section. The discriminator then returns a probability between 0 and 1, where 1 indicates that the target value is real and 0 indicates that it is fake, i.e., produced by the generator network.

Fig. 8. Schematic representation of the GAN structure, consisting of a generator and a discriminator. The network receives a random matrix called the latent matrix and creates a fake target sample similar to a true target sample.

2.2. Development of GAN-Boosted Neural Networks (GB-NNs)

GAN boosting reinforces a predictive ANN with a GAN structure. It combines an arbitrary predictive ANN, which receives the inputs, with a GAN structure that has already been trained on the outputs of the problem. Network training is therefore conducted in two stages. In the first stage, a GAN structure is trained using the true target values contained in the data, which are the outputs of the problem. Then the trained GAN generator is extracted and frozen, and it replaces the output layer of the predictive ANN. The resulting ANN enters the second stage of training. Here, "frozen" means that the weights and biases do not change during training. The weights and biases of the generator therefore stay fixed when it is integrated with the predictive ANN during GB-NN training.

Figs. 9 and 10 show an unmodified (unboosted) predictive ANN and a modified (boosted) predictive ANN, respectively. According to Fig. 9, an ANN comprises three parts: an input layer, a hidden neural layer (or a section containing several layers), and an output layer with one neuron. Depending on the number, type, and structure of its layers, the network may be an MLP-NN with a single hidden layer, a 1D-CNN, or a Res 1D-CNN. In our problem (ROP prediction), the ANN's hidden part receives inputs such as drilling parameters and conventional well logs through the input layer. In the output layer, the output of the hidden part, a matrix of specific dimensions, enters a neuron that estimates the target value.

Assume that a GAN structure is familiar with the target samples. Its generator can then convert a latent matrix into a fake target value that closely matches the true one. As illustrated in Fig. 10, this generator can be removed from the GAN structure and used in place of the neuron in the output layer of a predictive ANN. The ANN's hidden part is then trained so that it produces a normally distributed matrix to feed the frozen generator. This reduces the computational load of the predictive ANN for two reasons:
(1) the generator's weights, which act on the latent matrix produced by the ANN's hidden part, are frozen during the training of the predictive ANN, so only the weights connecting the input layer to the hidden part need to be altered;
(2) these input-to-hidden weights are adjusted to produce a matrix with a normal distribution, so the derivative calculations of the training process are less complex.
In this method, the output of the hidden part enters the generator as a latent matrix, and the generator, rather than an output-layer neuron, estimates the target value. Fig. 11 shows a flowchart of the two-step training process of the GB-NNs.

3. Method

As illustrated in Fig. 12, the proposed workflow for ROP prediction consists of three main stages: data gathering and description (Stage 1), data preprocessing and feature selection (Stage 2), and model training and evaluation (Stage 3).

Fig. 9.
Schematic of an unboosted predictive ANN. This network can be an MLP-NN, a 1D-CNN, or a Res 1D-CNN, depending on the number, type, and structure of its hidden layers.

Fig. 10. Schematic of a predictive ANN boosted by a pre-trained, frozen GAN generator (GB-NN). In the GAN training phase, the generator's weights are adjusted to produce fake ROP values. The generator is then frozen and placed into the predictive ANN in place of the single output neuron.

Fig. 11. A flowchart of the training process of GB-NNs.

3.1. Data gathering and description

The dataset used in this study is classified into two categories: drilling data and well-logging data. The drilling data can be obtained from conventional drilling-related logs, such as master and drilling logs. These logs come from measuring and adjusting the parameters of drilling operations at the surface. Other sources used to extract and analyze data on well drilling operations include Final Drilling Reports, Daily Drilling Reports, and Daily Mud Logging Reports. Well-logging data, as the name implies, are obtained by well-logging tools and measure physical properties of the subsurface formations. Both data types, which differ from well to well, are placed next to each other to form the dataset. 

In this study, drilling and well-logging data from three wells of an oil field in southwest Iran were used to build the final database: two training wells (A and B) and one blind well (C). The data belong to a specific depth interval of each well, the Sarvak Formation, which is composed of limestone interspersed with layers of shale and anhydrite. Table 1 lists the definitions of all parameters in the dataset, and Table 2 presents the statistical parameters for wells A, B, and C.

Table 1
Drilling and well logging parameters in the dataset.

| Data category | Parameter, unit | Definition | Source |
|---|---|---|---|
| Drilling parameters | ROP, m/h | Drilling bit penetration speed. | The rig site acquisition system |
| | DS, rpm | The drilling string's rotational speed. | |
| | WOB, klbf | The downward force exerted by the drill collars on the drilling bit. | |
| | SPP, psi | Total pressure loss in the drilling system caused by the drilling fluid pressure drop along the fluid circulation path in the well. | |
| | Torque, klbf·ft | The energy required to overcome the rotational friction against the wellbore, the viscous force between the drill string and drilling fluid, and the torque needed to rotate the drilling bit at the bottom of the hole. | |
| Well-logging parameters | MW, g/cc | Drilling fluid density. | Wireline/LWD (Logging While Drilling) tool |
| | Q, gpm | The flow rate of drilling fluid. | |
| | CGR, GAPI | Natural radiation caused by potassium and thorium in the formation. | |
| | DT, µs/ft | The transit time of compressional waves. | |
| | NPHI, dec | Compensated neutron porosity log. | |
| | RHOB, g/cc | Compensated bulk density log. | |

LWD refers to a well-logging technique that provides real-time measurements of the physical characteristics of a subsurface formation as it is drilled.

3.2. Data preprocessing

3.2.1. Outlier elimination and denoising

Outliers and noisy samples in a dataset can adversely affect an ML system's performance [43–45]. An outlier is a sample with an unreasonable value. For example, in the dataset used in this research, a sample with WOB or DS equal to zero and ROP greater than zero can be considered an outlier. Such samples are not physically plausible and must be identified and manually removed from the database. To handle the noisy data efficiently, a smoothing filter called Savitzky–Golay (SG) [46] was employed. The SG filter reduces noise by fitting polynomial functions to the data and replacing the original values with smoother ones.
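The outlier rule and the SG smoothing described above can be sketched in NumPy as follows. This is an illustration under assumptions, not the authors' code:

- The helper names `plausible_rows` and `savgol_smooth` are hypothetical.
- The series in the usage lines is synthetic.
- The filter is written out with `np.polyfit` so that the least-squares fit is explicit. In practice, SciPy's `scipy.signal.savgol_filter(x, window_length, polyorder)` is the usual library routine, and the edge handling below follows the same idea as its default `mode="interp"`.

The window of 45 points and polynomial order of 1 used in the usage lines are the settings this study reports as optimal.

```python
import numpy as np

def plausible_rows(rop, wob, ds):
    """True for rows to keep: drops samples with ROP > 0 while WOB or DS is zero."""
    return ~((rop > 0) & ((wob == 0) | (ds == 0)))

def savgol_smooth(y, window, order):
    """Savitzky-Golay smoothing: fit a least-squares polynomial of `order` to each
    odd-length `window` of points and replace the centre point with the fitted value."""
    y = np.asarray(y, dtype=float)
    if window < 3 or window % 2 == 0 or window > len(y) or order >= window:
        raise ValueError("window must be odd, >= 3, <= len(y), and greater than order")
    half = window // 2
    t = np.arange(window)
    out = np.empty_like(y)
    for i in range(half, len(y) - half):
        coeffs = np.polyfit(t, y[i - half:i + half + 1], order)
        out[i] = np.polyval(coeffs, half)           # fitted value at the window centre
    # Edges: evaluate the fit of the first/last full window at the edge positions.
    out[:half] = np.polyval(np.polyfit(t, y[:window], order), t[:half])
    out[-half:] = np.polyval(np.polyfit(t, y[-window:], order), t[-half:])
    return out

# Usage on a synthetic ROP-like series (not field data).
rng = np.random.default_rng(1)
idx = np.arange(300)
clean = 8.0 + 2.0 * np.sin(idx / 40.0)
noisy = clean + rng.normal(scale=0.8, size=idx.size)
smoothed = savgol_smooth(noisy, window=45, order=1)
print(np.abs(noisy - clean).mean(), np.abs(smoothed - clean).mean())
```

A first-order fit reproduces any straight-line segment exactly. Widening the window therefore mainly trades noise suppression against the flattening of curved sections, which is the trade-off the sensitivity analysis on window size and polynomial order explores.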
Within a selected interval, a polynomial function of order n is fitted to m points by least squares; the interval must contain an odd number of points. Increasing the polynomial order or decreasing the number of points in the interval preserves more of the original data structure and reduces the level of smoothing. It is therefore vital to choose the polynomial order and the number of points in the interval properly. 

We conducted a sensitivity analysis to determine the optimal settings for these two parameters, considering polynomial orders from 1 to 5 and intervals of 3 to 49 points. An ANN with a single hidden layer of 10 neurons (equal to the number of input parameters) was trained with different values of both parameters. It was trained on the filtered training dataset with the Adam optimizer [47] and evaluated on the filtered blind dataset. Adam is an adaptive gradient descent method commonly used in back-propagation (BP) algorithms for training feed-forward neural networks [48,49]. Finally, the correlation coefficient between the actual and predicted values was calculated, as shown in Fig. 13. According to Fig. 13, the optimal polynomial order and interval size are 1 and 45, respectively.

Fig. 12. The proposed workflow for the ROP modeling process: Stage (1) data gathering and description, Stage (2) data preprocessing and feature selection, and Stage (3) model training and evaluation.

Table 2
The summary statistics of drilling and well logging variables for each of the three wells.

| Well, no. of samples | Variable | Minimum | Maximum | Mean | SD |
|---|---|---|---|---|---|
| A, 6551 | ROP | 0.56 | 19.05 | 10.0707 | 4.3533 |
| | WOB | 1.9401 | 18.2543 | 11.2312 | 3.8642 |
| | DS | 46.0 | 138.0 | 124.0146 | 16.9024 |
| | SPP | 478.0 | 2268.0 | 1967.0361 | 318.3065 |
| | Torque | 1.9 | 7.6 | 5.6104 | 0.9104 |
| | MW | 1.3 | 1.36 | 1.3414 | 0.0126 |
| | Q | 212.74 | 502.9 | 441.6996 | 45.4125 |
| | CGR | 2.9766 | 46.7163 | 7.177 | 3.8537 |
| | DT | 49.1127 | 110.6902 | 66.8133 | 8.0663 |
| | NPHI | 0.014 | 0.3266 | 0.1172 | 0.0668 |
| | RHOB | 1.9201 | 2.7506 | 2.5005 | 0.1279 |
| B, 6801 | ROP | 0.1339 | 27.027 | 7.8517 | 4.9718 |
| | WOB | 2.0283 | 35.0535 | 14.1828 | 4.973 |
| | DS | 12.1 | 156.0 | 114.6806 | 22.5396 |
| | SPP | 560.0 | 3336.0 | 2661.6506 | 602.0977 |
| | Torque | 0.7933 | 13.334 | 6.3906 | 1.5358 |
| | MW | 1.16 | 1.53 | 1.3263 | 0.036 |
| | Q | 202.6199 | 1948.0043 | 741.8476 | 160.8168 |
| | CGR | 5.3483 | 46.9711 | 11.4139 | 3.9147 |
| | DT | 42.4247 | 120.3512 | 65.2282 | 7.8237 |
| | NPHI | 0.01 | 0.49 | 0.112 | 0.0727 |
| | RHOB | 2.1386 | 2.7283 | 2.4936 | 0.1129 |
| C, 6631 | ROP | 0.42 | 11.32 | 5.5568 | 2.1178 |
| | WOB | 0.8818 | 20.4809 | 11.9254 | 3.4468 |
| | DS | 82 | 125 | 114.0482 | 8.186 |
| | SPP | 1908 | 2467 | 2246.3163 | 113.9434 |
| | Torque | 2.74 | 7.28 | 5.1966 | 0.716 |
| | MW | 1.3 | 1.38 | 1.343 | 0.0107 |
| | Q | 503.89 | 593.99 | 556.2272 | 20.9716 |
| | CGR | 2.2746 | 68.3711 | 7.264 | 6.0735 |
| | DT | 49.819 | 108.3841 | 64.605 | 7.9607 |
| | NPHI | 0.0189 | 0.4333 | 0.1323 | 0.0673 |
| | RHOB | 2.1596 | 2.6975 | 2.4659 | 0.1271 |

Fig. 13. Correlation coefficients from the ANN model for various interval sizes and polynomial orders of the SG filter.

Fig. 14. Graph comparing measured data (red lines) with denoised data (black lines) for well A.

In Figs. 14, 15, and 16, the graphs of the
various drilling and well-log parameters of all three wells are shown before (red) and after (black) passing through the SG filter.

Fig. 15. Graph comparing measured data (red lines) with denoised data (black lines) for well B.

Fig. 16. Graph comparing measured data (red lines) with denoised data (black lines) for well C.

3.2.2. Feature selection

A feature selection procedure reduces the number of input variables, and hence the computational requirements, and enhances the performance of the predictive model. We used three feature selection methods to determine the key input variables in the training dataset (wells A and B): (1) linear regression analysis, (2) non-linear analysis using ANNs, and (3) PCA.

Linear regression analysis: Linear regression analysis uses correlation coefficients to examine the linear relationships between the input variables and the target parameter(s). Features with a small linear correlation with the parameter of interest can be identified and removed. This method can also identify input features that are strongly linearly related to each other, so that redundant ones can be removed and duplicate patterns are not introduced into the training process.

A heat map of the feature correlations is displayed in Fig. 17, showing the correlation coefficients between all parameters. The correlation between ROP and Q (mud flow rate) is negligible. Moreover, SPP has a strong linear correlation with Q (R² greater than 0.9), so Q is removed from the dataset. There is also a high linear correlation among DT, RHOB, and NPHI, which means that two of these three variables should be dropped; which one should remain is addressed in the following steps.

Fig. 17. Feature correlation heat map showing the correlation coefficients between every two features.

Non-linear analysis using ANNs: The non-linear relationships between the features and the target parameter can be examined using ANNs [50]. In an ANN with a hidden layer, each input feature is multiplied by weights, and the sum of these products is passed through a non-linear function to estimate the target parameter. It may therefore be concluded that the weights assigned to each input feature represent the non-linear correlation between that feature and the target parameter.

If vector $X = (x_1, x_2, \ldots, x_M)$ is the ANN input layer and vector $W_j = (w_{1j}, w_{2j}, \ldots, w_{Mj})$ contains the weights of neuron $j$ ($j = 1, 2, \ldots, N$) in the hidden layer, the output of neuron $j$ can be expressed as Eq. (5):

$$o_j = \sigma\left(X^{T} W_j\right) \qquad (5)$$

where $\sigma$ is the non-linear activation function of the ANN's hidden layer, which non-linearly transmits the content of a hidden-layer neuron to the next layer. As illustrated in Fig. 18, each feature is attached to the $N$ hidden-layer neurons by $N$ weights, and the greater the weight attaching a feature to neuron $j$, the greater the effect of that feature on neuron $j$. As shown in Eq. (6), the magnitude of the effect of a particular feature $x_i$ on the ANN hidden layer (its feature importance) may be determined by summing the absolute values of the weights attached to that feature; this sum can then be divided by the corresponding sum over all features. In other words, feature importance is a kind of non-linear correlation between the feature and the ANN output.

$$\text{feature importance}_{x_i} = \frac{\sum_{j=1}^{N} \lvert w_{ij} \rvert}{\sum_{k=1}^{M} \sum_{j=1}^{N} \lvert w_{kj} \rvert} \qquad (6)$$

Fig. 18. A schematic view of an ANN with a single hidden layer. Each node (input feature) in the input layer is connected to the hidden-layer neurons by weights, which indicate the influence of the inputs on those neurons. For instance, weight w11 indicates the impact of feature 1 on hidden-layer neuron 1, while weight w21 indicates the effect of feature 2 on hidden-layer neuron 1.

The non-linear correlations between the features in the training dataset and ROP are reported in Table 3. These values were determined from the ANN with the highest R² among those trained in the data-denoising step. The linear regression analysis concluded that two of DT, NPHI, and RHOB must be removed because of their close linear relationship; however, their high linear correlation with ROP made it difficult to choose which one to retain. After examining the non-linear relationship between these three parameters and ROP, we determined that NPHI and RHOB are significantly less important than DT and should be excluded from the dataset.

Table 3. Non-linear correlations between the dataset features and ROP (feature importance), derived from the ANN weights.
Parameter | Feature importance
DS | 0.474447165
WOB | 0.164021691
SPP | 0.037629097
Torque | 0.101870934
MW | 0.019082706
Q | 0.031409586
CGR | 0.065480516
DT | 0.238409536
NPHI | 0.025232925
RHOB | 0.033376421

Principal component analysis (PCA): PCA is a widely used technique for reducing the dimensionality of datasets [51]. It aims to reduce the number of variables in a dataset while preserving as much information as possible. By definition, PCA finds the directions, called principal components (PCs), that have the highest possible variance, i.e., the directions carrying the most information. PCA transforms the original space into a new one (Fig. 19). The PCs are uncorrelated variables generated by linearly combining the existing variables so that the first PC has the most variance (information), the second PC has the most remaining information, and so forth. The data must be standardized before PCA is applied, which can be achieved using Eq. (7):

$$x_{i,\mathrm{std}} = \frac{x_i - \mathrm{average}_x}{\mathrm{standard\ deviation}_x} \qquad (7)$$

In the next step, the covariance matrix is calculated:

$$\mathrm{Cov.\ Matrix} = \begin{bmatrix} \mathrm{Cov}(p_1, p_1) & \cdots & \mathrm{Cov}(p_1, p_N) \\ \vdots & \ddots & \vdots \\ \mathrm{Cov}(p_N, p_1) & \cdots & \mathrm{Cov}(p_N, p_N) \end{bmatrix}, \qquad N = \text{number of variables in the dataset} \qquad (8)$$

where $\mathrm{Cov}(p_i, p_j)$ is the covariance of the $i$th and $j$th parameters in the dataset.

Once the covariance matrix is generated, its eigenvalues ($\lambda_1, \lambda_2, \ldots, \lambda_N$), which indicate the variance of the corresponding PCs, and its eigenvectors ($v_1, v_2, \ldots, v_N$) are calculated. Each eigenvalue has a corresponding eigenvector with $N$ relative coefficients, $v_i = (RC_{1i}, RC_{2i}, \ldots, RC_{Ni})$, where $RC_{ji}$ represents the effect of feature $j$ on eigenvector $i$. After the eigenvalues and eigenvectors are calculated, the PCs with the largest eigenvalues are selected. Fig. 20 shows that, of all ten PCs, the first six (PC1 to PC6) account for 97.86% of the total variance of the dataset, whereas the other four account for only 2.14%. As a result, the 10-dimensional dataset of this study (a dataset with ten variables) can be condensed into a 6-dimensional dataset.

Table 4. Relative coefficients of the first six PCs.
Parameter v1 v2 v3 v4 v5 v6 DS WOB SPP Torque MW Q CGR DT NPHI RHOB −0.28095 −0.4214 −0.43723 −0.39923 −0.26045 0.048657 −0.03736 −0.13074 0.245571 −0.93617 0.047275 −0.19252 0.018546 0.043967 −0.02924 0.036426 0.012969 0.046121 −0.27941 −0.23612 −0.01542 0.854505 0.275147 0.212894 −0.10539 −0.25636 0.092881 −0.81423 −0.02465 0.325951 0.058472 0.107607 0.266467 −0.21197 −0.11927 0.276378 0.196753 −0.30271 −0.30614 −0.15363 −0.25305 0.181297 −0.43038 −0.44038 0.444217 0.024681 −0.44764 −0.20187 0.232894 0.232689 −0.23217 0.31161 −0.33444 0.754833 0.160608 −0.21609 0.260013 0.091791 0.08324 −0.01008

Table 4 lists the eigenvectors of the first six PCs. Each $v_i$ contains ten relative coefficients that indicate the significance of the corresponding parameters in $PC_i$; the larger the absolute value of a parameter's relative coefficient, the more important that parameter is in the PC. For example, the two largest relative coefficients in PC3 are −0.93617 and 0.245571, which relate to MW and Torque, respectively, meaning that a slight change in these parameters has a substantial impact on PC3. Because the dataset dimension is to be reduced from 10 to 6, the four least significant parameters on each of the six PCs can be identified (marked in red in Table 4), and the parameters that are insignificant across the PCs can be removed. MW is one of the four least important parameters in four directions (PC1, PC2, PC5, and PC6), and, as indicated above, the mean of the ANN weights for this parameter was negligible in the non-linear analysis. Thus, MW is removed from the dataset. Q, which was proposed for removal in the linear regression analysis, has the lowest relative coefficient in three directions (PC1, PC4, and PC6). Additionally, both the linear regression and the non-linear ANN analyses indicated a small correlation between Q and ROP; therefore, Q has been removed from the dataset. Since Q is a function of SPP, using both in modeling would bias the model toward repetitive patterns. RHOB and NPHI, which should be omitted according to the non-linear analysis, are among the four parameters with minimal effects on the variation in three PCs (PC2, PC3, and PC5). In addition, our linear analysis revealed a high correlation among DT and these two parameters, because all three are measures of porosity and are related to each other, which makes one of them sufficient for modeling. Therefore, RHOB and NPHI are excluded from the dataset.

Fig. 19. PCA converts the data space from (X, Y) to (X′, Y′).

Fig. 20. Percentage of variance ($\lambda_i / \sum_{k=1}^{10} \lambda_k$) for different PCs.

3.3. Model training and evaluation

As already mentioned, the dataset used in this study contains samples from wells A and B (training dataset) and well C (blind dataset). To predict ROP, we used an MLP-NN with a single hidden layer (a shallow neural network), a 1D-CNN (a deep convolutional neural network), and a Res 1D-CNN (a modified, residual, deep convolutional neural network), each before and after the boosting process. We also applied a widely accepted physics-based model (the Bingham model) as a baseline for ROP estimation. Each of the applied ANNs has its own set of hyperparameters that must be adjusted before model training, because these hyperparameters affect the accuracy and performance of the models [52]. The PSO algorithm was used to determine the optimal set of hyperparameters for each network [53]. Before boosting, the predictive ANNs are trained on the training dataset, with 30% of it held out as validation samples that are used to set the hyperparameters and to prevent overtraining.

To train GB-NNs, the GAN's generator weights must first be fitted to the ROP samples. Accordingly, the GAN was trained using the ROP samples in the training dataset. Once the GAN is trained, the structure of the predictive ANNs is initialized. Next, the GAN's generator, in a frozen state, replaces the output-layer neuron of each predictive network to form the GB-NN structure. The dataset is split for training GB-NNs in the same way as for training the unboosted ANNs. Finally, the validity and performance of both the unboosted ANNs and the GB-NNs are evaluated on the blind dataset using the statistical metrics in Table 5. Fig. 21 illustrates how the data are split for training and evaluating the models.

Table 5. Mathematical metrics used in this study to evaluate the performance of the models (m is the number of samples).
Metric | Mathematical expression
Correlation coefficient (R) | $R = \dfrac{\sum_{i=1}^{m} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{m} (x_i - \bar{x})^2}\,\sqrt{\sum_{i=1}^{m} (y_i - \bar{y})^2}}$
Root Mean Square Error (RMSE) | $\mathrm{RMSE} = \left(\dfrac{1}{m} \sum_{i=1}^{m} (x_i - y_i)^2\right)^{1/2}$
Mean Absolute Error (MAE) | $\mathrm{MAE} = \dfrac{1}{m} \sum_{i=1}^{m} \lvert x_i - y_i \rvert$
Mean Absolute Percentage Error (MAPE) | $\mathrm{MAPE} = \dfrac{1}{m} \sum_{i=1}^{m} \left\lvert \dfrac{x_i - y_i}{x_i} \right\rvert$

Fig. 21. The segmentation of data during the modeling and validation processes. The depth interval of the Sarvak Formation was selected as the extent of the dataset in wells A, B, and C. Data from wells A and B are used for the model's training process (blue), and data from well C is used for the final validation of the model (green).

4. Experiments, results, and discussions

4.1. Hyper-parameter setting

Each of the three GB-NNs has specific hyper-parameters, which were tuned with the PSO optimization algorithm. The tuned hyper-parameter values of GB-MLP-NN, GB-1D-CNN, and GB-Res 1D-CNN are reported in Table 6. PSO was also used to determine the optimal hyper-parameter values of the unboosted ANNs.

Table 6. PSO-optimized values of the hyper-parameters of GB-MLP-NN, GB-1D-CNN, and GB-Res 1D-CNN.
Hyper-parameter | GAN | GB-MLP | GB-1D-CNN | GB-Res 1D-CNN
Optimization function (optimizer) | Adam | Adam | Adam | Adam
Learning rate | 0.002 | 0.00113 | 0.00021 | 0.0001
Activation functions | Fully connected layers: Leaky ReLU & Linear | Fully connected layers: Leaky ReLU & Linear | Conv1D layers: Leaky ReLU; fully connected layers: ReLU & Linear | Conv1D layers: Leaky ReLU; fully connected layers: ReLU & Linear
Number of samples per gradient update (batch_size) | 16 | 16 | 8 | 64
Number of epochs to train the model | 2000 | 2000 | 2000 | 2000
Validation-loss monitoring to stop training & patience | No | Yes, 50 | Yes, 50 | Yes, 50
Number of layers | Generator: 3; Discriminator: 2 | Fully connected layers: 2 | Conv1D layers: 3; fully connected layers: 2 | Conv1D layers: 3; fully connected layers: 2
Number of neurons | Generator: 20 & 10 & 1; Discriminator: 128 & 1 | Fully connected layers: 50 & 1 | Conv1D layers: 32 & 8 & 32; dense layers: 50 & 1 | Conv1D layers: 32 & 8 & 32; dense layers: 50 & 1

4.2. Effect of the GAN structure

To highlight the effect of the GAN structure on the performance of the predictive ANNs in our ROP prediction problem, we compared the GB-NNs with the unboosted ANN models (no GAN structure involved). Fig. 22 shows that the GB-NNs have lower errors than the unboosted ANNs: their error line (blue) stays closer to a straight line near zero. Fig. 23 shows the actual ROP values versus those estimated by the predictive ANNs. The higher concentration of black dots around the blue line (correlation line) and the closer agreement of the red line (regression line) with the blue line indicate improved results for the GB-NNs. The statistical parameters reported in Table 7 also show that adding the GAN's pre-trained generator improves ROP prediction performance. This improvement occurs for two reasons: (1) the generator weights do not need to be adjusted during model training, and (2) the weights of the hidden part are adjusted so that the output of this part of the network is a matrix with a normal distribution. Thus, adding a pre-trained generator makes the network deeper while reducing the computational load and the complexity of the training process, which ultimately enhances model performance.

We also compared the ANN methods (GB-NNs and original ANNs) with a physics-based method, the Bingham model [6]. All methods were trained and evaluated using the same training dataset (wells A and B) and blind dataset (well C). The statistical results of all approaches on the blind dataset are reported in Table 7, which shows that our proposed ANN models outperform the physics-based method on every performance metric used.

Table 7. Statistical results of the ANN methods and the physics-based Bingham method on the blind dataset (well C).
Method | R | RMSE | MAE | MAPE | Error standard dev.
GB-MLP-NN | 0.9287 | 0.6600 | 0.4656 | 0.0990 | 0.658
GB-1D-CNN | 0.9443 | 0.6016 | 0.4326 | 0.0925 | 0.6
GB-Res 1D-CNN | 0.9690 | 0.4526 | 0.3245 | 0.0713 | 0.4304
Unboosted MLP-NN | 0.9030 | 1.0070 | 0.6950 | 0.1652 | 0.979
Unboosted 1D-CNN | 0.9270 | 0.6743 | 0.4746 | 0.0971 | 0.6685
Unboosted Res 1D-CNN | 0.9520 | 0.5356 | 0.3740 | 0.0821 | 0.5303
Bingham | 0.7275 | 1.8260 | 1.4570 | 0.2393 | 1.315

4.3. Results and statistics of the proposed GB-NNs

This section presents the final results of each GB-NN on the training and blind datasets. The ROP predicted by each of the three models (GB-MLP-NN, GB-1D-CNN, and GB-Res 1D-CNN) is compared with the measured ROP in wells A, B, and C to assess each model's performance and accuracy.
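Each evaluation in this section relies on the four metrics defined in Table 5. As a minimal, dependency-free sketch of those formulas, the function below computes R, RMSE, MAE, and MAPE. The name `regression_metrics` is our own, and we assume that $x_i$ is the measured ROP and $y_i$ the predicted ROP (Table 5 does not state this explicitly), so measured values must be non-zero for MAPE.

```python
import math
from typing import Dict, Sequence


def regression_metrics(measured: Sequence[float], predicted: Sequence[float]) -> Dict[str, float]:
    """Compute R, RMSE, MAE and MAPE following the formulas of Table 5.

    Assumption (not stated in Table 5): x_i is the measured ROP and y_i the
    predicted ROP. Measured values must be non-zero because MAPE divides by x_i.
    """
    m = len(measured)
    if m == 0 or m != len(predicted):
        raise ValueError("inputs must be non-empty and of equal length")

    x_bar = sum(measured) / m
    y_bar = sum(predicted) / m

    # Correlation coefficient R: centred cross-products over the product of spreads.
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(measured, predicted))
    sx = math.sqrt(sum((x - x_bar) ** 2 for x in measured))
    sy = math.sqrt(sum((y - y_bar) ** 2 for y in predicted))
    if sx == 0 or sy == 0:
        raise ValueError("R is undefined when either series is constant")
    r = num / (sx * sy)

    errors = [x - y for x, y in zip(measured, predicted)]
    rmse = math.sqrt(sum(e * e for e in errors) / m)  # (1/m * sum e^2)^(1/2)
    mae = sum(abs(e) for e in errors) / m             # 1/m * sum |e|
    # MAPE as a fraction: Table 5's formula has no factor of 100.
    mape = sum(abs(e / x) for e, x in zip(errors, measured)) / m
    return {"R": r, "RMSE": rmse, "MAE": mae, "MAPE": mape}


if __name__ == "__main__":
    # Toy values for illustration only (not data from the study).
    measured = [2.0, 4.0, 6.0, 8.0]
    predicted = [2.5, 3.5, 6.5, 7.5]
    # Every error has magnitude 0.5, so MAE and RMSE are both 0.5 here.
    print(regression_metrics(measured, predicted))
```

Because the MAPE formula in Table 5 has no factor of 100, the function returns MAPE as a fraction, which is consistent with the magnitudes reported in Tables 7 and 8; multiply by 100 to report it as a percentage.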
Fig. 22. A comparison of the performance of the unboosted predictive ANNs (top row) and the GB-NNs (bottom row) in estimating ROP. The red line displays the actual ROP, the black line the estimated ROP, and the blue line the model's error.

Fig. 23. The linear regression between the actual and estimated ROP values for the unboosted predictive ANNs (top row) and the GB-NNs (bottom row).

For each evaluation, the R, RMSE, MAE, and MAPE performance metrics are calculated. The performance of the GB-MLP-NN, GB-1D-CNN, and GB-Res 1D-CNN models on the blind dataset (well C) is shown in Figs. 24 to 26. Figs. 24(A) to 26(A) show the ROP profile of the blind well; the ROP predicted by the GB-Res 1D-CNN (solid black line) agrees better with the measured ROP (solid red line) than those predicted by the GB-MLP-NN and GB-1D-CNN. Figs. 24(B) to 26(B) show cross-plots of the measured versus predicted ROP for the three models in well C. The black dots representing the predicted ROP in Fig. 26(B) lie closer to the red and blue lines than those in Figs. 24(B) and 25(B), which demonstrates the strong correlation between the measured and predicted ROP values for the GB-Res 1D-CNN. The statistical parameters of the predicted results are listed in Table 8. The GB-Res 1D-CNN yields the highest R and the lowest RMSE, MAE, and MAPE, which demonstrates that it can identify the relationships between the inputs (drilling data and conventional well logs) and ROP more effectively than the other two models.

The errors between the target values and the predicted outputs are shown in Figs. 24(C) to 26(C). The relatively small errors between the measured and predicted ROP values (solid blue line) demonstrate that the ROP predicted by the proposed GB-Res 1D-CNN is reliable: the smaller the error, the higher the confidence in the prediction, and positions with larger errors correspond to large deviations between the predicted and measured ROP. Figs. 24(D) to 26(D) show histograms of the error between the measured and predicted ROP values for the GB-MLP-NN, GB-1D-CNN, and GB-Res 1D-CNN in well C. The mean and standard deviation of the GB-Res 1D-CNN errors are relatively small, which means that its predictions are reliable.

The error-bar comparison of all three models on wells A, B, and C is shown in Fig. 27. The GB-Res 1D-CNN appears to perform best (R closest to 1 and RMSE, MAE, and MAPE closest to 0). Comparing the GB-CNNs (GB-1D-CNN and GB-Res 1D-CNN) with the GB-MLP-NN shows that the GB-CNNs perform better on both the training and blind datasets (Table 8). This advantage stems from the efficient feature extraction of the 1D-CNN. Beyond the inherent advantage of the GB-1D-CNN owing to its deeper structure and pattern-recognition capability, the applied modification (the residual structure) has further improved its performance, as the statistical parameters in Table 8 show. This improvement arises because the residual structure places the input layer next to the output of the 1D-CNN feature-extraction section, so the patterns in the input layer remain intact alongside the extracted features and the learning section of the 1D-CNN can use more information. Considering all the results, the GB-Res 1D-CNN has shown satisfactory stability and generalization capability.

Fig. 24. ROP prediction performance of GB-MLP-NN on the blind dataset. (A) True (targets) versus predicted (outputs) ROP values. (B) Cross-plot of the true versus estimated ROP values. (C) Relative deviation of the GB-MLP-NN ROP predictions versus the corresponding true ROP samples. (D) Histogram of the error between the true and estimated ROP values.

Fig. 25. ROP prediction performance of GB-1D-CNN on the blind dataset. (A) True (targets) versus predicted (outputs) ROP values. (B) Cross-plot of the true versus estimated ROP values. (C) Relative deviation of the GB-1D-CNN ROP predictions versus the corresponding true ROP samples. (D) Histogram of the error between the true and estimated ROP values.

Fig. 26. ROP prediction performance of GB-Res 1D-CNN on the blind dataset. (A) True (targets) versus predicted (outputs) ROP values. (B) Cross-plot of the true versus estimated ROP values. (C) Relative deviation of the GB-Res 1D-CNN ROP predictions versus the corresponding true ROP samples. (D) Histogram of the error between the true and estimated ROP values.

Fig. 27. Comparison of the applied GB-NN models on the training (wells A and B) and blind (well C) datasets in terms of (A) correlation coefficient (R), (B) root mean square error (RMSE), (C) mean absolute error (MAE), and (D) mean absolute percentage error (MAPE).

Table 8. Statistical results of GB-MLP-NN, GB-1D-CNN, and GB-Res 1D-CNN on the blind dataset (well C).
Method | R | RMSE | MAE | MAPE
GB-MLP-NN | 0.9287 | 0.6600 | 0.4656 | 0.0990
GB-1D-CNN | 0.9443 | 0.6016 | 0.4326 | 0.0925
GB-Res 1D-CNN | 0.9690 | 0.4526 | 0.3245 | 0.0713

4.4. Sensitivity analysis

Once the GB-Res 1D-CNN model had been validated, an ROP sensitivity analysis was conducted using the samples of well C; the results are shown in Fig. 28. A sensitivity analysis requires a baseline case. Here, the baseline case is one in which every input feature takes its average value over the well C samples, located at the center of the graph. When each parameter is changed relative to the baseline case, ROP also changes, and this change is shown as a percentage. The analysis shows that DS has the greatest impact on ROP among all features, as the linear and non-linear analyses of the actual well samples also showed. This impact is substantial: for example, a 5% increase in DS increases ROP by approximately 30%. Another feature with a significant effect on ROP is DT; when DT increases, ROP rises sharply. Since NPHI and RHOB were eliminated through the linear and non-linear analyses, DT is the remaining indicator of rock compaction and porosity. Therefore, a change in DT indicates a change in rock strength [54], which explains ROP's sensitivity to DT. Theoretically, Torque and WOB, which are proportional to the energy needed to overcome the rock's mechanical specific energy (MSE), should increase ROP when other conditions remain constant [55]. The model results also reflect this effect, although ROP's sensitivity to Torque and WOB is relatively low. An increase in CGR, which indicates more clay minerals in the formation, represents softening of the drilled rock; under ideal hole-cleaning conditions, this softening increases the drilling speed. However, the sensitivity analysis indicates that CGR has no significant effect on ROP compared with DS and DT. Accordingly, the negative relationship between CGR and ROP observed in the linear analysis of the features may be attributable to the influence of other features that significantly affect ROP.

5. Conclusion

This study proposed an innovative DL-based approach to estimate ROP using drilling data and conventional well logs. Using the GAN structure, we boosted ANN models and eventually developed GB-NN models.
As a first step, GB-NNs were constructed by training the GAN structure. Then the generator, which converts the latent matrix to ROP, was substituted for the output-layer neuron of the predictive ANNs. We concluded that GAN boosting enhances the ROP prediction performance of ANNs. The main reasons for this improvement are as follows: (1) the calculation load during training is reduced because the generator of the GAN has been frozen, and (2) the hidden part is adapted to produce an output with a normal distribution, which enhances the training quality of the network.

As side results of this study, the following conclusions are also drawn: (1) The ANN models outperform the commonly used physics-based Bingham model. (2) The 1D-CNN model performs better than the MLP-NN model, a shallow ANN, in both boosted and unboosted modes. This is due to the communication between the input-layer features created by the convolution units and the extraction of features from the parameters through successive convolutions. (3) The Res 1D-CNN model outperforms the 1D-CNN in both boosted and unboosted modes owing to its residual structure: the input layer is added to the output of the convolution section, so the input-layer patterns remain intact alongside the features extracted from them, and the fully connected section can obtain more information.

Fig. 28. Sensitivity analysis plot of the GB-Res 1D-CNN model.

CRediT authorship contribution statement
Mohammad Hassan Sharifinasab: Conceptualization, Methodology, Software, Coding, Investigation, Writing – original draft, Formal analysis. Mohammad Emami Niri: Conceptualization, Validation, Resources, Supervision, Writing – review & editing. Milad Masroor: Conceptualization, Methodology, Software, Coding, Investigation, Writing – original draft, Formal analysis.

Declaration of competing interest
The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Data availability
The data that has been used is confidential.

References
[1] L.F.F. Barbosa, A. Nascimento, M.H. Mathias, J.A. de Carvalho Jr., Machine learning methods applied to drilling rate of penetration prediction and optimization – A review, J. Pet. Sci. Eng. 183 (2019) 106332, http://dx.doi.org/10.1016/j.petrol.2019.106332.
[2] A. Alsaihati, S. Elkatatny, H. Gamal, Rate of penetration prediction while drilling vertical complex lithology using an ensemble-learning model, J. Pet. Sci. Eng. 208 (2022) 109335, http://dx.doi.org/10.1016/j.petrol.2021.109335.
[3] A.T. Bourgoyne, K.K. Millheim, M.E. Chenevert, F.S. Young, Applied Drilling Engineering, Vol. 2, Society of Petroleum Engineers, Richardson, 1986, p. 514.
[4] O. Bello, J. Holzmann, T. Yaqoob, C. Teodoriu, Application of artificial intelligence methods in drilling system design and operations: A review of the state of the art, J. Artif. Intell. Soft Comput. Res. 5 (2015), http://dx.doi.org/10.1515/jaiscr-2015-0024.
[5] C. Hegde, H. Daigle, H. Millwater, K. Gray, Analysis of rate of penetration (ROP) prediction in drilling using physics-based and data-driven models, J. Pet. Sci. Eng. 159 (2017) 295–306, http://dx.doi.org/10.1016/j.petrol.2017.09.020.
[6] G. Bingham, A new approach to interpreting rock drillability, Technical Manual Reprint Oil Gas J. 1965 (1965) 93.
[7] A.T. Bourgoyne, F.S. Young, A multiple regression approach to optimal drilling and abnormal pressure detection, Soc. Petrol. Eng. J. 14 (04) (1974) 371–384, http://dx.doi.org/10.2118/4238-PA.
[8] H.R. Motahhari, G. Hareland, J.A. James, Improved drilling efficiency technique using integrated PDM and PDC bit parameters, J. Can. Pet. Technol. 49 (10) (2010) 45–52, http://dx.doi.org/10.2118/141651-PA.
[9] C. Soares, K. Gray, Real-time predictive capabilities of analytical and machine learning rate of penetration (ROP) models, J. Pet. Sci. Eng. 172 (2019) 934–959, http://dx.doi.org/10.1016/j.petrol.2018.08.083.
[10] A. Bahari, A. Baradaran Seyed, Trust-region approach to find constants of Bourgoyne and Young penetration rate model in Khangiran Iranian gas field, in: Latin American & Caribbean Petroleum Engineering Conference, OnePetro, 2007, http://dx.doi.org/10.2118/107520-MS.
[11] H. Rahimzadeh, M. Mostofi, A. Hashemi, A new method for determining Bourgoyne and Young penetration rate model constants, Petrol. Sci. Technol. 29 (9) (2011) 886–897, http://dx.doi.org/10.1080/10916460903452009.
[12] M. Najjarpour, H. Jalalifar, S. Norouzi-Apourvari, Half a century experience in rate of penetration management: Application of machine learning methods and optimization algorithms – A review, J. Pet. Sci. Eng. 208 (2022) 109575, http://dx.doi.org/10.1016/j.petrol.2021.109575.
[13] A. Al-AbdulJabbar, A.A. Mahmoud, S. Elkatatny, Artificial neural network model for real-time prediction of the rate of penetration while horizontally drilling natural gas-bearing sandstone formations, Arab. J. Geosci. 14 (2) (2021) 1–14, http://dx.doi.org/10.1007/s12517-021-06457-0.
[33] S. Elkatatny, Real-time prediction of rate of penetration in S-shape well profile using artificial intelligence models, Sensors 20 (12) (2020) 3506, http://dx.doi.org/10.3390/s20123506.
[34] C. Hegde, S. Wallace, K. Gray, Using trees, bagging, and random forests to predict rate of penetration during drilling, in: SPE Middle East Intelligent Oil and Gas Conference and Exhibition, OnePetro, 2015, http://dx.doi.org/10.2118/176792-MS.
[35] S. Chauhan, L. Vig, M. De Filippo De
Grazia, M. Corbetta, S. Ahmad, M. Zorzi, A comparison of shallow and deep learning methods for predicting cognitive performance of stroke patients from MRI lesion images, Front. Neuroinform. 13 (53) (2019), http://dx.doi.org/10.3389/fninf.2019.00053.
[36] S. Harbola, V. Coors, One-dimensional convolutional neural network architectures for wind prediction, Energy Convers. Manage. 195 (2019) 70–75, http://dx.doi.org/10.1016/j.enconman.2019.05.007.
[37] C.L. Yang, Z.X. Chen, C.Y. Yang, Sensor classification using convolutional neural network by encoding multivariate time series as two-dimensional colored images, Sensors 20 (1) (2019) 168, http://dx.doi.org/10.3390/s20010168.
[38] M. Matinkia, A. Sheykhinasab, S. Shojaei, A. Vojdani Tazeh Kand, A. Elmi, M. Bajolvand, M. Mehrad, Developing a new model for drilling rate of penetration prediction using convolutional neural network, Arab. J. Sci. Eng. (2022) 1–33, http://dx.doi.org/10.1007/s13369-022-06765-x.
[39] M. Masroor, M. Emami Niri, A.H. Rajabi-Ghozloo, M.H. Sharifinasab, M. Sajjadi, Application of machine and deep learning techniques to estimate NMR-derived permeability from conventional well logs and artificial 2D feature maps, J. Petrol. Explor. Product. Technol. (2022) 1–17, http://dx.doi.org/10.1007/s13202-022-01492-3.
[40] M. Masroor, M.E. Niri, M.H. Sharifinasab, A multiple-input deep residual convolutional neural network for reservoir permeability prediction, Geoenergy Sci. Eng. (2023) 211420, http://dx.doi.org/10.1016/j.geoen.2023.211420.
[41] C. Gallo, V. Capozzi, Feature selection with non linear PCA: A neural network approach, J. Appl. Math. Phys. 7 (10) (2019) 2537–2554, http://dx.doi.org/10.4236/jamp.2019.710173.
[42] K. He, X. Zhang, S. Ren, J. Sun, Deep residual learning for image recognition, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, pp. 770–778, http://dx.doi.org/10.1109/CVPR.2016.90.
[43] I.
Bratko, Machine learning in artificial intelligence, Artif. Intell. Eng. 8 (3) (1993) 159–164, http://dx.doi.org/10.1016/0954-1810(93)90002-W. [44] M. Cardiff, P.K. Kitanidis, Fitting data under omnidirectional noise: A probabilistic method for inferring petrophysical and hydrologic relations, Math. Geosci. 42 (8) (2010) 877–909, http://dx.doi.org/10.1007/s11004010-9301-x. [45] L.P. Garcia, A.C. de Carvalho, A.C. Lorena, Effect of label noise in the complexity of classification problems, Neurocomputing 160 (2015) 108–119, http://dx.doi.org/10.1016/j.neucom.2014.10.085. [46] A. Savitzky, M.J. Golay, Smoothing and differentiation of data by simplified least squares procedures, Anal. Chem. 36 (8) (1964) 1627–1639, http: //dx.doi.org/10.1021/ac60214a047. [47] D.P. Kingma, J. Ba, Adam: A method for stochastic optimization, 2014, http: //dx.doi.org/10.48550/arXiv.1412.6980, arXiv preprint arXiv:1412.6980. [48] G.D. Garson, Interpreting neural-network connection weights, AI Expert 6 (4) (1991) 46–51, https://dl.acm.org/doi/abs/10.5555/129449.129452. [49] A.T. Goh, Back-propagation neural networks for modeling complex systems, Artif. Intell. Eng. 9 (3) (1995) 143–151, http://dx.doi.org/10.1016/ 0954-1810(94)00011-S. [50] C.H. Su, C.H. Cheng, A hybrid fuzzy time series model based on ANFIS and integrated nonlinear feature selection method for forecasting stock, Neurocomputing 205 (2016) 264–273, http://dx.doi.org/10.1016/j.neucom. 2016.03.068. [51] R.A. Kolajoobi, H. Haddadpour, M.E. Niri, Investigating the capability of data-driven proxy models as solution for reservoir geological uncertainty quantification, J. Pet. Sci. Eng. 205 (2021) 108860, http://dx.doi.org/10. 1016/j.petrol.2021.108860. [52] E. Brenjkar, E.B. Delijani, Computational prediction of the drilling rate of penetration (ROP): A comparison of various machine learning approaches and traditional models, J. Pet. Sci. Eng. 210 (2022) 110033, http://dx.doi. org/10.1016/j.petrol.2021.110033. [53] M. Foysal, F. 
Ahmed, N. Sultana, T.A. Rimi, M.H. Rifat, Convolutional neural network hyper-parameter optimization using particle swarm optimization, in: Emerging Technologies in Data Mining and Information Security, Springer, Singapore, 2021, pp. 363–373, http://dx.doi.org/10.1007/978981-33-4367-2_35. [54] M.D. Zoback, Reservoir Geomechanics, Cambridge University Press, 2010. [55] R. Teale, The concept of specific energy in rock drilling, Int. J. Rock Mech. Min. Sci. Geomech. Abstracts 2 (1) (1965) 57–73, http://dx.doi.org/10.1016/ 0148-9062(65)90022-7. [14] O. Hazbeh, S.K.Y. Aghdam, H. Ghorbani, N. Mohamadian, M.A. Alvar, J. Moghadasi, Comparison of accuracy and computational performance between the machine learning algorithms for rate of penetration in directional drilling well, Petrol. Res. 6 (3) (2021) 271–282, http://dx.doi. org/10.1016/j.ptlrs.2021.02.004. [15] M. Emami Niri, R. Amiri Kolajoobi, M.K. Arbat, M.S. Raz, Metaheuristic optimization approaches to predict shear-wave velocity from conventional well logs in sandstone and carbonate case studies, J. Geophys. Eng. 15 (3) (2018) 1071–1083, http://dx.doi.org/10.1088/1742-2140/aaaba2. [16] H. Haddadpour, M. Emami Niri, Uncertainty assessment in reservoir performance prediction using a two-stage clustering approach: Proof of concept and field application, J. Pet. Sci. Eng. 204 (2021) 108765, http: //dx.doi.org/10.1016/j.petrol.2021.108765. [17] Y. Haghshenas, M. Emami Niri, S. Amini, R.A. Kolajoobi, A physicallysupported data-driven proxy modeling based on machine learning classification methods: Application to water front movement prediction, J. Pet. Sci. Eng. 196 (2021) 107828, http://dx.doi.org/10.1016/j.petrol.2020. 107828. [18] M.S. Jamshidi Gohari, M. Emami Niri, J. Ghiasi-Freez, Improving permeability estimation of carbonate rocks using extracted pore network parameters: A gas field case study, Acta Geophys. 69 (2) (2021) 509–527, http://dx.doi.org/10.1007/s11600-021-00563-z. [19] R.A. Kolajoobi, H. Haddadpour, M. 
Emami Niri, Investigating the capability of data-driven proxy models as solution for reservoir geological uncertainty quantification, J. Pet. Sci. Eng. 205 (2021) 108860, http://dx.doi.org/10. 1016/j.petrol.2021.108860. [20] Q. Gao, L. Wang, Y. Wang, C. Wang, Crushing analysis and multiobjective crashworthiness optimization of foam-filled ellipse tubes under oblique impact loading, Thin-Walled Struct. 100 (2016) 105–112, http://dx.doi.org/ 10.1016/j.tws.2015.11.020. [21] O.E. Agwu, J.U. Akpabio, S.B. Alabi, A. Dosunmu, Artificial intelligence techniques and their applications in drilling fluid engineering: A review, J. Pet. Sci. Eng. 167 (2018) 300–315, http://dx.doi.org/10.1016/j.petrol.2018. 04.019. [22] A. Gowida, S. Elkatatny, A. Abdulraheem, Application of artificial neural network to predict formation bulk density while drilling, PetrophysicsSPWLA J. Formation Eval. Reserv. Description 60 (05) (2019) 660–674, http://dx.doi.org/10.30632/PJV60N5-2019a9. [23] E.A. Løken, J. Løkkevik, D. Sui, Data-driven approaches tests on a laboratory drilling system, J. Petrol. Explor. Product. Technol. 10 (7) (2020) 3043–3055, http://dx.doi.org/10.1007/s13202-020-00870-z. [24] A. Alsaihati, S. Elkatatny, A.A. Mahmoud, A. Abdulraheem, Use of machine learning and data analytics to detect downhole abnormalities while drilling horizontal wells, with real case study, J. Energy Resources Technol. 143 (4) (2021) http://dx.doi.org/10.1115/1.4048070. [25] K. Amar, A. Ibrahim, Rate of penetration prediction and optimization using advances in artificial neural networks, a comparative study, in: Proceedings of the 4th International Joint Conference on Computational Intelligence, Barcelona, Spain, 2012, pp. 5–7. [26] M.M. Amer, A.S. Dahab, A.A.H. El-Sayed, An ROP predictive model in nile delta area using artificial neural networks, in: SPE Kingdom of Saudi Arabia Annual Technical Symposium and Exhibition, OnePetro, 2017, http: //dx.doi.org/10.2118/187969-MS. [27] S. Eskandarian, P. 
Bahrami, P. Kazemi, A comprehensive data mining approach to estimate the rate of penetration: Application of neural network, rule based models and feature ranking, J. Petrol. Sci. Eng. 156 (2017) 605–615, http://dx.doi.org/10.1016/j.petrol.2017.06.039. [28] A.K. Abbas, S. Rushdi, M. Alsaba, M.F. Al Dushaishi, Drilling rate of penetration prediction of high-angled wells using artificial neural networks, J. Energy Resourc. Technol. 141 (11) (2019) http://dx.doi.org/10.1115/1. 4043699. [29] A. Ahmed, A. Ali, S. Elkatatny, A. Abdulraheem, New artificial neural networks model for predicting rate of penetration in deep shale formation, Sustainability 11 (22) (2019) 6527, http://dx.doi.org/10.3390/su11226527. [30] S.B. Ashrafi, M. Anemangely, M. Sabah, M.J. Ameri, Application of hybrid artificial neural networks for predicting rate of penetration (ROP): A case study from Marun oil field, J. Pet. Sci. Eng. 175 (2019) 604–623, http: //dx.doi.org/10.1016/j.petrol.2018.12.013. [31] F. Hadi, H. Altaie, E. AlKamil, Modeling rate of penetration using artificial intelligent system and multiple regression analysis, in: Abu Dhabi International Petroleum Exhibition & Conference, OnePetro, 2019, http: //dx.doi.org/10.2118/197663-MS. [32] A. Al-AbdulJabbar, S. Elkatatny, A.Abdulhamid. Mahmoud, T. Moussa, D. Al-Shehri, M. Abughaban, A. Al-Yami, Prediction of the rate of penetration while drilling horizontal carbonate reservoirs using the self-adaptive artificial neural networks technique, Sustainability 12 (4) (2020) 1376, http://dx.doi.org/10.3390/su12041376. 20