VII. Neural Networks and Learning 11/24/03 1


Supervised Learning

• Produce desired outputs for training inputs

• Generalize reasonably & appropriately to other inputs

• Good example: pattern recognition

• Feedforward multilayer networks


Feedforward Network

[Figure: feedforward network with an input layer, hidden layers, and an output layer]

Typical Artificial Neuron

[Figure: artificial neuron, labeled with inputs, connection weights, threshold, and output]

Typical Artificial Neuron

[Figure: artificial neuron, labeled with linear combination, net input (local field), and activation function]

Equations

Net input:

$$h_i = \sum_{j=1}^{n} w_{ij} s_j - \theta, \qquad \mathbf{h} = W\mathbf{s} - \theta$$

Neuron output:

$$s'_i = \sigma(h_i), \qquad \mathbf{s}' = \sigma(\mathbf{h})$$
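The net input and neuron output equations can be sketched in a few lines of NumPy. This is a minimal illustration, not part of the original slides: the logistic function is only one possible choice for σ (the slides leave it unspecified here), and the names `logistic` and `layer_output` are hypothetical.

```python
import numpy as np

def logistic(h):
    """One common activation function; an assumed choice for sigma."""
    return 1.0 / (1.0 + np.exp(-h))

def layer_output(W, s, theta, sigma=logistic):
    """Return s' = sigma(W s - theta) for one layer of neurons."""
    h = W @ s - theta  # net input (local field), one entry per neuron
    return sigma(h)

# W[i, j] is the weight on the connection from input j to neuron i.
W = np.array([[1.0, -1.0],
              [0.5,  0.5]])
s = np.array([1.0, 1.0])
theta = np.array([0.0, 1.0])

s_next = layer_output(W, s, theta)  # both net inputs are 0 for these values
```

Both neurons here have net input 0, so with the logistic choice each output is 0.5.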

11/24/03

...

...

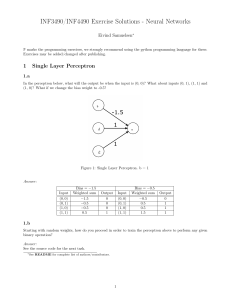

Single-Layer Perceptron

7

Variables

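[Figure: perceptron with inputs x1, …, xj, …, xn, weights w1, …, wj, …, wn, summation Σ producing net input h, threshold θ, step function Θ, and output y]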

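Single-Layer Perceptron Equations

Binary threshold activation function:

$$\sigma(h) = \Theta(h) = \begin{cases} 1, & \text{if } h > 0 \\ 0, & \text{if } h \le 0 \end{cases}$$

Hence,

$$y = \begin{cases} 1, & \text{if } \sum_j w_j x_j > \theta \\ 0, & \text{otherwise} \end{cases} = \begin{cases} 1, & \text{if } \mathbf{w} \cdot \mathbf{x} > \theta \\ 0, & \text{if } \mathbf{w} \cdot \mathbf{x} \le \theta \end{cases}$$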
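As a small sketch of the perceptron equations above, the following computes y for a few inputs. The weights, threshold, and function names are illustrative assumptions, not values from the slides. Note that an input lying exactly on the boundary (w ⋅ x = θ) yields 0.

```python
def heaviside(h):
    """Binary threshold: 1 if h > 0, else 0."""
    return 1 if h > 0 else 0

def perceptron_output(w, x, theta):
    """Return y = Theta(w . x - theta)."""
    h = sum(wj * xj for wj, xj in zip(w, x)) - theta
    return heaviside(h)

w = (2.0, -1.0)   # assumed example weights
theta = 1.0       # assumed example threshold

outputs = [
    perceptron_output(w, (1, 0), theta),  # w.x = 2 > theta
    perceptron_output(w, (1, 1), theta),  # w.x = 1, exactly on the boundary
    perceptron_output(w, (0, 1), theta),  # w.x = -1 < theta
]
```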

2D Weight Vector

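$$\mathbf{w} \cdot \mathbf{x} = \|\mathbf{w}\| \, \|\mathbf{x}\| \cos\varphi$$

$$\cos\varphi = \frac{v}{\|\mathbf{x}\|}$$

$$\mathbf{w} \cdot \mathbf{x} = \|\mathbf{w}\| \, v$$

$$\mathbf{w} \cdot \mathbf{x} > \theta \iff \|\mathbf{w}\| \, v > \theta \iff v > \frac{\theta}{\|\mathbf{w}\|}$$

[Figure: axes w1 and w2; weight vector w; input x at angle φ to w; v, the length of the projection of x onto w; the distance θ/‖w‖ marked along w; regions labeled + and –]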
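The geometric reading above (an input is classified as positive when its projection v onto w exceeds θ/‖w‖) can be checked numerically. This is a sketch with made-up numbers; `projection_length` is a hypothetical helper, and the equivalence assumes w is not the zero vector.

```python
import math

def projection_length(w, x):
    """Signed length v of the projection of x onto w, i.e. (w . x) / ||w||."""
    dot = sum(wj * xj for wj, xj in zip(w, x))
    return dot / math.hypot(*w)

w = (3.0, 4.0)        # ||w|| = 5
theta = 10.0
boundary = theta / math.hypot(*w)   # theta / ||w||

x_pos = (2.0, 2.0)    # w.x = 14 > theta
x_neg = (1.0, 1.0)    # w.x = 7  < theta

v_pos = projection_length(w, x_pos)
v_neg = projection_length(w, x_neg)
```

Here `v_pos` lies beyond `boundary` and `v_neg` falls short of it, matching the comparison of w ⋅ x against θ.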

N-Dimensional Weight Vector

[Figure: weight vector w drawn as the normal vector of the separating hyperplane, with a + region on one side and a – region on the other]

Goal of Perceptron Learning

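• Suppose we have training patterns $\mathbf{x}^1, \mathbf{x}^2, \ldots, \mathbf{x}^P$ with corresponding desired outputs $y^1, y^2, \ldots, y^P$

• where $\mathbf{x}^p \in \{0, 1\}^n$, $y^p \in \{0, 1\}$

• We want to find $\mathbf{w}, \theta$ such that $y^p = \Theta(\mathbf{w} \cdot \mathbf{x}^p - \theta)$ for $p = 1, \ldots, P$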

Treating Threshold as Weight

$$h = \sum_{j=1}^{n} w_j x_j - \theta = -\theta + \sum_{j=1}^{n} w_j x_j$$

[Figure: perceptron with an added input x0 = –1 whose weight is w0 = θ, alongside inputs x1, …, xj, …, xn with weights w1, …, wj, …, wn, feeding summation Σ, net input h, step function Θ, and output y]

Let $x_0 = -1$ and $w_0 = \theta$. Then

$$h = w_0 x_0 + \sum_{j=1}^{n} w_j x_j = \sum_{j=0}^{n} w_j x_j = \tilde{\mathbf{w}} \cdot \tilde{\mathbf{x}}$$

Augmented Vectors

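$$\tilde{\mathbf{w}} = \begin{pmatrix} \theta \\ w_1 \\ \vdots \\ w_n \end{pmatrix}, \qquad \tilde{\mathbf{x}}^p = \begin{pmatrix} -1 \\ x_1^p \\ \vdots \\ x_n^p \end{pmatrix}$$

We want $y^p = \Theta(\tilde{\mathbf{w}} \cdot \tilde{\mathbf{x}}^p)$, $p = 1, \ldots, P$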
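To illustrate the augmented vectors, the sketch below checks that folding the threshold into the weight vector leaves the net input unchanged. The helper names `augment_weights` and `augment_input` and the numeric values are assumptions made for this example.

```python
import numpy as np

def augment_weights(w, theta):
    """Prepend w0 = theta to the weight vector."""
    return np.concatenate(([theta], w))

def augment_input(x):
    """Prepend the constant input x0 = -1 to the pattern."""
    return np.concatenate(([-1.0], x))

w = np.array([2.0, -1.0])
theta = 0.5
x = np.array([1.0, 1.0])

h_threshold = w @ x - theta                                   # h = w.x - theta
h_augmented = augment_weights(w, theta) @ augment_input(x)    # h = w~ . x~
```

Both expressions give the same net input, so the learning problem can be stated in terms of the single vector w̃.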

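Reformulation as Positive Examples

We have positive ($y^p = 1$) and negative ($y^p = 0$) examples

Want $\tilde{\mathbf{w}} \cdot \tilde{\mathbf{x}}^p > 0$ for positive, $\tilde{\mathbf{w}} \cdot \tilde{\mathbf{x}}^p \le 0$ for negative

Let $\mathbf{z}^p = \tilde{\mathbf{x}}^p$ for positive, $\mathbf{z}^p = -\tilde{\mathbf{x}}^p$ for negative

Want $\tilde{\mathbf{w}} \cdot \mathbf{z}^p \ge 0$, for $p = 1, \ldots, P$

Hyperplane through origin with all $\mathbf{z}^p$ on one side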
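The reformulation can be sketched as a small data transformation: augment each pattern with x0 = –1, then negate the negative examples. The function name `to_z`, the choice of logical AND as training data, and the candidate weight vector are all illustrative assumptions.

```python
import numpy as np

def to_z(X, y):
    """Map patterns X (P x n) with labels y in {0, 1} to the vectors z^p (P x (n+1))."""
    X_aug = np.hstack([-np.ones((X.shape[0], 1)), X])  # prepend x0 = -1
    signs = np.where(y == 1, 1.0, -1.0)                # +1 for positive, -1 for negative
    return X_aug * signs[:, None]

# Assumed example: logical AND of two binary inputs.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 0, 0, 1])
Z = to_z(X, y)

# A candidate augmented weight vector (theta, w1, w2) = (1.5, 1, 1).
w_tilde = np.array([1.5, 1.0, 1.0])
all_on_one_side = bool(np.all(Z @ w_tilde >= 0))
```

For this candidate every product w̃ ⋅ z is non-negative, so all the z vectors lie on one side of the hyperplane through the origin.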

Adjustment of Weight Vector

[Figure: example vectors z1, z2, …, z11 plotted in the plane]

Outline of Perceptron Learning Algorithm

1. initialize weight vector randomly

2. until all patterns classified correctly, do:

   a) for p = 1, …, P do:

      1) if $\mathbf{z}^p$ classified correctly, do nothing

      2) else adjust weight vector to be closer to correct classification
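The outline above can be sketched in Python as follows. This is a minimal illustration under stated assumptions rather than a definitive implementation: a pattern counts as correctly classified when w̃ ⋅ z ≥ 0 (following the reformulation as positive examples), the adjustment is the additive step w̃ + ηz, and the names `perceptron_learning`, `eta`, `max_epochs`, and `seed` are hypothetical. The epoch cap is an added safeguard for data that is not linearly separable.

```python
import numpy as np

def perceptron_learning(Z, eta=0.1, max_epochs=1000, seed=0):
    """Search for w with w . z >= 0 for every row z of Z."""
    rng = np.random.default_rng(seed)
    w = rng.uniform(-1.0, 1.0, size=Z.shape[1])   # 1. random initial weights
    for _ in range(max_epochs):                   # 2. repeat until all correct
        all_correct = True
        for z in Z:                               # a) for p = 1, ..., P
            if w @ z < 0:                         # misclassified pattern
                w = w + eta * z                   # move w toward z
                all_correct = False
        if all_correct:
            return w
    raise RuntimeError("no separating weight vector found within max_epochs")

# Assumed example data: the z vectors for logical AND
# (augmented with x0 = -1, negative examples negated).
Z = np.array([[ 1.0,  0.0,  0.0],
              [ 1.0,  0.0, -1.0],
              [ 1.0, -1.0,  0.0],
              [-1.0,  1.0,  1.0]])
w = perceptron_learning(Z)
```

The returned vector holds the threshold in its first component and the ordinary weights in the rest.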

Weight Adjustment

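[Figure: weight vector $\tilde{\mathbf{w}}$ moved to $\tilde{\mathbf{w}}'$ and then to $\tilde{\mathbf{w}}''$ by adding $\eta \mathbf{z}^p$, drawn alongside $\mathbf{z}^p$]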

Improvement in Performance

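If $\tilde{\mathbf{w}} \cdot \mathbf{z}^p < 0$,

$$\begin{aligned}
\tilde{\mathbf{w}}' \cdot \mathbf{z}^p &= (\tilde{\mathbf{w}} + \eta \mathbf{z}^p) \cdot \mathbf{z}^p \\
&= \tilde{\mathbf{w}} \cdot \mathbf{z}^p + \eta \, \mathbf{z}^p \cdot \mathbf{z}^p \\
&= \tilde{\mathbf{w}} \cdot \mathbf{z}^p + \eta \, \|\mathbf{z}^p\|^2 \\
&> \tilde{\mathbf{w}} \cdot \mathbf{z}^p
\end{aligned}$$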
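A quick numerical check of this inequality, with made-up values for w̃, z, and η, shows the product increasing by exactly η‖z‖² after one update. The example also shows that a single step improves the product without necessarily making it non-negative.

```python
import numpy as np

w = np.array([0.2, -0.5, 0.1])   # assumed current weights
z = np.array([-1.0, 1.0, 1.0])   # assumed misclassified pattern
eta = 0.1                        # assumed learning rate

before = w @ z                   # negative, so z is misclassified
w_new = w + eta * z              # one weight adjustment
after = w_new @ z

gain = after - before            # should equal eta * ||z||^2
```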

Perceptron Learning Theorem

• If there is a set of weights that will solve the problem,

• then the PLA will eventually find it

• (for a sufficiently small learning rate)

• Note: only applies if positive & negative examples are linearly separable

Classification Power of Multilayer Perceptrons

• Perceptrons can function as logic gates

• Therefore MLP can form intersections, unions, differences of linearly-separable regions

• Classes can be arbitrary hyperpolyhedra

• Minsky & Papert criticism of perceptrons

• No one succeeded in developing an MLP learning algorithm
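The first two bullets can be made concrete with a short sketch: single threshold units acting as AND, OR, and NOT gates, then composed in layers to compute XOR, a standard example of a function that no single perceptron can compute. The specific weights and thresholds are one workable choice assumed for this example, and the helper names are hypothetical.

```python
def step(h):
    """Binary threshold: 1 if h > 0, else 0."""
    return 1 if h > 0 else 0

def perceptron(w, theta):
    """Build a unit computing Theta(w . x - theta)."""
    return lambda *x: step(sum(wi * xi for wi, xi in zip(w, x)) - theta)

AND = perceptron((1, 1), 1.5)    # fires only when both inputs are 1
OR = perceptron((1, 1), 0.5)     # fires when at least one input is 1
NOT = perceptron((-1,), -0.5)    # fires only when the input is 0

def xor(a, b):
    """Difference of two regions: (a OR b) but not (a AND b)."""
    return AND(OR(a, b), NOT(AND(a, b)))

xor_table = [xor(a, b) for a in (0, 1) for b in (0, 1)]
```

The weights here were set by hand, which sidesteps the question of how a learning algorithm would find them.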

Credit Assignment Problem

[Figure: feedforward network with an input layer, hidden layers, and an output layer]

How do we adjust the weights of the hidden layers?