Binary Dependent Variable Regression: LPM & Probit/Logit Models


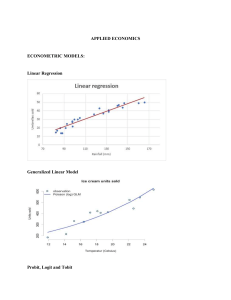

Regression with a Binary Dependent Variable

Outline of the Chapter
• Introduction
• Example: Labor Force Participation
• Linear Probability Model
   • Interpretation of Coefficients
   • Estimation
   • Heteroskedasticity of Errors
   • Fit
   • Shortcomings of the Linear Probability Model
• Probit/Logit Models
   • Motivation
   • Estimation
   • Fit

Introduction
Many situations in Economics require each individual in a population to make a decision with two possible outcomes. The decision may be modeled with a binary (0 or 1) dependent variable Y. For example:
• Y can indicate whether an adult has a high school education;
• Y can indicate whether a loan was repaid or not;
• Y can indicate whether a firm was taken over by another firm during a given year.
We are typically interested in the determinants of the decision, that is, in how likely a particular individual is to choose 1 rather than 0.

Example: Labor Force Participation Decision by Women
$$\mathit{inlf} = \beta_0 + \beta_1\,\mathit{nwifeinc} + \beta_2\,\mathit{educ} + \beta_3\,\mathit{exper} + \beta_4\,\mathit{age} + \beta_5\,\mathit{kidslet6} + \beta_6\,\mathit{kidsge6} + u$$
where
• inlf = binary variable that takes the value 1 if the woman reports working for a wage outside the home at some point during the year 1975
• nwifeinc = other sources of income (including husband's income)
• educ = years of education
• exper = past years of labor market experience
• age = age in years
• kidslet6 = number of children younger than 6 years old
• kidsge6 = number of children whose age is between 6 and 18
The sample includes 753 married women, 428 of whom participated in the labor force.

Example (cont)
The estimation (using OLS) produced the following results (standard errors in parentheses):
$$\widehat{\mathit{inlf}} = \underset{(0.146)}{0.707} - \underset{(0.0016)}{0.0033}\,\mathit{nwifeinc} + \underset{(0.007)}{0.040}\,\mathit{educ} + \underset{(0.002)}{0.023}\,\mathit{exper} - \underset{(0.002)}{0.018}\,\mathit{age} - \underset{(0.031)}{0.272}\,\mathit{kidslet6} + \underset{(0.0136)}{0.0125}\,\mathit{kidsge6}$$
$$n = 753, \qquad R^2 = 0.254$$
All variables, except kidsge6, are statistically significant.
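The significance claim can be checked directly from the reported output by dividing each coefficient by its standard error. The sketch below does that with the numbers from the estimated equation. The 1.96 cutoff is an assumption (a two-sided 5% test using the large-sample normal approximation), and the dictionary layout and function name are illustrative only.

```python
# Coefficients and standard errors as reported in the OLS output above.
estimates = {
    "const":    (0.707, 0.146),
    "nwifeinc": (-0.0033, 0.0016),
    "educ":     (0.040, 0.007),
    "exper":    (0.023, 0.002),
    "age":      (-0.018, 0.002),
    "kidslet6": (-0.272, 0.031),
    "kidsge6":  (0.0125, 0.0136),
}

# Assumption: two-sided 5% test with the normal critical value.
CRITICAL_VALUE = 1.96


def t_statistics(est):
    """Return coefficient / standard error for every term."""
    return {name: coef / se for name, (coef, se) in est.items()}


t_stats = t_statistics(estimates)
significant = {name: abs(t) > CRITICAL_VALUE for name, t in t_stats.items()}

for name, t in t_stats.items():
    print(f"{name:9s} t = {t:7.2f}  significant at 5%: {significant[name]}")
```

Only kidsge6 has a t-statistic below the cutoff in absolute value, which matches the statement in the slides.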
Example (cont)
[Figure: plot of the fitted line against years of schooling when nwifeinc = 50, exper = 5, age = 30, kidslet6 = 1, kidsge6 = 0]

Meaning of the coefficients
What does the slope of the line ($\hat\beta_2 = 0.04$) mean? When educ = 12, $\widehat{\mathit{inlf}} = 0.33$. What does this 0.33 mean? Let's go back to the model:
$$\mathit{inlf} = \beta_0 + \beta_1\,\mathit{nwifeinc} + \beta_2\,\mathit{educ} + \beta_3\,\mathit{exper} + \beta_4\,\mathit{age} + \beta_5\,\mathit{kidslet6} + \beta_6\,\mathit{kidsge6} + u$$
Because Y = inlf can only take two values, $\beta_2$ cannot be interpreted as the change in Y, on average, given a one-year increase in the years of schooling, holding other factors fixed: Y either changes from zero to one or from one to zero (or does not change).

Interpretation of Coefficients
Consider the generic model:
$$Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_k X_k + u$$
Y can be a variable with quantitative meaning (like wage) or a binary dependent variable (like inlf). Taking conditional expectations,
$$E[Y \mid X_1, \dots, X_k] = E[\beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_k X_k + u \mid X_1, \dots, X_k]$$
$$= E[\beta_0 \mid X_1, \dots, X_k] + E[\beta_1 X_1 \mid X_1, \dots, X_k] + \dots + E[\beta_k X_k \mid X_1, \dots, X_k] + E[u \mid X_1, \dots, X_k]$$
$$= \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_k X_k + E[u \mid X_1, \dots, X_k]$$
Assume the first OLS assumption, $E[u \mid X_1, \dots, X_k] = 0$, holds. Then
$$E[Y \mid X_1, \dots, X_k] = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_k X_k$$

Interpretation of Coefficients
$$E[Y \mid X_1, \dots, X_k] = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_k X_k$$
When Y has a quantitative meaning:
• The predicted value from the population regression function is the mean value of Y, given all the values of the regressors.
• $\beta_1$ = expected change in Y resulting from changing $X_1$ by one unit, holding constant $X_2, \dots, X_k$.
• OLS predicted values: $\hat{Y} = \hat\beta_0 + \hat\beta_1 X_1 + \hat\beta_2 X_2 + \dots + \hat\beta_k X_k$, where $\hat\beta_1$ is the estimated value of $\beta_1$.

Interpretation of Coefficients
But when Y can take only the values 0 or 1, then
$$E[Y \mid X_1, \dots, X_k] = 0 \times P[Y=0 \mid X_1, \dots, X_k] + 1 \times P[Y=1 \mid X_1, \dots, X_k] = P[Y=1 \mid X_1, \dots, X_k]$$
Thus,
$$P[Y=1 \mid X_1, \dots, X_k] = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_k X_k$$

Meaning of the coefficients
$$P[Y=1 \mid X_1, \dots, X_k] = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_k X_k$$
• The predicted value from the population regression function is the probability that Y = 1, given all the values of the regressors.
• This model is called the Linear Probability Model (LPM), because the population regression function is linear in the parameters and it represents a probability.
• $\beta_j$ measures the change in the probability that Y = 1 when $X_j$ changes by one unit, keeping all the other factors fixed.

Estimation of the LPM
The mechanics of OLS are the same as before. When we estimate the parameters, we obtain
$$\hat{Y} = \hat\beta_0 + \hat\beta_1 X_1 + \hat\beta_2 X_2 + \dots + \hat\beta_k X_k$$
• $\hat\beta_j$ = estimate of the change in the probability that Y = 1, for a unit change in $X_j$, keeping all the other factors constant
• $\hat{Y}$ = estimated probability that Y = 1, given values for the regressors

Back to the example
• An additional year of education is estimated to increase the probability of labor force participation by 0.04 (4 percentage points), keeping all the other factors constant.
• If education = 12, the probability of labor participation is estimated to be around 33% (for the values of all the other variables specified in the plot of the fitted line).
• For the values of all the other variables specified in our graph, the predicted probability of participating in the labor force is negative when education is less than 3.75 years.
• In this case, this is not much cause for concern, since no woman in this sample has less than 5 years of education. Moreover, for the highest education level in the sample (17 years of schooling) the predicted probability of labor participation is 0.527.

Back to the example
The coefficient on nwifeinc implies that if other sources of income increase by $10,000 (Δnwifeinc = 10, with income measured in thousands of dollars), ceteris paribus, the probability that a woman is in the labor force falls by 0.033 (3.3 percentage points).
Holding other factors fixed, one more year of experience in the job market increases the probability of labor participation by 0.023 (2.3 percentage points).
Unlike the number of older children, the number of younger children has a huge impact on labor force participation. Having one additional child younger than six years old reduces the probability of participation by 0.272 (27.2 percentage points), keeping all other variables constant.

Special Features of the LPM
(1) Errors from the LPM are always heteroskedastic. To show why, let's use a simple regression model:
$$Y = \beta_0 + \beta_1 X + u$$
If the model is well specified, $E[u \mid X] = 0$, so $E[Y \mid X] = \beta_0 + \beta_1 X$ and
$$Var[u \mid X] = Var[Y - \beta_0 - \beta_1 X \mid X] = Var[Y \mid X]$$

Special Features of the LPM
Since $Y_i$ can only take two values (0 and 1),
$$Var[Y \mid X] = (0 - E[Y \mid X])^2 \times P[Y=0 \mid X] + (1 - E[Y \mid X])^2 \times P[Y=1 \mid X]$$
$$= (0 - (\beta_0 + \beta_1 X))^2 \times P[Y=0 \mid X] + (1 - (\beta_0 + \beta_1 X))^2 \times P[Y=1 \mid X]$$
$$= (\beta_0 + \beta_1 X)^2 \times (1 - (\beta_0 + \beta_1 X)) + (1 - (\beta_0 + \beta_1 X))^2 \times (\beta_0 + \beta_1 X)$$
$$= (\beta_0 + \beta_1 X) \times (1 - (\beta_0 + \beta_1 X))$$
The last step factors out $(\beta_0 + \beta_1 X)(1 - (\beta_0 + \beta_1 X))$ and uses the fact that the remaining terms sum to one. Thus, $Var[Y \mid X] = Var[u \mid X]$ is not constant; it depends on X. The errors are heteroskedastic.
(2) The R² concept does not carry over to the LPM.
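To make the heteroskedasticity result concrete, the sketch below evaluates the LPM fitted probability from the labor force example and the implied conditional variance p(1 − p) at a few education levels. The coefficients and the other regressor values (nwifeinc = 50, exper = 5, age = 30, one young child, no older children) are the ones quoted in the slides; the function names and the chosen education levels are illustrative assumptions.

```python
# LPM coefficients from the labor force participation example.
BETA = {
    "const": 0.707, "nwifeinc": -0.0033, "educ": 0.040, "exper": 0.023,
    "age": -0.018, "kidslet6": -0.272, "kidsge6": 0.0125,
}


def lpm_probability(x):
    """Fitted LPM probability: intercept plus sum of coefficient * regressor."""
    return BETA["const"] + sum(BETA[name] * value for name, value in x.items())


def lpm_error_variance(p):
    """Conditional variance of the error implied by a binary Y: p * (1 - p)."""
    return p * (1.0 - p)


# Regressor values used for the fitted-line plot; educ is varied below.
base = {"nwifeinc": 50, "exper": 5, "age": 30, "kidslet6": 1, "kidsge6": 0}

for educ in (8, 12, 17):  # illustrative education levels
    p = lpm_probability({**base, "educ": educ})
    print(f"educ={educ:2d}  p_hat={p:.3f}  Var(u|X)={lpm_error_variance(p):.3f}")
```

The variance changes with education because the fitted probability does, which is exactly the sense in which the errors are heteroskedastic. At educ = 12 the fitted probability is about 0.33, as in the example.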
Another Example
Population: young men in California born in 1960-61 who have at least one prior arrest.
$$\widehat{\mathit{arr86}} = 0.380 + 0.152\,\mathit{pcnv} + 0.0046\,\mathit{avgsen} - 0.0026\,\mathit{tottime} - 0.024\,\mathit{ptime86} - 0.038\,\mathit{qemp86} + 0.170\,\mathit{black} + 0.096\,\mathit{hispan}$$

Another Example (cont)
where
• arr86 = binary variable that takes the value 1 if the young man was arrested in 1986, 0 otherwise
• pcnv = proportion of prior arrests that led to conviction
• avgsen = average sentence from prior convictions
• tottime = months spent in prison since age 18 prior to 1986
• ptime86 = months spent in prison in 1986
• qemp86 = number of quarters employed in 1986
• black = binary variable that takes the value 1 if the young man is black
• hispan = binary variable that takes the value 1 if the young man is Hispanic

Another Example (cont)
The estimated equation tells us that the probability of arrest is 17 percentage points higher for a black man than for a white man (the base group), keeping all the other variables constant.

Shortcomings of LPM
The LPM has the advantage of being easy to estimate and interpret, but its linearity is also the root of its major flaws.
Shortcomings:
• Predicted probabilities might be either less than zero or greater than one. In our example, out of the 753 women, 16 predicted probabilities of entering the labor force were negative and 17 were greater than one.

Shortcomings of LPM
• Probabilities cannot be linearly related to the independent variables for all their possible values.
• In our example, the effect on the probability of working of having an additional young child is always the same (a reduction of 0.272).
• Thus, the effect is the same if the woman goes from having no children to having one child or if the woman goes from having three children to having four children. That, most likely, is not realistic.
• Also, taken to the extreme, going from zero to four children diminishes the probability of entering the workforce by 4 × 0.272 = 1.088 (108.8 percentage points)!
• It seems more realistic that the first small child would reduce the probability by a large amount, but subsequent children would have a smaller marginal effect. This is not captured in the LPM specification.

Shortcomings of LPM
Coefficients from an LPM may mischaracterize the relationship between X and Y.

Linear Probability Model
• Even with these problems, the LPM is useful and often applied in Economics. The LPM works well for values of the regressors that are near the averages of the sample.
• The predicted probabilities outside the unit interval are a little troubling. Still, we are able to use the estimated probabilities to predict the zero-one outcome. Let $\hat{Y}_i$ be the predicted probabilities (the fitted values). If $\hat{Y}_i$ is greater than or equal to 0.5, we predict Y = 1 (the woman enters the workforce); if $\hat{Y}_i$ is less than 0.5, we predict Y = 0 (the woman does not enter the workforce). Then we can compare the actual data with our prediction of the decision and find the percentage of correct predictions we get from the model (a goodness-of-fit measure for binary dependent variables).

Probit and Logit Models
LPM:
$$P[Y=1 \mid X_1, \dots, X_k] = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_k X_k$$
Now,
$$P[Y=1 \mid X_1, \dots, X_k] = G(\alpha_0 + \alpha_1 X_1 + \alpha_2 X_2 + \dots + \alpha_k X_k)$$
where G is a function that takes values strictly between zero and one. That ensures that the estimated probabilities are strictly between 0 and 1.
What kind of functions do we know that vary between zero and one? Cumulative Distribution Functions (cdf).
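As a rough illustration of why a cdf is a natural choice for G, the sketch below compares a linear index read directly as a probability (the LPM) with two familiar cdfs, the standard normal and the standard logistic. The index values are hypothetical and chosen only to show the behavior at the extremes; the function names are illustrative.

```python
import math


def normal_cdf(z):
    """Standard normal cdf, written in terms of the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def logistic_cdf(z):
    """Standard logistic cdf: 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


def linear(z):
    """LPM: the linear index itself is read as a probability."""
    return z


# Hypothetical index values, for illustration only.
index_values = (-3.0, -0.5, 0.0, 0.5, 1.2, 3.0)

for z in index_values:
    print(f"index={z:5.1f}  linear={linear(z):6.3f}  "
          f"normal cdf={normal_cdf(z):.4f}  logistic cdf={logistic_cdf(z):.4f}")
```

Whatever the value of the index, both cdfs return a number strictly between zero and one, while the linear rule leaves the unit interval as soon as the index does.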
Probit and Logit Functions
Probit Model: G is the standard normal cumulative distribution function (cdf)
$$G(z) = \Phi(z) = \int_{-\infty}^{z} (2\pi)^{-1/2} \exp\left(-\frac{v^2}{2}\right) dv$$
Logit Model: G is the logistic function (the cdf of a standard logistic variable)
$$G(z) = \frac{\exp(z)}{1 + \exp(z)} = \frac{1}{1 + \exp(-z)}$$

Probit vs LPM: Illustration
Goal: instead of fitting a straight line, fit a curve that is constrained to be between 0 and 1.

Latent variable
What is the idea of these models? The idea is that there exists an unobserved (continuous) variable Y* (the latent variable) that is driving the dependent variable Y:
$$Y^* = \alpha_0 + \alpha_1 X_1 + \alpha_2 X_2 + \dots + \alpha_k X_k + u$$
In the example of women's participation in the work force, Y* is the difference in utility between going to work and not going to work. That depends on a number of factors (kids, other sources of income, education, etc.).
Y is the variable we observe. In our example, it is the decision of going to work (Y = 1) or not (Y = 0). If the utility of going to work is higher than the utility of staying at home (Y* > 0), then the woman will go to work (Y = 1). Otherwise, she will choose not to participate in the labor force. Thus,
• if Y* > 0 then Y = 1
• if Y* ≤ 0 then Y = 0

Latent variable (cont)
$$Y^* = \alpha_0 + \alpha_1 X_1 + \alpha_2 X_2 + \dots + \alpha_k X_k + u$$
$$P[Y = 1 \mid X_1, \dots, X_k] = P[Y^* > 0 \mid X_1, \dots, X_k]$$
$$= P[\alpha_0 + \alpha_1 X_1 + \alpha_2 X_2 + \dots + \alpha_k X_k + u > 0 \mid X_1, \dots, X_k]$$
$$= P[u > -(\alpha_0 + \alpha_1 X_1 + \alpha_2 X_2 + \dots + \alpha_k X_k) \mid X_1, \dots, X_k]$$
$$= 1 - P[u \le -(\alpha_0 + \alpha_1 X_1 + \alpha_2 X_2 + \dots + \alpha_k X_k) \mid X_1, \dots, X_k]$$
$$= 1 - G(-(\alpha_0 + \alpha_1 X_1 + \alpha_2 X_2 + \dots + \alpha_k X_k))$$
where G is the cdf of u.

Latent variable (cont)
$$P[Y = 1 \mid X_1, \dots, X_k] = 1 - G(-(\alpha_0 + \alpha_1 X_1 + \alpha_2 X_2 + \dots + \alpha_k X_k))$$
If the density function of u is symmetric around zero (true for a standard normal and a standard logistic random variable), then $1 - G(-z) = G(z)$ and
$$P[Y = 1 \mid X_1, \dots, X_k] = G(\alpha_0 + \alpha_1 X_1 + \alpha_2 X_2 + \dots + \alpha_k X_k)$$

Probit and Logit Models
The LPM may have its problems, but it is definitely easy to interpret: a one-unit increase in $X_j$ is associated with a $\hat\beta_j$ increase in the probability of Y = 1, all else constant.
Probit and logit models have their strengths, but being easy to interpret is not one of them. The effect of Xj on the probability of Y = 1 is not constant. We will see that the effect depends not only on the value of Xj, but also on the values of the other independent variables.

Probit Model
P[Y = 1 | X1, X2, …, Xk] = Φ(α0 + α1 X1 + … + αk Xk), where Φ is the standard normal cdf.
We are primarily interested in the effect of each variable Xj on P[Y = 1 | X1, X2, …, Xk]. We are not interested in the effect of Xj on Y*, i.e., we are not really interested in the magnitude of αj. Since the direction of the effect of Xj on E[Y* | X1, X2, …, Xk] is the same as the direction of the effect of Xj on E[Y | X1, X2, …, Xk] = P[Y = 1 | X1, X2, …, Xk], we are interested in the sign of αj.

Interpretation of Coefficients
• If αj > 0, then an increase in Xj increases P[Y = 1 | X1, X2, …, Xk].
• If αj < 0, then an increase in Xj decreases P[Y = 1 | X1, X2, …, Xk].
Beyond this, do not interpret the α coefficients directly. To find the magnitude of the effects (on the probability that Y = 1) of changing the independent variables, we have to compute changes in the conditional probabilities. If X1 changes by ΔX1, then P[Y = 1 | X1, X2, …, Xk] changes by
Φ(α0 + α1 (X1 + ΔX1) + … + αk Xk) − Φ(α0 + α1 X1 + … + αk Xk)

Interpretation of Coefficients
Suppose P[inlf = 1 | nwifeinc] = Φ(α0 + α1 nwifeinc), with α0 = 0.432 and α1 = −0.013.
What is P[inlf = 1 | nwifeinc = 10]?
When nwifeinc = 10, α0 + α1 nwifeinc = 0.432 − 0.013 × 10 = 0.302. Thus,
P[inlf = 1 | nwifeinc = 10] = Φ(0.302) = 0.6187
What happens when nwifeinc changes from 10 to 20 (Δnwifeinc = 10)?
P[inlf = 1 | nwifeinc = 20] = Φ(0.432 − 0.013 × 20) = Φ(0.172) = 0.5683
Thus, ΔP[inlf = 1] = 0.5683 − 0.6187 = −0.0504

Interpretation of Coefficients
With the same model (α0 = 0.432 and α1 = −0.013), what happens when nwifeinc changes from 80 to 90?
(Δnwifeinc = 10)
P[inlf = 1 | nwifeinc = 80] = Φ(0.432 − 0.013 × 80) = Φ(−0.608) = 0.2716
P[inlf = 1 | nwifeinc = 90] = Φ(0.432 − 0.013 × 90) = Φ(−0.738) = 0.2303
Thus, ΔP[inlf = 1] = 0.2303 − 0.2716 = −0.0413
The effect of nwifeinc depends on the value of nwifeinc: the same 10-unit increase lowers the probability by 0.0504 starting from 10, but by only 0.0413 starting from 80.

Interpretation of Coefficients
Now, P[inlf = 1 | nwifeinc, educ] = Φ(α0 + α1 nwifeinc + α2 educ), with α0 = −1.131, α1 = −0.021 and α2 = 0.142.
What is P[inlf = 1 | nwifeinc = 20, educ = 5]?
P[inlf = 1 | nwifeinc = 20, educ = 5] = Φ(−1.131 − 0.021 × 20 + 0.142 × 5) = Φ(−0.841) = 0.2002
What happens when educ changes from 5 to 10 (Δeduc = 5)?
P[inlf = 1 | nwifeinc = 20, educ = 10] = Φ(−1.131 − 0.021 × 20 + 0.142 × 10) = Φ(−0.131) = 0.4479
Thus, ΔP[inlf = 1] = 0.4479 − 0.2002 = 0.2477

Effect of Education, nwifeinc = 20
[Figure: Prob[Y = 1] plotted against Education. When education increases from 5 to 10, the change in the probability of entering the labor force is 0.25; when education increases from 15 to 20, the change is 0.18.]

Interpretation of Coefficients
P[inlf = 1 | nwifeinc, educ] = Φ(α0 + α1 nwifeinc + α2 educ), with α0 = −1.131, α1 = −0.021 and α2 = 0.142.
What is P[inlf = 1 | nwifeinc = 100, educ = 5]?
P[inlf = 1 | nwifeinc = 100, educ = 5] = Φ(−1.131 − 0.021 × 100 + 0.142 × 5) = Φ(−2.521) = 0.0059
What happens when educ changes from 5 to 10 (Δeduc = 5)?
P[inlf = 1 | nwifeinc = 100, educ = 10] = Φ(−1.131 − 0.021 × 100 + 0.142 × 10) = Φ(−1.811) = 0.0351
Thus, ΔP[inlf = 1] = 0.0351 − 0.0059 = 0.0292
The effect of educ depends on the value of nwifeinc: the same change in education raises the probability by 0.2477 when nwifeinc = 20, but by only 0.0292 when nwifeinc = 100.

Effect of Education, nwifeinc = 100
[Figure: Prob[Y = 1] plotted against Education. When education increases from 5 to 10, the change in the probability of entering the labor force is 0.03.]

Effect of Other Sources of Income, educ = 12
[Figure: Prob[Y = 1] plotted against Other sources of Income. When (other sources of) income increases from 10 to 20, the change in the probability of entering the labor force is −0.08; when it increases from 120 to 130, the change is −0.01.]

Effect of Other Sources of Income, educ = 16
[Figure: Prob[Y = 1] plotted against Other sources of Income. When (other sources of) income increases from 10 to 20, the change in the probability of entering the labor force is −0.06.]

Interpretation of Coefficients
To sum up, for a probit (or logit) model:
• The effect of variable Xj on P[Y = 1 | X1, …, Xk] depends on the value of Xj.
• The effect of variable Xj on P[Y = 1 | X1, …, Xk] depends on the values of the other independent variables.

Probit Model: Estimation Results
Now, let's estimate the full model. The estimation produced the following results (standard errors in parentheses):
$$\widehat{P}[Y = 1 \mid X] = \Phi\Big(\underset{(0.494)}{0.580} - \underset{(0.0051)}{0.0116}\,\mathit{nwifeinc} + \underset{(0.026)}{0.134}\,\mathit{educ} + \underset{(0.008)}{0.070}\,\mathit{exper} - \underset{(0.008)}{0.056}\,\mathit{age} - \underset{(0.115)}{0.874}\,\mathit{kidslet6} + \underset{(0.0442)}{0.0345}\,\mathit{kidsge6}\Big)$$

Probit Model: Estimation Results
• Now, we are assured that P(inlf = 1 | X) will be estimated between zero and one.
• The signs of the coefficients are all the same as in the LPM.
• Also, just like in the LPM, all variables are statistically significant except kidsge6.
• In the LPM, one additional young child is estimated to decrease the probability of labor participation by 27.2 percentage points, regardless of how many children the woman has. In the probit model, the effect of one additional child on the probability of labor participation when the woman has no children is ΔP[inlf = 1] = Φ(−0.5016) − Φ(0.3727) = 0.3080 − 0.6453 = −0.3373. (Note: for this computation, we kept nwifeinc, educ, exper and age at their means and kidsge6 at 0.)

Probit Model: Estimation Results
Keeping all the other variables constant at the values specified, the effect of one additional child on the probability of labor participation when the woman already has one child is ΔP[inlf = 1] = Φ(−1.3759) − Φ(−0.5016) = 0.0844 − 0.3080 = −0.2236.
Therefore, the labor force participation probability is estimated to decrease by 33.7 percentage points for an additional child when the woman has no children initially, and by 22.4 percentage points when the woman already has one child. The marginal effect of one additional child is smaller when the woman already has one child (younger than 6) than when she has none. This makes more sense than the constant marginal effect of 27 percentage points we found with the LPM.

Effect of number of young children
[Figure: Prob[Y = 1] plotted against the number of young children (0 to 5). All variables are set at their averages, except the number of older kids, which is kept at zero.]

Logit Model: Estimation Results
The estimation produced the following results (standard errors in parentheses), where G is the logistic function:
$$\widehat{P}[Y = 1 \mid X] = G\Big(\underset{(0.838)}{0.838} - \underset{(0.0087)}{0.0202}\,\mathit{nwifeinc} + \underset{(0.044)}{0.227}\,\mathit{educ} + \underset{(0.015)}{0.120}\,\mathit{exper} - \underset{(0.014)}{0.091}\,\mathit{age} - \underset{(0.200)}{1.439}\,\mathit{kidslet6} + \underset{(0.0772)}{0.0582}\,\mathit{kidsge6}\Big)$$

Logit Model (cont)
The results from the logit model are very similar to those from the probit model. The signs of the coefficients are the same as with the LPM or the probit model.
The magnitude of the effects is also very similar (check this).

Estimation
The coefficients of the probit and logit models cannot be estimated by OLS, since P[Y = 1 | X] is a nonlinear function of the parameters α. We can estimate the coefficients of the probit or logit model using two different methods: Nonlinear Least Squares (NLLS) and Maximum Likelihood Estimation (MLE).

Nonlinear Least Squares
Find the values of the parameters that minimize the SSR (sum of squared residuals). Since
$$E[Y \mid X] = G(\alpha_0 + \alpha_1 X_1 + \dots + \alpha_k X_k),$$
NLLS finds the values of the α's that minimize
$$\sum_{i=1}^{n} \left[Y_i - G(\alpha_0 + \alpha_1 X_{1i} + \dots + \alpha_k X_{ki})\right]^2$$
The NLLS estimators are consistent and asymptotically normal, but they are not efficient (there are other estimators with smaller variance).

Maximum Likelihood Estimation
What is it?
• Likelihood function: the joint probability function of the data (as a function of the unknown coefficients).
• Maximum Likelihood estimates: the values of the coefficients that maximize the likelihood function.
• MLE chooses the values of the parameters to maximize the probability of drawing the data that are actually observed; it picks the parameter values "most likely" to have produced the data we observe.

MLE
Example: two iid observations y1 and y2 on a binary dependent variable (Y is Bernoulli). Assume for now that there are no regressors, to keep it simple.
$$P(Y = y) = p^{y} (1-p)^{1-y}$$
We want to estimate p = P(Y = 1) (= the mean of Y).
What is the Likelihood Function?
$$P(Y_1 = y_1, Y_2 = y_2) = P(Y_1 = y_1) \times P(Y_2 = y_2) = p^{y_1}(1-p)^{1-y_1}\, p^{y_2}(1-p)^{1-y_2} = p^{y_1+y_2}(1-p)^{2-y_1-y_2}$$

MLE
We need to find the value of p that maximizes the Likelihood Function:
$$P(Y_1 = y_1, Y_2 = y_2) = p^{y_1+y_2}(1-p)^{2-y_1-y_2}$$
Take the derivative of the Likelihood function with respect to p, set it equal to zero and solve:
$$(y_1+y_2)\, p^{y_1+y_2-1}(1-p)^{2-y_1-y_2} - p^{y_1+y_2}(2-y_1-y_2)(1-p)^{1-y_1-y_2} = 0$$
$$(y_1+y_2)\, p^{y_1+y_2-1}(1-p)^{2-y_1-y_2} = p^{y_1+y_2}(2-y_1-y_2)(1-p)^{1-y_1-y_2}$$
Divide both sides by $p^{y_1+y_2}(1-p)^{1-y_1-y_2}$:
$$(y_1+y_2)\, p^{-1}(1-p) = 2-y_1-y_2$$
$$p^{-1} - 1 = \frac{2-y_1-y_2}{y_1+y_2}$$
$$p^{-1} = \frac{2}{y_1+y_2}$$
$$\hat{p} = \frac{y_1+y_2}{2} \quad \text{(the sample average!)}$$

MLE
Probit/Logit example: n iid observations y1, y2, …, yn on a binary dependent variable, with
$$P[Y_i = 1 \mid X_{1i}, X_{2i}, \dots, X_{ki}] = G(\alpha_0 + \alpha_1 X_{1i} + \dots + \alpha_k X_{ki}) = p_i$$
We want to find the values of α0, α1, …, αk that maximize the Likelihood function.
What is the Likelihood Function? For the i-th observation,
$$P[Y_i = y_i \mid X_{1i}, \dots, X_{ki}] = p_i^{y_i}(1-p_i)^{1-y_i}$$
Then, conditioning on all the regressors,
$$L = P(Y_1 = y_1, Y_2 = y_2, \dots, Y_n = y_n \mid X)$$
$$= P(Y_1 = y_1 \mid X_{11}, \dots, X_{k1}) \times P(Y_2 = y_2 \mid X_{12}, \dots, X_{k2}) \times \dots \times P(Y_n = y_n \mid X_{1n}, \dots, X_{kn})$$
$$= p_1^{y_1}(1-p_1)^{1-y_1}\, p_2^{y_2}(1-p_2)^{1-y_2} \cdots p_n^{y_n}(1-p_n)^{1-y_n}$$

MLE
Probit/Logit example (cont): take logs to obtain
$$\log L = y_1 \log(p_1) + (1-y_1)\log(1-p_1) + y_2 \log(p_2) + (1-y_2)\log(1-p_2) + \dots + y_n \log(p_n) + (1-y_n)\log(1-p_n)$$
$$= \sum_{i=1}^{n} y_i \log(p_i) + \sum_{i=1}^{n} (1-y_i)\log(1-p_i)$$
$$= \sum_{i=1}^{n} y_i \log\big(G(\alpha_0 + \alpha_1 X_{1i} + \dots + \alpha_k X_{ki})\big) + \sum_{i=1}^{n} (1-y_i)\log\big(1 - G(\alpha_0 + \alpha_1 X_{1i} + \dots + \alpha_k X_{ki})\big)$$
The values of α0, α1, …, αk that maximize the Log Likelihood function are the Maximum Likelihood estimators. Numerical methods are used to obtain the estimates.

MLE
The Maximum Likelihood estimators are consistent, asymptotically normal and also efficient. The t-stats, F-stats and confidence intervals can be computed in the usual way.

Measures of Fit
(1) Fraction correctly predicted
If Yi = 1 and the estimated P[Y = 1 | X] ≥ 0.50, then we have a correct prediction.
If Yi = 0 and the estimated P[Y = 1 | X] < 0.50, then we have a correct prediction.
Fraction correctly predicted = (number of observations correctly predicted) / (total number of observations)

Measures of Fit (cont)
(2) Pseudo-R²
Recall R² in the linear regression model: we compare the sum of squared residuals from our model (when all regressors are present) to the sum of squared residuals we would obtain when none of the regressors are included.
We use a similar approach here: we compare the value of the log likelihood function we obtain for our probit/logit model (when all regressors are present), ln L, with the one we would obtain when none of the regressors are included, ln L0.
Pseudo-R² = 1 − (ln L / ln L0)
Let's look at two possible extremes:
• Your model explains absolutely nothing. Then ln L = ln L0, and Pseudo-R² = 0.
• Your model explains everything. Then the likelihood function is 1, which means that ln L = 0 and Pseudo-R² = 1.
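Both fit measures are simple to compute once fitted probabilities are available. The sketch below is one possible implementation under stated assumptions: the outcome and fitted-probability lists are made-up illustrative numbers (not results from the labor force data), the function names are hypothetical, and the no-regressor benchmark ln L0 uses the sample mean of Y as the fitted probability, which is the maximum likelihood estimate when there are no regressors.

```python
import math


def fraction_correctly_predicted(y, p_hat, threshold=0.5):
    """Share of observations where (p_hat >= threshold) agrees with y == 1."""
    hits = sum(1 for yi, pi in zip(y, p_hat) if (pi >= threshold) == (yi == 1))
    return hits / len(y)


def log_likelihood(y, p_hat):
    """Bernoulli log likelihood; every p_hat must lie strictly between 0 and 1."""
    return sum(yi * math.log(pi) + (1 - yi) * math.log(1 - pi)
               for yi, pi in zip(y, p_hat))


def pseudo_r2(y, p_hat):
    """Pseudo-R2 = 1 - ln L / ln L0, with ln L0 from the no-regressor model."""
    p_bar = sum(y) / len(y)  # no-regressor MLE: the sample mean of Y
    ln_l0 = log_likelihood(y, [p_bar] * len(y))
    return 1.0 - log_likelihood(y, p_hat) / ln_l0


# Hypothetical outcomes and fitted probabilities, for illustration only.
y = [1, 0, 1, 1, 0, 0, 1, 0]
p_hat = [0.9, 0.2, 0.7, 0.4, 0.3, 0.6, 0.8, 0.1]

print("fraction correctly predicted:", fraction_correctly_predicted(y, p_hat))
print("pseudo-R2:", round(pseudo_r2(y, p_hat), 4))
```

If the fitted probabilities are all equal to the sample mean, the function returns a Pseudo-R² of zero, which corresponds to the "explains nothing" extreme described above.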