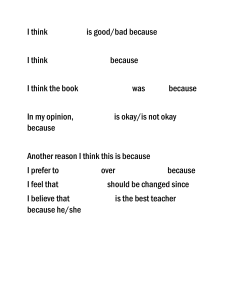

Empiricism and the Scientific Method Transcript

Okay. So to start the course off, it's important that we're all on the same page about what counts as truth in psychology. How do we go about acquiring knowledge in a way that is reliable and accurately reflects the world around us? We'll start off by talking about empiricism, which is the knowledge framework, the philosophy, that guides psychological science, and then the scientific method, which is our means of actually seeking out and acquiring this knowledge.

Okay. So empiricism is the belief that in order to be true and reliable, knowledge must come from systematic observations that are documented or recorded as data. We're trying to turn our own subjective experiences into something more objective: something you can reproduce, clearly document, and show to other people as evidence of your finding. As important as it is to talk about what empiricism is, it's also important to think about what empiricism isn't. So what isn't a valid means of acquiring knowledge in psychology? Throughout your life you might encounter people saying, "I think that...", where they share their opinion or their thoughts, or hypothesize about how the world works. As an empiricist, it's important to counter that by saying, "Okay, prove it. Give me some evidence. What have you documented, beyond your own opinion or your own self-reflection, that proves what you're saying?" Oftentimes we'll hear about esteemed names like Sigmund Freud, who theorized one thing or another. Now, as esteemed a name as Freud is, as empiricists we would have to ask, "Okay, Freud, where's your evidence? What have you documented that proves what you're saying is true?" We can't just rely on one person's thoughts or reflections to tell us anything truthful about the world. The next one: "It's common knowledge."
This is a common thing you'll hear in day-to-day life, and often people will make these kinds of appeals to folk wisdom, saying, "We all know X," or, "We all know Y." But as empiricists, we have to really check our assumptions and say, "Okay, show me the data. Prove to me that this is common knowledge. Prove to me that this common knowledge is actually true and people aren't being commonly misled." And finally, "In my opinion..." Now, all research questions are going to start with our own ideas and our own thoughts about how the world might work. But as empiricists, it's important to remember that we need objectivity. Opinions are subjective, and in order to back them up and see whether they hold any water or actually reflect some truth about the world, we need to strive for objectivity. We need to document something concrete about our world that we can show to other people and say, "This supports my belief." So as a psychologist, it's always important to hold yourself to this gold standard of knowledge. It's not enough just to think or assume that something about the world is true. You actually need to either find someone who has documented it or document it yourself.

So how do we go about actually acquiring this knowledge? We use the scientific method. This is the empirical approach to testing our beliefs, and it involves choosing a question, formulating a hypothesis, testing that hypothesis, and finally drawing a conclusion from your data. Now, I've already introduced some terminology, like, "Okay, what is a hypothesis? How is that different from a research question?" So really quickly, I want to go over some of these key definitions and highlight some of the differences between them. A theory is an overarching system of interrelated ideas used to explain and unify a wide set of observations and to guide future research. So here we're not talking about any one particular finding.
We are integrating a whole bunch of different findings into an overall idea or explanation of how the world works at a very broad, high level. Here's an example: a child's social development is uniquely molded by the interactions between their temperament and their self-regulation skills. This broad theory describes how aspects of a child's temperament and their self-regulation work together to govern how they end up developing in their social world. This is a very broad idea; we're not talking about one specific study. In fact, you could imagine lots of different studies that would feed into this idea or could be derived from it to test it. It condenses a lot of ideas into one higher-order organizing system, which we call a theory.

A research question is, more specifically, the question you are trying to address with a particular study. It can be conceptual and doesn't need to mention specific measures, but it's a lot more specific than a theory. A research question might emerge from a theory or test a theory. To stick with our example of children's social development, a research question in this domain might be: how does shyness relate to children's ability to control their impulses over prolonged periods of time? This research question emerges from one component of our broader theory. We're interested in how shyness, an aspect of kids' temperament, relates to their ability to control their impulses, an aspect of their self-regulation skills. Our theory suggests that these two things should be intimately related. Our research question says, "Okay, let's put that idea into practice. How does this aspect of temperament relate to this aspect of self-regulation?" Now, when we actually go to test this research question, we're going to generate a hypothesis.
A hypothesis is a statement that specifies a relationship between two or more measurable variables, one that can be supported or refuted within the bounds of your study. We have a broader question: how does shyness relate to children's ability to control their impulses over a prolonged period of time? Our hypothesis is our hunch, our idea, guided by past work: "Based on past work, we think these two characteristics of the child will be related in this particular way." An example hypothesis here might be: children scoring higher on a shyness questionnaire will be able to resist eating a marshmallow for longer than less shy children. By testing this very specific hypothesis, we'll be able to answer our research question, and in doing so we'll inform our theory.

So we can see that these three ideas are hierarchically organized. At the broadest level, you have your theory, which governs a wide set of phenomena. From that theory emerges a more specific research question: okay, how would this theory play out in this particular set of circumstances? Based on past work, you generate a hypothesis about how you think the world works. You measure your variables in your study, and your findings either support or fail to support that hypothesis. That then has implications back up the chain. If our hypothesis was not supported, that answers our research question in a particular way, and this is going to have implications for our theory. Now, one unsupported hypothesis isn't going to make a theory crumble, but if enough evidence accumulates over a series of different studies, we may have to update our theory or shift to a new theory that provides an alternative interpretation, at a broad level, of how the world works. The really cool studies, rather than just testing one theory, actually pit two different theories against each other.
Their findings can then put both to the test, supporting one theory while failing to support the other. This is a really good way of scientifically evaluating these different higher-order ideas and finding the best way of explaining what we observe in the world.

Cross-Sectional Designs Transcript

Okay. Now that we have the terminology out of the way, and we've talked about some of the different contexts and methods we can use to study children, let's really talk about how we organize the designs of our studies. How do we choose which groups of children to compare? Are we using the same children or different children? We'll start off today by talking about cross-sectional designs. A cross-sectional design is one in which you compare the performance of different children of different ages. Each participant is usually tested only once, and we use this design to highlight developmental trends in children's performance. You'd have a group of three-year-olds, a group of four-year-olds, and a group of five-year-olds, you'd test them all independently, and you'd ask, "Hmm. How did the three-year-olds perform differently from the four-year-olds? Or the four-year-olds from the five-year-olds?" Et cetera.

Okay. Let's look at one of my favorite examples of a cross-sectional study. This is a study by Shtulman and Carey from 2007. They were really interested in how children think about the possibility of events, from early into later childhood. How do children reason about what can happen versus what they think is impossible, what could never happen? They asked children in different age groups about three different kinds of events. First, they asked them about totally ordinary events: "Could somebody drink orange juice?" That's pretty ordinary. They also asked them about improbable events, like, "Could somebody drink onion juice?"
I mean, I've never had onion juice myself, but I know that onions produce juice, so you could, theoretically, make onion juice. And then they asked them about totally impossible events: "Could somebody drink lightning juice?" No. As adults, we know that you can't turn lightning into juice; this is an example of an impossible event. The researchers were interested in how children would reason about these three kinds of events as they got older. When would they start judging impossible events to be impossible, or improbable events to be improbable but still possible, et cetera?

All right. I'm going to show you a graph of their data; really, really cool stuff. On the y-axis, going up the left-hand side, is the average number of events judged possible. The higher the point for a given group, the more events of that type the group judged to be possible. On the x-axis, along the bottom, we have our four separate age groups. Remember, this is a cross-sectional design, which means we have four distinct groups that were tested: four-year-olds, six-year-olds, eight-year-olds, and adults. Now, let me show you some of their findings. Here's all their data. First, along the top, where we have these circular dots, is how participants responded to ordinary events. From the age of four onwards, everybody understands that ordinary events, like drinking orange juice, are totally possible. If we look down at the bottom, we can see how people responded to impossible events, like drinking lightning juice. Four-year-olds, just like adults, understand that lightning juice just doesn't exist, so no, you can't drink lightning juice. Now, what's really interesting is what happens in the case of the improbable events. What we see here is that early in childhood, around the age of four, children thought that drinking onion juice was impossible. Not just improbable: impossible. You couldn't do it.
In the far top-right corner, we can see that adults realize that, as weird as it is to drink onion juice, there's nothing stopping you from doing it; it's a totally possible thing to do. And in the middle, we can see that this understanding, that improbable or weird events are nonetheless possible, emerges gradually over time. It's not like you either know it or you don't. As children got older, they increasingly realized that improbable events could nonetheless still occur. I really love this study, and I think it perfectly demonstrates the power of cross-sectional designs and what they're really useful for. Here we have a perfect example where, at one point in development, at the age of four, children were thinking about things in a certain way. By getting these little snapshots of how children at different stages, all the way up to adulthood, think about these kinds of problems, we can see how children's thinking changes as they develop. This gives us great snapshots that we can compare across different stages of development.

Cross-sectional designs have their drawbacks too. One of the major drawbacks is that, because each of these four groups featured different children, the design doesn't give us any insight into the stability or patterns of change within individual children. We don't know exactly what individual differences might push kids toward thinking about things one way or the other. Maybe within each age group there's some variability, and some kids are thinking a certain way but other kids aren't; we don't really get to look at any of that, because we're grouping all children of the same age together. What a cross-sectional design does well is allow us to map these changes across broad developmental time. What it doesn't allow us to do is hone in on the individual and ask, "Okay, what is it that's unique about this individual that predicts how they develop?"
Correlational Designs Transcript

Okay, so now that we've talked about experimental designs, we'll talk about another empirical research design, known as the correlational design. In correlational studies, you examine how variables are related to, or associated with, one another. This is really useful when we're looking at variables that can't be directly manipulated by the experimenter: things like age, gender, personality traits, or socioeconomic status. As experimenters, we can't randomly assign someone an age or a socioeconomic status. These are characteristics of the person, and there's nothing we can do as an outside influence to assign someone one of them. So the best we can do to study these factors empirically is to look at their relationships with other factors. Importantly, however, there are some caveats we need to consider in how we interpret correlational studies. One is that we need to be really careful, as some correlations could be totally coincidental. Consider this totally made-up data I'm going to present on the left-hand side of the screen. Let's imagine this first line represents the average number of diagnoses of autism spectrum disorder each year in Canada over a 10-year period. Let's consider the second line to be the average number of vegetables eaten by a family each day over the same 10-year period. Both of these lines increase gradually over this period. If you were to eyeball it, you might say, "Hmm, it seems like these two are associated with one another." But importantly, we have absolutely no theoretical reason to think there would be a relationship between these two variables. Just because we perceive an association between the two is not enough to say that they're necessarily related to one another, right?
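To see how easily two unrelated upward trends can look strongly associated, here is a minimal Python sketch that computes Pearson's correlation coefficient for two invented series shaped like the made-up lines just described. The numbers, the `pearson_r` helper, and the variable names are hypothetical illustrations, not real statistics or data from any study.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient for two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance term: do the two series rise and fall together?
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Spread of each series around its own mean.
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


years = range(10)
# Invented numbers (NOT real data): both series simply drift upward over 10 years.
diagnoses_per_year = [100 + 12 * year for year in years]
vegetables_per_day = [2.0 + 0.15 * year + (0.05 if year % 2 else -0.05) for year in years]

r = pearson_r(diagnoses_per_year, vegetables_per_day)
print(f"r = {r:.2f}")  # prints r = 0.99
```

A value this close to 1 says nothing about whether one variable influences the other; here the two series are correlated only because both happen to trend upward over the same years.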
It could be total coincidence. So when we propose that there is an association between two variables, it's important that we reflect on whether it makes sense within our broader understanding of the world. Is it rooted in previous empirical observations or existing theories? Does it make sense for us to infer an association between these two? Another important consideration is that we cannot determine causality, or the direction of the association, between two correlated variables. Just because two things are associated with one another, we cannot, based solely on correlational studies, say that one thing caused the other, or that one thing is influencing the other and not the other way around. I'll give you an example of that in just a second, but you've probably heard the mantra before: correlation is not causation. This is really something to take to heart. Correlational studies can be really important. They can be really illustrative and can give us a lot of insight into the associations between factors we can't manipulate ourselves, but we need to recognize the limitations of this particular kind of empirical study.

Okay, so let's consider an example. A lot of people are really interested in the relationship between children's screen time, that is, how much time they spend on computers, iPads, cell phones, et cetera, and their behavior, for example, their hyperactivity or impulsivity. Some people might argue that screen time actually promotes hyperactivity or impulsivity. So let's imagine we saw an association between these two variables: kids who spent more time on their phones also tended to be more hyperactive or more impulsive. It could be that screen time is directly promoting, or causing, this hyperactivity.
But, on the other hand, based on the exact same correlational data, it could be that more impulsive, high-energy children naturally gravitate toward screens; there's something about screens they find really compelling. In that case, the relationship between more screen time and higher impulsivity runs in the opposite direction. Or, as a third possibility, there may be some third variable that actually explains the relationship between the two. Maybe a particular diet, for example, one that's higher in sugar, is influencing both of these things: maybe that's what's resulting in children's hyperactivity and also in their increased screen time. It's this third variable that's misleading us into thinking that the two are directly related to one another. Keep in mind, I just made this up for the purpose of this example. There's lots of really cool research on both of these topics; if you find them interesting, I'd encourage you to go take a look. But anyway, the big takeaway here is that correlational research is really important and can help us understand lots of really cool domains of psychology, but it's only one piece of the broader research puzzle, and it has limitations we really need to be aware of. Correlational designs are great when we can't directly manipulate the characteristics of interest, things like age or SES, but we can't determine causality, and we can't strongly infer directionality from correlations either. That's all they are: associations between two variables, and on their own, they can only tell us so much. Okay?

Important Terminology in Empirical Research Transcript

All right, now that we've defined empiricism and the scientific method, we can jump into some other important terminology in empirical research. Let's start off by looking at our measures.
An operational definition specifies exactly what is meant by each variable in the context of a study. It governs how you turn the abstract ideas or concepts you're interested in into something more concrete and measurable. Let's imagine we're interested in studying shyness. Shyness is an aspect of someone's personality; it's not really something you can put your finger on or measure on its own. So one way we might get at shyness is by giving someone a questionnaire and looking at their score on a set of items that assess their experience of shyness in day-to-day life. By operationally defining this abstract aspect of personality as their score on these questions, we now have something concrete that we can measure. Another example might be someone's reading ability. You might give them a comprehension test where they read a passage or a couple of passages and then answer questions about them. You'd say, "Okay, we will define how good someone is at reading based on how well they can answer these comprehension questions." Or maybe you're looking at something like someone's memory. You might show them a long string of numbers for only a very short period and then ask, "Okay, what's the longest string of numbers you could recall after this short presentation?" So the key idea here is that we're taking something abstract, like an aspect of personality or cognition, and boiling it down into something we can reliably and objectively measure. We'll then use this to address our research questions.

Okay. Now that measures are out of the way, let's talk about what we actually mean when we talk about an experiment.
An experiment is a situation in which the investigator holds everything constant except for one aspect, which is strategically varied across conditions. The thing that we actually change, or manipulate, across these conditions is called the independent variable. So everything is held entirely constant in this experiment, with the exception of the one thing we change. For example, you might have two groups, where your independent variable is the kind of music being played to the people in each group. Maybe one group listens to classical music and the other listens to rock. The key here is that literally everything else about the environment of these two groups is identical; the only thing we're changing is the music being played to them. In this way, any differences we observe between these two groups, we can totally chalk up to the thing we manipulated. The dependent variable, on the other hand, is whatever you're measuring as your outcome. This might be one of the measures we discussed on the previous slide. For example, in this study we might be interested in how the music being played affects people's scores on a math test. So we have people in these two groups, one listening to classical, the other listening to rock, completing a math test. Everything else is exactly the same. That way, whatever differences we see in the two groups' math test scores, we can chalk up to the kind of music being played for them. Then you might be thinking, wait a minute, there's one other thing that could be influencing the results here: some people are better at math than others. Maybe there are just more people in the classical group who are good at math than in the rock group, and that's going to throw off the results of our study.
Luckily, there's a solution to this problem, and it's called random assignment. The central idea behind random assignment is that when you sort your full sample into the two groups, you need to do so entirely at random. Half of the people will go into our classical music group, and the other half will go into our rock music group. This is done entirely at random, using something like a random number generator. There's absolutely no decision-making that puts people into either group based on any particular characteristic. The key idea here is that because we are randomly sorting people into these two groups, all traits, for example math skill in this case, should be roughly evenly distributed across the two conditions. So if it turned out that we had three people in our classical condition who are excellent at math, chances are there would be about as many people who are really good at math in the rock music condition. In this way, random assignment controls for individual variability in math skill.

Okay. So random assignment is central to the underlying logic of experimental design, but it doesn't always work out that neatly in the real world. So it's important to consider what can go wrong, and what we need to do to ensure that random assignment does its job. One thing is that we need to make sure we have enough people going into both of our groups. With an insufficient sample size, it's more likely that you'll end up with a couple of math whizzes in one group and not the other, which is totally going to throw off your results. You need to make sure there are enough people being sorted into these two groups to ensure that everything averages out. The other thing is that it's a good idea to measure any variable you're worried could throw off your results, what we might call an extraneous variable.
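The random sorting described above, along with a check on an extraneous variable, can be sketched in Python as follows. This is a minimal illustration under assumed details: the `randomly_assign`, `math_gap`, and `make_sample` helpers, the invented 0 to 100 math scores, and the sample sizes are all hypothetical. Shuffling the sample and then dealing participants into conditions in turn means no participant characteristic plays any role in group placement.

```python
import random
from statistics import mean


def randomly_assign(participants, conditions=("classical", "rock"), seed=None):
    """Shuffle the sample, then deal participants into the conditions in turn.

    Group membership depends only on the shuffle, never on any trait.
    """
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    groups = {condition: [] for condition in conditions}
    for position, person in enumerate(shuffled):
        groups[conditions[position % len(conditions)]].append(person)
    return groups


def math_gap(groups):
    """Absolute difference in mean math score between exactly two conditions."""
    first, second = (mean(p["math_score"] for p in group) for group in groups.values())
    return abs(first - second)


def make_sample(n, rng):
    """Hypothetical participants with an invented math score (the extraneous variable)."""
    return [{"id": i, "math_score": rng.uniform(0, 100)} for i in range(n)]


sample_rng = random.Random(1)
for n in (6, 600):
    groups = randomly_assign(make_sample(n, sample_rng), seed=42)
    sizes = {name: len(members) for name, members in groups.items()}
    print(n, sizes, f"math gap = {math_gap(groups):.1f} points")
```

With only six people, a couple of high scorers landing in one group can open up a sizeable gap; with hundreds of people, the gap usually shrinks toward zero, which is the "averaging out" described above. Because the math scores were measured, the gap can actually be checked rather than assumed away.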
So if we were really concerned that people's intrinsic math skill was going to throw off the results of the math quiz we gave them under these specific conditions, we might want some other measurement of their intrinsic math skill, like their grades in a math class. That way we can double-check: "Hmm, did we get as many math whizzes in this group as in the other one? Is the average intrinsic math skill of our two groups equal?" If we didn't have this measurement of the extraneous variable, we wouldn't even be able to identify whether there was a problem. So if you're designing an experiment and you identify some intrinsic characteristic of your participants that could throw off your results, it's a good idea to measure it, to make sure that your random assignment did adequately balance it across the two groups. Okay.

Experimental Designs Transcript

In this video, we're going to do a deeper dive into experimental designs. We're also going to revisit an example from the correlational designs video and interpret it again, this time through an experimental lens. The important distinction between experimental and correlational designs is that, unlike correlational studies, experimental studies allow us to infer causation. The reason we can do that is that everything is kept constant except for the variable of interest. The variable that we manipulate is known as the independent variable. This is the one factor that we change between our different treatment groups, and therefore any differences we see between those groups can only be chalked up to that experimental manipulation. We can isolate the causal factor as the thing we changed: the independent variable. The dependent variable, by contrast, is what we are measuring. It's the change we're looking to document as a function of our manipulation of the independent variable.
Again, experimental studies rely heavily on random assignment: participants are randomly assigned to either condition as a means of ruling out the possibility that individual characteristics influence our results. In our previous video on correlational designs, we talked about a potential association between screen time and impulsivity in children. Remember, this was a made-up hypothetical situation, but let's imagine we wanted to explore that exact same question using an experimental design rather than a correlational design. Here's an example of how we'd go about doing that. Let's imagine we split our sample of children into two groups. Each group is going to be given a certain amount of screen time, that is, time playing on an iPad. One group is going to be given an iPad to play with for five minutes; the other group is going to be given an iPad to play with for 30 minutes. That's our independent variable. Now, for our dependent variable, we're going to give kids a choice. After they're done playing with the iPad, they're going to be offered two options: they can either have one candy cane now, or, if they're willing to sit and wait a little bit longer, they'll get two candy canes later on. So our dependent variable is which of these two options children select, and children in both treatment groups have the same choice to make. Now, if screen time causes an increase in impulsivity, we would predict that children in the first group, who had only five minutes of iPad time, would say, "No, I can wait a little bit," and pick the bigger reward later, while kids in the second group, who had 30 minutes of iPad time, would say, "No, no, no. I'm more impulsive.
I want my reward now," and they would pick the single candy cane right away. That result would be in line with our prediction that screen time causes an increase in impulsivity. From this, you should be able to see the distinction between experimental and correlational research. While the correlational study only allowed us to look at the association between these two variables, the experimental study would allow us to hone in on the causal relationship between the two: whether one of those factors was causing the other. That's the power of experimental research.

Microgenetic Designs Transcript

Microgenetic design sounds like it's straight out of a biology textbook, but it's actually another design used in some niche psychology studies. Microgenetic designs are almost a counterpoint to longitudinal designs: whereas longitudinal designs look across very long periods of time, microgenetic designs try to pull apart single processes and understand how people think in very particular circumstances. Microgenetic designs track small-scale developments in children's cognitive and behavioral processes. Usually this means making several observations of the same child over a short period of time, whether that's a couple of different sessions within the same day or sessions spread over a couple of weeks. What you're really trying to do in these studies is observe how a child's cognition or behavior changes with prolonged experience in a particular context. Here's an example. Imagine you're trying to observe how a child learns to solve a complex puzzle over the course of several sessions. You're really trying to identify what the child was doing right before their eureka moment, when they suddenly figured out how to solve it.
So what microgenetic designs offer that other designs in developmental psychology don't is a really detailed look at how specific processes develop, at a much finer time scale than other designs allow. If you were really interested in what specific patterns of behavior led a child to a new kind of insight or a cognitive breakthrough, that's when a microgenetic design would be really useful. So it serves a very specific function. It would be really useful for someone interested in learning processes, or perhaps for certain applications in clinical psychology. That being said, it's not that common in most other areas of developmental psychology, and it's not something you'll encounter very often in modern developmental research. For most of the research questions we're going to be asking, you'll encounter cross-sectional research and, occasionally, short-term or long-term longitudinal research. Those are the real bread and butter of modern developmental psychology. Microgenetic designs are definitely super cool, but not something you're going to encounter all the time.

Interviews and Questionnaires Transcript

There are lots of different contexts and methods through which we can acquire data from children in developmental studies. In this first video, we'll start by talking about interviews and questionnaires, beginning with structured interviews. A structured interview occurs when the experimenter asks a participant a series of predetermined questions and records their responses. For example, an experimenter might ask, "If you had to choose between these two options, which would you pick?" Then, after the child provided their dichotomous choice of which option they would prefer, the experimenter might follow up, in a fully predetermined fashion, with the question, "Why?"
The responses the child gives in this structured interview would be recorded at the time of data collection and then later analyzed and coded. By coding, we mean that their answers are interpreted and categorized by a trained coder. Coders will often work in teams to ensure high inter-rater reliability, which, you'll recall, means that different people interpreting the same answers from the child would categorize them in very similar ways. Oftentimes, coders will also convert different aspects of children's responses or behavior into scales, following predetermined coding criteria. For example, the criteria might specify how to rate a child's response anywhere from very negative to very positive, and in an effort to make things more objective, coders would turn the response from a transcript or sentence into a numerical code: this answer was very positive, very negative, or somewhere in between. Let's look at a couple of examples of studies that use structured interviews of varying complexity. Here's a study by Golding Accounts and Friedman from 2019. In this study, they were interested in whether children believe their preferences as adults will be different from their preferences as children. In other words, do children think that the things they like now, they're going to like forever? In this study, they asked very straightforward questions. They would give children a choice between two options, here a sippy cup and a coffee cup, and ask, "Which one will you like when you grow up?" It's a very straightforward question, with the stimuli accompanying it, to which children could give a simple answer by pointing or by naming the object. For example, a child might say, "I think when I grow up, I would like a sippy cup." So this is very straightforward.
Coding children's responses here is very easy because each one is just an answer to a dichotomous, or two-option, choice. All right. Now let's look at a slightly more involved example. This is a study by Slaughter and Griffiths from 2007 in which they used a structured interview to examine children's thinking about death. Here's an example, in this table, of some of the questions and scoring criteria they used in their study. The far-left column lists the subcomponents they were interested in, the middle column shows some of the interview questions they used, and the rightmost column shows their scoring criteria. If we look at their interview questions, they asked things like, "Tell me some things that die." If children did not name people as something that dies, there was a planned follow-up where the experimenter asked, "Do people die?" The important thing here is that this follow-up was a planned contingency: if children didn't mention people in their answer, the experimenter was scripted to follow up. It wasn't a random diversion the experimenter chose on the spot. In a structured interview, everything needs to be planned out, and you need to follow the exact same structure with all participants in your study. You can't just shoot from the hip. When it came to actually scoring children's responses, numerical values were assigned. For example, for that first question, "Tell me some things that die," if the child did not mention that people die and, when asked if people die, said, "No, people don't die," they were given a score of zero. By contrast, if children volunteered that people die, they were given a score of two. In this way, tallying their scores across all the items gives you a total score reflecting how much the child knows about the nature of death.
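In studies like this, trained coders turn children's answers into item scores, and the inter-rater reliability mentioned earlier is often checked by having two coders score the same responses independently. Below is a minimal sketch of one common agreement statistic, Cohen's kappa. It is a swapped-in illustration, not the procedure Slaughter and Griffiths report, and the two coders' item scores (0, 1, or 2) are invented.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Agreement between two coders, corrected for agreement expected by chance."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must score the same, non-empty set of items")
    n = len(coder_a)
    # Observed agreement: share of items where both coders gave the same code.
    p_observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: product of each coder's category proportions, summed.
    counts_a, counts_b = Counter(coder_a), Counter(coder_b)
    p_chance = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if p_chance == 1:
        # Both coders used one identical category for every item; kappa is
        # undefined here, so this sketch simply reports perfect agreement.
        return 1.0
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical item scores (0, 1, or 2) from two coders for the same child.
coder_1 = [0, 2, 1, 2, 2, 0, 1, 2, 1, 2]
coder_2 = [0, 2, 1, 2, 1, 0, 1, 2, 2, 2]

print(round(cohens_kappa(coder_1, coder_2), 3))  # 0.677
```

Raw agreement here is 8 out of 10 items, but kappa comes out lower because some of that agreement would be expected by chance alone. For ordered codes like 0, 1, and 2, a weighted kappa is sometimes preferred.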
So this is an example of a structured interview that can be used to build a really interesting measure of children's comprehension of death. An alternative to a structured interview that's similar in content, but a little different in how it's administered, is a questionnaire. Questionnaires give participants pen and paper and let them read through items and respond using multiple-choice options, rating scales, and so on. This is great if your participants can read, because it's a fast and efficient alternative to lengthy, drawn-out interviews. For example, if you wanted to collect data from an entire school or an entire grade of children, handing out questionnaires to everybody would be much more efficient than interviewing them all one-on-one. Here's an example of a questionnaire you might use in child research: the Screen for Child Anxiety Related Disorders, a.k.a. the SCARED. It was developed by Birmaher and colleagues in 1999. You can see it on the left-hand side of the screen. It's a series of statements; this is the first page, and there are many more items, such as "When I feel frightened, it is hard for me to breathe," "I am nervous," or "People tell me that I look nervous." For each item, participants circle the answer that best applies to them. They could say "not true or hardly ever true," which gives a score of zero; "somewhat true or sometimes true," which gives a score of one; or "very true or very often true," which gives a score of two. When the participant has completed the questionnaire and handed it in, a researcher can very quickly compute a total anxiety score, with a higher score meaning more anxiety-like symptoms. So it's very fast and very efficient.
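The scoring rule just described (0, 1, or 2 points per item, summed into a total) is simple enough to sketch in a few lines. This sketch assumes only the three example items quoted above and invented answers from one hypothetical child; the real SCARED has many more items, and nothing here reflects its official scoring procedures, subscales, or cutoffs.

```python
# Point values for each response option, as described for the SCARED.
RESPONSE_POINTS = {
    "not true or hardly ever true": 0,
    "somewhat true or sometimes true": 1,
    "very true or very often true": 2,
}


def total_score(responses):
    """Sum the points for every circled answer; higher totals mean more anxiety-like symptoms."""
    return sum(RESPONSE_POINTS[answer] for answer in responses.values())


# Invented answers from one hypothetical child to three example items.
child_answers = {
    "When I feel frightened, it is hard for me to breathe.": "somewhat true or sometimes true",
    "I am nervous.": "very true or very often true",
    "People tell me that I look nervous.": "not true or hardly ever true",
}

print(total_score(child_answers))  # 3
```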
But again, this all assumes that the children in your study are able to read through the items by themselves and answer honestly, without confusion about how to complete the survey accurately. Another type of interview we'll talk about is called the clinical interview. In a clinical interview, questions can branch off from the pre-planned questions to follow up on the answers the interviewee provides. Imagine the child says something that the interviewer, based on their training, wants more insight into or a fuller explanation of. The interviewer can follow their intuition and ask a different follow-up question. This is very unlike the structured interview, in which the structure is set from the beginning. Clinical interviews are really useful for clinicians or child psychologists obtaining in-depth information about a particular child. If they were trying to diagnose a clinical disorder or a learning disability, this is the kind of interview they would likely use. However, for larger-scale psychological studies, where we're not trying to learn about an individual child but rather about children in general, generalizing to a broader population, this type of interview is not really what you would use. The reason is that, to compare children and draw conclusions beyond any one child, all the participants in your study need to be presented with the exact same questions and prompts. This standardization supports the study's internal validity, since differences between children's answers can't simply reflect differences in what they were asked. If you don't present the exact same questions and prompts, as you would in a structured interview, you wouldn't be able to generalize your findings, because every child's interview would be different.
So now you should have a clear understanding of the distinctions between structured interviews, questionnaires, and clinical interviews, and of the contexts in which you might use each of them. Longitudinal Designs Transcript So cross-sectional designs are great because they give us snapshot glimpses of different stages of development. We can see developmental trends over time, or we can capture a eureka moment, where children at a certain age suddenly grasp a new concept that they wouldn't have gotten earlier. Right? So we can really map out these protracted changes across development. But one thing cross-sectional designs don't do well is give us insight into the individual, and this is where longitudinal designs really shine. Longitudinal designs look at changes within the same children over significant periods of development. This could be months, years, decades, et cetera. Right? So one question you could ask with a longitudinal design might be: within a single child, how does self-esteem change across adolescence? Right? In this graph I've made over here (again, this is all invented data), we have self-esteem on the Y axis, so higher points on the graph mean greater self-esteem. On the X axis along the bottom, we have age in years, from age 11 all the way up to age 18. As we can see with this first line, starting at age 11, self-esteem is relatively high on the scale. It increases gradually until around age 15, at which point we see a big plummet in self-esteem, continuing all the way down to around age 17, and then stabilizing somewhat or starting to rebound. Right? So this could be the pattern that emerged in one particular child. Now, if we looked at a different child, we might see a different trajectory altogether, right?
This child started off slightly lower at age 11, but their self-esteem stayed at relatively similar levels across adolescence, in fact increasing ever so slightly. So whereas the first child plummeted at age 15, this child did not. Right? If we were looking at this from a cross-sectional perspective, both of these children would have been grouped together at each of these developmental stages, right? First of all, we would have looked at each of them only once, but they also would have been averaged out. Right? So all of these differences in their unique developmental trajectories would have been lost in a cross-sectional design, but not in a longitudinal design. And when we see these different trajectories, we can ask follow-up questions like, "Okay, what individual factors are actually driving these different trajectories?" It's really only by looking at changes within the individual that we can answer questions like this. You wouldn't be able to answer questions about these individual factors using a cross-sectional design. That being said, longitudinal designs come with some difficulties. As rewarding as they are, they're very difficult to pull off, for a couple of reasons. One is that they're a really big investment: they cost a lot of time and a lot of money, right? You need to be able to facilitate people coming back to participate over long periods of time. But of course, people move, and people fall out of the study. So you need to find ways of incentivizing them to stay in the study and to make it easy for them to come in, even if that means buying them a bus ticket or a flight. Basically, you need a lot of money and a lot of planning to pull off a longitudinal study. I just alluded to the second reason: many participants are likely to drop out, right? The example I gave here spanned ages 11 to 18.
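To make the averaging problem concrete, here is a sketch with invented numbers loosely following the two trajectories described above, on an assumed 0 to 100 scale. As a simplification, the "group" view just averages these same two children at each age; a real cross-sectional study would test different children once each. The point is that the averaged curve shows a moderate dip that neither child actually experienced.

```python
from statistics import mean

ages = list(range(11, 19))  # ages 11 through 18

# Invented self-esteem scores (hypothetical 0-100 scale), one per age.
trajectories = {
    "child_1": [70, 75, 80, 85, 90, 50, 30, 35],  # rises, plummets after 15, rebounds
    "child_2": [60, 61, 62, 63, 64, 65, 66, 67],  # starts lower, creeps upward
}

# Group-average view: one mean score per age, individual paths discarded.
group_mean = [mean(scores_at_age) for scores_at_age in zip(*trajectories.values())]


def change(scores, from_age, to_age):
    """Change in score between two ages within one series."""
    return scores[ages.index(to_age)] - scores[ages.index(from_age)]


for name, scores in trajectories.items():
    print(name, "change from 15 to 17:", change(scores, 15, 17))
print("group mean change from 15 to 17:", change(group_mean, 15, 17))
```

The averaged change from 15 to 17 is -29, which matches neither child: one dropped by 60 points and the other rose by 2. Only the within-child series reveal the two distinct trajectories.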
A lot of your participants are likely to drop out over a study that long, right? Maybe they move away, maybe they don't want to participate anymore, maybe they had a bad experience, or maybe they've become shyer. There are any number of reasons why someone might not want to keep participating over time. For that reason, you tend to lose people over time, which makes longitudinal studies a high-risk, high-reward endeavor. So oftentimes, cross-sectional designs are just a lot more practical, because you can test a bunch of people at single time points. Participants only have to come in and be studied once, which makes cross-sectional designs a lot more practical for answering most, but not all, developmental research questions. When people think about developmental psychology, I think they usually think about these longitudinal studies. But in reality, these are quite rare. Because of the difficulties we just discussed, you will not see nearly as many longitudinal studies as cross-sectional studies. What really makes longitudinal studies special is their ability to look within the individual and to see how individual differences influence a person's developmental trajectory across time. That's the key question a longitudinal design can answer that other designs just can't. Reliability Transcript For a study to provide worthwhile and meaningful results, we need to have good measures, right? So in this video we're going to talk about the reliability of the measures we use in psych studies. When we talk about reliability, we're really talking about the consistency of a measure. We're asking: does our measure produce similar results when we expect that it should? If not, we've got a problem. The first form of reliability we'll talk about is called test-retest reliability. If a trait being measured is supposed to be relatively stable across time, our measurements of that trait should be relatively stable across time too. The thing we're trying to measure isn't supposed to be changing from day to day.
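One common way to quantify test-retest reliability is to give the same measure twice and correlate the two sets of scores; a stable measure of a stable trait should produce a high correlation. The sketch below computes Pearson's r by hand (intraclass correlations are another common choice) on invented scores for six hypothetical children, once with consistent retest scores and once with retest scores whose rank order is scrambled.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of scores."""
    mx, my = mean(x), mean(y)
    covariation = sum((a - mx) * (b - my) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mx) ** 2 for a in x))
    spread_y = sqrt(sum((b - my) ** 2 for b in y))
    return covariation / (spread_x * spread_y)


# Invented abstract-reasoning scores for six hypothetical children.
first_session = [12, 18, 25, 9, 30, 21]
retest_consistent = [13, 17, 24, 10, 29, 22]  # same children, a few days later
retest_erratic = [25, 10, 14, 22, 16, 28]     # rank order scrambled on retest

print(round(pearson_r(first_session, retest_consistent), 2))
print(round(pearson_r(first_session, retest_erratic), 2))
```

The consistent retest gives a correlation close to 1, while the scrambled one comes out negative, which would flag a test-retest reliability problem for a trait that is supposed to be stable.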
Such a trait might be thought of as a permanent aspect of someone's cognition or personality, or as something that changes over a much slower and longer time scale. If we're getting day-to-day changes, we've got a problem, right? If the thing you're measuring is supposed to be relatively stable across time but your measurements aren't, you have a problem with test-retest reliability. Here's an example. Imagine we're developing a new test of children's abstract reasoning. Okay? On the first day, Timmy completes the task and is told that he's highly gifted. Like, wow. He scored really, really well. That's awesome. Timmy gets the exact same test four days later, only this time his results indicate that he's only about average. Given that abstract reasoning is conceived of as a relatively stable aspect of cognition, his couldn't have changed that much in four days, right? So we know that something's up with our measure. If our measure is not getting consistent results for this relatively stable trait, we have a problem. Another kind of reliability is known as inter-rater reliability. In this case, two different experimenters are using the exact same measure to score the same participant, so you'd expect them to get similar results. The question is: if two different experimenters score a measure, do they get similar results? Let's work through a hypothetical example. Let's imagine the University of Waterloo developed a new scale for evaluating how cute a baby is: the Waterloo Baby Cuteness Scale. We have two different experimenters using this scale to evaluate the cuteness of a baby. Experimenter one says this is the cutest baby they've ever seen: 15 out of 15, very cute. Experimenter two, on the other hand, using the exact same scale, concludes that, eh, it's a pretty standard baby, giving it only an 8 out of 15.
So these are two people who are supposed to be using the same objective measure of cuteness, and they get dramatically different results. This is an example of poor inter-rater reliability. If our goal is to have an objective measure of cuteness, our measure is currently not reliable. Okay, so let's try to improve on this. Imagine we have a different inventory now: the Wilson Cuteness Inventory. This one is broken down into subscales with specific criteria, such as cheek size. Each rater would say, okay, I've been trained on how to evaluate cheek size, and this is a 4 out of 5. Head roundness: 4 out of 5. Nose stubbiness: very stubby, 5 out of 5. Total score: 13 out of 15. Following these very strict criteria, both of our evaluators landed on the exact same score. This indicates that the new scale, the WCI, is much more reliable than our previous scale, because two independent coders are able to land on the exact same result for the same baby. So that's inter-rater reliability. One important thing to remember is that some things we measure do vary naturally, right? Mood, for example. Your mood on Monday could be totally different from your mood on Tuesday, which could be totally different from your mood on Wednesday, et cetera. If a mood scale varied dramatically across these three days, that's fine, because we also expect the underlying construct we're measuring, mood, to vary. The important thing about reliability in this case is that your measurements should only vary as much as the thing you're measuring varies. That's the key point to take away here. Observational Research Transcript Okay. Now that we've talked a little bit about interviews and questionnaires, let's take a look at observational research. There are two main kinds of observational studies that we're going to look at.
The first is naturalistic observation. This occurs when you examine children in their usual environments: you might examine how children and their parents interact at home, or how children interact at school, in the classroom, or on the playground. In this context, the researcher is not in control of what the child does at all. They've chosen the context in which they're going to observe the child's behavior, and they just code whatever behaviors naturally occur. For example, they might code or count how often a child engages with a peer, or how much time they spend in a particular type of play; in the classroom, for instance, how much of their time do they spend playing with blocks? They might code how often, or for how long, the child smiles, laughs, or expresses happiness, as opposed to neutral, sad, or angry emotion. Think back for a second to our discussion about validity. When it comes to external validity, you can't really get better than naturalistic observation. People out in the real world are interacting in very natural environments, so this is about as valid as it gets when it comes to extending the findings from our research to real-world contexts. However, what you sacrifice is the ability to control what's going on. If we were studying children on a playground, we would have no means of controlling how many children are there, what they're doing on the playground, who may enter or leave the scene, or what people might say to each other. We have zero control at all. It's always a trade-off like that. Now, you can recoup some of that control in what is known as a structured observation. A structured observation involves placing each child in the exact same controlled situation while their behavior is recorded. Each child gets the exact same environment, the exact same instructions, and the exact same procedure.
Imagine you took two children, brought them into an observation room, and said, "Okay, I need the two of you to complete this Lego model. It's a really complex Lego model. I'm going to give you five minutes, have at it." Then you left the room, with a camera recording, and observed their behavior as they interacted with each other to try to complete the Lego model. By tightly controlling all these environmental factors (the exact same room, the exact same number of children, the exact same instructions), researchers can then identify what specific factors are influencing children's behavior. For example, you could vary task characteristics, such as the different roles in the game. You could bring two children in to build this Lego model, with one of them as the planner and the other as the builder, and observe how these roles influence the communication each child engages in. You could vary environmental factors. Maybe in one study you look at two children per room, whereas in a different study you look at four children per room, and you see how the dynamic, or the children's observed behavior, changes as a function of how many kids are in the room. Or you could look at individual differences. You could purposefully seek out children with certain personality characteristics, such as high or low shyness, group them according to that characteristic, and observe how their communication differs as a function of these individual differences. The key here is that it's still an observation, so you're still leaving children to their own devices and observing their behavior, but rather than being out in the wild, you're in a much more predictable environment, and you can control for external influences to an extent. What the children do in the room is still up to them, but you've provided them a closed system in which to interact.
Validity Transcript Okay. So we've talked about reliability, which is how consistent a measure is. Now we're going to look at validity, its counterpart. Validity focuses on the accuracy of a measure. It asks: are we actually measuring what we meant to measure? A measure is only useful if it tells us what we need it to tell us. So questioning whether our measure is accurate, or whether it's actually capturing the conceptual content we want to measure, is really, really important. There are two aspects of validity that we're going to talk about. In any study, you're really trying to balance these two aspects, because sometimes what increases one might decrease the other. So it's always a balancing act. First, there's internal validity. Internal validity asks: are we sure that the effects in our study are caused by the manipulations we made? Or could other factors be influencing the outcome? By contrast, external validity asks: are we sure that the findings of our study will generalize to other groups, for example, children from another culture, or to real-world situations outside of the lab, such as real-world social interactions? The thing about designing a study with internal and external validity in mind is that you often have to make trade-offs when deciding how to design your study. Decisions that optimize your internal validity, giving you lots of control and the power to clearly see what's influencing what, might at the same time reduce how much your experimental situation resembles the real world. By contrast, when you design a study that closely resembles the real world, or even takes place in a real-world context, you lose the power to tightly manipulate and control every single variable in the environment.
So we're going to look at an example of two different studies and how they exemplify this trade-off between internal and external validity. Let's imagine we were interested in the research question: are shy children more sociable in the presence or absence of an authority figure? Okay? Here's one approach to designing a study to investigate this question. Let's imagine we brought kids into the lab and gave them a task in which they were supposed to interact with another child over the computer, right? Secretly, this child they're interacting with is entirely pre-programmed by the computer, to make sure that every kid who participates in the study has the exact same interaction, right? In one condition, you leave the kid to have this interaction with this unknown peer. In the second condition, it's the exact same setup, but this time a teacher or other authority figure is monitoring the situation on the computer. This is again going to be very tightly controlled, for example, a pop-up window that says, "The authority figure is watching what you're doing right now." Right? In this case, you'd be looking at whether the child's social behavior, the kind of messages they type to the peer they're interacting with, is more social in condition one, when there is no authority figure, or in condition two, when there is an authority figure, and whether that varies as a function of the kid's shyness. The positive aspect of this study is that it has really high internal validity. We've got a really tightly controlled environment here, right? Every kid is in the exact same situation. Their peer is going to have the exact same communications. In the authority figure condition, that authority figure is going to have the exact same communication. So the only difference between these two conditions is the presence of the authority figure. Everything else is very, very highly controlled.
The weird thing about this study, though, is that if what we're really trying to learn about is how shy kids socialize with other kids, this doesn't look a whole lot like a normal socialization context, right? It's very contrived, and it doesn't really reflect the kind of socializing they might do on a day-to-day basis, right? So while we have a tightly controlled situation, what we don't have is a situation that necessarily extends to everyday circumstances. Okay. Let's take a slightly different approach. Now let's consider actually going out into the world and observing kids' behavior in a playground or schoolyard context. We go out and start observing children as they interact with each other in the schoolyard, and we make note of two different circumstances: one, how children behave when they're all alone, just the kids; versus two, how the kids behave when their teacher is nearby. Okay? So we're coding their behavior and noting whether their teacher is there or not. The pro of this design is that we have much higher external validity, right? We are not controlling anything at all. We have not made up some weird lab context. This is the real-world context that we're trying to learn about: we're observing kids in their real-world environment. But what we're sacrificing is control over any other factors, right? We don't know what games they're playing. We don't know what external things might be going on, or what they might have been doing immediately before or after they were observed. We don't know what kinds of interruptions may occur. We don't know how often their teacher is going to be around, or how their teacher is going to interact with them.
So while we have the high external validity of observing them in this very real-world, natural context, what we don't have is high internal validity, because we have very little control over what's actually going on in this environment. So is one of these studies better than the other? Not really. They're both really important, actually, and they complement each other. If you had a theory about how shy kids interact with their peers in the presence of an authority figure, you would want both of these studies working together to inform your theory, right? The point is that when you make design choices to maximize one kind of validity, you might actually be making sacrifices in the other. That's the real takeaway here. So hopefully that's nice and clear.